Guide to install and configure the RDA Fabric platform in an on-premise environment.

1. RDAF platform and its components

Robotic Data Automation Fabric (RDAF) is designed to manage data in multi-cloud and multi-site environments at scale. It is built on a microservices-based, distributed architecture that can be deployed either on a Kubernetes cluster or in a native docker container environment managed through the RDA Fabric (RDAF) deployment CLI.

The RDAF deployment CLI is built on top of the docker-compose container management utility. It automates the lifecycle management of RDAF platform components, including install, upgrade, patching, backup, recovery and other management and maintenance operations.

The RDAF platform consists of the following set of services. They can be deployed on a single virtual machine or bare-metal server, or spread across multiple virtual machines or bare-metal servers.

- RDA core platform services

  - registry
  - api-server
  - identity
  - collector
  - scheduler
  - portal-ui
  - portal-backend

- RDA infrastructure services

  - NATs
  - MariaDB
  - Minio
  - Opensearch
  - Kafka
  - HAproxy
  - GraphDB

Note

The CloudFabrix RDAF platform integrates with the above open-source services and uses them as back-end components. However, these open-source service images are not bundled with the RDAF platform by default. Customers can download CloudFabrix-validated versions from a publicly available docker repository (e.g. quay.io or Docker Hub) and deploy them under a GPL / AGPL or commercial license, as per their support requirements. The RDAF deployment tool provides a hook to help the Customer download these open-source software images (supported versions) from the Customer's chosen repository.

- RDA application services

  - cfxOIA (Operations Intelligence & Analytics)
  - cfxAIA (Asset Intelligence & Analytics)

- RDA worker service

  - RDA event gateway
  - RDA edgecollector
  - RDA studio

2. Docker registry access for RDAF platform services deployment

CloudFabrix provides a secure docker image registry hosted on AWS cloud. It contains all of the docker images required to deploy the RDA Fabric platform, infrastructure and application services.

To deploy RDA Fabric services, the environment needs access over the internet to the CloudFabrix docker registry hosted on AWS cloud. The docker registry URL and port are listed below.

Outbound internet access:

- URL: https://docker1.cloudfabrix.io

- Port: 443
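One quick way to confirm the outbound access listed above is to open a TCP connection to the registry from each RDA Fabric VM. The Python sketch below assumes Python 3 is available on the VM, which is listed as a prerequisite later in this guide. `registry_reachable` is a hypothetical helper and is not part of the RDAF CLI.

The check only tests raw TCP reachability. It does not validate TLS or registry credentials, and it does not go through an HTTP proxy. On VMs that reach the internet only via a proxy, a False result is therefore expected.

```python
import socket


def registry_reachable(host: str, port: int = 443, timeout: float = 5.0) -> bool:
    """Return True if a direct TCP connection to host:port can be opened.

    Hypothetical helper: raw TCP check only (no TLS, no docker login,
    no HTTP proxy).
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures (socket.gaierror), refused connections and timeouts.
        return False
```

For example, `registry_reachable("docker1.cloudfabrix.io", 443)` should return True on a VM with direct outbound access.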

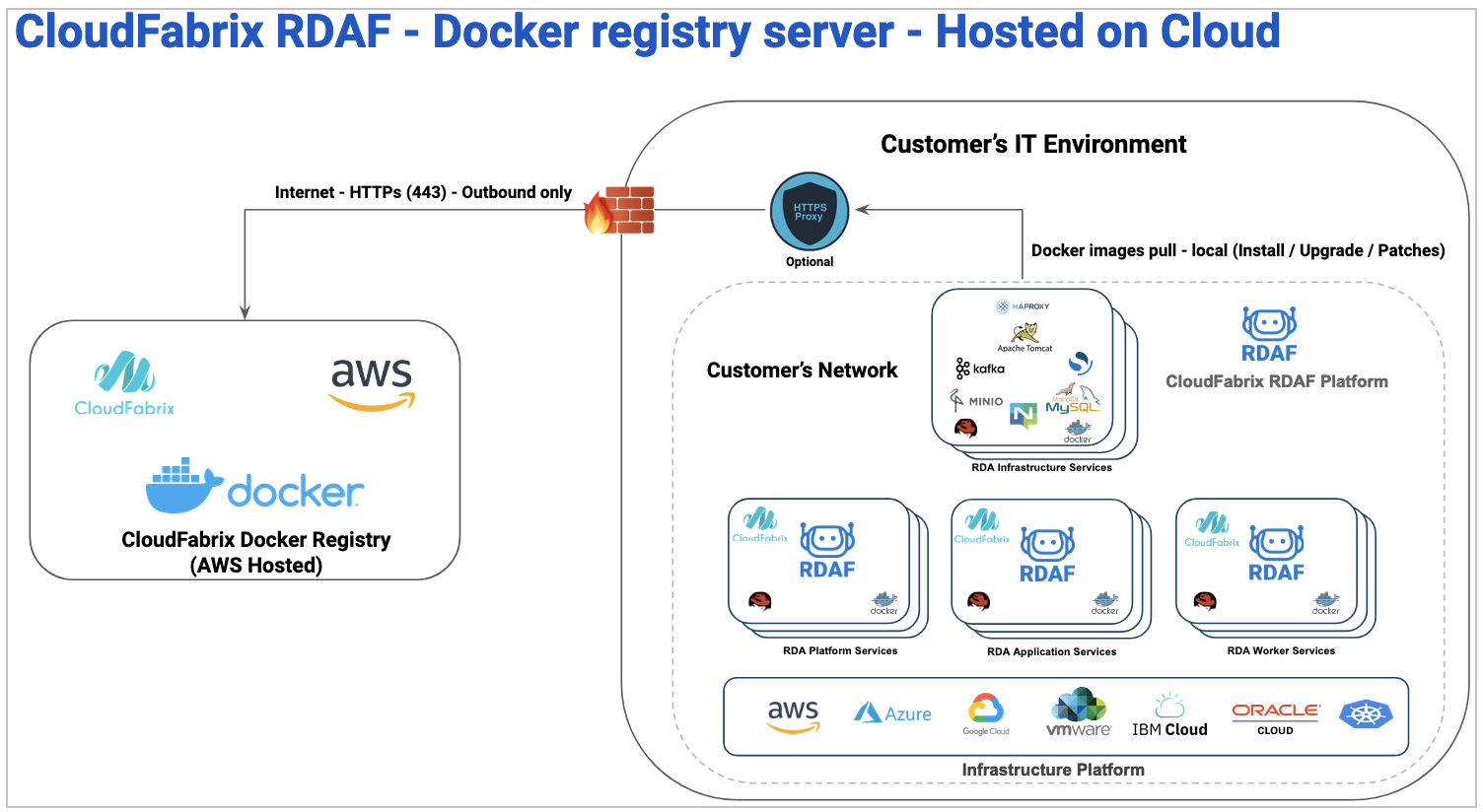

The picture below illustrates the network access flow from the on-premise environment to the CloudFabrix docker registry hosted on AWS cloud.

Docker registry hosted on AWS cloud


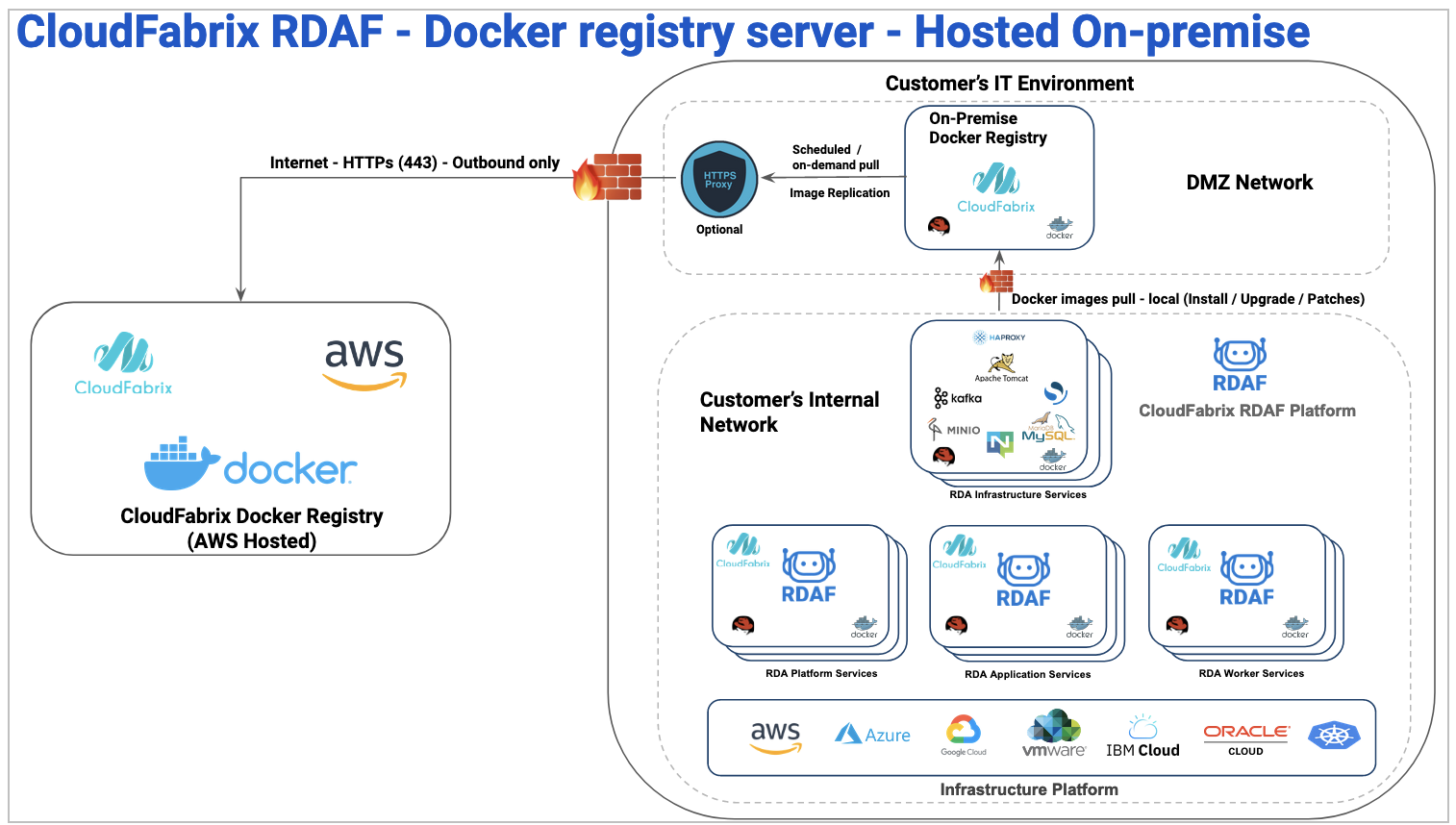

Additionally, CloudFabrix supports hosting an on-premise docker registry in a restricted environment where RDA Fabric VMs do not have direct internet access, with or without an HTTP proxy.

Once the on-premise docker registry service is installed within the Customer's DMZ environment, it communicates with the docker registry service hosted on AWS cloud. It replicates the selected images required for RDA Fabric deployment, both for a new installation and for ongoing updates and patches.

RDA Fabric VMs will pull the images from on-premise docker registry locally.

The picture below illustrates the network access flow when the docker registry is hosted on-premise.

Docker registry hosted On-premise


Please refer to On-premise Docker registry setup to set up, configure, install and manage the on-premise docker registry service and images.

Tip

Deploying an on-premise docker registry server is recommended. However, it is optional if there is no restriction on internet access. With an on-premise docker registry server, images can be downloaded offline in advance and kept ready for a fresh install or an update. This avoids network glitches or download issues from the internet during a production environment's installation or upgrade.

3. HTTP Proxy support for deployment

Note

RHEL is supported only for RDAF platform versions earlier than 8.0. Fresh installations of version 8.0 on RHEL are not supported.

Optionally, RDA Fabric docker images can also be accessed over an HTTP proxy during the deployment, if one is configured to control internet access.

All RDA Fabric machines on which the services are going to be deployed should be configured with the HTTP proxy settings.

- Edit the /etc/environment file and define the HTTP proxy server settings as shown below.
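The /etc/environment entries use the plain KEY="value" form. The Python sketch below only renders the text to paste into that file.

A few values are assumptions:

- The proxy address 192.168.125.66:3128 is the example address used elsewhere in this guide.
- The node IPs are placeholders.
- `render_environment_proxy` is a hypothetical helper.

The sketch writes both lowercase and uppercase variable names, because different tools read different spellings. It always keeps the loopback entries in no_proxy.

```python
def render_environment_proxy(proxy_url: str, bypass_hosts: list[str]) -> str:
    """Render proxy settings in /etc/environment (KEY="value") format.

    Hypothetical helper: loopback entries are always kept in no_proxy so that
    local traffic never goes through the proxy.
    """
    # dict.fromkeys drops duplicates while preserving first-seen order.
    no_proxy = ",".join(dict.fromkeys(["127.0.0.1", "localhost", *bypass_hosts]))
    lines = [f'{name}="{proxy_url}"'
             for name in ("http_proxy", "https_proxy", "HTTP_PROXY", "HTTPS_PROXY")]
    lines += [f'{name}="{no_proxy}"' for name in ("no_proxy", "NO_PROXY")]
    return "\n".join(lines) + "\n"


# Example values: proxy from this guide, node IPs are placeholders.
print(render_environment_proxy(
    "http://192.168.125.66:3128",
    ["192.168.125.141", "192.168.125.143"],
), end="")
```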

Note

If the worker has already been deployed and the HTTP proxy settings need to be enabled later, please follow the steps below.

- View the sample no_proxy and NO_PROXY elements that were added to the rda_worker section in the values.yaml file below.

rda_worker:
  mem_limit: 8G
  memswap_limit: 8G
  privileged: false
  Environment:
    RDA_ENABLE_TRACES: 'no'
    DISABLE_REMOTE_LOGGING_CONTROL: 'no'
    RDA_SELF_HEALTH_RESTART_AFTER_FAILURES: 3
    no_proxy: 127.0.0.1,localhost
    NO_PROXY: 127.0.0.1,localhost

- Run the command below to roll the worker back from version 3.7.2 to the previous version, 3.7.

- Once the worker container is up with tag 3.7, validate the proxy settings within the worker container using the docker inspect command.

- Run the command below to upgrade the worker from version 3.7 to 3.7.2.

- Once the worker container is up with tag 3.7.2, validate the proxy settings within the worker container using the docker inspect command.

- Once the worker is upgraded, check the proxy environment variables inside the container via docker exec -it <worker id>
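The docker inspect check in the steps above can be scripted. The Python sketch below assumes the docker CLI is on the PATH of the VM running it. It reads the container's environment with the standard `docker inspect --format '{{json .Config.Env}}'` template.

`proxy_env_from_inspect` and `container_proxy_env` are hypothetical helper names. Replace `<worker id>` with the actual container ID.

```python
import json
import subprocess

# Variable names are compared case-insensitively (no_proxy and NO_PROXY both match).
PROXY_KEYS = {"http_proxy", "https_proxy", "no_proxy"}


def proxy_env_from_inspect(env_list: list[str]) -> dict[str, str]:
    """Keep only proxy-related KEY=VALUE entries from a container's Config.Env."""
    result = {}
    for entry in env_list:
        key, sep, value = entry.partition("=")  # split on the first '=' only
        if sep and key.lower() in PROXY_KEYS:
            result[key] = value
    return result


def container_proxy_env(container_id: str) -> dict[str, str]:
    """Hypothetical helper: run docker inspect on one container, return its proxy vars."""
    completed = subprocess.run(
        ["docker", "inspect", "--format", "{{json .Config.Env}}", container_id],
        check=True,
        capture_output=True,
        text=True,
    )
    return proxy_env_from_inspect(json.loads(completed.stdout))
```

For example, `container_proxy_env("<worker id>")` should show the no_proxy and NO_PROXY values defined in values.yaml.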

Info

Note: IP address details are given for reference only. They need to be replaced with the HTTP proxy server IP and port applicable to your environment.

Warning

Note: For the no_proxy and NO_PROXY environment variables, please include the loopback address and the IP addresses of all RDA platform, infrastructure, application and worker nodes. This ensures that internal RDA Fabric application traffic does not go through the HTTP proxy server.

Additionally, include the IP addresses or DNS names of any target applications or devices that do not need to be reached through the HTTP proxy server.
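To double-check the warning above, the Python sketch below compares a no_proxy value against the list of RDA Fabric node addresses that must bypass the proxy. `missing_no_proxy_entries` is a hypothetical helper.

The sketch matches exact entries and, for IP addresses, CIDR entries such as 192.168.125.0/24. Treating CIDR as honored is an assumption, because not every client supports CIDR notation in no_proxy. Listing each node IP explicitly remains the safer choice.

```python
import ipaddress


def _is_covered(host: str, entries: set[str]) -> bool:
    """True if host appears verbatim or (for IPs) falls inside a CIDR entry."""
    if host in entries:
        return True
    try:
        address = ipaddress.ip_address(host)
    except ValueError:
        return False  # DNS names only match exact entries in this sketch
    for entry in entries:
        if "/" not in entry:
            continue
        try:
            if address in ipaddress.ip_network(entry, strict=False):
                return True
        except ValueError:
            continue  # ignore malformed CIDR entries
    return False


def missing_no_proxy_entries(no_proxy: str, required_hosts: list[str]) -> list[str]:
    """Hypothetical helper: required hosts that the no_proxy value does not cover."""
    entries = {item.strip() for item in no_proxy.split(",") if item.strip()}
    return [host for host in required_hosts if not _is_covered(host, entries)]
```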

Optionally, RDA Fabric docker images can also be accessed over an HTTP proxy during the deployment, if one is configured to control internet access.

All RDA Fabric machines on which the services are going to be deployed should be configured with the HTTP proxy settings.

- Edit the /etc/profile.d/proxy.sh file and define the HTTP proxy server settings as shown below.

- Make the file executable, then source it (or log out and log in again) to enable the HTTP proxy settings.

- Configure the HTTP proxy for the APT package manager by editing the /etc/apt/apt.conf.d/80proxy file as shown below.
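The contents of /etc/profile.d/proxy.sh and /etc/apt/apt.conf.d/80proxy can be generated from the same proxy details. The Python sketch below is illustrative only.

A few values are assumptions:

- The host, port and credentials are placeholders.
- `proxy_url`, `render_proxy_sh` and `render_apt_proxy` are hypothetical names.

Credentials are percent-encoded so that characters such as "\" or "@" do not break the URL. The apt lines use APT's standard `Acquire::http::Proxy` and `Acquire::https::Proxy` options.

```python
from typing import Optional
from urllib.parse import quote


def proxy_url(host: str, port: int, username: Optional[str] = None,
              password: Optional[str] = None) -> str:
    """Build http://[user[:password]@]host:port with percent-encoded credentials."""
    auth = ""
    if username:
        auth = quote(username, safe="")
        if password:
            auth += ":" + quote(password, safe="")
        auth += "@"
    return f"http://{auth}{host}:{port}"


def render_proxy_sh(url: str, no_proxy: str) -> str:
    """Shell exports for /etc/profile.d/proxy.sh (both spellings of each variable)."""
    names = ("http_proxy", "https_proxy", "HTTP_PROXY", "HTTPS_PROXY")
    lines = [f'export {name}="{url}"' for name in names]
    lines += [f'export {name}="{no_proxy}"' for name in ("no_proxy", "NO_PROXY")]
    return "\n".join(lines) + "\n"


def render_apt_proxy(url: str) -> str:
    """APT configuration lines for /etc/apt/apt.conf.d/80proxy."""
    return (f'Acquire::http::Proxy "{url}/";\n'
            f'Acquire::https::Proxy "{url}/";\n')
```

Username and password are optional and only needed when the proxy enforces authentication.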

Note

If the worker has already been deployed and the HTTP proxy settings need to be enabled later, please follow the steps below.

- View the sample no_proxy and NO_PROXY elements that were added to the rda_worker section in the values.yaml file below.

rda_worker:
  mem_limit: 8G
  memswap_limit: 8G
  privileged: false
  Environment:
    RDA_ENABLE_TRACES: 'no'
    DISABLE_REMOTE_LOGGING_CONTROL: 'no'
    RDA_SELF_HEALTH_RESTART_AFTER_FAILURES: 3
    no_proxy: 127.0.0.1,localhost
    NO_PROXY: 127.0.0.1,localhost

- The rdaf worker install command is used to deploy / install RDAF worker services.

- Run the command below to deploy all RDAF worker services.

- Once the worker container is up with tag 8.0.0, validate the proxy settings within the worker container using the docker inspect command.

- Once the worker is installed, check the proxy environment variables inside the container via docker exec -it <worker id>

Info

Note: IP address details are given for reference only. They need to be replaced with the HTTP proxy server IP and port applicable to your environment. The username and password fields are optional and are needed only if the HTTP proxy requires user authentication.

Warning

Note: For the no_proxy and NO_PROXY environment variables, please include the loopback address and the IP addresses of all RDA platform, infrastructure, application and worker nodes. This ensures that internal RDA Fabric application traffic does not go through the HTTP proxy server.

Additionally, include the IP addresses or DNS names of any target applications or devices that do not need to be reached through the HTTP proxy server.

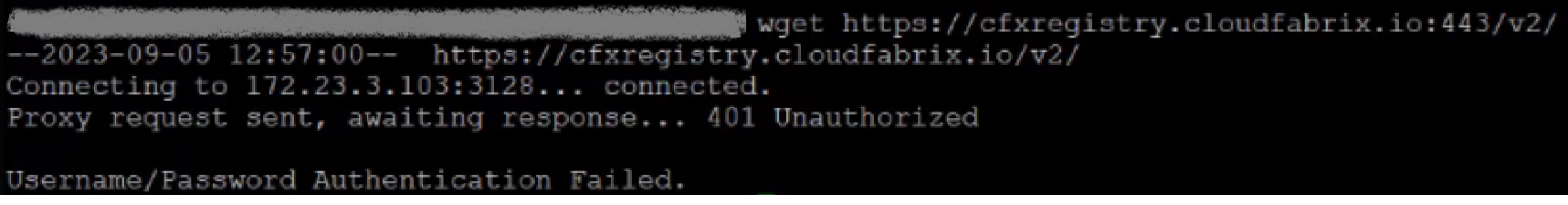

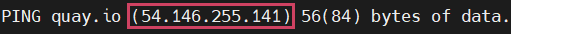

DNS Resolution and CFX Registry Access Issues

Note

If the above proxy settings do not work and you see DNS resolution or CFX registry access issues, please follow the steps below.

- Steps to resolve DNS issues and access to the CFX registry

- To update the DNS records, add the domains below to the /etc/hosts file.

Note

quay.io uses dynamic IP addresses. Before updating the hosts file, check its current IP address again with the command below.
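Because quay.io resolves to changing addresses, its current IPs should be looked up right before the hosts file is edited. The Python sketch below resolves IPv4 addresses with the system resolver and formats them as /etc/hosts lines. It only returns the lines and does not write /etc/hosts.

`resolve_ipv4` and `hosts_lines` are hypothetical helpers. The domains to pin are the ones listed in this step, not something the sketch decides.

```python
import socket


def resolve_ipv4(hostname: str) -> list[str]:
    """Return the unique IPv4 addresses the system resolver currently returns."""
    infos = socket.getaddrinfo(hostname, None, family=socket.AF_INET,
                               type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, (address, port)).
    return sorted({info[4][0] for info in infos})


def hosts_lines(mapping: dict[str, list[str]]) -> list[str]:
    """Format hostname -> addresses as /etc/hosts lines, one address per line."""
    return [f"{address} {hostname}"
            for hostname, addresses in mapping.items()
            for address in addresses]
```

On a machine with working DNS, `print("\n".join(hosts_lines({"quay.io": resolve_ipv4("quay.io")})))` prints candidate entries to review before they are added.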

- Check the DNS server settings using the command below.

- To add DNS servers, run the command below and update the netplan configuration as shown.

network:
  version: 2
  renderer: networkd
  ethernets:
    ens160:
      dhcp4: no
      dhcp6: no
      addresses: [192.168.125.66/24]
      gateway4: 192.168.125.1
      nameservers:
        addresses: [192.168.159.101,192.168.159.100]

- To apply the above changes, run the command below.

- If you still see DNS server settings issues, run the commands below.

- Configure the Docker daemon with the HTTP proxy server settings.

Create a file called http-proxy.conf under the above directory and add the HTTP proxy configuration lines as shown below.

Warning

Note: A username and password may be required for HTTP proxy server authentication. If the username contains special characters such as "\" (e.g. username\domain), it needs to be entered in URL-encoded (percent-encoded) format. This applies only to the Docker daemon. Please follow the instructions below.

HTTP Encode / Decode URL: https://www.urlencoder.org

If the username is john\acme.com, its URL-encoded value is john%5Cacme.com. Because systemd treats % as a special specifier character in unit files, each % must be written as %%. The value is therefore entered as john%%5Cacme.com, and the HTTP proxy configuration looks like the example below.
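The two escaping layers described above can be combined when generating http-proxy.conf. The Python sketch below first percent-encodes the credentials, so john\acme.com becomes john%5Cacme.com. It then doubles every % for systemd and emits Environment= lines in the form used by Docker's systemd proxy documentation.

A few values are assumptions:

- The host, port, credentials and no_proxy list are placeholders.
- `docker_proxy_dropin` and `systemd_escape` are hypothetical names.
- The same proxy URL is used for both HTTP_PROXY and HTTPS_PROXY. Adjust this if your proxy exposes a separate HTTPS endpoint.

```python
from urllib.parse import quote


def systemd_escape(value: str) -> str:
    """systemd treats % as a specifier prefix; a literal % is written as %%."""
    return value.replace("%", "%%")


def docker_proxy_dropin(host: str, port: int, no_proxy: str,
                        username: str = "", password: str = "") -> str:
    """Hypothetical helper: render a [Service] drop-in for the docker service."""
    auth = ""
    if username:
        auth = quote(username, safe="")          # john\acme.com -> john%5Cacme.com
        if password:
            auth += ":" + quote(password, safe="")
        auth += "@"
    url = systemd_escape(f"http://{auth}{host}:{port}")  # % -> %% for systemd
    lines = [
        "[Service]",
        f'Environment="HTTP_PROXY={url}"',
        f'Environment="HTTPS_PROXY={url}"',
        f'Environment="NO_PROXY={systemd_escape(no_proxy)}"',
    ]
    return "\n".join(lines) + "\n"
```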

- Restart the RDA Platform, Infrastructure, Application and Worker node VMs to apply the HTTP proxy server settings.

- To apply the HTTP proxy server settings at the docker level, run the two commands below.

- After restarting the docker service, verify the configuration by checking the docker environment using the command below.

Environment=HTTP_PROXY=http://192.168.125.66:3128 HTTPS_PROXY=https://192.168.125.66:3129 NO_PROXY=localhost,127.0.0.1,docker1.cloudfabrix.io

Note

You can find more information about docker proxy configuration at the following URL: https://docs.docker.com/config/daemon/systemd/#httphttps-proxy

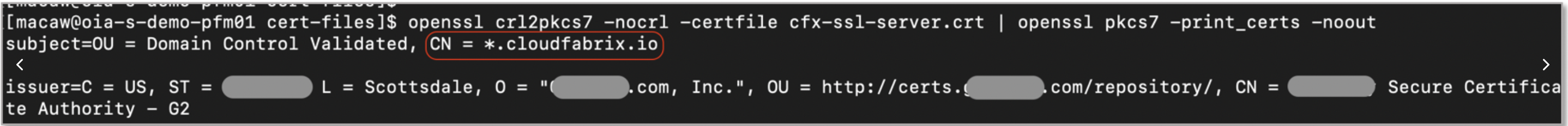

- Verify that you can connect to the CloudFabrix docker registry URL by running the command below.

curl -vv https://docker1.cloudfabrix.io:443

* Rebuilt URL to: https://docker1.cloudfabrix.io:443/external/
* Trying 54.177.20.202...
* TCP_NODELAY set
* Connected to docker1.cloudfabrix.io (68.121.166.41) port 443 (#0)
* ALPN, offering h2
* ALPN, offering http/1.1
* successfully set certificate verify locations:
* CAfile: /etc/pki/tls/certs/ca-bundle.crt
  CApath: none
* TLSv1.3 (OUT), TLS handshake, Client hello (1):
* TLSv1.3 (IN), TLS handshake, Server hello (2):
* TLSv1.2 (IN), TLS handshake, Certificate (11):
.
.
.
* SSL certificate verify ok.
> GET / HTTP/1.1
> Host: docker1.cloudfabrix.io
> User-Agent: curl/7.61.1
> Accept: */*
>
< HTTP/1.1 200 OK

- After configuring the Docker daemon, run the docker login command below to verify that the Docker daemon can access the CloudFabrix docker registry service.

WARNING! Using --password via the CLI is insecure. Use --password-stdin.
WARNING! Your password will be stored unencrypted in /home/rdauser/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

The command output should end with Login Succeeded, as shown above.

4. RDAF platform resource requirements

An RDA Fabric platform deployment can vary from simple to advanced, depending on the type of environment and the requirements.

A simple deployment can consist of one or more VMs for smaller and non-critical environments. An advanced deployment consists of many RDA Fabric VMs to support high availability and scale for business-critical environments.

CloudFabrix provided OVF support: VMware vSphere 6.0 or above

4.1 Single VM deployment:

The configuration below can be used for demo or POC environments. In this single VM configuration, all RDA Fabric platform, infrastructure and application services are installed.

For deployment and configuration options, please refer to the OVF based deployment or Deployment on RHEL/Ubuntu OS sections within this document.

| Quantity | VM Type | CPU / Memory / Network | Storage |
|---|---|---|---|
| 1 | OVF Profile: RDA Fabric Infra Instance; Services: Platform, Infrastructure, Application & Worker | CPU: 8; Memory: 64GB; Network: 1 Gbps/10 Gbps | / (root): 75GB; /opt: 50GB; /var/lib/docker: 100GB; /minio-data: 50GB; /kafka-logs: 25GB; /var/mysql: 50GB; /opensearch: 50GB; /graphdb: 50GB |

4.2 Distributed VM deployment:

The configuration below can be used to distribute the RDA Fabric services for smaller or non-critical environments.

For deployment and configuration options, please refer to the OVF based deployment or Deployment on RHEL/Ubuntu OS sections within this document.

| Quantity | OVF Profile | CPU / Memory / Network | Storage |
|---|---|---|---|
| 1 | OVF Profile: RDA Fabric Infra Instance; Services: Platform & Infrastructure | CPU: 8; Memory: 32GB; Network: 1 Gbps/10 Gbps | / (root): 75GB; /opt: 50GB; /var/lib/docker: 50GB; /minio-data: 50GB; /kafka-logs: 25GB; /var/mysql: 50GB; /opensearch: 50GB; /graphdb: 50GB |
| 1 | OVF Profile: RDA Fabric Platform Instance; Services: Application (OIA) | CPU: 8; Memory: 48GB; Network: 1 Gbps/10 Gbps | / (root): 75GB; /opt: 50GB; /var/lib/docker: 50GB |
| 1 | OVF Profile: RDA Fabric Platform Instance; Services: RDA Worker | CPU: 8; Memory: 32GB; Network: 1 Gbps/10 Gbps | / (root): 75GB; /opt: 25GB; /var/lib/docker: 25GB |

4.3 HA, Scale and Production deployment:

The configuration below can be used to distribute the RDA Fabric services in a production environment, so that they are highly available and can support larger workloads.

For deployment and configuration options, please refer to the OVF based deployment or Deployment on RHEL/Ubuntu OS sections within this document.

| Quantity | OVF Profile | CPU / Memory / Network | Storage |
|---|---|---|---|
| 3 | OVF Profile: RDA Fabric Infra Instance; Services: Infrastructure | CPU: 8; Memory: 48GB; Network: 10 Gbps | SSD only; / (root): 75GB; /opt: 50GB; /var/lib/docker: 50GB; /minio-data: 100GB; /kafka-logs: 50GB; /var/mysql: 150GB; /opensearch: 100GB; /graphdb: 50GB |
| 2 | OVF Profile: RDA Fabric Platform Instance; Services: RDA Platform | CPU: 4; Memory: 24GB; Network: 10 Gbps | / (root): 75GB; /opt: 50GB; /var/lib/docker: 50GB |
| 3 or more | OVF Profile: RDA Fabric Platform Instance; Services: Application (OIA) | CPU: 8; Memory: 48GB; Network: 10 Gbps | / (root): 75GB; /opt: 50GB; /var/lib/docker: 50GB |
| 3 or more | OVF Profile: RDA Fabric Platform Instance; Services: RDA Worker | CPU: 8; Memory: 32GB; Network: 10 Gbps | / (root): 75GB; /opt: 25GB; /var/lib/docker: 25GB |
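The HA table above translates into an aggregate capacity that must be available in the vSphere cluster. The Python sketch below sums the table's minimum quantities, counting "3 or more" as 3. It is plain arithmetic on the listed values, not a sizing tool.

The totals exclude the additional /minio-data disk described in the note that follows. `VmProfile`, `HA_PROFILES` and `totals` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmProfile:
    name: str
    count: int                 # minimum quantity from the table
    vcpu: int
    memory_gb: int
    disks_gb: dict[str, int]   # mount point -> size in GB


HA_PROFILES = [
    VmProfile("Infrastructure", 3, 8, 48,
              {"/": 75, "/opt": 50, "/var/lib/docker": 50, "/minio-data": 100,
               "/kafka-logs": 50, "/var/mysql": 150, "/opensearch": 100, "/graphdb": 50}),
    VmProfile("RDA Platform", 2, 4, 24, {"/": 75, "/opt": 50, "/var/lib/docker": 50}),
    VmProfile("Application (OIA)", 3, 8, 48, {"/": 75, "/opt": 50, "/var/lib/docker": 50}),
    VmProfile("RDA Worker", 3, 8, 32, {"/": 75, "/opt": 25, "/var/lib/docker": 25}),
]


def totals(profiles: list[VmProfile]) -> tuple[int, int, int]:
    """Return (total vCPUs, total memory GB, total disk GB) across all VMs."""
    vcpu = sum(p.count * p.vcpu for p in profiles)
    memory = sum(p.count * p.memory_gb for p in profiles)
    disk = sum(p.count * sum(p.disks_gb.values()) for p in profiles)
    return vcpu, memory, disk


print(totals(HA_PROFILES))  # prints (80, 432, 3125)
```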

Important

For a production rollout, the RDA Fabric platform's resources, such as CPU, memory and storage, need to be sized according to the environment. The relevant factors are alert ingestion per minute, the total number of assets for discovery and the discovery frequency, and data retention for assets, alerts/events, incidents and observability data. Please contact support@cloudfabrix.com for guidance on resource sizing.

Important

The Minio service requires a minimum of 4 VMs to run in HA mode and tolerate 1 node failure. To meet this requirement, an additional disk, /minio-data, needs to be added on one of the RDA Fabric Platform or Application VMs. The Minio cluster then spans 3 RDA Fabric Infrastructure VMs plus 1 RDA Fabric Platform or Application VM. Please refer to Adding additional disk for Minio for more information on how to add and configure it.

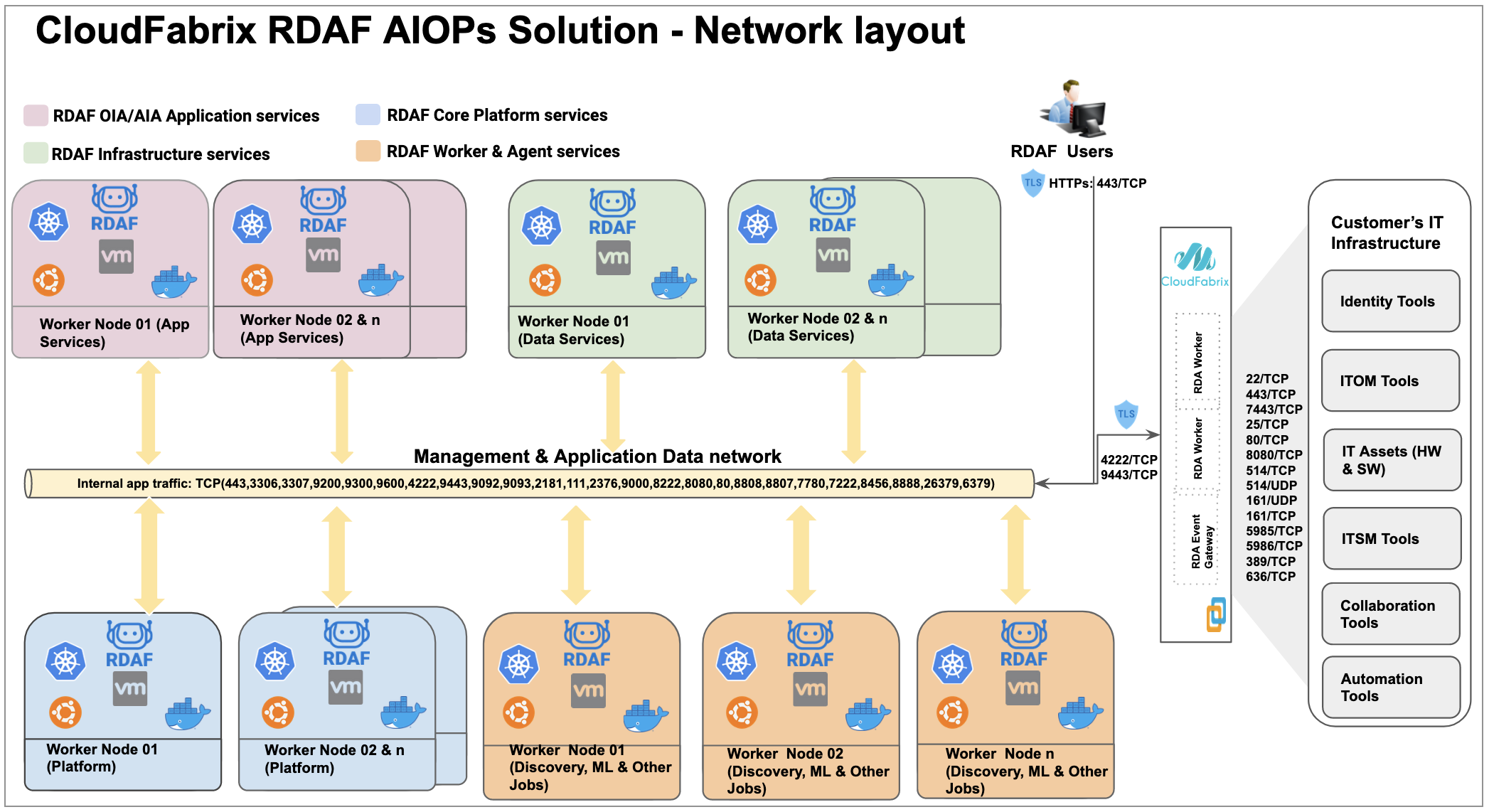

4.4 Network layout and ports:

Please refer to the picture below, which outlines the network access layout between the RDAF services and the ports they use. These apply to both Kubernetes and non-Kubernetes environments.


RDAF Services & Network ports:

| Service Type | Service Name | Network Ports |
|---|---|---|
| RDAF Infrastructure Service | Minio | 9000/TCP (Internal) 9443/TCP (External) |
| RDAF Infrastructure Service | MariaDB | 3306/TCP (Internal) 3307/TCP (Internal) 4567/TCP (Internal) 4568/TCP (Internal) 4444/TCP (Internal) |
| RDAF Infrastructure Service | Opensearch | 9200/TCP (Internal) 9300/TCP (Internal) 9600/TCP (Internal) |
| RDAF Infrastructure Service | Kafka | 9092/TCP (Internal) 9093/TCP (Internal & External) |
| RDAF Infrastructure Service | NATs | 4222/TCP (External) 6222/TCP (Internal) 8222/TCP (Internal) |
| RDAF Infrastructure Service | HAProxy | 443/TCP (External) 7443/TCP (External) 25/TCP (External) 7222/TCP (External) |
| RDAF Core Platform Service | RDA API Server | 8807/TCP (Internal) 8808/TCP (Internal) |
| RDAF Core Platform Service | RDA Portal Backend | 7780/TCP (Internal) |
| RDAF Core Platform Service | RDA Portal Frontend (Nginx) | 8080/TCP (Internal) |
| RDAF Application Service | RDA Webhook Server | 8888/TCP (Internal) |
| RDAF Application Service | RDA SMTP Server | 8456/TCP (Internal) |
| RDAF Monitoring Service | Log Monitoring | 5048/TCP (Internal) 5049/TCP (External) |

Internal Network ports: These ports are used by RDAF services for internal communication between them.

External Network ports: These ports are exposed for incoming traffic into RDAF platform, such as, portal UI access, RDA Fabric access (NATs,Minio & Kafka) for RDA workers & agents that were deployed at the edge locations, Webhook based alerts, SMTP email based alerts etc.
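If a host firewall is enabled on the RDAF VMs, the External ports in the table above are the ones that must accept incoming traffic. The Python sketch below parses the table's port notation and prints candidate rules for ufw (Ubuntu) and firewall-cmd (firewalld on RHEL). It does not run them.

A few points are assumptions:

- Whether explicit host firewall rules are needed depends on your environment.
- Internal ports must additionally be reachable between RDAF VMs in distributed deployments.
- `SERVICE_PORTS`, `external_ports`, `ufw_rules` and `firewalld_rules` are hypothetical names.

```python
import re

# Port strings copied from the table above (services that expose External ports).
SERVICE_PORTS = {
    "Minio": "9000/TCP (Internal) 9443/TCP (External)",
    "Kafka": "9092/TCP (Internal) 9093/TCP (Internal & External)",
    "NATs": "4222/TCP (External) 6222/TCP (Internal) 8222/TCP (Internal)",
    "HAProxy": "443/TCP (External) 7443/TCP (External) 25/TCP (External) 7222/TCP (External)",
    "Log Monitoring": "5048/TCP (Internal) 5049/TCP (External)",
}

PORT_RE = re.compile(r"(\d+)/(TCP|UDP) \(([^)]*)\)")


def external_ports(table: dict[str, str]) -> list[tuple[int, str]]:
    """Return sorted (port, protocol) pairs whose scope mentions External."""
    ports = set()
    for spec in table.values():
        for port, proto, scope in PORT_RE.findall(spec):
            if "External" in scope:
                ports.add((int(port), proto.lower()))
    return sorted(ports)


def ufw_rules(ports: list[tuple[int, str]]) -> list[str]:
    return [f"sudo ufw allow {port}/{proto}" for port, proto in ports]


def firewalld_rules(ports: list[tuple[int, str]]) -> list[str]:
    rules = [f"sudo firewall-cmd --permanent --add-port={port}/{proto}"
             for port, proto in ports]
    return rules + ["sudo firewall-cmd --reload"]  # apply the permanent rules


print("\n".join(ufw_rules(external_ports(SERVICE_PORTS))))
```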

Asset Discovery and Integrations: Network Ports

| Access Protocol Details | Endpoint Network Ports |
|---|---|
| Windows AD or LDAP - Identity and Access Management - RDAF platform --> endpoints (Windows AD or LDAP) | 389/TCP 636/TCP |
| SSH based discovery - RDA Worker/Agent (EdgeCollector) --> endpoints (Ex: Linux/Unix, Network/Storage Devices) | 22/TCP |
| HTTP API based discovery/Integration: RDA Worker / AIOps app --> endpoints (Ex: SNOW, CMDB, vCenter, K8s, AWS etc..) | 443/TCP 80/TCP 8080/TCP |
| Windows OS discovery using WinRM/SSH protocol RDA Worker --> Windows Servers | 5985/TCP 5986/TCP 22/TCP |
| SNMP based discovery: - RDA Agent (EdgeCollector) --> endpoints (Ex: network devices like switches, routers, firewall, load balancers etc) | 161/UDP 161/TCP |

5. RDAF platform VMs deployment using OVF

Download the latest OVF image from CloudFabrix (or contact support@cloudfabrix.com).

Supported VMware vSphere version: 6.5 or above

- OVF OS Version: Ubuntu 24.04.x LTS

- OVF Download Link: https://macaw-amer.s3.us-east-1.amazonaws.com/images/vmware/v8.0.0/CFX-Ubuntu-v8.0.0.zip

Step-1: Log in to the VMware vCenter Web Client to install the RDA Fabric platform VMs using the downloaded OVF image.

Info

Note: The user deploying VMs for the CloudFabrix RDA Fabric platform is expected to have sufficient VMware vCenter privileges. The user should also have the necessary prerequisite credentials and details at hand (e.g. IP address/FQDN, gateway, DNS and NTP server details).

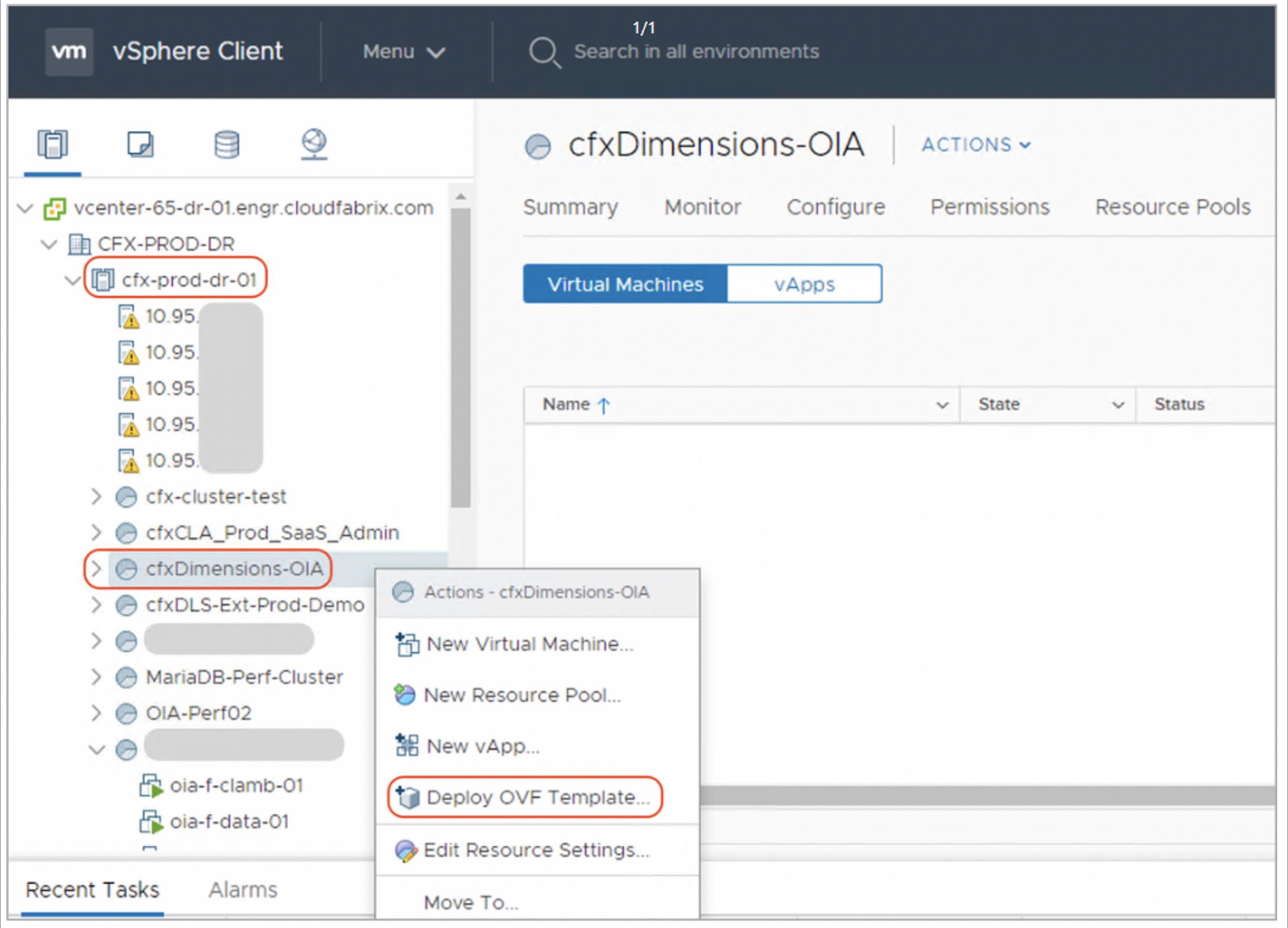

Step-2: Select a vSphere cluster/resource pool in vCenter, right-click on it and select Deploy OVF Template as shown below.

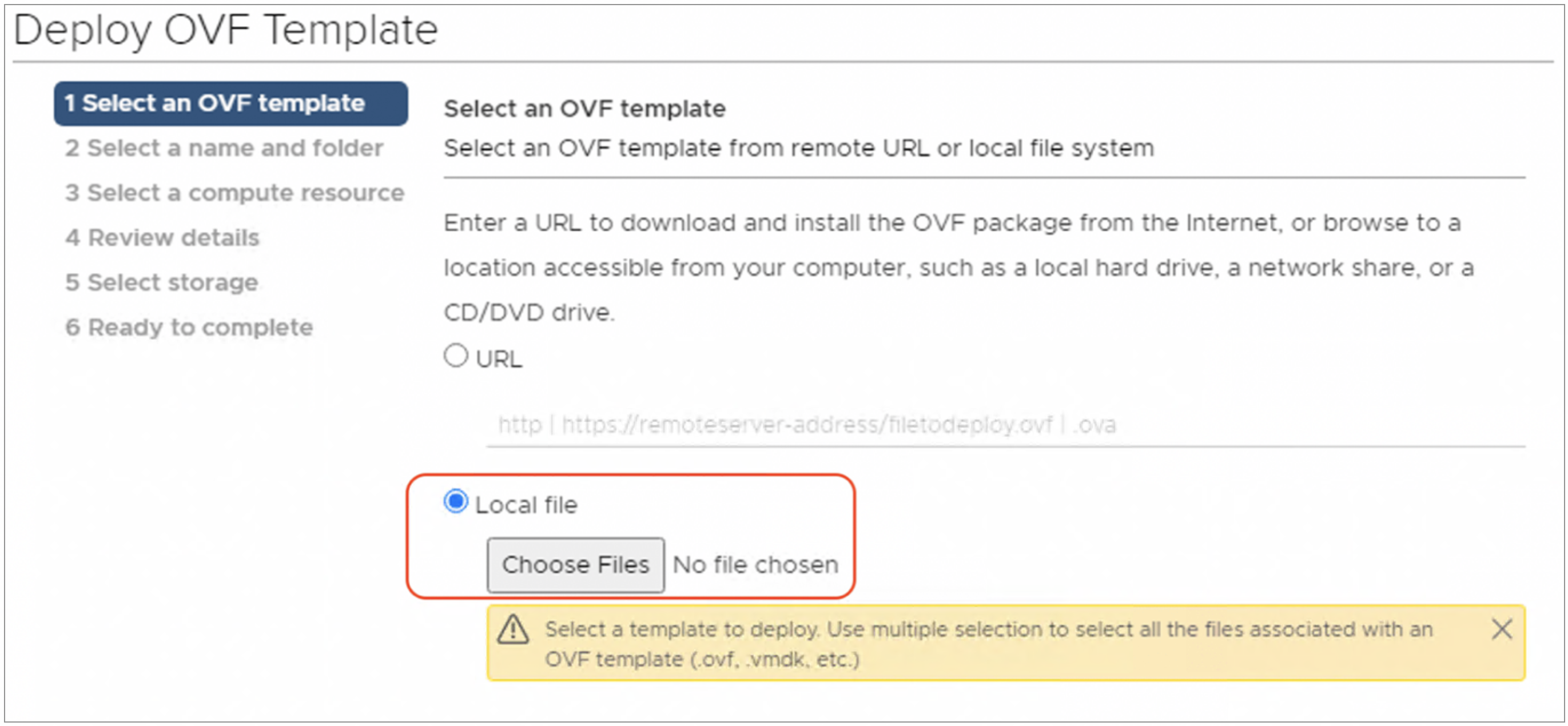

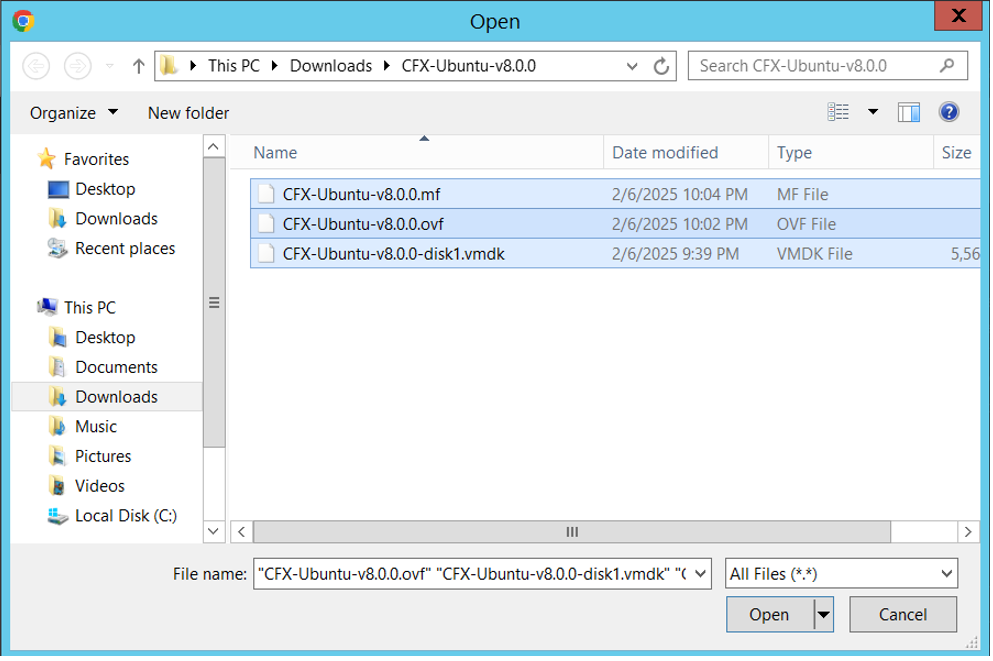

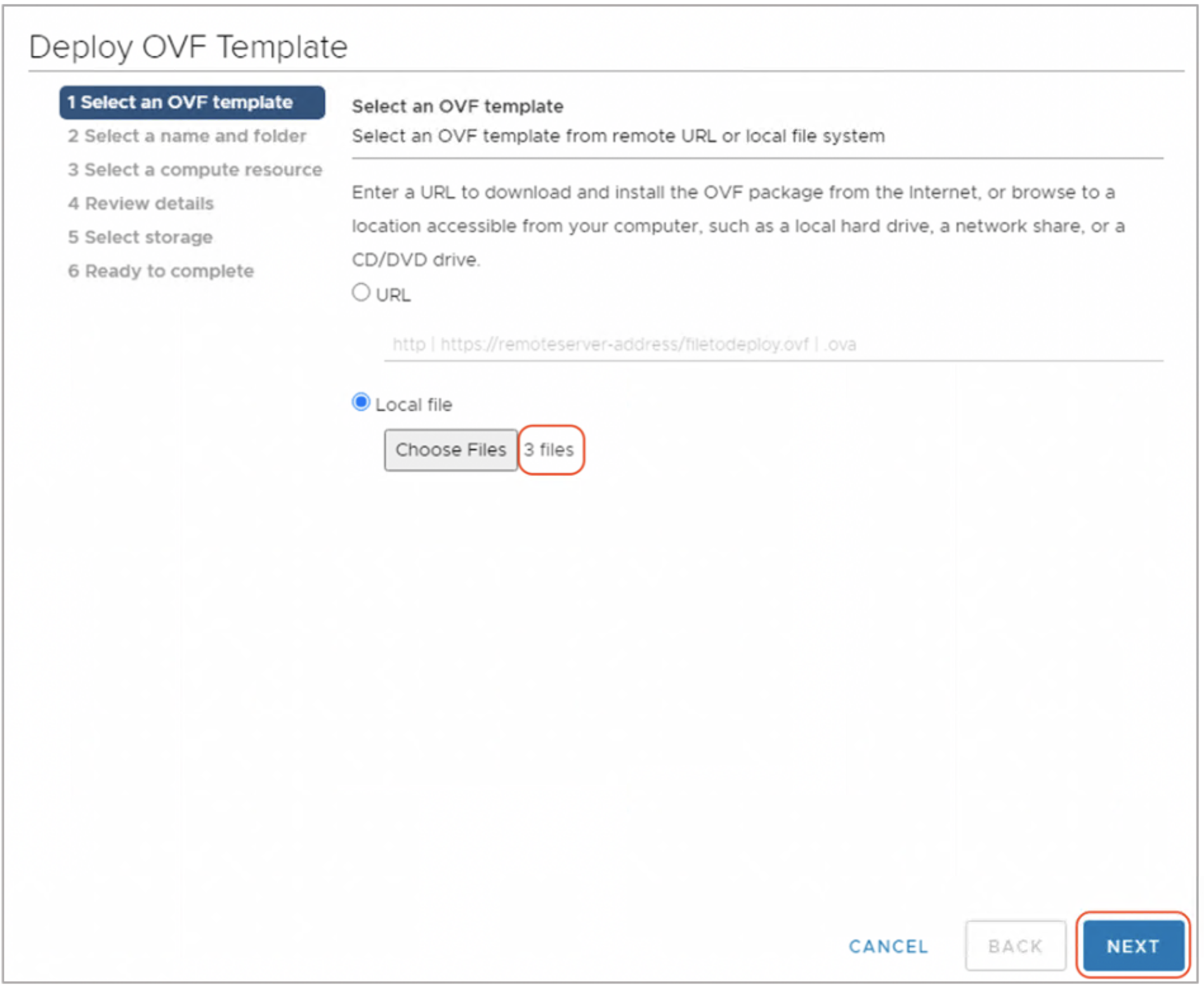

Step-3: Select the OVF image from the location it was downloaded.

Info

Note: When the VMware vSphere Web Client is used to deploy the OVF, all files necessary to deploy the OVF template must be selected. Select all the binary files (.ovf, .mf and .vmdk files) for deploying the VM.

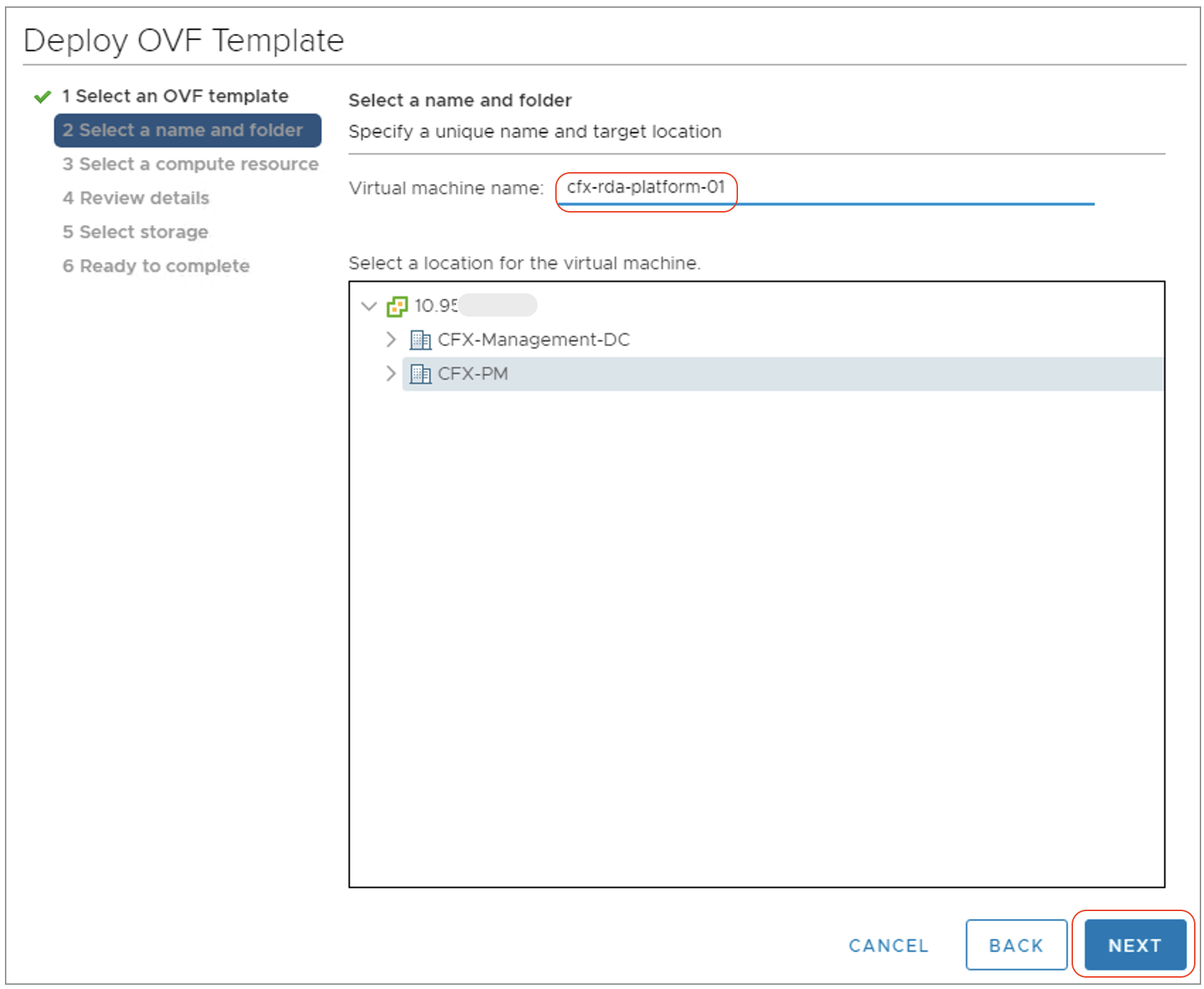

Step-4: Click Next, enter an appropriate VM name and select the Datacenter on which the VM is going to be deployed.

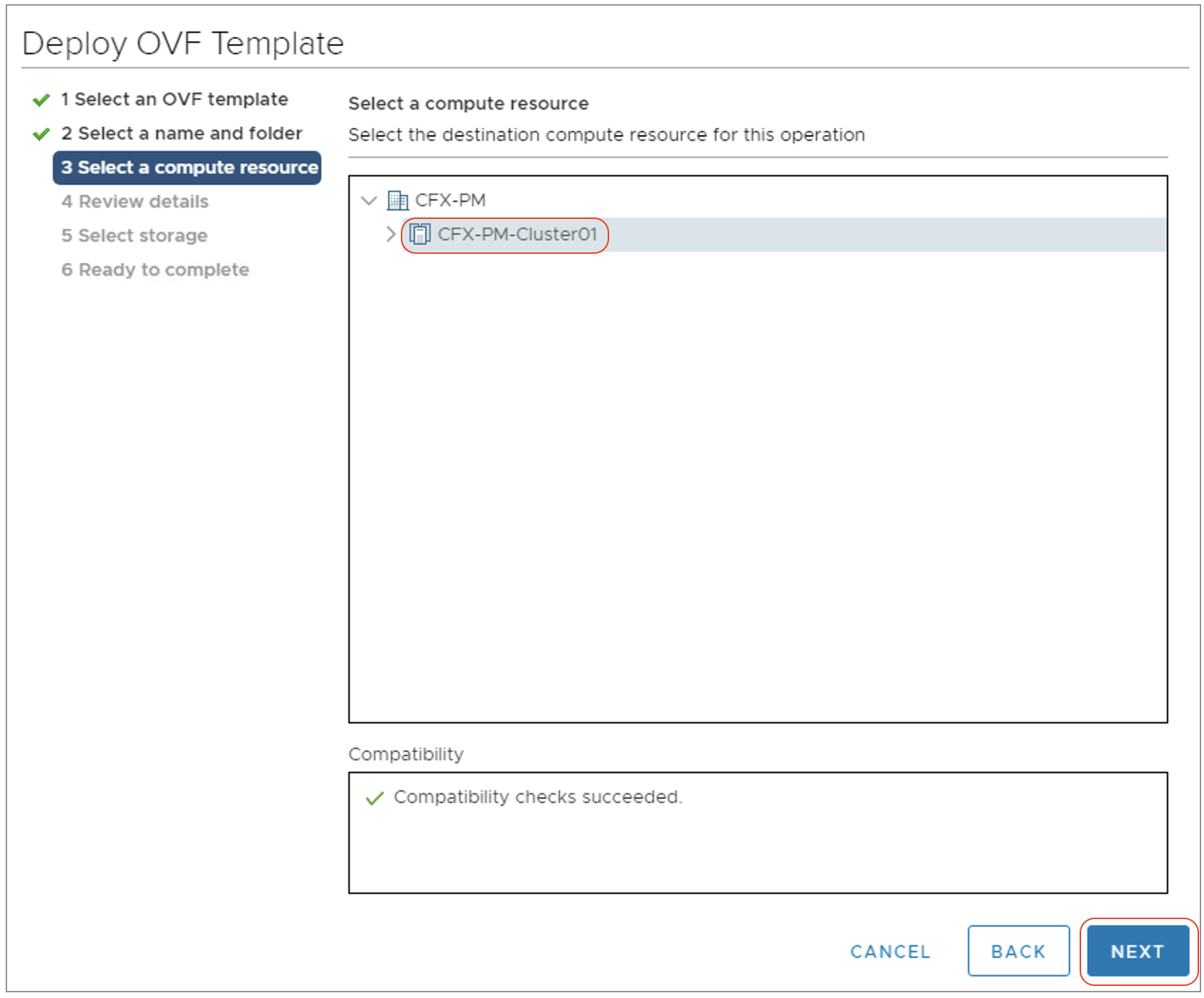

Step-5: Click Next and select an appropriate vSphere Cluster / Resource pool where the RDA Fabric VM is going to be deployed.

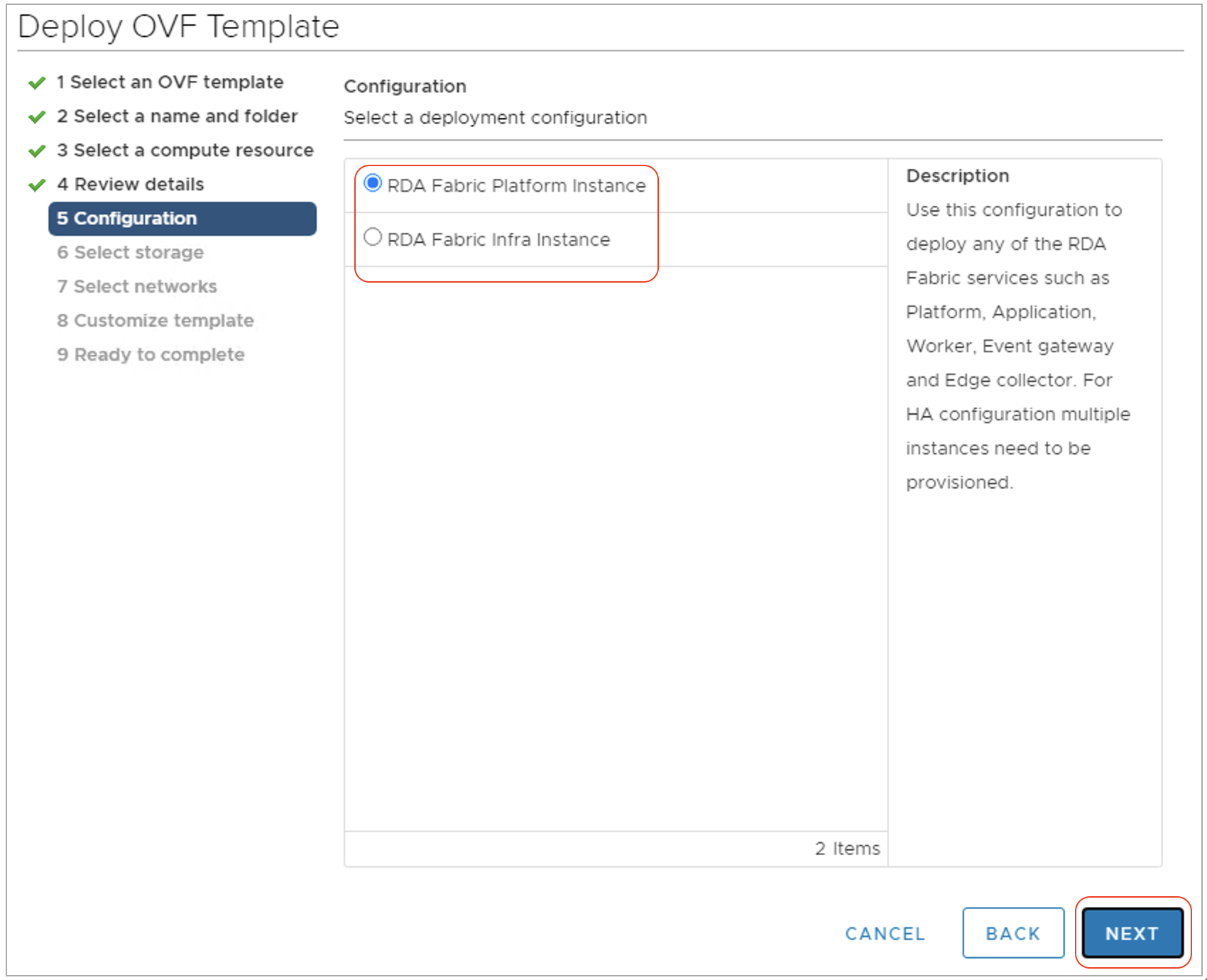

Step-6: Click Next to go to the deployment configuration view. The following configuration options are available during the deployment.

- RDA Fabric Platform Instance: Select this option to deploy any of the RDA Fabric services, such as Platform, Application, Worker, Event gateway and Edge collector. For an HA configuration, multiple instances need to be provisioned.

- RDA Fabric Infra Instance: Select this option to deploy RDA Fabric infrastructure services, such as MariaDB, Kafka, Minio, NATs and Opensearch. For an HA configuration, multiple instances need to be provisioned.

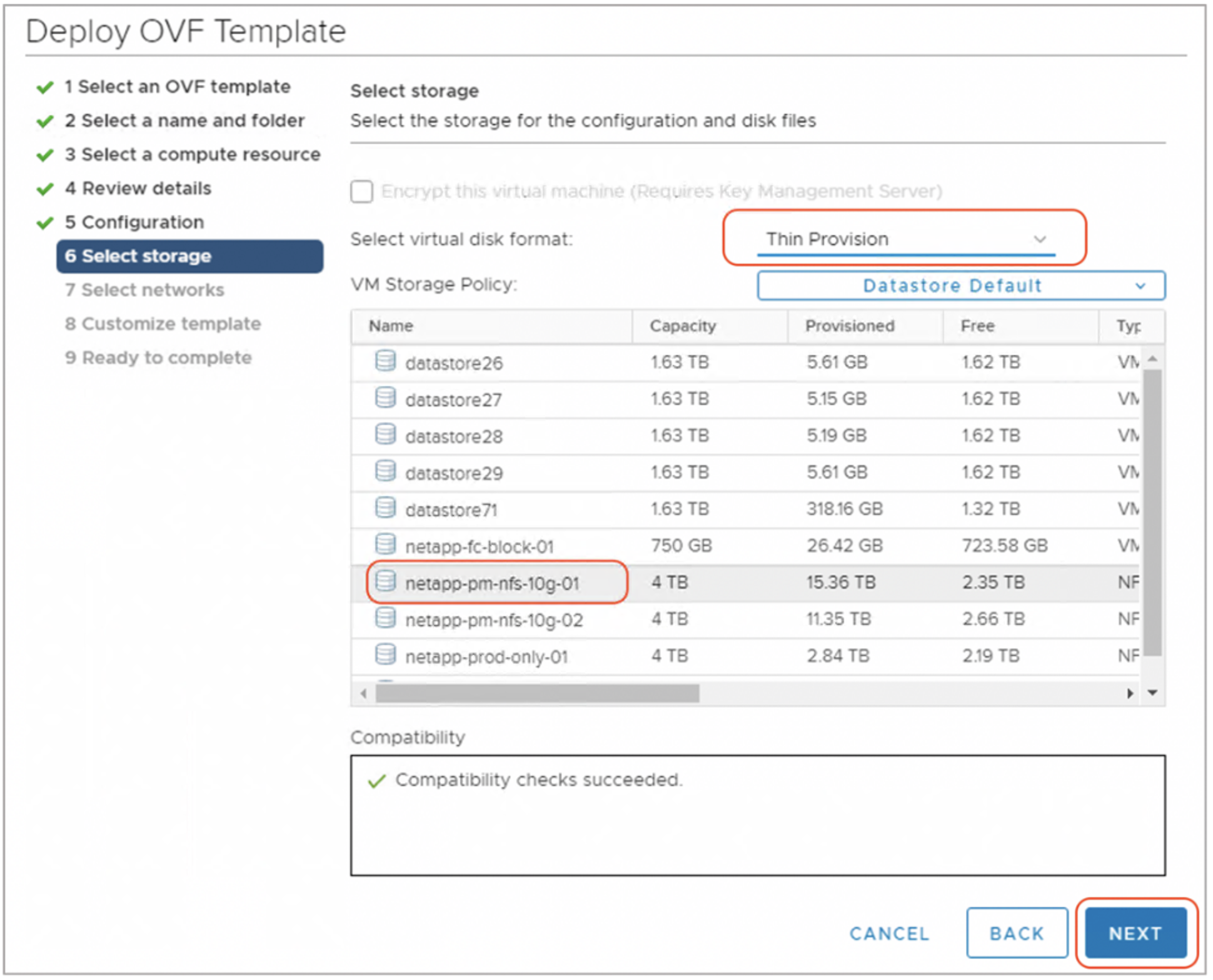

Step-7: Click Next to go to the Datastore (virtual storage) selection. Select the Datastore / Datastore Cluster where you want to deploy the VM. Make sure to select the 'Thin Provision' option, as highlighted in the screenshot below.

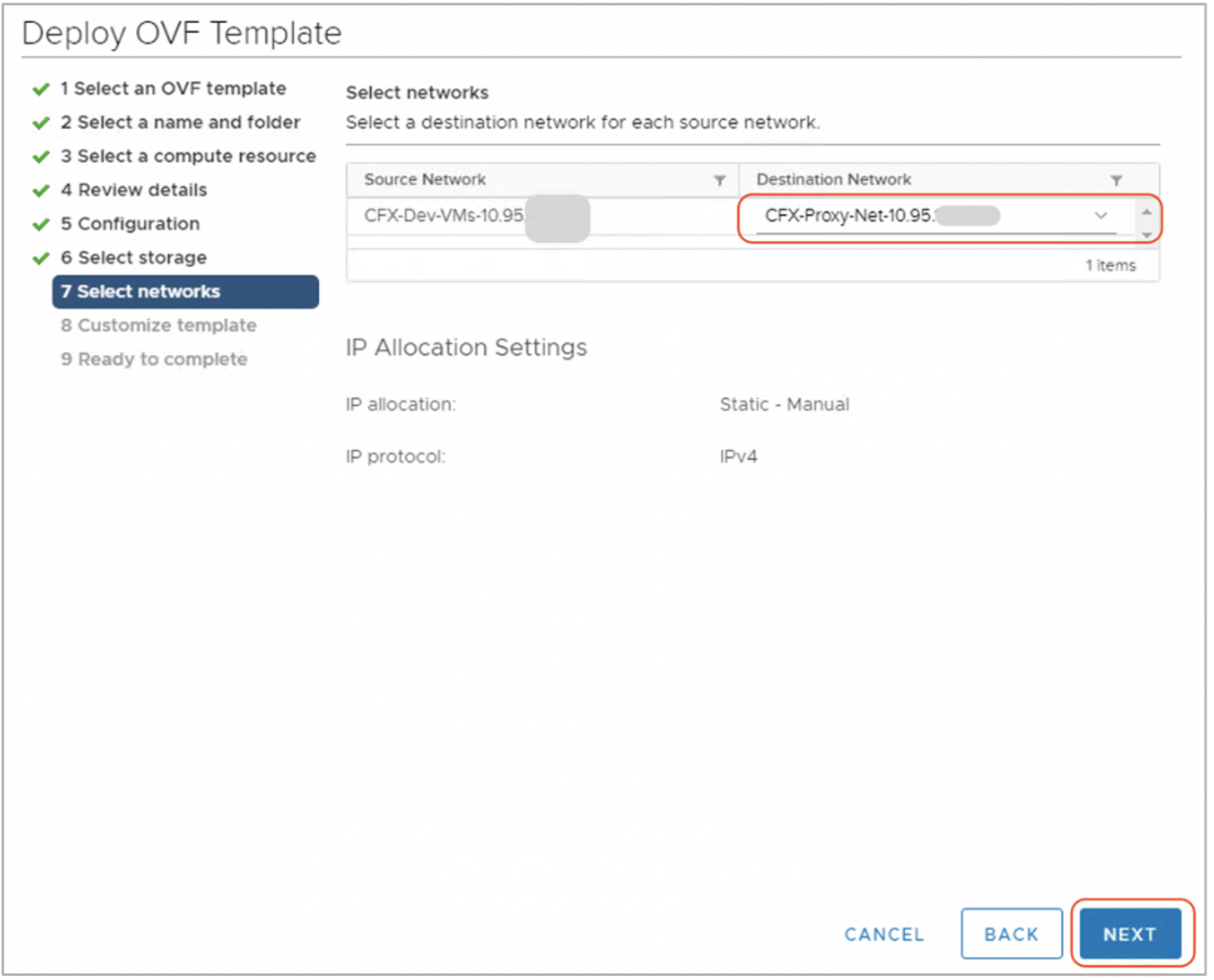

Step-8: Click Next to go to the Network port-group view. Select the appropriate virtual network port-group as shown below.

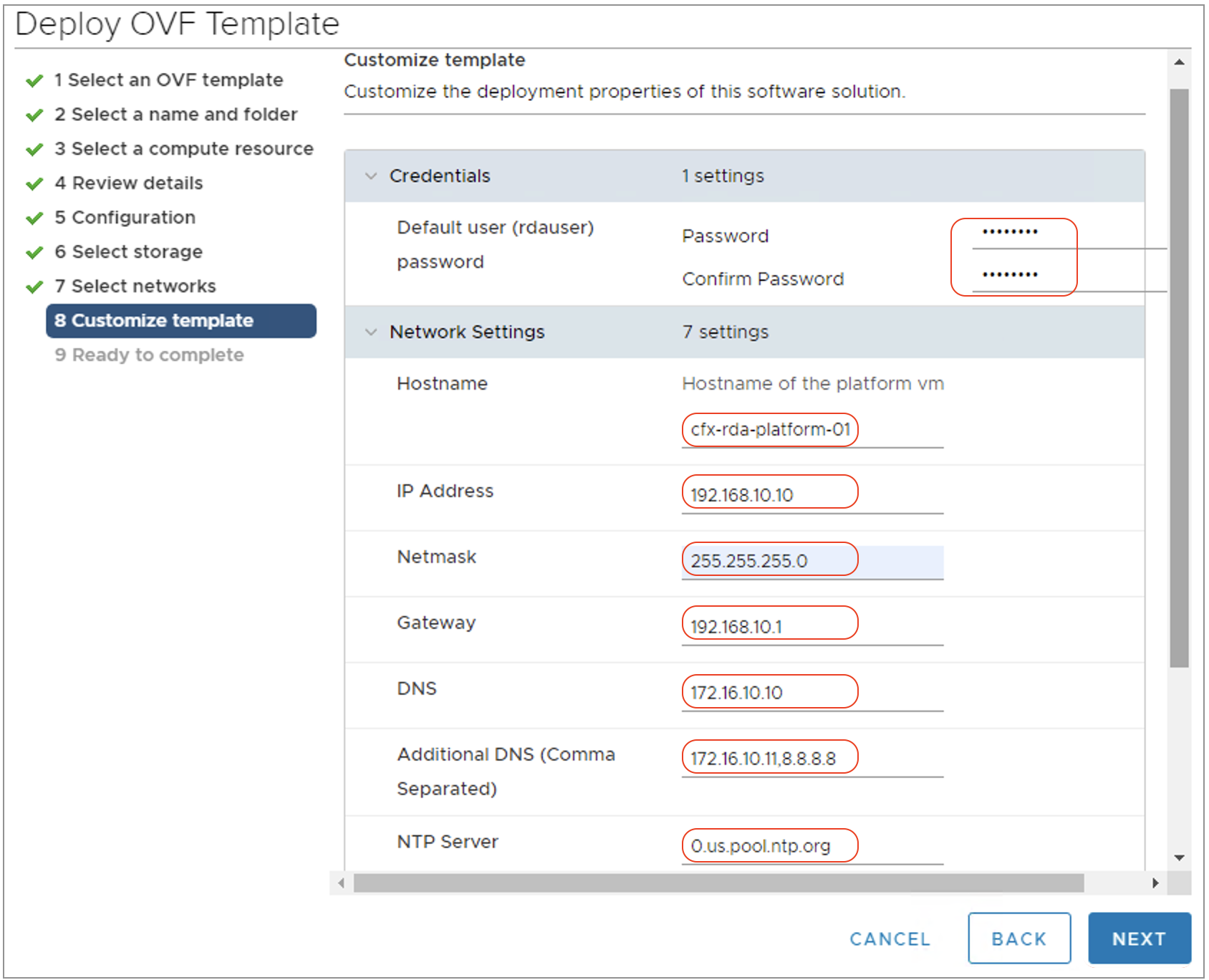

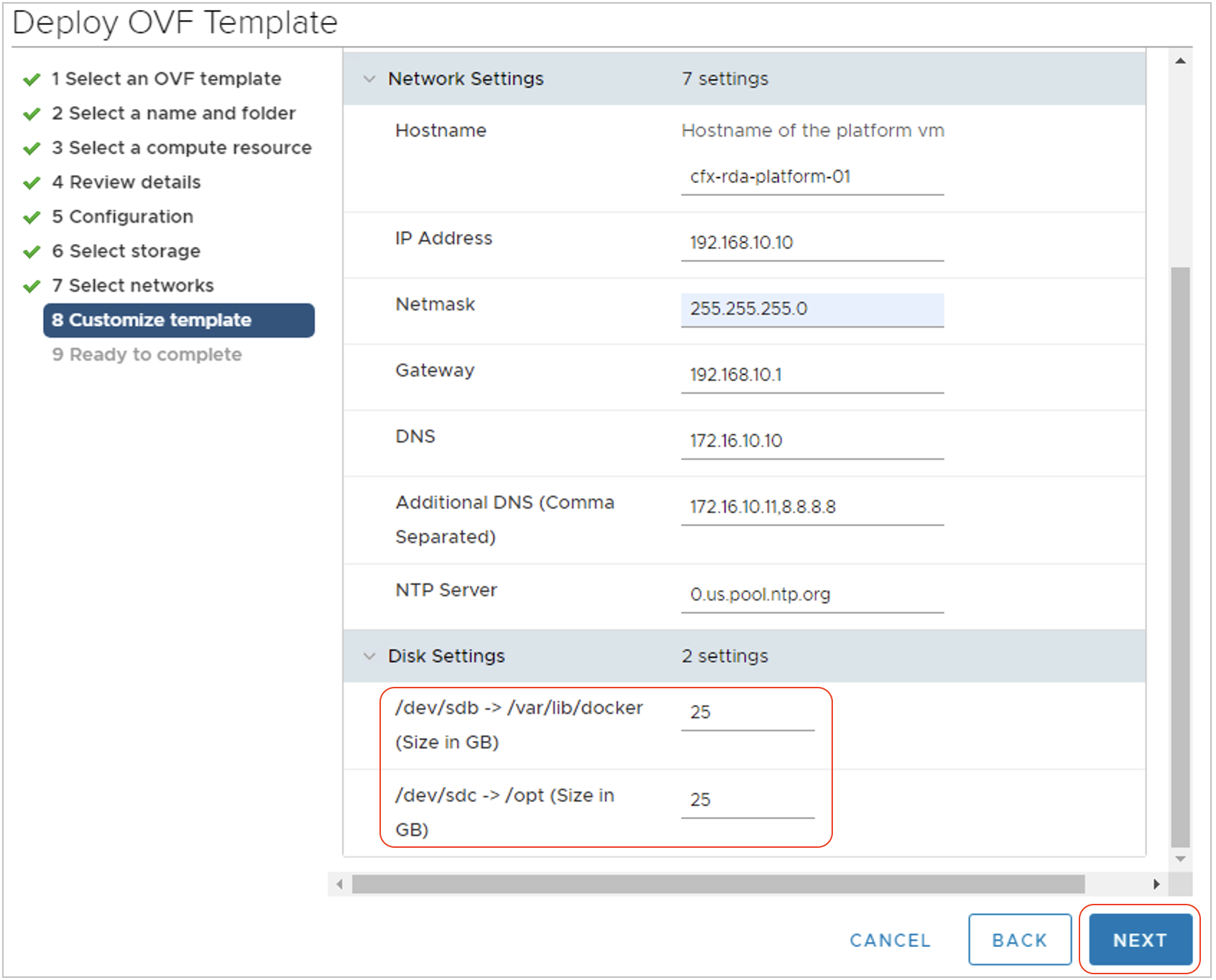

Step-9: Click Next to go to Network Settings/Properties as shown below. Enter all the necessary details, such as password, network settings and disk size, as per the requirements.

- The default OVF username and password are rdauser and rdauser1234. Update the password field to change the default password.

Warning

Note: Please make sure to configure the same password for the rdauser user on all of the RDA Fabric VMs.

Step-10: Adjust the Disk size settings based on the environment size. For production deployments, please adjust the disk size for /var/lib/docker and /opt to 75GB.

Step-11: Click Next to see a summary of OVF deployment settings and Click on Finish to deploy the VM.

Step-12: Before powering ON the deployed VM, Edit the VM settings and adjust the CPU and Memory settings based on the environment size. For any help/guidance on resource sizing, please contact support@cloudfabrix.com.

Step-13: Power ON the VM and wait until it is completely up with the OVF settings. This usually takes around 2 to 5 minutes.

Info

Note: Repeat the above OVF deployment steps (Step-1 through Step-13) to provision the additional VMs required for the RDA Fabric platform.

5.1 Post OS Image / OVF Deployment Configuration

The steps below are applicable to Ubuntu 24.x environments.

Step 1: Log in to the RDA Fabric VMs using any SSH client (e.g. PuTTY). The default username is rdauser.

Step 2: Verify that NTP time is in sync on all of the RDA Fabric Platform, Infrastructure, Application, Worker & On-premise docker service VMs.

Timezone settings: Below are some of the useful commands to view / change / set Timezone on RDA Fabric VMs.

Important

The date & time settings should be in sync across all of RDA Fabric VMs for the application services to function appropriately.

To manually sync VM's time with NTP server, run the below commands.

To configure and update the NTP server settings, update /etc/chrony/chrony.conf with the NTP server details and restart the chronyd service.
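To compare time sync across VMs, the offset reported by `chronyc tracking` can be collected on each VM. The Python sketch below parses the "System time" line of that output, which reads "System time : <seconds> seconds fast|slow of NTP time". It assumes chrony is the NTP client, as configured above.

The 0.5-second tolerance is an illustrative assumption, because this guide does not specify a threshold. `parse_chrony_offset`, `local_offset` and `in_sync` are hypothetical names.

```python
import re
import subprocess

SYSTEM_TIME_RE = re.compile(
    r"^System time\s*:\s*([0-9.]+) seconds (fast|slow) of NTP time", re.MULTILINE
)


def parse_chrony_offset(tracking_output: str) -> float:
    """Return the clock offset in seconds (positive = local clock is fast)."""
    match = SYSTEM_TIME_RE.search(tracking_output)
    if match is None:
        raise ValueError("no 'System time' line in chronyc tracking output")
    seconds = float(match.group(1))
    return seconds if match.group(2) == "fast" else -seconds


def local_offset() -> float:
    """Run chronyc tracking on this VM and return its offset."""
    output = subprocess.run(["chronyc", "tracking"], check=True,
                            capture_output=True, text=True).stdout
    return parse_chrony_offset(output)


def in_sync(offset_seconds: float, tolerance: float = 0.5) -> bool:
    """Hypothetical tolerance check; adjust the tolerance for your environment."""
    return abs(offset_seconds) <= tolerance
```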

Firewall Configuration:

Verify network bandwidth between RDAF VMs:

For production deployments, the network bandwidth between RDAF VMs should be a minimum of 10 Gbps. The CloudFabrix provided OVF comes with the iperf utility, which can be used to measure the network bandwidth.

To verify network bandwidth between RDAF platform service VM and infrastructure VM, follow the below steps.

Log in to the RDAF platform service VM as rdauser using an SSH client and start the iperf utility as a server.

Info

By default, iperf listens on TCP port 5001.

Enable the iperf server port using the below command.

Start the iperf server as shown below.

$ iperf -s
------------------------------------------------------------
Server listening on TCP port 5001
TCP window size: 85.3 KByte (default)
------------------------------------------------------------

Now, log in to the RDAF infrastructure service VM as rdauser using an SSH client and start the iperf utility as a client.

The iperf client connects to the RDAF platform service VM and measures the network bandwidth, as shown below.

------------------------------------------------------------
Client connecting to 192.168.125.143, TCP port 5001
TCP window size: 2.86 MByte (default)
------------------------------------------------------------
[ 3] local 192.168.125.141 port 10654 connected with 192.168.125.143 port 5001
[ ID] Interval Transfer Bandwidth
[ 3] 0.0-10.0 sec 21.2 GBytes 18.2 Gbits/sec

Repeat the above steps between all of the RDAF VMs, in both directions, to make sure the network bandwidth is at least 10 Gbps.
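Checking many VM pairs in both directions is easier to script. The Python sketch below runs the iperf client against a VM where `iperf -s` is already running. It takes the last "<n> [K|M|G]bits/sec" figure from the output, which is the summary line, and compares it with the 10 Gbps minimum stated above.

It assumes iperf (version 2) output in the format shown above. `parse_bandwidth_gbps`, `measure_gbps` and `meets_minimum` are hypothetical names.

```python
import re
import subprocess

# Matches e.g. "18.2 Gbits/sec" or "941 Mbits/sec" (not "21.2 GBytes").
RATE_RE = re.compile(r"([0-9.]+)\s+([KMG]?)bits/sec")
TO_GBPS = {"": 1e-9, "K": 1e-6, "M": 1e-3, "G": 1.0}


def parse_bandwidth_gbps(iperf_output: str) -> float:
    """Return the last reported bandwidth in Gbit/s."""
    matches = RATE_RE.findall(iperf_output)
    if not matches:
        raise ValueError("no bandwidth figure found in iperf output")
    value, prefix = matches[-1]
    return float(value) * TO_GBPS[prefix]


def measure_gbps(server_ip: str) -> float:
    """Run 'iperf -c <server_ip>' against a VM where 'iperf -s' is running."""
    output = subprocess.run(["iperf", "-c", server_ip], check=True,
                            capture_output=True, text=True).stdout
    return parse_bandwidth_gbps(output)


def meets_minimum(gbps: float, minimum: float = 10.0) -> bool:
    return gbps >= minimum
```

For example, running `meets_minimum(measure_gbps("192.168.125.143"))` from the infrastructure VM checks one direction of the pair used above.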

Note

RHEL is supported only for RDAF platform versions earlier than 8.0. Fresh installations of version 8.0 on RHEL are not supported.


5.2 Adding additional disk for Minio 4th HA node

Info

This step applies only to the Minio infrastructure service when RDA Fabric VMs are deployed in an HA configuration. The Minio service requires a minimum of 4 nodes to form an HA cluster that tolerates 1 node failure.

Step-1: Log in to VMware vCenter and shut down one of the RDA Fabric platform or application services VMs.

Step-2: Edit the VM settings of the RDA Fabric platform services VM that was shut down in the previous step. Add a new disk with the same size as the other Minio service nodes. Please refer to Minio disk size (/minio-data) for HA configuration.

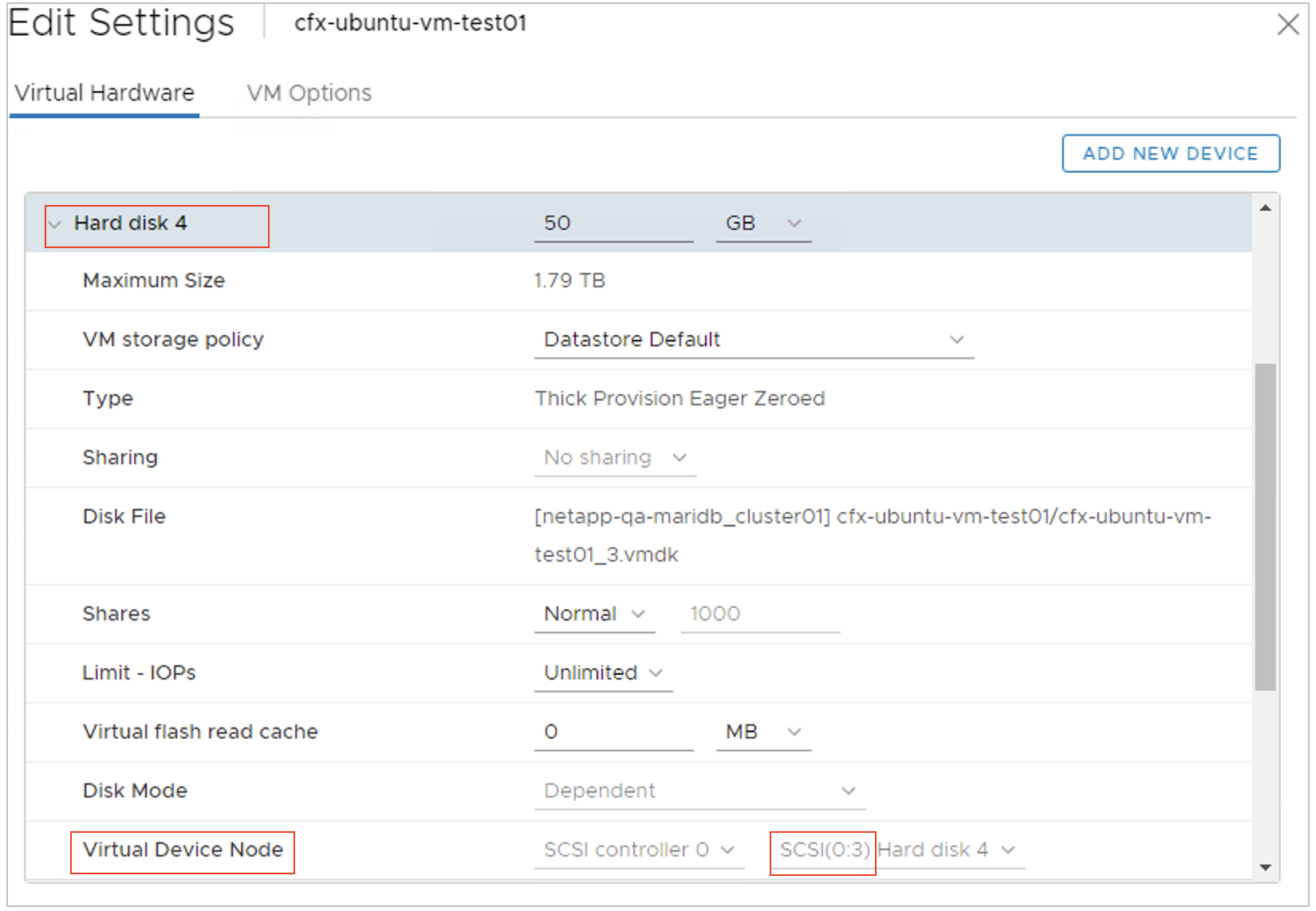

Step-3: Edit the VM settings of RDA Fabric platform services VM again on which new disk was added and note down the SCSI Disk ID as shown below.

Step-4: Power ON the RDA Fabric platform services VM on which a new disk has been added in the above step.

Step-5: Log in to the RDA Fabric Platform VM using any SSH client (e.g. PuTTY). The default username is rdauser.

Step-6: Run the below command to list all of the SCSI disks of the VM with their SCSI Disk IDs

Run the below command to see the new disk along with used disks with their mount points

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT
...
sda 8:0 0 75G 0 disk
├─sda1 8:1 0 1M 0 part
├─sda2 8:2 0 1.5G 0 part /boot
└─sda3 8:3 0 48.5G 0 part
  └─ubuntu--vg-ubuntu--lv 253:0 0 48.3G 0 lvm /
sdb 8:16 0 25G 0 disk /var/lib/docker
sdc 8:32 0 25G 0 disk /opt
sdd 8:48 0 50G 0 disk

In the example outputs above, the newly added disk is sdd and its size is 50GB.
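Spotting the new disk can also be done from lsblk's JSON output. The Python sketch below uses `lsblk --json -o NAME,TYPE,SIZE,MOUNTPOINT` and reports disks that have no partitions, LVM children or mount point, like sdd in the example above.

Treat the result as a hint and confirm it against the SCSI Disk ID noted in vCenter. An unmounted disk that already holds data would also be listed. `unused_disks` and `list_unused_disks` are hypothetical names.

```python
import json
import subprocess


def unused_disks(lsblk_json: dict) -> list[str]:
    """Disks with no children (partitions/LVM) and no mount point."""
    names = []
    for device in lsblk_json.get("blockdevices", []):
        if (device.get("type") == "disk"
                and not device.get("mountpoint")
                and not device.get("children")):
            names.append(device["name"])
    return names


def list_unused_disks() -> list[str]:
    """Run lsblk on this VM and return candidate new disks."""
    output = subprocess.run(
        ["lsblk", "--json", "-o", "NAME,TYPE,SIZE,MOUNTPOINT"],
        check=True, capture_output=True, text=True,
    ).stdout
    return unused_disks(json.loads(output))
```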

Step-7: Run the below command to create a new XFS filesystem and create a mount point directory.

Step-8: Run the below command to get the UUID of the newly created filesystem on /dev/sdd

Step-9: Update /etc/fstab to mount the /dev/sdd disk to /minio-data mount point

Step-10: Mount the /minio-data mount point and verify the mount point is mounted.

Filesystem Size Used Avail Use% Mounted on
/dev/mapper/ubuntu--vg-ubuntu--lv 48G 8.3G 37G 19% /
...
/dev/sda2 1.5G 209M 1.2G 16% /boot
/dev/sdb 25G 211M 25G 1% /var/lib/docker
/dev/sdc 25G 566M 25G 3% /opt
/dev/sdd 50G 390M 50G 1% /minio-data
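Steps 8 and 9 above (read the UUID, then add an fstab entry) can be sketched in Python as follows. The sketch assumes it runs with root privileges, since blkid may need them. It reads the UUID with `blkid -s UUID -o value <device>` and builds an fstab line that mounts by UUID, so a later device rename does not break the mount.

The mount options "defaults 0 0" are an assumption for a plain XFS data disk. `filesystem_uuid`, `fstab_entry` and `already_in_fstab` are hypothetical names.

```python
import subprocess


def filesystem_uuid(device: str) -> str:
    """Return the UUID of the filesystem on device (e.g. /dev/sdd)."""
    return subprocess.run(
        ["blkid", "-s", "UUID", "-o", "value", device],
        check=True, capture_output=True, text=True,
    ).stdout.strip()


def fstab_entry(uuid: str, mount_point: str = "/minio-data",
                fs_type: str = "xfs", options: str = "defaults") -> str:
    """Build an /etc/fstab line that mounts the filesystem by UUID."""
    return f"UUID={uuid} {mount_point} {fs_type} {options} 0 0"


def already_in_fstab(fstab_text: str, mount_point: str) -> bool:
    """True if a non-comment fstab line already mounts something at mount_point."""
    for line in fstab_text.splitlines():
        fields = line.split()
        if (len(fields) >= 2 and not fields[0].startswith("#")
                and fields[1] == mount_point):
            return True
    return False
```

Checking `already_in_fstab` before appending avoids duplicate entries if the step is repeated.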

5.3 Extending the Root (/) filesystem

Warning

Note-1: The instructions below for extending the Root (/) filesystem apply only to virtual machines provisioned using the CloudFabrix provided Ubuntu OVF.

Note-2: As a precaution, please take a VMware VM snapshot before making changes to the Root (/) filesystem.

Step-1: Check on which disk the Root (/) filesystem was created using the below command. In the below example, it was created on disk /dev/sda and partition 3 i.e. sda3.

On partition sda3, a logical volume ubuntu--vg-ubuntu--lv was created and mounted as Root (/) filesystem.

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT
loop0 7:0 0 62M 1 loop
loop1 7:1 0 62M 1 loop /snap/core20/1593
loop2 7:2 0 67.2M 1 loop /snap/lxd/21835
loop3 7:3 0 67.8M 1 loop /snap/lxd/22753
loop4 7:4 0 44.7M 1 loop /snap/snapd/15904
loop5 7:5 0 47M 1 loop /snap/snapd/16292
loop7 7:7 0 62M 1 loop /snap/core20/1611
sda 8:0 0 75G 0 disk
├─sda1 8:1 0 1M 0 part
├─sda2 8:2 0 1.5G 0 part /boot
└─sda3 8:3 0 48.5G 0 part
  └─ubuntu--vg-ubuntu--lv 253:0 0 48.3G 0 lvm /
sdb 8:16 0 100G 0 disk /var/lib/docker
sdc 8:32 0 75G 0 disk /opt

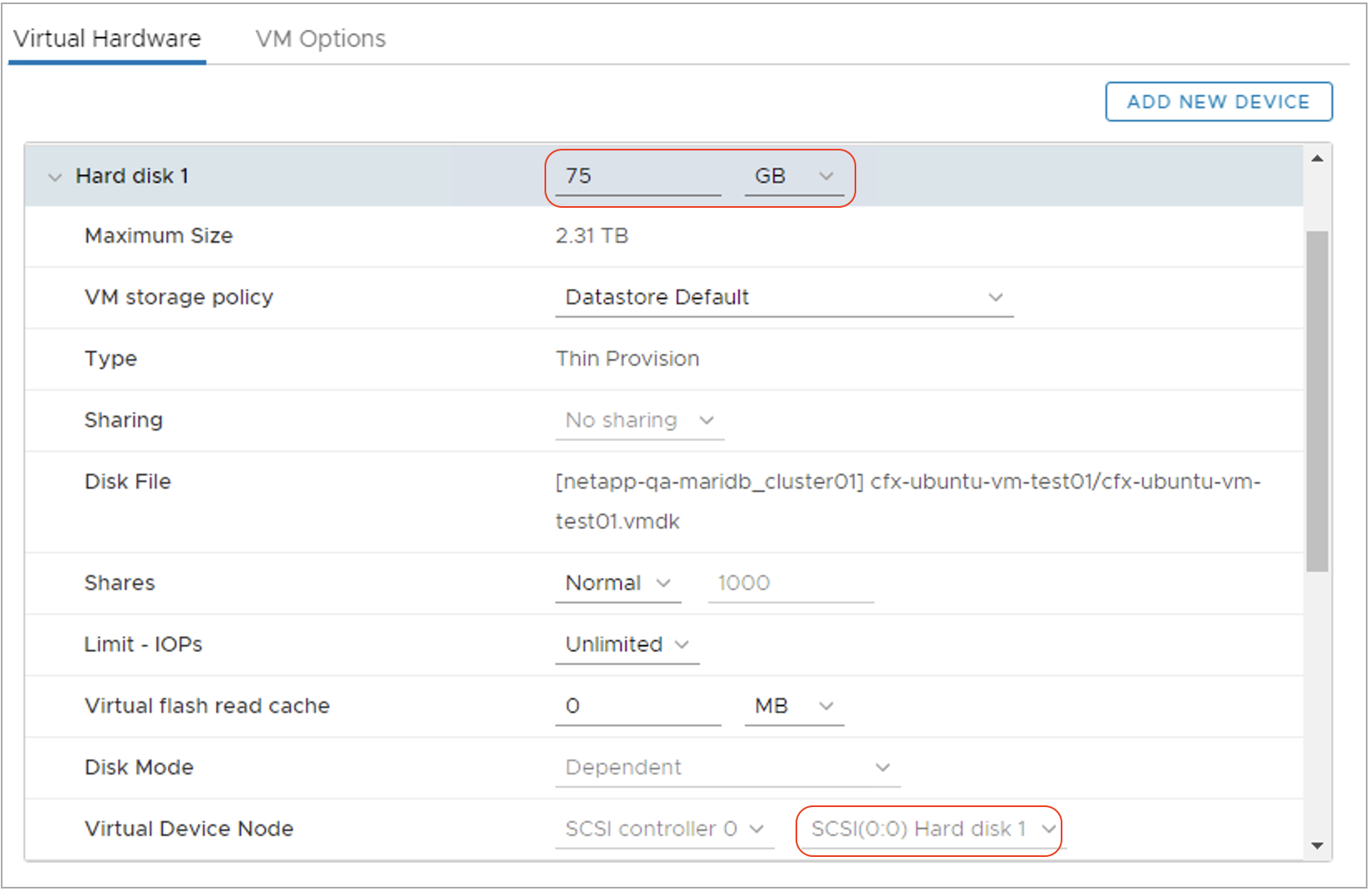

Step-2: Verify the SCSI disk id of the disk on which Root (/) filesystem was created using the below command.

In the below example, the SCSI disk id of root disk sda is 2:0:0:0, i.e. the SCSI disk id is 0 (third digit)

NAME HCTL TYPE VENDOR MODEL REV TRAN
sda 2:0:0:0 disk VMware Virtual_disk 1.0
sdb 2:0:1:0 disk VMware Virtual_disk 1.0
sdc 2:0:2:0 disk VMware Virtual_disk 1.0

Step-3: Edit the virtual machine's properties on vCenter and identify the Root disk sda using the above SCSI disk id 2:0:0:0 as highlighted in the below screenshot.

Increase the disk size from 75GB to the desired higher value in GB.

Step-4: Log back in to the Ubuntu VM CLI using an SSH client as rdauser.

Switch to the root user using sudo.

Execute the below command to rescan the Root disk i.e. sda to reflect the increased disk size.

Execute the below command to see the increased size for Root disk sda

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT
loop0 7:0 0 62M 1 loop /snap/core20/1611
loop1 7:1 0 67.8M 1 loop /snap/lxd/22753
loop2 7:2 0 67.2M 1 loop /snap/lxd/21835
loop3 7:3 0 62M 1 loop
loop4 7:4 0 47M 1 loop /snap/snapd/16292
loop5 7:5 0 44.7M 1 loop /snap/snapd/15904
loop6 7:6 0 62M 1 loop /snap/core20/1593
sda 8:0 0 100G 0 disk
├─sda1 8:1 0 1M 0 part
├─sda2 8:2 0 1.5G 0 part /boot
└─sda3 8:3 0 48.5G 0 part
  └─ubuntu--vg-ubuntu--lv 253:0 0 48.3G 0 lvm /
sdb 8:16 0 25G 0 disk /var/lib/docker
sdc 8:32 0 25G 0 disk /opt

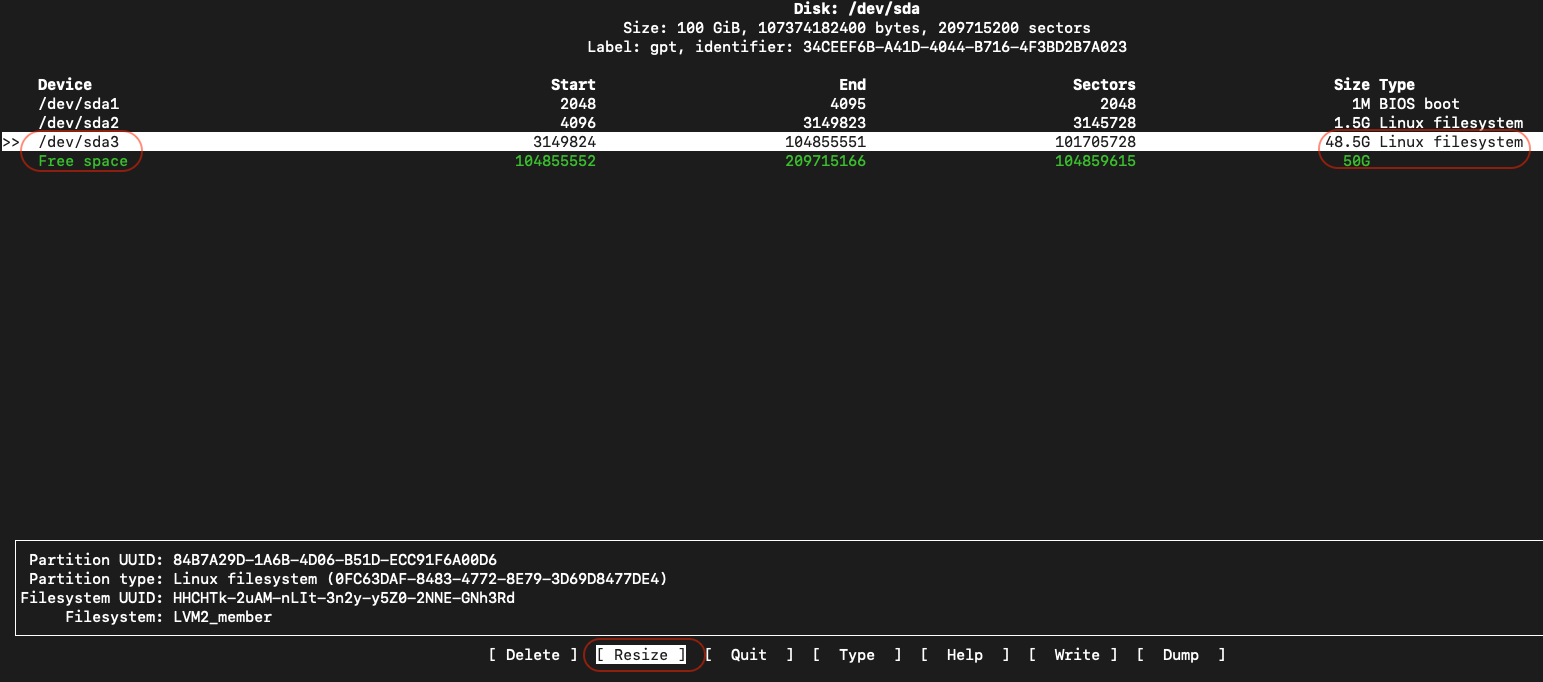

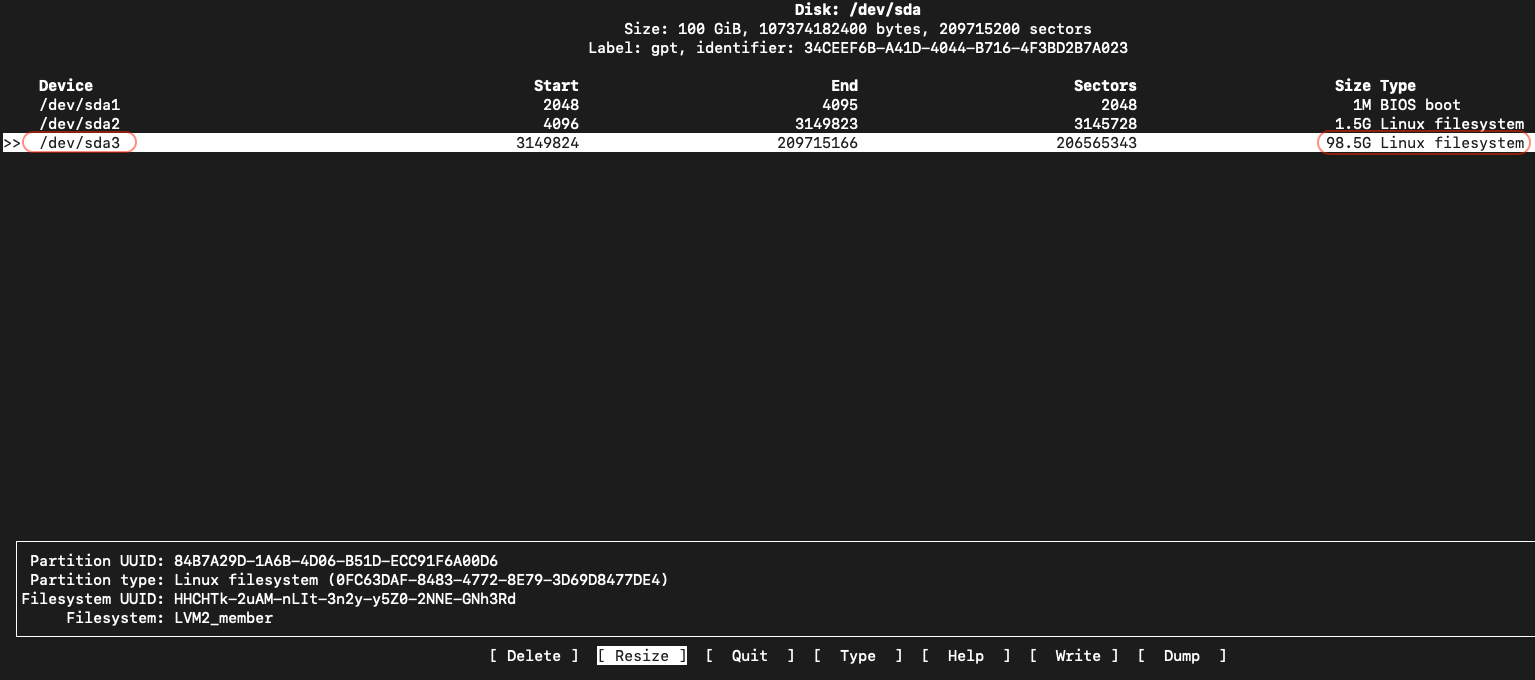

Step-5: In the above command output, identify the Root (/) filesystem's disk partition, i.e. sda3

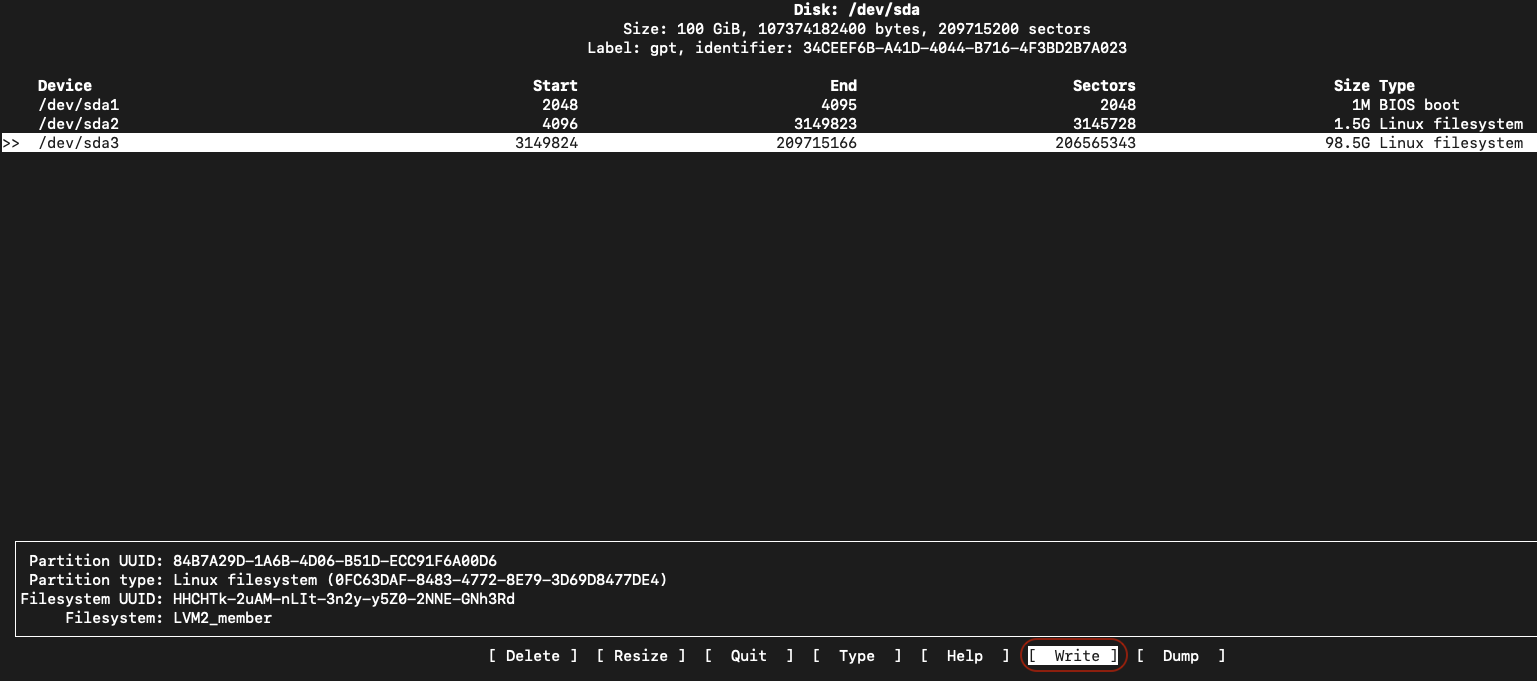

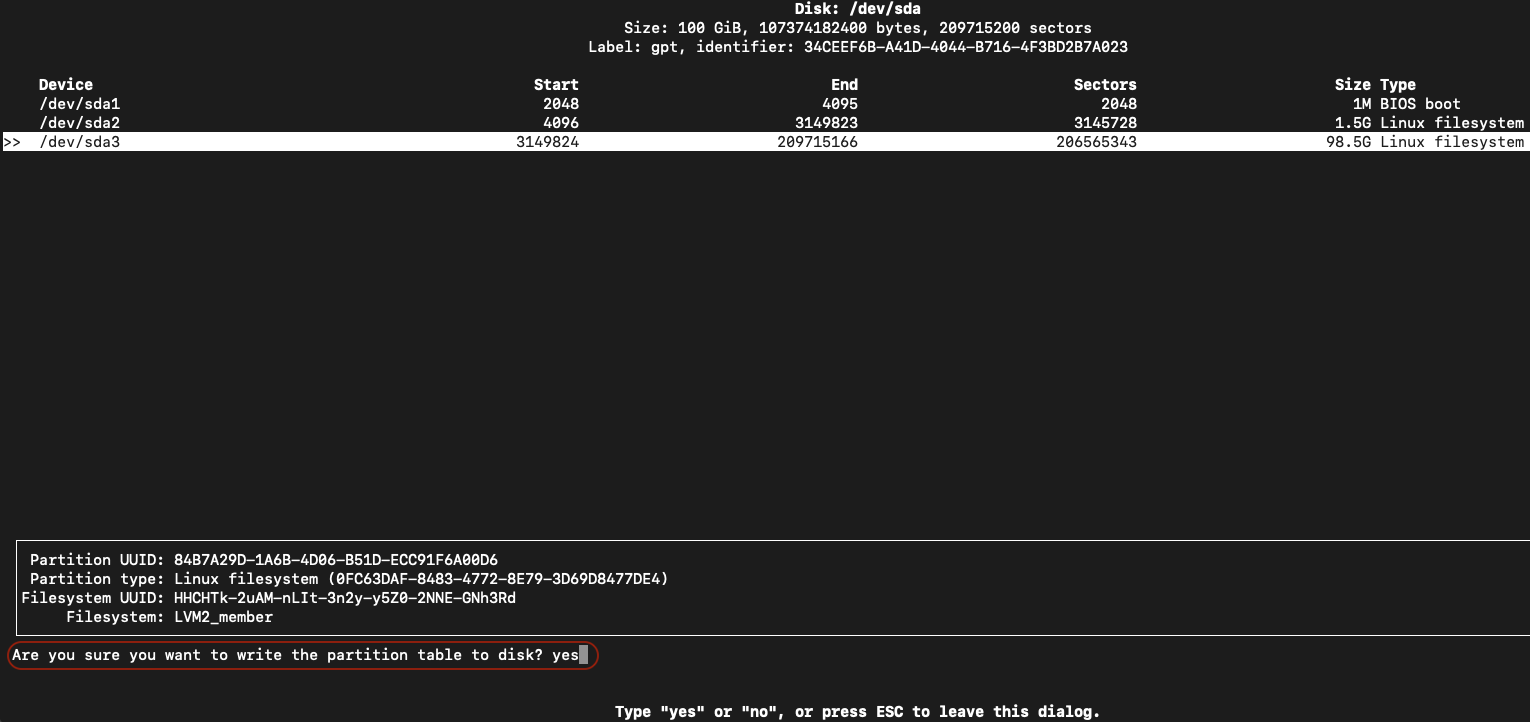

Run the cfdisk command below to resize the partition that holds the Root (/) filesystem.

- Highlight /dev/sda3 partition using Down arrow on the keyboard as shown in the below screen, use Left/Right arrow to highlight the Resize option and hit Enter

- Adjust the disk Resize in GB as desired.

Warning

The Resize disk value in GB cannot be smaller than the current size of the Root (/) filesystem.

- Once the /dev/sda3 partition is resized, use the Left/Right arrow keys to highlight the Write option and press Enter.

- Confirm the resize by typing yes and pressing Enter.

- Use the Left/Right arrow keys to highlight the Quit option and press Enter to quit the disk resizing utility.

Step-6:

Disable swap before resizing the Root (/) filesystem using the below command.

Resize the physical volume of the Root (/) filesystem, i.e. /dev/sda3.

Resize the logical volume of the Root (/) filesystem, i.e. /dev/mapper/ubuntu--vg-ubuntu--lv. In the below example, the logical volume of the Root (/) filesystem is increased by 20GB.

Resize the Root (/) filesystem.

Enable swap after resizing the Root (/) filesystem.
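The Step-6 sequence can be reviewed before it is run. The sketch below is hypothetical (`grow_root_plan` and the `RUN` variable are not part of RDAF) and assumes standard LVM2 tools, an ext4 root filesystem (Ubuntu's default; pass `xfs` to use `xfs_growfs` instead) and the 20GB increment from the example, which matches the growth from 48.3G to 68G shown in Step-7. By default it only prints the commands.

```shell
#!/usr/bin/env bash
# grow_root_plan PV LV SIZE_INCREMENT [FS_TYPE]
# Prints the Step-6 command sequence for review. FS_TYPE defaults to ext4
# (assumption). Set RUN=1 to execute the commands instead of printing them.
grow_root_plan() {
  local pv="$1" lv="$2" inc="$3" fstype="${4:-ext4}"
  local grow_fs
  case "$fstype" in
    ext4) grow_fs="resize2fs $lv" ;;
    xfs)  grow_fs="xfs_growfs /" ;;
    *)    echo "unsupported filesystem: $fstype" >&2; return 1 ;;
  esac
  local cmds=(
    "swapoff -a"
    "pvresize $pv"
    "lvextend -L +$inc $lv"
    "$grow_fs"
    "swapon -a"
  )
  local c
  for c in "${cmds[@]}"; do
    if [[ "${RUN:-0}" == 1 ]]; then
      $c || return 1   # unquoted on purpose: each entry is a simple command
    else
      echo "$c"
    fi
  done
}

# Review first, then execute as root:
#   grow_root_plan /dev/sda3 /dev/mapper/ubuntu--vg-ubuntu--lv 20G
#   RUN=1 grow_root_plan /dev/sda3 /dev/mapper/ubuntu--vg-ubuntu--lv 20G
```

Printing first gives a chance to confirm the device names against the lsblk output before anything changes on disk.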

Step-7:

Verify the increased size of the Root (/) filesystem using the below command.

Filesystem Size Used Avail Use% Mounted on

udev 3.9G 0 3.9G 0% /dev

tmpfs 793M 50M 744M 7% /run

/dev/mapper/ubuntu--vg-ubuntu--lv 68G 8.6G 56G 14% /

tmpfs 3.9G 0 3.9G 0% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

tmpfs 3.9G 0 3.9G 0% /sys/fs/cgroup

/dev/loop2 68M 68M 0 100% /snap/lxd/21835

/dev/loop4 47M 47M 0 100% /snap/snapd/16292

/dev/loop1 68M 68M 0 100% /snap/lxd/22753

/dev/loop5 45M 45M 0 100% /snap/snapd/15904

/dev/sda2 1.5G 209M 1.2G 16% /boot

/dev/sdb 25G 211M 25G 1% /var/lib/docker

/dev/sdc 25G 566M 25G 3% /opt

6. RDAF Platform VMs deployment on RHEL/Ubuntu OS

The below steps outline the required prerequisites and the configuration to be applied on RHEL or Ubuntu OS VM instances when the CloudFabrix-provided OVF is not used, to deploy and install the RDA Fabric platform, infrastructure, application, worker and on-premise docker registry services.

Software Pre-requisites:

- RHEL: RHEL 8.3 or above

- Ubuntu: Ubuntu 24.04.x or above

- Python: 3.12 or above

- Docker: 27.1.x or above

- Docker-compose: 1.29.x or above
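To compare installed versions against the above minimums, a version-aware comparison is safer than a plain string comparison (as strings, "3.7" sorts after "3.12"). A minimal sketch relying on GNU `sort -V`; the helper name `version_ge` is hypothetical.

```shell
#!/usr/bin/env bash
# version_ge ACTUAL MINIMUM
# Succeeds when the dotted version ACTUAL is greater than or equal to MINIMUM.
# sort -V orders version strings numerically field by field, so MINIMUM
# sorts first (or ties) exactly when ACTUAL >= MINIMUM.
version_ge() {
  [[ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" == "$2" ]]
}

# Example checks on a prepared VM:
#   version_ge "$(python3 -c 'import platform; print(platform.python_version())')" 3.12 && echo "Python OK"
#   version_ge "$(docker version --format '{{.Server.Version}}')" 27.1 && echo "Docker OK"
```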

For resource requirements such as CPU, Memory, Network and Storage, please refer to RDA Fabric VMs resource requirements.

Note

RHEL is supported only for RDAF platform versions earlier than 8.0. Fresh installations of RDAF platform version 8.0 on RHEL are not supported.

- Once RHEL 8.3 or above is deployed, register the OS and apply the OS licenses using the below commands.

- Create a new user called rdauser and configure the password.

sudo adduser rdauser

sudo passwd rdauser

sudo chown -R rdauser:rdauser /home/rdauser

sudo groupadd docker

sudo usermod -aG docker rdauser

- Add rdauser to the /etc/sudoers file by adding the below line at the end of the file.

- Modify the SSH service configuration: edit the /etc/ssh/sshd_config file and update the settings as shown below.

- Restart the SSH service.

- Log out and log back in as the newly created user rdauser.

- Format the disks with the xfs filesystem and mount them as per the disk requirements outlined in the RDA Fabric VMs resource requirements section.

- Make sure the disk mounts are added to /etc/fstab so that they persist across VM reboots.

- In /etc/fstab, use the filesystem's UUID instead of SCSI disk names such as /dev/sdb, because disk names can change across reboots. The below command provides the UUID of the filesystem created on a disk or disk partition.

Sample disk mount point entry in the /etc/fstab file.
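The UUID-based entry can be generated rather than typed. A minimal sketch, assuming an xfs filesystem and the mount options `defaults 0 0` (both assumptions, since the original sample entry is not shown); `fstab_entry` is a hypothetical helper. `blkid -s UUID -o value` prints only the UUID of the given device.

```shell
#!/usr/bin/env bash
# fstab_entry DEVICE_OR_UUID MOUNT_POINT [FS_TYPE]
# Prints an /etc/fstab line keyed by filesystem UUID. If the first argument
# is a block device, its UUID is read with blkid; otherwise the argument is
# used as the UUID itself. FS_TYPE defaults to xfs.
fstab_entry() {
  local src="$1" mnt="$2" fstype="${3:-xfs}" uuid
  if [[ -b "$src" ]]; then
    uuid=$(blkid -s UUID -o value "$src") || return 1
  else
    uuid="$src"
  fi
  [[ -n "$uuid" ]] || { echo "no UUID for '$src'" >&2; return 1; }
  printf 'UUID=%s %s %s defaults 0 0\n' "$uuid" "$mnt" "$fstype"
}

# On the VM:
#   fstab_entry /dev/sdb /var/lib/docker | sudo tee -a /etc/fstab
#   sudo mount -a   # confirm the new entry mounts cleanly before rebooting
```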

Installing OS utilities and Python

- Run the below commands to install the required software packages.

- Download and install the below software packages.

- Download and install Python 3.7 or above. Skip this step if Python is already installed as part of the OS install.

Installing Docker and Docker-compose

- Run the below commands to install the docker runtime environment.

- Configure the docker service by updating /etc/docker/daemon.json as shown below.

- Download and execute the macaw-docker.py script to configure TLS for the docker service. (Note: Make sure python2.7 is installed.)

- Edit the /lib/systemd/system/docker.service file, update the below line and restart the docker service.

From:

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

To:

ExecStart=/usr/bin/dockerd

- Restart the docker service and verify its status.

- Update the /etc/sysctl.conf file with the below performance tuning settings.

#Performance Tuning.

net.ipv4.tcp_rmem = 4096 87380 16777216

net.ipv4.tcp_wmem = 4096 65536 16777216

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.core.netdev_max_backlog = 2500

net.core.somaxconn = 65000

net.ipv4.tcp_ecn = 0

net.ipv4.tcp_window_scaling = 1

net.ipv4.ip_local_port_range = 10000 65535

vm.max_map_count = 1048575

net.core.wmem_default=262144

net.core.wmem_max=4194304

net.core.rmem_default=262144

net.core.rmem_max=4194304

#file max

fs.file-max=518144

#swappiness

vm.swappiness = 1

#Set runtime for kernel.randomize_va_space

kernel.randomize_va_space = 2

net.ipv4.ip_forward = 1

net.ipv4.ip_nonlocal_bind=1

- Install the Java software package.

- Add the Java software binary to the PATH variable.

- Reboot the host.
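The settings above assign net.core.rmem_max and net.core.wmem_max twice (16777216, then 4194304). `sysctl -p` applies the file line by line, so the later value is the one that takes effect. The hypothetical helper below flags repeated keys so that the intended value can be confirmed before the file is loaded.

```shell
#!/usr/bin/env bash
# sysctl_dupes FILE
# Prints each sysctl key that is assigned more than once in FILE (once per key,
# in the order the repeat is found). Comment lines (# or ;) and blank lines
# are ignored; spaces around "=" are optional.
sysctl_dupes() {
  awk -F'=' '
    /^[ \t]*#/ || /^[ \t]*;/ || /^[ \t]*$/ { next }
    { key = $1; gsub(/[ \t]/, "", key); if (seen[key]++ == 1) print key }
  ' "$1"
}

# On the VM:
#   sysctl_dupes /etc/sysctl.conf
#   sudo sysctl -p
#   sysctl net.core.rmem_max net.core.wmem_max   # shows the effective values
```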

- Once Ubuntu 24.04.x or above is deployed, apply the below configuration.

- Create a new user called rdauser and configure the password.

- Add rdauser to the /etc/sudoers file by adding the below line at the end of the file.

- Modify the SSH service configuration: edit the /etc/ssh/sshd_config file and update it as shown below.

- Restart the SSH service.

- Log out and log back in as the newly created user rdauser.

- Format the disks with the xfs filesystem and mount them as per the disk requirements outlined in the RDA Fabric VMs resource requirements section.

- Make sure the disk mounts are added to /etc/fstab so that they persist across VM reboots.

- In /etc/fstab, use the filesystem's UUID instead of SCSI disk names such as /dev/sdb, because disk names can change across reboots. The below command provides the UUID of the filesystem created on a disk or disk partition.

Sample disk mount point entry in the /etc/fstab file.
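To confirm that no data disk is still mounted by its kernel device name, the existing /etc/fstab entries can be scanned. A minimal sketch; `fstab_device_names` is a hypothetical helper, and the device-name prefixes it checks (sd, vd, xvd, nvme) are an assumption covering common virtual disk drivers. LVM paths such as /dev/mapper/ubuntu--vg-ubuntu--lv are intentionally not flagged.

```shell
#!/usr/bin/env bash
# fstab_device_names [FSTAB]
# Lists active fstab entries (source and mount point) whose source is a
# kernel disk name such as /dev/sdc instead of UUID=...
fstab_device_names() {
  awk '$1 !~ /^#/ && $1 ~ /^\/dev\/(sd|vd|xvd|nvme)/ { print $1, $2 }' "${1:-/etc/fstab}"
}

# On the VM (no output means every data disk is mounted by UUID or LVM path):
#   fstab_device_names
```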

Installing OS utilities and Python

- Run the below commands to install the required software packages.

- Download and install Python 3.12.x or above. Skip this step if Python 3.12.x or above is already installed as part of the OS install.

Installing Docker and Docker-compose

- Run the below commands to install the docker runtime environment.

- Edit the /lib/systemd/system/docker.service file, update the below line and restart the docker service.

sudo vi /lib/systemd/system/docker.service

From:

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

To:

ExecStart=/usr/bin/dockerd

- Configure the docker service by updating /etc/docker/daemon.json as shown below.

- Start and verify the docker service

- Update the /etc/sysctl.conf file with the below performance tuning settings.

#Performance Tuning.

net.ipv4.tcp_rmem = 4096 87380 16777216

net.ipv4.tcp_wmem = 4096 65536 16777216

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.core.netdev_max_backlog = 2500

net.core.somaxconn = 65000

net.ipv4.tcp_ecn = 0

net.ipv4.tcp_window_scaling = 1

net.ipv4.ip_local_port_range = 10000 65535

vm.max_map_count = 1048575

net.core.wmem_default=262144

net.core.wmem_max=4194304

net.core.rmem_default=262144

net.core.rmem_max=4194304

#file max

fs.file-max=518144

#swappiness

vm.swappiness = 1

#Set runtime for kernel.randomize_va_space

kernel.randomize_va_space = 2

net.ipv4.ip_forward = 1

net.ipv4.ip_nonlocal_bind=1

- Download and execute the macaw-docker.py script to configure TLS for the docker service.

- Install the Java software package.

- Add the Java software binary to the PATH variable.

- Reboot the host.
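Changes made directly to /lib/systemd/system/docker.service can be overwritten when the docker package is upgraded. A common alternative, swapped in here and not part of the original procedure, is a systemd drop-in file that overrides ExecStart. The empty `ExecStart=` line clears the packaged value, because systemd allows only one ExecStart for a service that is not `Type=oneshot`. The helper `write_docker_override` is hypothetical.

```shell
#!/usr/bin/env bash
# write_docker_override [DIR]
# Writes a drop-in that replaces dockerd's ExecStart with the plain
# "/usr/bin/dockerd" line used in this guide. DIR defaults to the standard
# drop-in directory for docker.service.
write_docker_override() {
  local dir="${1:-/etc/systemd/system/docker.service.d}"
  mkdir -p "$dir" || return 1
  cat > "$dir/override.conf" <<'EOF'
[Service]
# An empty ExecStart= clears the value inherited from the packaged unit.
ExecStart=
ExecStart=/usr/bin/dockerd
EOF
}

# On the VM, as root:
#   write_docker_override
#   systemctl daemon-reload && systemctl restart docker && systemctl status docker
```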

- Once Ubuntu 20.04.x or above is deployed, apply the below configuration.

- Create a new user called rdauser and configure the password.

- Add rdauser to the /etc/sudoers file by adding the below line at the end of the file.

- Modify the SSH service configuration: edit the /etc/ssh/sshd_config file and update it as shown below.

- Restart the SSH service.

- Log out and log back in as the newly created user rdauser.

- Format the disks with the xfs filesystem and mount them as per the disk requirements outlined in the RDA Fabric VMs resource requirements section.

- Make sure the disk mounts are added to /etc/fstab so that they persist across VM reboots.

- In /etc/fstab, use the filesystem's UUID instead of SCSI disk names such as /dev/sdb, because disk names can change across reboots. The below command provides the UUID of the filesystem created on a disk or disk partition.

Sample disk mount point entry in the /etc/fstab file.

Installing OS utilities and Python

- Run the below commands to install the required software packages.

- Download and install Python 3.7.4 or above. Skip this step if Python 3.7.4 or above is already installed as part of the OS install.

Installing Docker and Docker-compose

- Run the below commands to install the docker runtime environment.

- Edit the /lib/systemd/system/docker.service file, update the below line and restart the docker service.

sudo vi /lib/systemd/system/docker.service

From:

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

To:

ExecStart=/usr/bin/dockerd

- Configure the docker service by updating /etc/docker/daemon.json as shown below.

- Start and verify the docker service

- Update the /etc/sysctl.conf file with the below performance tuning settings.

#Performance Tuning.

net.ipv4.tcp_rmem = 4096 87380 16777216

net.ipv4.tcp_wmem = 4096 65536 16777216

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.core.netdev_max_backlog = 2500

net.core.somaxconn = 65000

net.ipv4.tcp_ecn = 0

net.ipv4.tcp_window_scaling = 1

net.ipv4.ip_local_port_range = 10000 65535

vm.max_map_count = 1048575

net.core.wmem_default=262144

net.core.wmem_max=4194304

net.core.rmem_default=262144

net.core.rmem_max=4194304

#file max

fs.file-max=518144

#swappiness

vm.swappiness = 1

#Set runtime for kernel.randomize_va_space

kernel.randomize_va_space = 2

net.ipv4.ip_forward = 1

net.ipv4.ip_nonlocal_bind=1

- Download and execute the macaw-docker.py script to configure TLS for the docker service.

- Install the Java software package.

- Add the Java software binary to the PATH variable.

- Reboot the host.

7. RDAF Platform deployment on Cloud

- To set up and configure the RDAF Platform in an AWS EC2 environment for Non-K8s deployments, please refer to RDAF Platform on AWS.

8. RDAF Platform Installation

This section provides the end-to-end deployment steps for the RDA Fabric Platform in both Kubernetes and Non-Kubernetes environments.

Note

Please make sure to complete the below prerequisites before proceeding to the next steps to deploy the RDAF Platform.

- RDAF Platform Resource Requirements

- Deploy VMs for RDAF Platform using CloudFabrix provided OVFs

- Deploy VMs for RDAF Platform using Custom RHEL or Ubuntu OS Images

- Network layout and Port requirements

- Configure HTTP Proxy (if applicable)

8.1 Install RDAF deployment CLI for K8s & Non-K8s Environments

Software Versions:

Below are the required container image tags for RDAF platform deployment:

- RDAF Deployment CLI: 1.4.0

- RDAF Registry service: 1.0.3

- RDAF Infra services: 1.0.3, 1.0.3.3 (haproxy)

- RDAF Platform: 8.0.0

- RDAF Worker: 8.0.0

- RDAF Client (rdac) CLI: 8.0.0

- RDAF OIA (AIOps) Application: 8.0.0

- RDAF Studio: 8.0.0


8.2 RDAF Deployment CLI Installation

Please follow the below steps to install the RDAF deployment CLI.

Note

Please install the RDAF Deployment CLI on both the on-premise docker registry VM and the RDAF Platform's management VM if they are provisioned separately. In most cases, the on-premise docker registry service and the RDAF platform's setup and configuration are maintained on the same VM.

Log in as rdauser to the VM on which the RDAF deployment CLI is to be installed, for the on-premise docker registry service and for managing Kubernetes or Non-Kubernetes deployments.

- Download the RDAF Deployment CLI's newer version 1.4.0 bundle.

wget https://macaw-amer.s3.us-east-1.amazonaws.com/releases/rdaf-platform/1.4.0/rdafcli-1.4.0.tar.gz

- Install the rdaf & rdafk8s CLI to version 1.4.0

- Verify that the installed rdaf & rdafk8s CLI version is upgraded to 1.4.0

- Download the RDAF Deployment CLI's newer version 1.4.0 bundle and copy it to the RDAF management VM on which the rdaf & rdafk8s deployment CLI was installed.

- Download the RDAF Deployment CLI's newer version 1.4.0 bundle

wget https://macaw-amer.s3.us-east-1.amazonaws.com/releases/rdaf-platform/1.4.0/rdafcli-1.4.0.tar.gz

- Install the rdaf CLI to version 1.4.0

- Verify that the installed rdaf CLI version is upgraded to 1.4.0

- Download the RDAF Deployment CLI's newer version 1.4.0 bundle and copy it to the RDAF management VM on which the rdaf & rdafk8s deployment CLI was installed.

8.3 RDAF On-Premise Docker Registry Setup

CloudFabrix supports hosting an on-premise docker registry, which downloads and synchronizes the docker images of RDA Fabric's platform, infrastructure and application services from CloudFabrix's public docker registry (securely hosted on AWS) as well as from other public docker registries. For more information on the on-premise docker registry, please refer to Docker registry access for RDAF platform services.

rdaf registry setup

Run the below command to set up and configure the on-premise docker registry service. In the below command example, 10.99.120.140 is the machine on which the on-premise registry service is going to be installed.

docker1.cloudfabrix.io is CloudFabrix's public docker registry hosted on AWS, from which the RDA Fabric docker images are going to be downloaded.

Run the below command to install the on-premise docker registry service.

Info

- For latest tag version, please contact support@cloudfabrix.com

- The on-premise docker registry service runs on port TCP/5000. This port may need to be opened on the firewall if the on-premise docker registry service and the RDA Fabric service VMs are deployed in different network environments.

To check the status of the on-premise docker registry service, run the below command.

rdaf registry fetch

Once the on-premise docker registry service is installed, run the below command to download one or more tags to pre-stage the docker images for a fresh install of RDA Fabric services.

The Minio object storage service image needs to be downloaded explicitly using the below command.

Info

Note: It may take from a few minutes to a few hours, depending on the outbound internet bandwidth and the number of docker images to be downloaded. The default location for the downloaded docker images is /opt/rdaf-registry/data/docker/registry. This path can be changed during the rdaf registry setup command using the --install-root option if needed.

rdaf registry list-tags

Run the below command to list the downloaded images and their corresponding tags / versions.

Please make sure the 1.0.3 image tag is downloaded for the below RDAF Infra services.

- rda-platform-kafka

- rda-platform-mariadb

- rda-platform-opensearch

- rda-platform-nats

- rda-platform-busybox

- rda-platform-nats-box

- rda-platform-nats-boot-config

- rda-platform-nats-server-config-reloader

- rda-platform-prometheus-nats-exporter

- rda-platform-arangodb-starter

- rda-platform-kube-arangodb

- rda-platform-arangodb

- rda-platform-kubectl

- rda-platform-logstash

- rda-platform-fluent-bit

Please make sure the 1.0.3.3 image tag is downloaded for the below RDAF Infra service.

- rda-platform-haproxy

Please make sure the 1.0.3.1 image tag is downloaded for the below RDAF telegraf service.

- rda-platform-telegraf

Please make sure the RELEASE.2023-09-30T07-02-29Z image tag is downloaded for the below RDAF Infra service.

- minio

Please make sure the 8.0.0 image tag is downloaded for the below RDAF Platform services.

- rda-client-api-server

- rda-registry

- rda-scheduler

- rda-collector

- rda-identity

- rda-fsm

- rda-asm

- rda-stack-mgr

- rda-access-manager

- rda-resource-manager

- rda-user-preferences

- onprem-portal

- onprem-portal-nginx

- rda-worker-all

- onprem-portal-dbinit

- cfxdx-nb-nginx-all

- rda-event-gateway

- rda-chat-helper

- rdac

- rdac-full

- cfxcollector

- bulk_stats

Please make sure the 8.0.0 image tag is downloaded for the below RDAF OIA Application services.

- rda-app-controller

- rda-alert-processor

- rda-file-browser

- rda-smtp-server

- rda-ingestion-tracker

- rda-reports-registry

- rda-ml-config

- rda-event-consumer

- rda-webhook-server

- rda-irm-service

- rda-alert-ingester

- rda-collaboration

- rda-notification-service

- rda-configuration-service

- rda-alert-processor-companion
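With this many image:tag pairs, a scripted comparison is less error-prone than reading the list by eye. The sketch below assumes the available pairs have already been collected as `image:tag` lines, for example by reformatting the `rdaf registry list-tags` output (its exact format is not shown here) or by querying the registry's `/v2/<repository>/tags/list` endpoint. `missing_images` is a hypothetical helper that prints every expected pair that is absent.

```shell
#!/usr/bin/env bash
# missing_images EXPECTED_FILE
# EXPECTED_FILE holds one "image:tag" per line (e.g. rda-platform-haproxy:1.0.3.3).
# Available "image:tag" pairs are read from stdin, one per line.
# Prints each expected pair with no exact match and returns 1 if any are missing.
missing_images() {
  local expected="$1" available missing
  available=$(cat)
  # -F fixed strings, -x whole-line match, -v keep lines that did NOT match
  missing=$(grep -vxF -f <(printf '%s\n' "$available") "$expected")
  if [[ -n "$missing" ]]; then
    printf '%s\n' "$missing"
    return 1
  fi
}

# Usage:
#   missing_images expected.txt < available.txt && echo "all images present"
```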

8.4 RDAF Platform Setup

Important

When the on-premise docker repository service is used, please make sure to add the insecure-registries parameter to the /etc/docker/daemon.json file and restart the docker daemon, as shown below, on all of the RDA Fabric VMs before the deployment. This bypasses SSL certificate validation, because the on-premise docker repository is installed with self-signed certificates.

Edit /etc/docker/daemon.json to configure the on-premise docker repository as shown below.

...

...
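The daemon.json change can be made without disturbing existing keys. A minimal sketch, assuming the registry address 10.99.120.140:5000 from the earlier example and using python3 (a prerequisite in this guide) to edit the JSON. `insecure-registries` is the standard dockerd configuration key; `add_insecure_registry` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# add_insecure_registry DAEMON_JSON HOST:PORT
# Adds HOST:PORT to the "insecure-registries" list in DAEMON_JSON, keeping
# all other keys. A missing file is treated as {}. Malformed JSON makes
# python3 exit with an error and the file is left unchanged.
add_insecure_registry() {
  python3 - "$1" "$2" <<'EOF'
import json, os, sys

path, registry = sys.argv[1], sys.argv[2]
cfg = {}
if os.path.exists(path):
    with open(path) as f:
        cfg = json.load(f)
regs = cfg.setdefault("insecure-registries", [])
if registry not in regs:
    regs.append(registry)
with open(path, "w") as f:
    json.dump(cfg, f, indent=2)
    f.write("\n")
EOF
}

# As root on each RDA Fabric VM:
#   cp /etc/docker/daemon.json /etc/docker/daemon.json.bak
#   add_insecure_registry /etc/docker/daemon.json 10.99.120.140:5000
#   systemctl restart docker && docker info | grep -A2 'Insecure Registries'
```

Running it twice is harmless: an address that is already listed is not added again.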

rdauser@k8mater108112:~$ docker info

Client: Docker Engine - Community

Version: 27.1.2

Context: default

Debug Mode: false

Plugins:

buildx: Docker Buildx (Docker Inc.)

Version: v0.16.2

Path: /usr/libexec/docker/cli-plugins/docker-buildx

compose: Docker Compose (Docker Inc.)

Version: v2.29.1

Path: /usr/libexec/docker/cli-plugins/docker-compose

Server:

Containers: 23

Running: 12

Paused: 0

Stopped: 11

Images: 9

Server Version: 27.1.2

Storage Driver: overlay2

Backing Filesystem: xfs

Supports d_type: true

Using metacopy: false

Native Overlay Diff: true

userxattr: false

Logging Driver: json-file

Cgroup Driver: cgroupfs

Cgroup Version: 2

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local splunk syslog

Swarm: inactive

Runtimes: io.containerd.runc.v2 runc

Default Runtime: runc

Init Binary: docker-init

containerd version: 8fc6bcff51318944179630522a095cc9dbf9f353

runc version: v1.1.13-0-g58aa920

init version: de40ad0

Security Options:

apparmor

seccomp

Profile: builtin

Kernel Version: 6.8.0-48-generic

Operating System: Ubuntu 24.04 LTS

OSType: linux

Architecture: x86_64

CPUs: 4

Total Memory: 15.57GiB

Name: k8mater108112

ID: d9a59caa-e3a8-4e50-87a9-c358ab115bae

Docker Root Dir: /var/lib/docker

Debug Mode: false

Experimental: true

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: true

rdauser@k8mater108112:~$

rdafk8s setregistry

When the on-premise docker registry is deployed, set the default docker registry configuration to the on-premise docker registry host to pull and install the RDA Fabric platform services.

- Before proceeding, please copy the /opt/rdaf-registry/cert/ca/ca.crt file from the on-premise registry VM.

scp rdauser@<on-premise-registry-ip>:/opt/rdaf-registry/cert/ca/ca.crt /opt/rdaf-registry/registry-ca-cert.crt

Tip

The on-premise docker registry's CA certificate file ca.crt is located under /opt/rdaf-registry/cert/ca. This ca.crt file needs to be copied to the machine on which the RDAF CLI is used to set up, configure and install the RDA Fabric platform and all of the required services using the on-premise docker registry. This step is not applicable when the cloud-hosted docker registry docker1.cloudfabrix.io is used. It is also not needed when the on-premise docker registry service VM is itself used to set up, configure and deploy the RDAF platform.

- Run the below command to set the docker registry to the on-premise one.

rdafk8s setregistry --host <on-premise-docker-registry-ip-or-dns> --port 5000 --cert-path /opt/rdaf-registry/registry-ca-cert.crt

Tip

Please verify that the on-premise registry is accessible on port 5000 using either of the below commands.

- telnet <on-premise-docker-registry-ip-or-dns> 5000

- curl -vv telnet://<on-premise-docker-registry-ip-or-dns>:5000
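Where neither telnet nor curl is installed, bash's built-in /dev/tcp redirection offers a similar reachability check. A minimal sketch, assuming bash and the coreutils `timeout` command are available; `port_open` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# port_open HOST PORT [TIMEOUT_SECONDS]
# Succeeds if a TCP connection to HOST:PORT can be opened within the timeout
# (default 3 seconds). In the inner bash, $0 is HOST and $1 is PORT.
port_open() {
  local host="$1" port="$2" t="${3:-3}"
  timeout "$t" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# Example:
#   port_open <on-premise-docker-registry-ip-or-dns> 5000 && echo reachable || echo "not reachable"
```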

rdafk8s setup

Run the below rdafk8s setup command to create the RDAF platform's deployment configuration. It is a prerequisite before the RDAF infrastructure, platform and application services can be installed on the Kubernetes Cluster.

It will prompt for all the necessary configuration details.

- Accept the EULA

- Enter the rdauser SSH password for all of the Kubernetes worker nodes on which RDAF services are going to be installed.

What is the SSH password for the SSH user used to communicate between hosts

SSH password:

Re-enter SSH password:

Tip

Please make sure rdauser's SSH password is the same on all of the Kubernetes cluster worker nodes when running the rdafk8s setup command.

- Enter additional IP address(es) or DNS names (e.g., *.acme.com) that can be used as SANs (Subject alt names) while generating self-signed certificates. It is important to include any public-facing IP address(es) (e.g., in AWS, Azure and GCP environments) that differ from the RDAF platform instance VMs' IP addresses specified as part of the rdafk8s setup command. Also, include the IP addresses of the secondary NIC (if configured) of all RDAF platform VMs.

Tip

SANs (Subject alt names), also known as multi-domain certificates, allow a single unified SSL certificate to include more than one Common Name (CN). A Common Name can be an IP address, a DNS name or a wildcard DNS name (ex: *.acme.com).

Provide any Subject alt name(s) to be used while generating SAN certs

Subject alt name(s) for certs[]: 100.30.10.10

- Enter the Kubernetes worker node IPs on which the RDAF Platform services need to be installed. For HA configuration, please enter comma-separated values. A minimum of 2 worker nodes is required for the HA configuration. For a non-HA deployment, only one Kubernetes worker node's IP address or DNS name is required.

What are the host(s) on which you want the RDAF platform services to be installed?

Platform service host(s)[rda-platform-vm01]: 192.168.125.141,192.168.125.142

- Answer whether the RDAF application services are going to be deployed in HA mode or standalone mode.

- Enter the Kubernetes worker node IPs on which the RDAF Application services (OIA) need to be installed. For HA configuration, please enter comma-separated values. A minimum of 2 hosts is required for the HA configuration. For a non-HA deployment, only one Kubernetes worker node's IP address or DNS name is required.

What are the host(s) on which you want the application services to be installed?

Application service host(s)[rda-platform-vm01]: 192.168.125.143,192.168.125.144

- Enter the name of the Organization. In the below example, ACME_IT_Services is used as the Organization name, for reference only.

What is the organization you want to use for the admin user created?

Admin organization[CloudFabrix]: ACME_IT_Services

What is the ca cert to use to communicate to on-prem docker registry

Docker Registry CA cert path[]:

- Enter the Kubernetes worker node IPs on which the RDAF Worker services need to be installed. For HA configuration, please enter comma-separated values. A minimum of 2 hosts is required for the HA configuration. For a non-HA deployment, only one Kubernetes worker node's IP address or DNS name is required.

What are the host(s) on which you want the Worker to be installed?

Worker host(s)[rda-platform-vm01]: 192.168.125.145

- Enter the Kubernetes worker node IPs on which the RDAF NATs infrastructure service needs to be installed. For HA configuration, please enter comma-separated values. A minimum of 2 Kubernetes worker nodes is required for the NATs HA configuration. For a non-HA deployment, only one Kubernetes worker node's IP or DNS name is required.

What is the "host/path-on-host" on which you want the Nats to be deployed?

Nats host/path[192.168.125.141]: 192.168.125.145,192.168.125.146

- Enter the Kubernetes worker node IPs on which the RDAF Minio infrastructure service needs to be installed. For HA configuration, please enter comma-separated values. A minimum of 4 Kubernetes worker nodes is required for the Minio HA configuration. For a non-HA deployment, only one Kubernetes worker node's IP or DNS name is required.

What is the "host/path-on-host" where you want Minio to be provisioned?

Minio server host/path[192.168.125.141]: 192.168.125.145,192.168.125.146,192.168.125.147,192.168.125.148

- Change the default Minio user credentials if needed, or press Enter to accept the defaults.

What is the user name you want to give for Minio root user that will be created and used by the RDAF platform?

Minio user[rdafadmin]:

What is the password you want to use for the newly created Minio root user?

Minio password[Q8aJ63PT]:

- Enter the Kubernetes worker node IPs on which the RDAF MariaDB infrastructure service needs to be installed. For HA configuration, please enter comma-separated values. A minimum of 3 Kubernetes worker nodes is required for the MariaDB database HA configuration. For a non-HA deployment, only one Kubernetes worker node's IP or DNS name is required.

What is the "host/path-on-host" on which you want the MariaDB server to be provisioned?

MariaDB server host/path[192.168.125.141]: 192.168.125.145,192.168.125.146,192.168.125.147

- Change the default MariaDB user credentials if needed, or press Enter to accept the defaults.

What is the user name you want to give for MariaDB admin user that will be created and used by the RDAF platform?

MariaDB user[rdafadmin]:

What is the password you want to use for the newly created MariaDB root user?

MariaDB password[jffqjAaZ]:

- Enter the Kubernetes worker node IPs on which the RDAF Opensearch infrastructure service needs to be installed. For HA configuration, please enter comma-separated values. A minimum of 3 Kubernetes worker nodes is required for the Opensearch HA configuration. For a non-HA deployment, only one Kubernetes worker node's IP or DNS name is required.

What is the "host/path-on-host" on which you want the opensearch server to be provisioned?

opensearch server host/path[192.168.125.141]: 192.168.125.145,192.168.125.146,192.168.125.147

- Change the default Opensearch user credentials if needed, or press Enter to accept the defaults.

What is the user name you want to give for Opensearch admin user that will be created and used by the RDAF platform?

Opensearch user[rdafadmin]:

What is the password you want to use for the newly created Opensearch admin user?

Opensearch password[sLmr4ICX]:

- Enter the Kubernetes worker node IPs on which the RDAF Kafka infrastructure service needs to be installed. For HA configuration, please enter comma-separated values. A minimum of 3 Kubernetes worker nodes is required for the Kafka HA configuration. For a non-HA deployment, only one Kubernetes worker node's IP or DNS name is required.

What is the "host/path-on-host" on which you want the Kafka server to be provisioned?

Kafka server host/path[192.168.125.141]: 192.168.125.145,192.168.125.146,192.168.125.147

- Enter the RDAF infrastructure service HAProxy (load-balancer) host(s) IP address or DNS name. For HA configuration, please enter comma-separated values. A minimum of 2 hosts is required for the HAProxy HA configuration. For a non-HA deployment, only one RDAF HAProxy service host's IP address or DNS name is required.

What is the host on which you want HAProxy to be provisioned?

HAProxy host[192.168.125.141]: 192.168.125.145,192.168.125.146

- Select the network interface name which is used for UI portal access, e.g. eth0 or ens160.

What is the network interface on which you want the rdaf to be accessible externally?

Advertised external interface[eth0]: ens160

- Enter the HAProxy service's virtual IP address when it is deployed in HA configuration. The virtual IP address should be an unused IP address. This step is not applicable when the HAProxy service is deployed in non-HA configuration.

What is the host on which you want the platform to be externally accessible?

Advertised external host[192.168.125.143]: 192.168.125.149

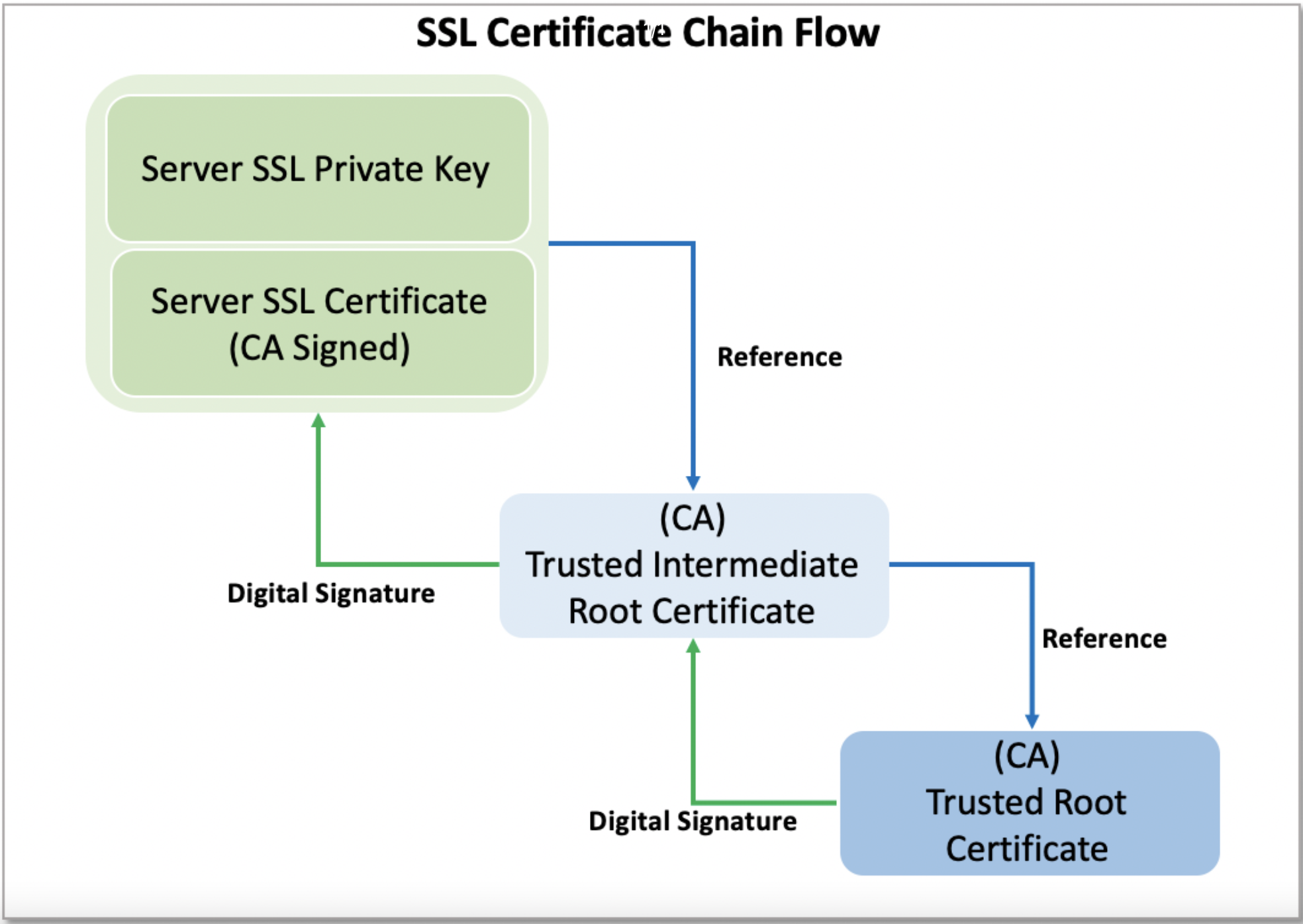

After the required inputs are entered as above, rdaf setup generates self-signed SSL certificates, creates the required directory structure, configures SSH key-based authentication on all of the RDAF hosts and generates the rdaf.cfg configuration file under the /opt/rdaf directory.

It creates the below directory structure on all of the RDAF hosts.

- /opt/rdaf/cert: It contains the generated self-signed SSL certificates for all of the RDAF hosts.

- /opt/rdaf/config: It contains the required configuration file for each deployed RDAF service where applicable.

- /opt/rdaf/data: It contains the persistent data for some of the RDAF services.

- /opt/rdaf/deployment-scripts: It contains the docker-compose .yml files of the services that are configured to be provisioned on the RDAF host.

- /opt/rdaf/logs: It contains the RDAF services' log files.
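After setup, the presence of these directories can be checked on each RDAF host. A minimal sketch; `check_rdaf_dirs` is a hypothetical helper, and it only checks that the directories listed above exist, not their contents.

```shell
#!/usr/bin/env bash
# check_rdaf_dirs [ROOT]
# Reports each expected RDAF directory missing under ROOT (default /opt/rdaf)
# and returns 1 if any is missing.
check_rdaf_dirs() {
  local root="${1:-/opt/rdaf}" d missing=0
  for d in cert config data deployment-scripts logs; do
    if [[ ! -d "$root/$d" ]]; then
      echo "missing: $root/$d"
      missing=1
    fi
  done
  return "$missing"
}

# On each RDAF host:
#   check_rdaf_dirs && echo "directory layout OK"
```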

Important

When the on-premise docker repository service is used, please make sure to add the insecure-registries parameter to the /etc/docker/daemon.json file and restart the docker daemon, as shown below, on all of the RDA Fabric VMs before the deployment. This bypasses SSL certificate validation, because the on-premise docker repository is installed with self-signed certificates.

Edit /etc/docker/daemon.json to configure the on-premise docker repository as shown below.

...

...

Kernel Version: 5.4.0-110-generic

Operating System: Ubuntu 24.04.x LTS

OSType: linux

Architecture: x86_64

CPUs: 2

Total Memory: 7.741GiB

Name: rdaf-onprem-docker-repo

ID: OLZF:ZKWN:TIQJ:ZMNV:2STT:JHR3:3RAT:TAL5:TF47:OGVQ:LHY7:RMHH

Docker Root Dir: /var/lib/docker

Debug Mode: false

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries:

10.99.120.140:5000

127.0.0.0/8

Live Restore Enabled: true

rdaf setregistry

When the on-premise docker registry is deployed, set the default docker registry configuration to the on-premise docker registry host to pull and install the RDA Fabric platform services.

- Before proceeding, please copy the /opt/rdaf-registry/cert/ca/ca.crt file from the on-premise registry VM.

scp rdauser@<on-premise-registry-ip>:/opt/rdaf-registry/cert/ca/ca.crt /opt/rdaf-registry/registry-ca-cert.crt

Tip

The on-premise docker registry's CA certificate file ca.crt is located under /opt/rdaf-registry/cert/ca. This ca.crt file needs to be copied to the machine on which the RDAF CLI is used to set up, configure and install the RDA Fabric platform and all of the required services using the on-premise docker registry. This step is not applicable when the cloud-hosted docker registry docker1.cloudfabrix.io is used. It is also not needed when the on-premise docker registry service VM is itself used to set up, configure and deploy the RDAF platform.

- Run the below command to set the docker registry to the on-premise one.

rdaf setregistry --host <on-premise-docker-registry-ip-or-dns> --port 5000 --cert-path /opt/rdaf-registry/registry-ca-cert.crt

Tip

Please verify that the on-premise registry is accessible on port 5000 using either of the below commands.

- telnet <on-premise-docker-registry-ip-or-dns> 5000

- curl -vv telnet://<on-premise-docker-registry-ip-or-dns>:5000

- RDAF Setup

Please use the below command for a silent installation.

Note

Create a file named input.json and use the below sample content for it.

{
  "accept_eula": true,
  "alt_names": "",
  "app_service_ha": true,
  "platform_service_ha": true,
  "admin_organization": "cfx",
  "docker_registry_ca": "/opt/rdaf-registry/cert/ca/ca.crt",
  "nats_host": ["192.168.108.50","192.168.108.56"],
  "minio_server_host": ["192.168.108.50","192.168.108.56","192.168.108.58","192.168.108.51"],
  "minio_user": "rdafadmin",
  "minio_password": "rdaf1234",
  "mariadb_server_host": ["192.168.108.50","192.168.108.56","192.168.108.58"],
  "mariadb_user": "rdafadmin",
  "mariadb_password": "rdaf1234",
  "kafka_server_host": ["192.168.108.50","192.168.108.56","192.168.108.58"],
  "opensearch_server_host": ["192.168.108.50","192.168.108.56","192.168.108.58"],
  "opensearch_user": "rdafadmin",
  "opensearch_password": "rdaf1234",
  "graphdb_host": ["192.168.108.50","192.168.108.56","192.168.108.58"],
  "graphdb_password": "rdaf1234",
  "graphdb_user": "rdafadmin",
  "haproxy_host": ["192.168.108.50","192.168.108.56"],
  "advertised_ext_host": "192.168.108.59",
  "advertised_ext_interface": "ens160",
  "bind_internal_inf": false,
  "advertised_int_host": "",
  "advertised_int_interface": "",
  "platform_service_host": ["192.168.108.51","192.168.108.52"],
  "service_host": ["192.168.108.51","192.168.108.52"],
  "worker_host": ["192.168.108.53","192.168.108.54"],
  "rda_event_gateway_host": ["192.168.108.53","192.168.108.54"],
  "ssh_password": "Rda$4301!",
  "ssh_user": "rdauser",
  "no_prompt": true
}
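The minimum host counts stated in the interactive prompts of this guide (2 for platform, application, worker, Event Gateway, NATs and HAProxy hosts; 4 for Minio; 3 for MariaDB, Opensearch, Kafka and GraphDB) also apply to the host lists in input.json. A minimal pre-flight sketch, assuming python3 is available for JSON parsing since jq may not be installed: check_input_json is a hypothetical helper, not an rdaf command, and it treats a one-element list as non-HA and any longer list as HA.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check input.json host-list sizes against the HA
# minimums stated in this guide. python3 is used only to parse JSON.

host_count() {
  # Prints the length of the JSON array stored under key $2 in file $1.
  python3 -c 'import json, sys; print(len(json.load(open(sys.argv[1]))[sys.argv[2]]))' "$1" "$2"
}

check_input_json() {
  local file="$1" key min n rc=0
  # Reject files that are not valid JSON.
  python3 -m json.tool "$file" >/dev/null || { echo "invalid JSON: $file" >&2; return 1; }
  while read -r key min; do
    n=$(host_count "$file" "$key" 2>/dev/null) || { echo "missing key: $key" >&2; rc=1; continue; }
    # Valid: exactly 1 host (non-HA) or at least the HA minimum.
    if [ "$n" -eq 0 ] || { [ "$n" -gt 1 ] && [ "$n" -lt "$min" ]; }; then
      echo "$key: $n host(s); use 1 (non-HA) or at least $min (HA)" >&2
      rc=1
    fi
  done <<'EOF'
platform_service_host 2
service_host 2
worker_host 2
rda_event_gateway_host 2
nats_host 2
minio_server_host 4
mariadb_server_host 3
opensearch_server_host 3
kafka_server_host 3
graphdb_host 3
haproxy_host 2
EOF
  return "$rc"
}

# Example:
#   check_input_json input.json && echo "host counts look consistent"
```

The sample input.json above passes this check; changing minio_server_host to two entries, for instance, would be reported because two Minio hosts is neither a non-HA nor a valid HA layout according to the prompts.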

Run the rdaf setup command below to create the RDAF platform's deployment configuration. This is a prerequisite for deploying the RDAF infrastructure, platform and application services.

rdaf setup

It will prompt for all the necessary configuration details.

- Accept the EULA

- Enter the rdauser SSH password for all of the RDAF hosts.

What is the SSH password for the SSH user used to communicate between hosts

SSH password:

Re-enter SSH password:

Tip

Make sure the rdauser SSH password is the same on all of the RDAF hosts when running the rdaf setup command.

- Enter any additional IP address(es), such as VIPs (virtual IP addresses), or DNS names (e.g., *.acme.com) to be used as SANs (Subject Alternative Names) when generating the self-signed certificates. It is important to include any public-facing IP address(es) (e.g., in AWS, Azure and GCP environments) that differ from the RDAF platform VMs' IP addresses specified as part of the rdaf setup command. Also include the IP addresses of the secondary NIC (if configured) on all RDAF platform VMs.

Tip

A SAN (Subject Alternative Name) certificate, also known as a multi-domain certificate, is a single SSL certificate that is valid for more than one name: in addition to the Common Name (CN), it can list further IP addresses, DNS names or wildcard DNS names (ex: *.acme.com).

Provide any Subject alt name(s) to be used while generating SAN certs

Subject alt name(s) for certs[]:
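To see what the SAN entries end up looking like inside a certificate, the sketch below generates a throwaway self-signed certificate with example values (the wildcard *.acme.com and 192.168.125.149 standing in for a VIP) and prints its subjectAltName extension. It assumes OpenSSL 1.1.1 or later (for -addext and -ext) and only illustrates SAN certificates in general; it does not reproduce how rdaf setup generates its certificates.

```shell
#!/usr/bin/env bash
# Illustration only: create a throwaway self-signed cert with SAN entries
# and print the subjectAltName extension. Example values, not RDAF defaults.
san_demo() {
  local dir
  dir=$(mktemp -d)
  openssl req -x509 -newkey rsa:2048 -nodes -days 1 \
    -keyout "$dir/key.pem" -out "$dir/cert.pem" \
    -subj "/CN=rda-platform" \
    -addext "subjectAltName=DNS:*.acme.com,IP:192.168.125.149" 2>/dev/null
  openssl x509 -in "$dir/cert.pem" -noout -ext subjectAltName
  rm -rf "$dir"
}

san_demo
```

The same openssl x509 -noout -ext subjectAltName -in <file> command can be pointed at a certificate generated under /opt/rdaf/cert to confirm that the entered SANs were included.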

- Enter the IP address(es) or DNS name(s) of the RDAF platform host(s). For an HA configuration, enter comma-separated values; a minimum of 2 hosts is required. For a non-HA deployment, only one RDAF platform host's IP address or DNS name is required.

What are the host(s) on which you want the RDAF platform services to be installed?

Platform service host(s)[rda-platform-vm01]: 192.168.125.141,192.168.125.142

- Specify whether the RDAF application services will be deployed in HA mode or standalone.

- Enter the IP address(es) or DNS name(s) of the RDAF application service host(s). For an HA configuration, enter comma-separated values; a minimum of 2 hosts is required. For a non-HA deployment, only one RDAF application service host's IP address or DNS name is required.

What are the host(s) on which you want the application services to be installed?

Application service host(s)[rda-platform-vm01]: 192.168.125.143,192.168.125.144

- Enter the name of the Organization. In the example below, ACME_IT_Services is used as the Organization name; it is for reference only.

What is the organization you want to use for the admin user created?

Admin organization[CloudFabrix]: ACME_IT_Services

What is the ca cert to use to communicate to on-prem docker registry

Docker Registry CA cert path[]:

- Enter the IP address(es) or DNS name(s) of the RDAF worker service host(s). For an HA configuration, enter comma-separated values; a minimum of 2 hosts is required. For a non-HA deployment, only one RDAF worker service host's IP address or DNS name is required.

What are the host(s) on which you want the Worker to be installed?

Worker host(s)[rda-platform-vm01]: 192.168.125.145

- Enter the IP address(es) or DNS name(s) of the host(s) on which the RDAF Event Gateway is to be installed. For an HA configuration, enter comma-separated values; a minimum of 2 hosts is required. For a non-HA deployment, only one RDAF Event Gateway host's IP address or DNS name is required.

What are the host(s) on which you want the Event Gateway to be installed?

Event Gateway host(s)[rda-platform-vm01]: 192.168.125.67

- Enter the IP address(es) or DNS name(s) of the RDAF infrastructure service NATs host(s). For an HA configuration, enter comma-separated values; a minimum of 2 hosts is required for the NATs HA configuration. For a non-HA deployment, only one RDAF NATs service host's IP address or DNS name is required.

What is the "host/path-on-host" on which you want the Nats to be deployed?

Nats host/path[192.168.125.141]: 192.168.125.145,192.168.125.146

- Enter the IP address(es) or DNS name(s) of the RDAF infrastructure service Minio host(s). For an HA configuration, enter comma-separated values; a minimum of 4 hosts is required for the Minio HA configuration. For a non-HA deployment, only one RDAF Minio service host's IP address or DNS name is required.

What is the "host/path-on-host" where you want Minio to be provisioned?

Minio server host/path[192.168.125.141]: 192.168.125.145,192.168.125.146,192.168.125.147,192.168.125.148

- Change the default Minio user credentials if needed, or press Enter to accept the defaults.

What is the user name you want to give for Minio root user that will be created and used by the RDAF platform?

Minio user[rdafadmin]:

What is the password you want to use for the newly created Minio root user?

Minio password[Q8aJ63PT]:

- Enter the IP address(es) or DNS name(s) of the RDAF infrastructure service MariaDB database host(s). For an HA configuration, enter comma-separated values; a minimum of 3 hosts is required for the MariaDB database HA configuration. For a non-HA deployment, only one RDAF MariaDB service host's IP address or DNS name is required.

What is the "host/path-on-host" on which you want the MariaDB server to be provisioned?

MariaDB server host/path[192.168.125.141]: 192.168.125.145,192.168.125.146,192.168.125.147

- Change the default MariaDB user credentials if needed, or press Enter to accept the defaults.

What is the user name you want to give for MariaDB admin user that will be created and used by the RDAF platform?

MariaDB user[rdafadmin]:

What is the password you want to use for the newly created MariaDB root user?

MariaDB password[jffqjAaZ]:

- Enter the IP address(es) or DNS name(s) of the RDAF infrastructure service Opensearch host(s). For an HA configuration, enter comma-separated values; a minimum of 3 hosts is required for the Opensearch HA configuration. For a non-HA deployment, only one RDAF Opensearch service host's IP address or DNS name is required.

What is the "host/path-on-host" on which you want the opensearch server to be provisioned?

opensearch server host/path[192.168.125.141]: 192.168.125.145,192.168.125.146,192.168.125.147

- Change the default Opensearch user credentials if needed, or press Enter to accept the defaults.

What is the user name you want to give for Opensearch admin user that will be created and used by the RDAF platform?

Opensearch user[rdafadmin]:

What is the password you want to use for the newly created Opensearch admin user?

Opensearch password[sLmr4ICX]:

- Enter the IP address(es) or DNS name(s) of the RDAF infrastructure service Kafka host(s). For an HA configuration, enter comma-separated values; a minimum of 3 hosts is required for the Kafka HA configuration. For a non-HA deployment, only one RDAF Kafka service host's IP address or DNS name is required.

What is the "host/path-on-host" on which you want the Kafka server to be provisioned?

Kafka server host/path[192.168.125.141]: 192.168.125.145,192.168.125.146,192.168.125.147

- Enter the IP address(es) or DNS name(s) of the RDAF infrastructure service GraphDB host(s). For an HA configuration, enter comma-separated values; a minimum of 3 hosts is required for the GraphDB HA configuration. For a non-HA deployment, only one RDAF GraphDB service host's IP address or DNS name is required.

What is the "host/path-on-host" on which you want the GraphDB to be deployed?

GraphDB host/path[192.168.125.141]: 192.168.125.145,192.168.125.146,192.168.125.147

- Enter the IP address(es) or DNS name(s) of the RDAF infrastructure service HAProxy (load-balancer) host(s). For an HA configuration, enter comma-separated values; a minimum of 2 hosts is required for the HAProxy HA configuration. For a non-HA deployment, only one RDAF HAProxy service host's IP address or DNS name is required.

What is the host on which you want HAProxy to be provisioned?

HAProxy host[192.168.125.141]: 192.168.125.145,192.168.125.146

- Select the name of the network interface used for UI portal access, e.g., eth0 or ens160.

What is the network interface on which you want the rdaf to be accessible externally?

Advertised external interface[eth0]: ens160

- Enter the HAProxy service's virtual IP address when HAProxy is deployed in an HA configuration. The virtual IP address should be an unused IP address. This step is not applicable when the HAProxy service is deployed in a non-HA configuration.

What is the host on which you want the platform to be externally accessible?

Advertised external host[192.168.125.143]: 192.168.125.149
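The two network inputs above (the external interface and the HAProxy virtual IP) can be sanity-checked from a shell before answering. A minimal sketch, assuming a Linux host with sysfs and the iputils ping command; list_ifaces, vip_is_node_ip and vip_answers_ping are hypothetical helper names, not RDAF commands.

```shell
#!/usr/bin/env bash
# 1) List interface names via sysfs, to pick the value for the
#    "Advertised external interface" prompt (e.g. eth0 or ens160).
list_ifaces() {
  local dev
  for dev in /sys/class/net/*; do
    [ -e "$dev" ] || continue
    basename "$dev"
  done
}

# 2) The VIP must be unused, so it must not be one of the node IPs entered
#    earlier. Returns 0 (a conflict) if the VIP matches any given node IP.
vip_is_node_ip() {
  local vip="$1" ip
  shift
  for ip in "$@"; do
    [ "$ip" = "$vip" ] && return 0
  done
  return 1
}

# 3) Rough liveness probe: a ping reply means the address is already taken.
#    No reply is not proof that it is free (ICMP may be filtered).
vip_answers_ping() {
  ping -c 1 -W 1 "$1" >/dev/null 2>&1
}

# Example with the values from the interactive example above:
#   list_ifaces
#   vip_is_node_ip 192.168.125.149 192.168.125.141 192.168.125.142 && echo "VIP clashes with a node IP"
#   vip_answers_ping 192.168.125.149 && echo "VIP appears to be in use"
```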

- Enter the IP address of the internally accessible advertised host.

Note

The internal advertised host IP is only needed when the RDA Fabric VMs are configured with dual NICs: one for the management network used for UI access, and a second for internal app-to-app communication over a non-routable IP address scheme that is isolated from the management network.

Dual network configuration is primarily used to support a DR solution, where the RDA Fabric VMs are replicated from one site to another using VM-level replication or underlying storage array replication (volume to volume or LUN to LUN on which the RDA Fabric VMs are hosted). When the RDA Fabric VMs are recovered on the DR site, the management network IPs need to be changed to match the DR site network's subnet, while the secondary NIC's IP address scheme can be kept the same as on the primary site to avoid reconfiguring RDA Fabric's applications.

After the required inputs are entered, rdaf setup generates self-signed SSL certificates, creates the required directory structure, configures SSH key-based authentication on all of the RDAF hosts and generates the rdaf.cfg configuration file under the /opt/rdaf directory.
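Once rdaf setup has finished, key-based SSH from the CLI host to every RDAF host can be spot-checked. A minimal sketch, assuming the OpenSSH client and the rdauser account used above; check_key_auth is a hypothetical helper. With BatchMode=yes, ssh fails instead of falling back to a password prompt, so a host that still needs a password is reported as FAIL.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify passwordless (key-based) SSH to each host.
# Usage: check_key_auth <user> <host>...
check_key_auth() {
  local user="$1" host rc=0
  shift
  for host in "$@"; do
    # BatchMode=yes disables password/passphrase prompts entirely.
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "${user}@${host}" true </dev/null >/dev/null 2>&1; then
      echo "OK   ${host}"
    else
      echo "FAIL ${host}"
      rc=1
    fi
  done
  return "$rc"
}

# Example (IPs from the interactive example above):
#   check_key_auth rdauser 192.168.125.141 192.168.125.142 192.168.125.143
```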

The rdaf setup command creates the directory structure below on all of the RDAF hosts.

- /opt/rdaf/cert: It contains the generated self-signed SSL certificates for all of the RDAF hosts.