RDA Client Command Line Interface

1. Installing RDA Command Line Tool

RDA Command Line Interface tool comes as a docker image to make it easy to run RDA commands on any Laptop, Desktop or in a Cloud VM.

To run this tool, following are required:

- Operating Systems: Linux or MacOS

- Docker installed on that system

- At least Python 3.0

STEP-1: Download the python script

Make the script an executable:

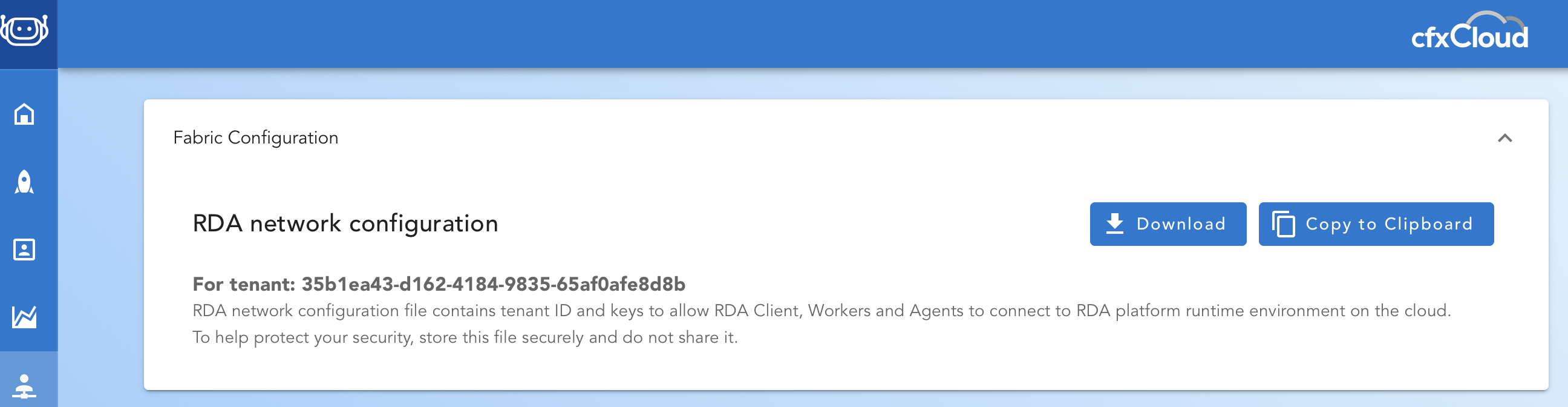

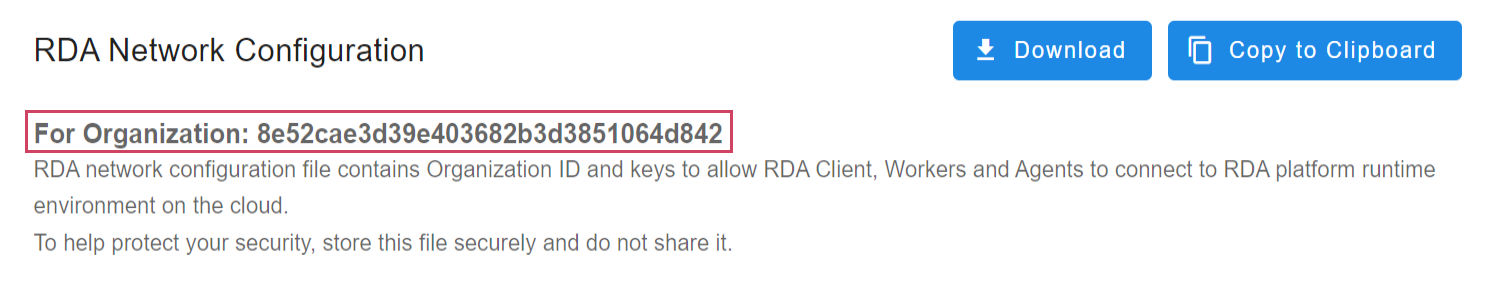

STEP-2: Download RDA network configuration

From your cfxCloud account, you can download a copy the RDA Network configuration.

Download the RDA Network configuration file and save it under $HOME/.rda/rda_network_config.json

STEP-3: Verify Script

Verify that rdac.py is working correctly by using one of the following commands:

Or

For very first time, above script will validate dependencies such OS, Python version and availability of Docker. If the validation is successful, it will download the docker container image for RDA CLI and run it.

Subsequently, if you want to update the docker container image to latest version, run following command:

2. RDA Commands: Cheat Sheet

This section lists few most commonly used RDA Commands.

Listing RDA Platform Microservices

RDA Fabric is for each tenant has a set of microservices (pods) deployed as containers either using Kubernetes or as simple docker containers.

Following command lists all active microservices in your RDA Fabric:

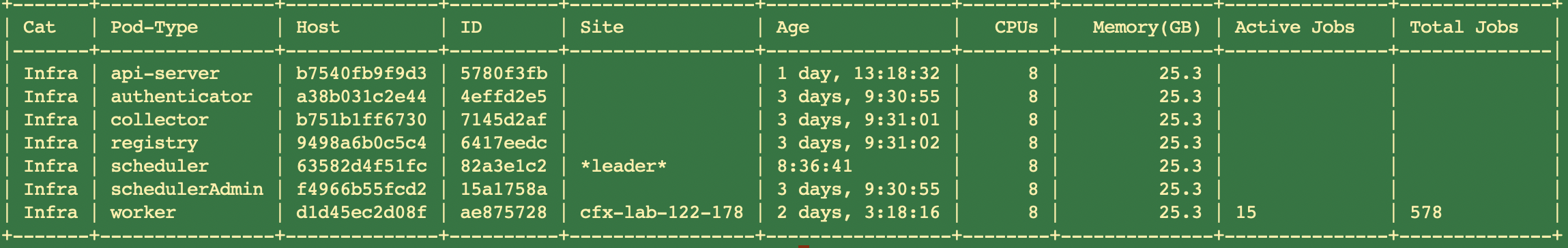

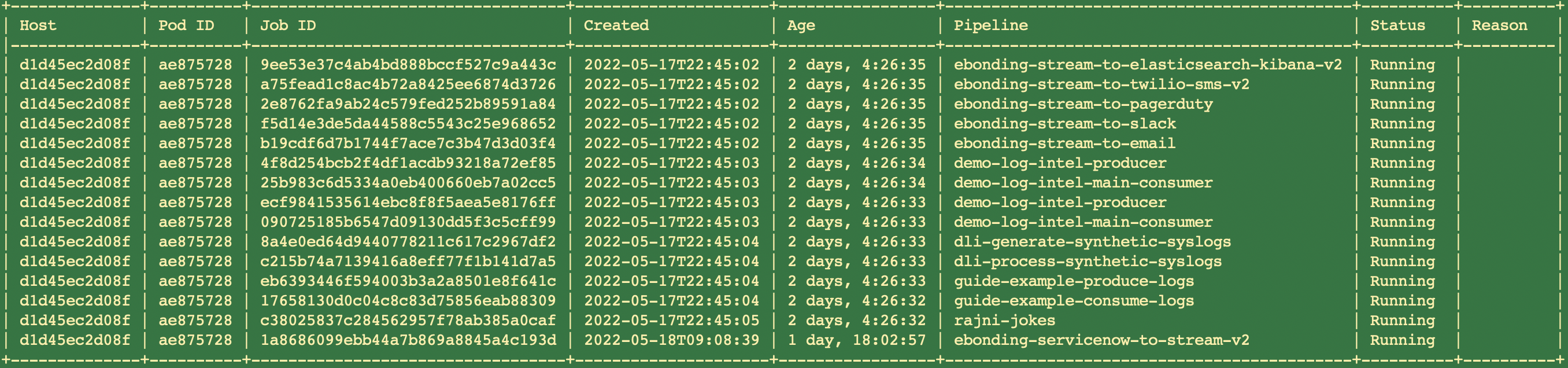

Typical output for pods command would look like:

Most of RDA Commands support option --json which would print output in a JSON format instead of tabular format.

Example rdac pods JSON Output

Partial output of --json option:

{

"now": "2022-05-20T02:16:31.054287",

"started_at": "2022-05-17T22:44:13.602509",

"pod_type": "worker",

"pod_category": "rda_infra",

"pod_id": "ae875728",

"hostname": "d1d45ec2d08f",

"proc_id": 1,

"labels": {

"tenant_name": "dev-1-unified",

"rda_platform_version": "22.5.13.3",

"rda_messenger_version": "22.5.15.1",

"rda_pod_version": "22.5.17.1",

"rda_license_valid": "no",

"rda_license_not_expired": "no",

"rda_license_expiration_date": ""

},

"build_tag": "daily",

"requests": {

"auto": "tenants.2dddab0e52544f4eb2de067057aaac31.worker.group.3571581d876b.auto",

"direct": "tenants.2dddab0e52544f4eb2de067057aaac31.worker.group.3571581d876b.direct.ae875728"

},

"resources": {

"cpu_count": 8,

"cpu_load1": 2.24,

"cpu_load5": 2.43,

"cpu_load15": 2.52,

"mem_total_gb": 25.3,

"mem_available_gb": 9.7,

"mem_percent": 61.7,

"mem_used_gb": 15.01,

"mem_free_gb": 2.93,

"mem_active_gb": 11.49,

"mem_inactive_gb": 7.64,

"pod_usage_active_jobs": 15,

"pod_usage_total_jobs": 578

},

"pod_leader": false,

"objstore_info": {

"host": "10.10.10.100:9000",

"config_checksum": "8936434b"

},

"group": "cfx-lab-122-178",

"group_id": "3571581d876b",

"site_name": "cfx-lab-122-178",

"site_id": "3571581d876b",

"public_access": false,

"capacity_filter": "cpu_load1 <= 7.0 and mem_percent < 98 and pod_usage_active_jobs < 20",

"_local_time": 1653012991.0593688

}

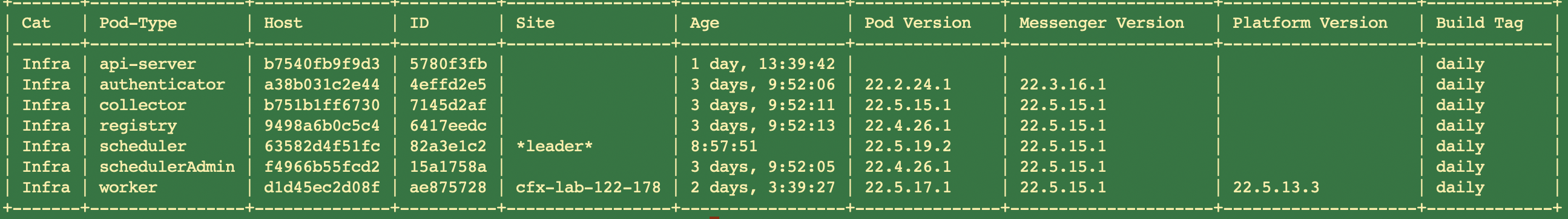

Listing RDA Platform Microservices with Versions

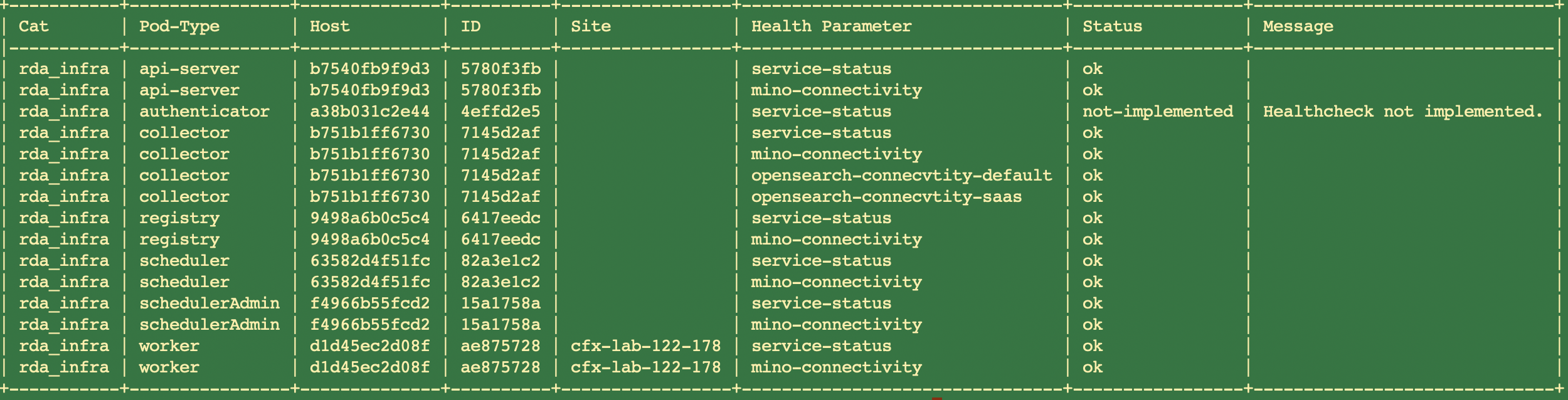

Performing a Health Check on RDA Microservices

Following command performs a health check on all microservices and returns status of each health parameter.

Listing all Running Pipeline Jobs

Following command lists all active jobs created using Portal, CLI, Scheduler or via Service Blueprints.

Evicting a Job

Following command can be used to evict a specific Job from RDA Worker. If the job was created by Scheduler or by a Service Blueprint, a new job may be re-created immediately after the job has been evicted.

This script attempts to evict the job with ID c38025837c284562957f78ab385a0caf

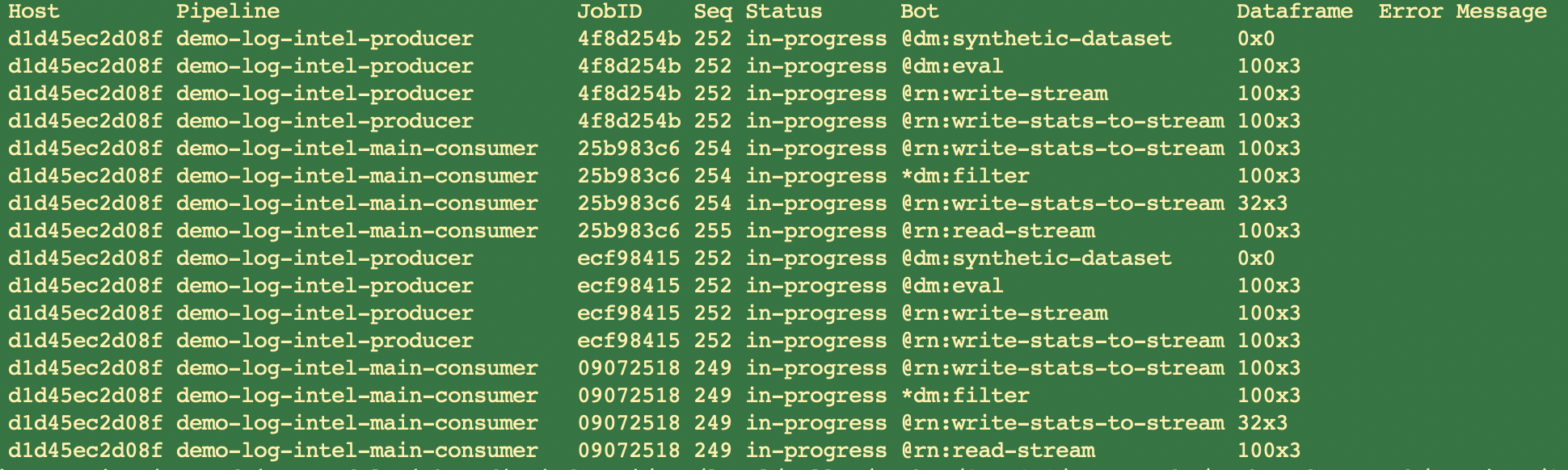

Observing Pipeline Execution Traces from CLI

Following command can be used watch (observe) all traces from all workers and all the pipelines that are getting executed anywhere in the RDA Fabric.

List all datasets currently saved in RDA Fabric

Adding a new dataset to RDA Fabric

Datasets can be added if the data is available as a local file on your system where rdac.py is available or if the data is available via URL. Supported formats are CSV, JSON, XLS, Parquet, ORC and many compresses formats for CSV.

To add a local file as a dataset:

Note: rdac.py mounts current directory as /home inside the docker container. You may also place the data in your home directory folder$HOME/rdac_data/ and access it as --file /data/mydata.csv

You may also add a dataset if the data is accessible via http or https URL.

3. List of All RDA CLI Sub Commands

| Sub Command | Description |

|---|---|

| agent-bots | List all bots registered by agents for the current tenant |

| agents | List all agents for the current tenant |

| alert-rules | Alert Rule management commands |

| bots-by-source | List bots available for given sources |

| check-credentials | Perform credential check for one or more sources on a worker pod |

| checksum | Compute checksums for pipeline contents locally for a given JSON file |

| content-to-object | Convert data from a column into objects |

| copy-to-objstore | Deploy files specified in a ZIP file to the Object Store |

| dashboard | User defined dashboard management commands |

| dataset | Dataset management commands |

| demo | Demo related commands |

| deployment | Service Blueprints (Deployments) management commands |

| event-gw-status | List status of all ingestion endpoints at all the event gateways |

| evict | Evict a job from a worker pod |

| file-ops | Perform various operations on local files |

| file-to-object | Convert files from a column into objects |

| fmt-template | Formatting Templates management commands |

| healthcheck | Perform healthcheck on each of the Pods |

| invoke-agent-bot | Invoke a bot published by an agent |

| jobs | List all jobs for the current tenant |

| logarchive | Logarchive management commands |

| merge-logarchive-files | Merge multiple locally downloaded Log Archive (.gz) filles into a single CSV/Parquet file |

| object | RDA Object management commands |

| output | Get the output of a Job using jobid. |

| pipeline | Pipeline management commands |

| pods | List all pods for the current tenant |

| pod-logging | Commands to set and get logging configuration of pods |

| pod-logging-handler-set | To change log levels for any required pod |

| project | Project management commands. Projects can be used to link different tenants / projects from this RDA Fabric or a remote RDA Fabric |

| pstream | Add a new Persistent stream |

| purge-outputs | Purge outputs of completed jobs |

| read-stream | Read messages from an RDA stream |

| run | Run a pipeline on a worker pod |

| run-get-output | Run a pipeline on a worker, wait for the completion, get the final output |

| schedule-add | Add a new schedule for pipeline execution |

| schedule-delete | Delete an existing schedule |

| schedule-edit | Edit an existing schedule |

| schedule-info | Get details of a schedule |

| schedule-list | List all schedules |

| schedule-update-status | Update status of an existing schedule |

| schema | Dataset Model Schema management commands |

| secret | Credentials (Secrets) management commands |

| set-pod-log-level | Update the logging level for a given RDA Pod |

| site-profile | Site Profile management commands |

| site-summary | Show summary by Site and Overall |

| stack | Application Dependency Mapping (Stack) management commands |

| staging-area | Staging Area based data ingestion management commands |

| subscription | Show current CloudFabrix RDA subscription details |

| synthetics | Data synthesizing management commands |

| verify-pipeline | Verify the pipeline on a worker pod |

| viz | Visualize data from a file within the console (terminal) |

| watch | Commands to watch various streams such sas trace, logs and change notifications by microservices |

| worker-obj-info | List all worker pods with their current Object Store configuration |

| write-stream | Write data to the specified stream |

Sub Command: agent-bots

Description: List all bots registered by agents for the current tenant

usage: rdac [-h] [--json] [--type AGENT_TYPE] [--group AGENT_GROUP]

optional arguments:

-h, --help show this help message and exit

--json Print detailed information in JSON format instead of

tabular format

--type AGENT_TYPE Show only the agents that match the specified agent

type

--group AGENT_GROUP Show only the agents that match the specified agent

group

- Following is the syntax for agent-bots

[

{

"name": "get-status",

"description": "List all endpoints configured at this gateway and current status",

"query-type": "api-endpoint",

"mode": "source-any",

"model": {},

"agent_type": "rda-event-gateway",

"site_name": "event_gateway_site01",

"pod_id": "250951da"

}

]

Sub Command: agents

Description: List all agents for the current tenant

Usage: agents [-h] [--json] [--type AGENT_TYPE] [--group AGENT_GROUP]

[--site SITE_NAME]

optional arguments:

-h, --help show this help message and exit

--json Print detailed information in JSON format instead of

tabular format

--type AGENT_TYPE Show only the agents that match the specified agent

type

--group AGENT_GROUP Deprecated. Use --site. Show only the agents that match

the specified site

--site SITE_NAME Show only the agents that match the specified site

+-------------------+----------------+----------+----------------------+-------------------+--------+--------------+

| Agent-Type | Host | ID | Site | Age | CPUs | Memory(GB) |

|-------------------+----------------+----------+----------------------+-------------------+--------+--------------|

| rda-event-gateway | saaswrk72.qa.e | 250951da | event_gateway_site01 | 28 days, 21:54:20 | 4 | 31.33 |

| agent-ml | 5339ca9ca765 | c4d7b94e | mlagent | 23:04:10 | 4 | 31.33 |

| agent-irm | aa932951e71e | 0fbc78ec | irmagent | 23:03:42 | 4 | 31.33 |

+-------------------+----------------+----------+----------------------+-------------------+--------+--------------+

Sub Command: alert-rules

Following are the valid Sub-Commands for the alert-rules

Sub Commands |

Description |

|---|---|

| add | Add or update alert ruleset |

| get | Get YAML data for an alert ruleset |

| delete | Delete an alert ruleset |

| list | List all alert rulesets. |

Following are valid sub-commands for alert-rules:

add Add or update alert ruleset

get Get YAML data for an alert ruleset

delete Delete an alert ruleset

list List all alert rulesets.

Sub Command: add

Description: Add or update alert ruleset

Usage: alert-rules-add [-h] --file INPUT_FILE [--overwrite]

optional arguments:

-h, --help show this help message and exit

--file INPUT_FILE YAML file containing alert ruleset definition

--overwrite Overwrite even if a ruleset already exists with a name.

- Following is the syntax for alert-rules add

cat > alertrulestest1.yml << 'EOF'

name: alertruletest

description: syslog from filebeat

realtime-alerts:

- name: filebeat_syslog_msgs

description: VPX Finish task msgs

groupBy: host_name

condition: severity = 'INFO'

severity: CRITICAL

suppress-for-minutes: 5

saved_time: '2022-02-19T22:34:10.888947'

EOF

rdac alert-rules add --file alertrulestest1.yml

Sub Command: delete

Description: Delete an alert ruleset

Usage: alert-rules-delete [-h] --name RULESET_NAME

optional arguments:

-h, --help show this help message and exit

--name RULESET_NAME Name of the alert ruleset to delete

- Following is the syntax for alert-rules delete

Sub Command: get

Description: Get YAML data for an alert ruleset

Usage: alert-rules-get [-h] --name RULESET_NAME

optional arguments:

-h, --help show this help message and exit

--name RULESET_NAME Name of the alert ruleset to display

- Following is the syntax for alert-rules get

description: Alert_Rules

name: ATest_ZRules

aggregate-alerts:

- rule_a

- rule_b

realtime-alerts:

- rule_1

- rule_2

saved_time: '2022-12-20T05:16:41.716023'

Sub Command: list

Description: List all alert rulesets.

Usage: alert-rules-list [-h] [--json]

optional arguments:

-h, --help show this help message and exit

--json Print detailed information in JSON format instead of tabular

format

- Following is the syntax for alert-rules list

name description saved_time num_realtime_alert_rules num_aggr_alert_rules

-- ---------------- -------------------- -------------------------- -------------------------- ----------------------

0 alertruletestnew syslog from filebeat 2023-01-03T05:01:55.361186 1 0

Cleaning up socket for process: 1. Socket file: /tmp/rdf_log_socket_57602b71-8ea5-49c5-acbd-54c9908a0680

Exiting out of LogRecordSocketReceiver. pid: 1. Socket file: /tmp/rdf_log_socket_57602b71-8ea5-49c5-acbd-54c9908a0680

Sub Command: bot-package

Following are the valid Sub-Commands for the bot-package

Sub Commands |

Description |

|---|---|

| add | Add or update Bot Package |

| get | Get meta data for a Bot Package |

| delete | Delete a Bot Package |

| list | List all Bot Packages |

| build | Build the specified bot package |

| ut | Run Unit Tests |

| generate | Generate the specified bot package |

| list-runtimes | List available Bot Package runtime environments on each worker |

Following are valid sub-commands for bot-package:

add Add or update Bot Package

get Get meta data for a Bot Package

delete Delete a Bot Package

list List all Bot Packages

build Build the specified bot package

ut Run Unit Tests

generate Generate the specified bot package

list-runtimes List available Bot Package runtime environments on each worker

Sub Command: list

Description: List all Bot Packages

usage: bot-package [-h] [--json]

optional arguments:

-h, --help show this help message and exit

--json Print detailed information in JSON format instead of tabular

format

- Following is the syntax for bot-package list

Name Version Description Has Dist Publisher Saved Time

------------------------- --------- --------------------------------------- ---------- ----------- --------------------------

botpkg_demo_proactivecase 22.11.9 ProctiveCase API for ServiceNow Tickets Yes CloudFabrix 2022-11-14T21:16:30.201550

Sub Command: get

Description: Get meta data for a Bot Package

optional arguments:

-h, --help show this help message and exit

--name PACKAGE_NAME Name of the Bot Package

extension:

namespace: demo

type: proactivecase

version: 22.11.9

description: ProctiveCase API for ServiceNow Tickets

default_name: proactivecase

publisher: CloudFabrix

support_email: mohammed.rahman@cloudfabrix.com

config_template:

hostname: null

port: 443

uri_suffix: internal/proactiveCaseAPI/v1.1

client_id: null

secret: null

$secure:

- secret

$mandatory:

- hostname

- client_id

- secret

$labels:

hostname: Host

port: Port

client_id: Client ID

secret: Secret Value

implementation:

code: proactivecase.ProactiveCase

bots:

- name: get-ticket

description: Get ServiceNow Ticket

bot_type: source

model_type: api

model_parameters:

- name: ticket_id

description: comma separated ticket IDs

type: text

mandatory: true

- name: source

description: Source

type: text

mandatory: true

Sub Command: generate

Description: Generate the specified bot package

usage: bot-package [-h] --namespace NAMESPACE --name NAME [--version VERSION]

[--bots NUM_OF_BOTS] --output_dir OUTPUT_DIR

optional arguments:

-h, --help show this help message and exit

--namespace NAMESPACE

Namespace for Bot extension

--name NAME Name of the bot extension package

--version VERSION Version for Bot extension

--bots NUM_OF_BOTS Number of Bots to be added to the package

--output_dir OUTPUT_DIR

Output directory for creating bot package

- Following is the syntax for bot-package generate

rdac bot-package generate --namespace demo --name proactivecasenew --version 23.01.03 --bot 2 --output_dir new11

Configure : Custom Bot 1

Name*: get-ticket

Bot Type*: source

Number of bot input parameters*: 2

Configure : Bot input parameter 1

Name*: ticket_id

Type: text

Is mandatory[yes/no]*: yes

Configure : Bot input parameter 2

Name*: source

Type: text

Is mandatory[yes/no]*: yes

Configure : Custom Bot 2

Name*: get-ticket1

Bot Type*: source

Number of bot input parameters*: 1

Configure : Bot input parameter 2 1

Name*: ticket_id1

Type: text

Is mandatory[yes/no]*: yes

Generated: new11/bots.yml

Sub Command: list-runtimes

Description: List available Bot Package runtime environments on each worker

optional arguments:

-h, --help show this help message and exit

--json Print detailed information in JSON format instead of tabular

format

- Following is the syntax for bot-package list-runtimes

2022-12-22:09:44:17 [1] INFO nats_client Saving NATS certificate to file: /tmp/nats_cert_custom_461dedd908ef2d75e509377dfe35be02_1.pem

+--------------+----------+-------------+-------------+-------------+--------------+----------+

| Host | Pod ID | Site | Python3.7 | Python3.9 | Python3.10 | Java11 |

|--------------+----------+-------------+-------------+-------------+--------------+----------|

| 05969789d903 | b6bb8486 | rda-site-01 | yes | | | |

+--------------+----------+-------------+-------------+-------------+--------------+----------+

Sub Command: bots-by-source

Description: List bots available for given sources

Usage: bots-by-source [-h] [--sources SOURCES] [--group WORKER_GROUP]

[--site WORKER_SITE] [--lfilter LABEL_FILTER]

[--rfilter RESOURCE_FILTER] [--maxwait MAX_WAIT] [--json]

optional arguments:

-h, --help show this help message and exit

--sources SOURCES Comma separated list of sources to find bots (in

addition to built-in sources)

--group WORKER_GROUP Deprecated. Use --site option. Specify a worker site

name. If not specified, will use any available worker.

--site WORKER_SITE Specify a worker site name. If not specified, will use

any available worker.

--lfilter LABEL_FILTER

CFXQL style query to narrow down workers using their

labels

--rfilter RESOURCE_FILTER

CFXQL style query to narrow down workers using their

resources

--maxwait MAX_WAIT Maximum wait time (seconds) for credential check to

complete.

--json Print detailed information in JSON format instead of

tabular format

- Following is the syntax for bots-by-source

{

"status": "started",

"reason": "",

"results": [],

"now": "2023-01-03T06:20:38.129086",

"status-subject": "tenants.545590daa4ba44a3b32cb3b33f69df13.worker.group.f4a56ba6388c.direct.2d30eab7",

"jobid": "b244f76f663a4033964301e7c3916ddc"

}

Completed:

Bot Type Description Source

-------------------------------------- -------------------- ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- --------

@c:new-block api-endpoint Start a new block within the pipeline c

@c:simple-loop api-endpoint Start a simple looping block using 'loop_var' as list of values c

@c:data-loop api-endpoint Start a looping block using 'dataset' name of the saved dataset, and unique values from 'columns' c

@c:count-loop api-endpoint Start a looping block that counts from 'start' to 'end' with 'increment' numerical values c

@c:timed-loop api-endpoint Start a looping block that waits 'interval' seconds between each iteration

Sub Command: check-credentials

Description: Perform credential check for one or more sources on a worker pod

Usage: check-credentials [-h] --config CONFIG [--group WORKER_GROUP] [--site WORKER_SITE]

[--maxwait MAX_WAIT]

optional arguments:

-h, --help show this help message and exit

--config CONFIG File containing pipeline contents or configuration

--group WORKER_GROUP Deprecated. Use --site. Specify a worker site name. If

not specified, will use any available worker.

--site WORKER_SITE Specify a worker Site name. If not specified, will use

any available worker.

--maxwait MAX_WAIT Maximum wait time (seconds) for credential check to

complete.

- Following is the syntax for check-credentials

Initiating Credential check

{

"status": "started",

"reason": "",

"results": [],

"now": "2021-07-28T02:12:46.577687",

"status-subject": "tenants.2dddab0e52544f4eb2de067057aaac31.worker.group.c640b839efec.direct.255941bb",

"jobid": "328ea2d5f0454ed29b64ccdb287c5626"

}

{

"jobid": "328ea2d5f0454ed29b64ccdb287c5626",

"status-subject": "tenants.2dddab0e52544f4eb2de067057aaac31.worker.group.c640b839efec.direct.255941bb"

}

Running:

Running:

Running:

Completed:

+---------------+---------------+----------+----------+-----------------+

| Source Name | Source Type | Status | Reason | Duration (ms) |

|---------------+---------------+----------+----------+-----------------|

| aws-dev | aws | OK | | 1473.79 |

| aws-prod | aws | OK | | 1404.15 |

+---------------+---------------+----------+----------+-----------------+

Sub Command: checksum

Description: Compute checksums for pipeline contents locally for a given JSON file

Usage: checksum [-h] --pipeline PIPELINE

optional arguments:

-h, --help show this help message and exit

--pipeline PIPELINE File containing pipeline information in JSON format

Sub Command: content-to-object

Description: Convert data from a column into objects

Usage: content-to-object [-h] --inpcol INPUT_CONTENT_COLUMN --outcol OUTPUT_COLUMN --file

INPUT_FILE --outfolder OUTPUT_FOLDER --outfile OUTPUT_FILE

optional arguments:

-h, --help show this help message and exit

--inpcol INPUT_CONTENT_COLUMN

Name of the column in input that contains the data

--outcol OUTPUT_COLUMN

Column name where object names will be inserted

--file INPUT_FILE Input csv filename

--outfolder OUTPUT_FOLDER

Folder name where objects will be stored

--outfile OUTPUT_FILE

Name of output csv file that has object location

stored

Sub Command: copy-to-objstore

Description: Deploy files specified in a ZIP file to the Object Store

Usage: copy-to-objstore [-h] --file ZIP_FILENAME [--verify] [--force]

optional arguments:

-h, --help show this help message and exit

--file ZIP_FILENAME ZIP filename (or URL) containing bucket/object entries.

If bucket name is 'default', this tool will use the

target bucket as specified in configuration.

--verify Do not upload files, only verify if the objects in the

ZIP file exists on the target object store

--force Upload the files even if they exist on the target

system with same size

Sub Command: dashboard

Following are the valid Sub-Commands for the dashboard

Sub Commands |

Description |

|---|---|

| add | Add or update dashboard |

| get | Get YAML data for a dashboard |

| list | List all dashboards |

| convert | Convert all dashboards from YAML to JSON |

| delete | Delete a dashboard |

| enable | Change the status of a dashboard to 'enabled' |

| disable | Change the status of a dashboard to 'disabled' |

| verify | Verify the dashboard and any pages inside it for PStreams and columns |

| to-app | Convert a tabbed or sectioned dashboard into multi-paged app |

| live-edit | Supports live edit of dashboards using local editor |

Following are valid sub-commands for dashboard:

add Add or update dashboard

get Get YAML data for a dashboard

list List all dashboards

convert Convert all dashboards from YAML to JSON

delete Delete a dashboard

enable Change the status of a dashboard to 'enabled'

disable Change the status of a dashboard to 'disabled'

verify Verify the dashboard and any pages inside it for PStreams and columns

to-app Convert a tabbed or sectioned dashboard into multi-paged app

live-edit Supports live edit of dashboards using local editor

add

Description: Add or update dashboard

optional arguments:

-h, --help show this help message and exit

--file INPUT_FILE YAML file containing dashboard definition

--overwrite Overwrite even if a dashboard already exists with the

specified name.

Note

Before running the add cmd ,create a yaml file containing dashboard definition

- Following is the syntax for dashboard add

2023-01-04:10:24:09 [1] INFO nats_client Creating new SharedPool ...

2023-01-04:10:24:09 [1] INFO nats_client Initiallzing PubMgr for pid=1

2023-01-04:10:24:09 [1] INFO nats_client Saving NATS certificate to file: /tmp/nats_cert_custom_461dedd908ef2d75e509377dfe35be02_1.pem

Added dashboard Appdynamics cpu metrics analysis-shaded chartnew

Sub Command: get

Description: Get YAML data for a dashboard

usage: dashboard [-h] --name DASHBOARD_NAME

optional arguments:

-h, --help show this help message and exit

--name DASHBOARD_NAME

Name of the dashboard

- Following is the syntax for dashboard get

label: Appdynamics CPU metrics

description: Shaded chart for Appdynamics metrics

enabled: true

dashboard_style: tabbed

dashboard_filters:

time_filter: true

columns_filter: []

group_filters: []

debug: true

dashboard_sections:

- title: Appdynamics_cpu_metrics

show_filter: true

widgets:

- widget_type: shaded_chart

title: Appdynamics-cpumetrics

stream: Appdynamics_cpu_metrics

ts_column: timestamp

baseline_column: baseline

anomalies_column: anomalies

predicted_column: predicted

upperBound_column: upperBound

lowerBound_column: lowerBound

duration_hours: 5000

synchronized-group: 0

markers-def:

- message: Now

color: '#E53935'

timestamp: 1647814186

- message: Tomorrow

color: '#E53935'

timestamp: 1648937386

- message: Current

color: '#E53935'

timestamp: 1658355595

show-markers: true

downsample: true

downsample-to-percent: 10

downsample-limit-rows: 500

widget_id: b0d45ad1

saved_time: '2022-12-14T06:07:11.835323'

Sub Command: list

Description: List all dashboards

optional arguments:

-h, --help show this help message and exit

--json Print detailed information in JSON format instead of tabular

format

- Following is the syntax for dashboard list

name dashboard_type label description enabled saved_time

--- ---------------------------------------------------- ---------------- --------------------------------------------------------- ----------------------------------------------------------------------------------------------------------------------------------------- --------- --------------------------

0 rda-mgmt-page-alert-rules dashboard Alert Rules Alert Rules Page True 2022-10-06T13:43:06.707246

1 rda-mgmt-page-credentials dashboard Credentials Credentials Management Page True 2022-10-06T13:43:07.346093

2 Test_IFRAME dashboard IFRAME Test ACME Platform Sanity Dash False 2022-10-06T13:43:07.367278

3 olb-observability-data_page_Incidents dashboard OLB L2/L3 Dashboard, Page: Incidents Online Banking App Observability Data for L2/L3 Users, Page: Incidents False 2022-11-18T09:24:50.445315

4 olb-experience-desk_page_KPIs template Experience Desk Dashboard, Page: KPIs Equipped with Events, Alerts and Incidents Information to Maximize Customer Experience and Satisfaction, Page: KPIs False 2022-10-06T13:43:07.443675

5 ACME_Test_Preview_App template Test Case Preview Dashboard to preview commits and logs for a test case False 2022-10-06T13:43:07.509264

6 l1-service-health template Service Health - L1 Users L1 Service Health False 2022-12-21T05:19:02.332379

7 rda-integrations-app app RDA Integrations Robotic Data Automation Integrations True 2022-10-06T13:43:07.622712

8 l2-l3-dashboard app L2/L3 Dashboard Dashboard L2/L3 Users True 2022-12-21T05:19:02.396260

9 rda-dashboard-errors dashboard Dashboard Errors Query errors in RDA Dashboard widgets True 2022-10-07T01:49:51.552796

10 Appdynamics cpu metrics analysis-shaded chart dashboard Appdynamics CPU metrics Shaded chart for Appdynamics metrics True 2022-12-14T06:07:11.835323

Sub Command: convert

Description : Convert all dashboards from YAML to JSON

- Following is the syntax for dashboard convert

Migrating to JSON: rda-mgmt-page-alert-rules

2023-01-04:05:30:41 [1] INFO nats_client Creating new SharedPool ...

2023-01-04:05:30:41 [1] INFO nats_client Initiallzing PubMgr for pid=1

2023-01-04:05:30:41 [1] INFO nats_client Saving NATS certificate to file: /tmp/nats_cert_custom_461dedd908ef2d75e509377dfe35be02_1.pem

Migrating to JSON: rda-mgmt-page-credentials

Migrating to JSON: Test_IFRAME

Migrating to JSON: olb-observability-data_page_Incidents

Migrating to JSON: olb-experience-desk_page_KPIs

Migrating to JSON: ACME_Test_Preview_App

Migrating to JSON: l1-service-health

Migrating to JSON: rda-integrations-app

Migrating to JSON: l2-l3-dashboard

Migrating to JSON: rda-dashboard-errors

Migrating to JSON: Appdynamics cpu metrics analysis-shaded chart

Migrating to JSON: metric_anomalies_template

Migrating to JSON: olb-engineering-dashboard_page_Metrics

Migrating to JSON: olb-observability-data_page_Metrics__with_Anomalies

Migrating to JSON: olb-observability-data_page_Metric Analysis

Sub Command: delete

Description: Delete a dashboard

usage: dashboard [-h] --name DASHBOARD_NAME

optional arguments:

-h, --help show this help message and exit

--name DASHBOARD_NAME

Name of the dashboard to delete

- Following is the syntax for dashboard delete

2023-01-04:09:34:58 [1] INFO nats_client Creating new SharedPool ...

2023-01-04:09:34:58 [1] INFO nats_client Initiallzing PubMgr for pid=1

2023-01-04:09:34:58 [1] INFO nats_client Saving NATS certificate to file: /tmp/nats_cert_custom_461dedd908ef2d75e509377dfe35be02_1.pem

Deleted dashboard: Appdynamics cpu metrics analysis-shaded chart 2

Sub Command: enable

Description: Change the status of a dashboard to 'enabled'

usage: dashboard [-h] --name DASHBOARD_NAME

optional arguments:

-h, --help show this help message and exit

--name DASHBOARD_NAME

Name of the dashboard

- Following is the syntax for dashboard enable

2023-01-04:05:45:46 [1] INFO nats_client Creating new SharedPool ...

2023-01-04:05:45:46 [1] INFO nats_client Initiallzing PubMgr for pid=1

2023-01-04:05:45:46 [1] INFO nats_client Saving NATS certificate to file: /tmp/nats_cert_custom_461dedd908ef2d75e509377dfe35be02_1.pem

Changed status of Appdynamics cpu metrics analysis-shaded chart to enabled

Sub Command: disable

Description: Change the status of a dashboard to 'disabled'

usage: dashboard [-h] --name DASHBOARD_NAME

optional arguments:

-h, --help show this help message and exit

--name DASHBOARD_NAME

Name of the dashboard

- Following is the syntax for dashboard disable

2023-01-04:05:44:47 [1] INFO nats_client Creating new SharedPool ...

2023-01-04:05:44:47 [1] INFO nats_client Initiallzing PubMgr for pid=1

2023-01-04:05:44:47 [1] INFO nats_client Saving NATS certificate to file: /tmp/nats_cert_custom_461dedd908ef2d75e509377dfe35be02_1.pem

Changed status of Appdynamics cpu metrics analysis-shaded chart to disabled

Sub Command: verify

Description: Verify the dashboard and any pages inside it for PStreams and columns

usage: dashboard [-h] --name DASHBOARD_NAME

optional arguments:

-h, --help show this help message and exit

--name DASHBOARD_NAME

Name of the dashboard to verify

- Following is the syntax for dashboard verify

2023-01-04:06:28:41 [1] INFO nats_client Saving NATS certificate to file: /tmp/nats_cert_custom_461dedd908ef2d75e509377dfe35be02_1.pem

[

{

"dashboard": "Appdynamics cpu metrics analysis-shaded chart",

"type": "widget",

"widget_type": "shaded_chart",

"title": "Appdynamics-cpumetrics",

"stream": "Appdynamics_cpu_metrics",

"columns": "timestamp",

"stream_status": "found",

"missing_columns": ""

}

]

Sub Command: to-app

Description: Convert a tabbed or sectioned dashboard into multi-paged app

usage: dashboard [-h] --name DASHBOARD_NAME

optional arguments:

-h, --help show this help message and exit

--name DASHBOARD_NAME

Name of the dashboard

- Following is the syntax for dashboard to-app

Adding new internal dashboard: rda-microservice-traces_page_Traces

2023-01-04:06:37:48 [1] INFO nats_client Creating new SharedPool ...

2023-01-04:06:37:48 [1] INFO nats_client Initiallzing PubMgr for pid=1

2023-01-04:06:37:48 [1] INFO nats_client Saving NATS certificate to file: /tmp/nats_cert_custom_461dedd908ef2d75e509377dfe35be02_1.pem

Adding new internal dashboard: rda-microservice-traces_page_Healthchecks

Updating dashboard {dashboard_name} as app...

rdauser@manojp-rda-platform:~$

Sub Command: dashgroup

Following are the valid Sub-Commands for the dashgroup

Sub Commands |

Description |

|---|---|

| add | Add or update dashboard group |

| get | Get JSON data for a dashboard group |

| list | List all dashboard groups |

| delete | Delete a dashboard group |

| enable | Change the status of a dashboard group to 'enabled' |

| disable | Change the status of a dashboard group to 'disabled' |

Following are valid sub-commands for dashgroup:

add Add or update dashboard group

get Get JSON data for a dashboard group

list List all dashboard groups

delete Delete a dashboard group

enable Change the status of a dashboard group to 'enabled'

disable Change the status of a dashboard group to 'disabled'

Sub Command: add

Description: Add or update dashboard group

usage: dashgroup [-h] --file INPUT_FILE [--overwrite]

optional arguments:

-h, --help show this help message and exit

--file INPUT_FILE JSON file containing dashboard group definition

--overwrite Overwrite even if a dashboard group already exists with

the specified name.

Note

Before running the add cmd ,create a JSON file containing dashboard group definition

- Following is the syntax for dashgroup add

2023-01-04:11:13:12 [1] INFO nats_client Creating new SharedPool ...

2023-01-04:11:13:12 [1] INFO nats_client Initiallzing PubMgr for pid=1

2023-01-04:11:13:12 [1] INFO nats_client Saving NATS certificate to file: /tmp/nats_cert_custom_461dedd908ef2d75e509377dfe35be02_1.pem

Added dashboard group l1-user new

Sub Command: get

Description: Get JSON data for a dashboard group

optional arguments:

-h, --help show this help message and exit

--name DASHBOARD_GROUP_NAME

Name of the dashboard group

- Following is the syntax for dashgroup get

{

"name": "l1-users",

"label": "L1 Users",

"dashboardList": [

{

"id": "user-dashboard-incident-topology",

"name": "incident-topology"

},

{

"id": "user-dashboard-incident-metrics",

"name": "incident-metrics"

},

{

"id": "user-dashboard-incident-collaboration",

"name": "incident-collaboration"

},

{

"id": "user-dashboard-l1-main-app",

"name": "l1-main-app"

}

],

"users": "l1-user@cfx.com",

"enabled": true,

"description": "Dashboards for L1 Users",

"saved_time": "2022-09-28T05:56:11.325672"

}

Sub Command: list

Description: List all dashboard groups

usage: dashgroup [-h] [--json]

optional arguments:

-h, --help show this help message and exit

--json Print detailed information in JSON format instead of tabular

format

- Following is the syntax for dashgroup list

name label description enabled saved_time

-- ----------------------- ------------------- ---------------------------------------- --------- --------------------------

0 Test Admin Group True 2022-12-21T16:59:47.419691

1 l1-users L1 Users Dashboards for L1 Users True 2022-09-28T05:56:11.325672

2 executives Executives Dashboards for Executives True 2022-09-29T03:00:33.425663

3 Experience Desk Experience Desk Dashboards for Experience Desk True 2022-09-28T05:56:05.969015

4 DevOps Users True 2022-09-28T19:21:38.852671

5 TestGroup TestGroup True 2022-09-28T03:59:14.609687

6 Reression Training Test Admin Group False 2022-09-28T05:56:07.802828

7 Partner Admin All Partner Dashboards True 2022-09-28T05:56:06.961122

8 Acme Acme True 2022-10-23T22:03:29.461241

9 bizops Business Operations Dashboards for Business Operations Users True 2022-09-29T03:11:06.780365

10 l2-users L2 Users Dashboards for L2 Users True 2022-09-28T05:56:12.239130

11 l1-l3 Dashboard l1-l3 Dashboard True 2022-12-21T05:28:23.966417

12 Biz Command Center Biz Command Center Dashboards for Biz Command Center True 2022-09-28T05:56:03.338590

13 Engineering Engineering Group Dashboards for Engineering Group True 2022-09-28T05:56:05.072187

delete

Description: Delete a dashboard group

usage: dashgroup [-h] --name DASHBOARD_GROUP_NAME

optional arguments:

-h, --help show this help message and exit

--name DASHBOARD_GROUP_NAME

Name of the dashboard group to delete

- Following is the syntax for dashgroup delete

2023-01-04:12:02:37 [1] INFO nats_client Creating new SharedPool ...

2023-01-04:12:02:37 [1] INFO nats_client Initiallzing PubMgr for pid=1

2023-01-04:12:02:37 [1] INFO nats_client Saving NATS certificate to file: /tmp/nats_cert_custom_461dedd908ef2d75e509377dfe35be02_1.pem

Deleted dashboard group: synthetics-control

Sub Command: enable

Description: Change the status of a dashboard group to 'enabled'

usage: dashgroup [-h] --name DASHBOARD_GROUP_NAME

optional arguments:

-h, --help show this help message and exit

--name DASHBOARD_GROUP_NAME

Name of the dashboard group

- Following is the syntax for dashgroup enable

2023-01-04:10:54:08 [1] INFO nats_client Creating new SharedPool ...

2023-01-04:10:54:08 [1] INFO nats_client Initiallzing PubMgr for pid=1

2023-01-04:10:54:08 [1] INFO nats_client Saving NATS certificate to file: /tmp/nats_cert_custom_461dedd908ef2d75e509377dfe35be02_1.pem

Changed status of l1-users to enabled

Sub Command: disable

Description: Change the status of a dashboard group to 'disabled'

usage: dashgroup [-h] --name DASHBOARD_GROUP_NAME

optional arguments:

-h, --help show this help message and exit

--name DASHBOARD_GROUP_NAME

Name of the dashboard group

- Following is the syntax for dashgroup disable

2023-01-04:10:53:16 [1] INFO nats_client Creating new SharedPool ...

2023-01-04:10:53:16 [1] INFO nats_client Initiallzing PubMgr for pid=1

2023-01-04:10:53:16 [1] INFO nats_client Saving NATS certificate to file: /tmp/nats_cert_custom_461dedd908ef2d75e509377dfe35be02_1.pem

Changed status of l1-users to disabled

Sub Command: dataset

Following are the valid Sub-Commands for the dataset

Sub Commands |

Description |

|---|---|

| list | List datasets from the object store |

| get | Download a dataset from the object store |

| meta | Download metadata for a dataset from the object store |

| add | Add a new dataset to the object store |

| delete | Delete a dataset from the object store |

| bounded-list | List bounded datasets from the object store |

| bounded-get | Download a bounded dataset from the object store |

| bounded-meta | Download metadata for a bounded dataset from the object store |

| bounded-add | Add a new bounded dataset to the system |

| bounded-import | Import the data for a bounded dataset and store it in the object store |

| bounded-delete | Delete a bounded dataset from the object store |

Dataset management commands

Following are valid sub-commands for dataset:

list List datasets from the object store

get Download a dataset from the object store

meta Download metadata for a dataset from the object store

add Add a new dataset to the object store

delete Delete a dataset from the object store

bounded-list List bounded datasets from the object store

bounded-get Download a bounded dataset from the object store

bounded-meta Download metadata for a bounded dataset from the object store

bounded-add Add a new bounded dataset to the system

bounded-import Import the data for a bounded dataset and store it in the object store

bounded-delete Delete a bounded dataset from the object store

Sub Command: list

Description: List datasets from the object store

usage: dataset [-h] [--json]

optional arguments:

-h, --help show this help message and exit

--json Print detailed information in JSON format instead of tabular

format

- Following is the syntax for dataset list

name format mem_size_mb num_rows num_columns saved_time

--- ------------------------------------------------------ -------- ------------- ---------- ------------- --------------------------

0 Appdynamics_cpu_metrics csv 0.092 1000 12 2022-12-14T05:48:07.940815

1 Appdynamics_cpu_metrics_new csv 0.092 1000 12 2023-01-04T12:00:17.628034

2 Balancing_Control_to_Platform_Standardizer csv 0 1 3 2022-10-19T00:32:51.328289

3 DATASET-SERVICEWOW csv 0.015 2000 1 2022-10-13T14:35:08.843641

4 Data_Masking_to_hive_load csv 0 1 3 2022-10-19T00:32:51.657829

5 Data_Parsing_to_Balancing_Control csv 0 1 3 2022-10-19T00:32:51.213174

6 Feed_Data_Standardizer_to_Data_Masking csv 0 1 3 2022-10-19T00:32:51.550645

7 Metadata_Validator_to_Preprocessing csv 0 1 3 2022-10-19T00:32:50.999017

8 Online_Banking_Stack_Metrics csv 0.001 6 12 2022-09-29T04:01:45.875869

9 Platform_Standardizer_to_Feed_Data_Standardizer csv 0 1 3 2022-10-19T00:32:51.433995

10 Preprocessing_to_Data_Parsing csv 0 1 3 2022-10-19T00:32:51.101942

11 SS-AWS-event-groups csv 0 3 9 2022-11-08T14:39:47.207992

Sub Command: get

Description: Download a dataset from the object store

usage: dataset [-h] --name NAME [--tofile SAVE_TO_FILE] [--json]

[--format DATA_FORMAT] [--viz]

optional arguments:

-h, --help show this help message and exit

--name NAME Dataset name

--tofile SAVE_TO_FILE

Save the data to the specified file (CSV or JSON if

--json is specified)

--json Export data as a JSON formatted rows. ** Deprecated.

Use --format **

--format DATA_FORMAT Save the downloaded data in the specified format.

Valid values are csv, json, parquet. If format is

'auto', format is determined from extension

--viz Open Dataframe visualizer to show the data

- Following is the syntax for dataset get

Downloaded dataset. Number of Rows: 6, Columns: 12

[DFViz:1] Rows 6, Cols 12 | View Rows 0-6, Cols: 0-4 | Press 'q' to exit, '?' for help

Data Filter: | Col Filter: | Data Sort: | cfxql:

+----+-------------+----------+-----------------------+-----------------------------+--------------------------+

| | component | count_ | layer | metric_name | node_id |

|----+-------------+----------+-----------------------+-----------------------------+--------------------------|

| 0 | | | Application Component | db_slow_queries | 10.95.134.103_Database |

| 1 | | | Application Component | total_response_time | 10.95.134.101_Webserver |

| 2 | | | Application Component | consumer_lag | 10.95.134.104_MessageBus |

| 3 | | | Application Component | under_replicated_partitions | 10.95.134.104_MessageBus |

| 4 | | | Application Component | db_connections | 10.95.134.103_Database |

| 5 | | | Application Component | transaction_time | 10.95.134.102_Appserver |

+----+-------------+----------+-----------------------+-----------------------------+--------------------------+

Sub Command: meta

Description: Download metadata for a dataset from the object store

usage: dataset [-h] --name NAME

optional arguments:

-h, --help show this help message and exit

--name NAME Dataset name

- Following is the syntax for dataset meta

{

"name": "Online_Banking_Stack_Metrics",

"format": "csv",

"datafile": "cfxdm-saved-data/Online_Banking_Stack_Metrics-data.csv",

"mem_size_mb": 0.001,

"num_rows": 6,

"num_columns": 12,

"saved_time": "2022-09-29T04:01:45.875869",

"dtypes": {

"component": "float64",

"count_": "float64",

"layer": "object",

"metric_name": "object",

"node_id": "object",

"node_label": "object",

"node_type": "object",

"source_tool": "object",

"stack_name": "object",

"timestamp": "object",

"unit": "object",

"value": "float64"

}

}

Sub Command: add

Description: Add a new dataset to the object store

usage: dataset [-h] [--folder FOLDER] --name NAME --file INPUT_FILE

[--local_format LOCAL_FORMAT] [--remote_format REMOTE_FORMAT]

optional arguments:

-h, --help show this help message and exit

--folder FOLDER Dataset Folder

--name NAME Dataset name

--file INPUT_FILE CSV or parquet formatted file from which dataset will

be added

--local_format LOCAL_FORMAT

Local file format (auto or csv or parquet or json).

'auto' means format will be determined from filename

extension

--remote_format REMOTE_FORMAT

Remote file format (csv or parquet).

- Following is the syntax for dataset add

Loaded dataset from file. Number of Rows: 100000, Columns: 13

Dataset 'metricsdata' not found in the object storage.

2023-01-05:05:13:37 [1] INFO nats_client Creating new SharedPool ...

2023-01-05:05:13:37 [1] INFO nats_client Initiallzing PubMgr for pid=1

2023-01-05:05:13:37 [1] INFO nats_client Saving NATS certificate to file: /tmp/nats_cert_custom_461dedd908ef2d75e509377dfe35be02_1.pem

Added dataset 'metricsdata'

Sub Command: delete

Description: Delete a dataset from the object store

usage: dataset [-h] --name NAME [--yes]

optional arguments:

-h, --help show this help message and exit

--name NAME Dataset name

--yes Delete without prompting

- Following is the syntax for dataset delete

Confirm deletion of dataset (y/n)? y

2023-01-05:05:21:39 [1] INFO nats_client Creating new SharedPool ...

2023-01-05:05:21:39 [1] INFO nats_client Initiallzing PubMgr for pid=1

2023-01-05:05:21:39 [1] INFO nats_client Saving NATS certificate to file: /tmp/nats_cert_custom_461dedd908ef2d75e509377dfe35be02_1.pem

Sub Command: bounded-list

Description: List bounded datasets from the object store

usage: dataset [-h] [--json]

optional arguments:

-h, --help show this help message and exit

--json Print detailed information in JSON format instead of tabular

format

- Following is the syntax for dataset bounded-list

drafts mem_size_mb name num_columns num_rows saved_time schema

-- -------- ------------- --------- ------------- ---------- -------------------------- ---------

0 [] 0 Schema-QA 6 0 2023-01-05T04:39:16.255705 Schema-QA

1 [] 0 Test 3 0 2023-01-05T04:39:24.660053 Test

Sub Command: bounded-get

Description: Download a bounded dataset from the object store

usage: dataset [-h] --name NAME [--tofile SAVE_TO_FILE] [--format DATA_FORMAT]

[--viz]

optional arguments:

-h, --help show this help message and exit

--name NAME Dataset name

--tofile SAVE_TO_FILE

Save the data to the specified file (CSV or JSON if

--json is specified)

--format DATA_FORMAT Save the downloaded data in the specified format.

Valid values are csv, json, parquet. If format is

'auto', format is determined from extension

--viz Open Dataframe visualizer to show the data

- Following is the syntax for dataset bounded-get

Downloaded bounded dataset. Number of Rows: 0, Columns: 7

[DFViz:1] Rows 0, Cols 7 | View Rows 0-0, Cols: 0-4 | Press 'q' to exit, '?' for help

Data Filter: | Col Filter: | Data Sort: | cfxql:

+--------+--------+--------+--------------+-------+

| text | enum | bool | enum_array | int |

|--------+--------+--------+--------------+-------|

+--------+--------+--------+--------------+-------+

Sub Command: bounded-meta

Description: Download metadata for a bounded dataset from the object store

usage: dataset [-h] --name NAME

optional arguments:

-h, --help show this help message and exit

--name NAME Dataset name

{

"name": "Schema-QA",

"mem_size_mb": 0,

"num_rows": 0,

"drafts": [],

"saved_time": "2023-01-05T04:39:16.255705",

"schema": "Schema-QA",

"num_columns": 6

}

Sub Command: bounded-add

Description: Add a new bounded dataset to the system

usage: dataset [-h] --name NAME --schema SCHEMA_NAME

optional arguments:

-h, --help show this help message and exit

--name NAME Dataset name

--schema SCHEMA_NAME Validate data against given schema. When schema is

given, the dataset is added as 'schema bounded

dataset'.

- Following is the syntax for dataset bounded-add

2023-01-05:04:54:02 [1] INFO nats_client Creating new SharedPool ...

2023-01-05:04:54:02 [1] INFO nats_client Initiallzing PubMgr for pid=1

2023-01-05:04:54:02 [1] INFO nats_client Saving NATS certificate to file: /tmp/nats_cert_custom_461dedd908ef2d75e509377dfe35be02_1.pem

Successfully added bounded dataset Example Schema

Sub Command: bounded-delete

Description: Delete a bounded dataset from the object store

usage: dataset [-h] --name NAME [--yes]

optional arguments:

-h, --help show this help message and exit

--name NAME Dataset name

--yes Delete without prompting

Confirm deletion of dataset (y/n)? y

Successfully deleted all drafts of Example Schema

2023-01-05:04:55:43 [1] INFO nats_client Creating new SharedPool ...

2023-01-05:04:55:43 [1] INFO nats_client Initiallzing PubMgr for pid=1

2023-01-05:04:55:43 [1] INFO nats_client Saving NATS certificate to file: /tmp/nats_cert_custom_461dedd908ef2d75e509377dfe35be02_1.pem

Successfully deleted bounded data set Example Schema

bounded-import

Description: Import the data for a bounded dataset and store it in the object store

usage: dataset [-h] --name NAME --file INPUT_FILE

[--local_format LOCAL_FORMAT] [--yes] [--json]

optional arguments:

-h, --help show this help message and exit

--name NAME Dataset name

--file INPUT_FILE CSV or parquet formatted file from which dataset will

be added

--local_format LOCAL_FORMAT

Local file format (auto or csv or parquet or json).

'auto' means format will be determined from filename

extension

--yes Delete without prompting

--json Print detailed information in JSON format instead of

tabular format

- Following is the syntax for dataset bounded-import

Sub Command: demo

Following are the valid Sub-Commands for the demo

Sub Commands |

Description |

|---|---|

| backup | Export dashboards and all related artifact meta data in to a folder |

| setup | Setup a target system for demo |

| diff | Compare two backup directories |

Demo related commands

Following are valid sub-commands for demo:

backup Export dashboards and all related artifact meta data in to a folder

setup Setup a target system for demo

diff Compare two backup directories

Note

To create demo.tar.gz file from the output folder use the below mentioned commands

Sub Command: backup

Description: Export dashboards and all related artifact meta data in to a folder

usage: demo [-h] --to_dir TO_DIR [--yaml]

optional arguments:

-h, --help show this help message and exit

--to_dir TO_DIR Output directory

--yaml Export in YAML format (default is JSON)

- Following is the syntax for demo backup

Backing up 109 Dashboards

Backing up 16 Dashboard Groups

Backing up 52 Published Pipelines

Backing up 11 Blueprints

Backing up 4 Synthetic Profiles

Backing up 4 Stacks used in Synthetic Profiles

2023-01-05:06:31:18 [1] INFO nats_client Saving NATS certificate to file: /tmp/nats_cert_custom_461dedd908ef2d75e509377dfe35be02_1.pem

Backing up 16 Endpoints

Backing up 9 Mappings

Backing up 98 Persistent Streams

Sub Command: diff

Description: Compare two backup directories

usage: demo [-h] --first FIRST --second SECOND [--details] [--side]

[--no_ident] [--opts OPTIONS]

optional arguments:

-h, --help show this help message and exit

--first FIRST First Directory where demo setup artifacts are stored.

--second SECOND Second Directory where demo setup artifacts are stored.

--details Show detailed diffs between files

--side While Showing diffs use side-by-side format

--no_ident Exclude Identical Objects from output

--opts OPTIONS Comma separated list of artifacts names to restrict: stacks

(st), pstreams (ps), dashboards (d), dashboard_groups

(dg),synthetic_profiles (syn)

- Following is the syntax for demo diff

Dashboard l1-main-app Identical

Dashboard olb-engineering-dashboard_page_Metric Analysis Identical

Dashboard rda-mgmt-page-blueprints Identical

Dashboard olb-business-command-center Identical

Dashboard rda-microservice-traces_page_Healthchecks Identical

Dashboard rda-mgmt-page-stagingarea Identical

Dashboard incident-metrics Identical

Dashboard ss_ch_all_sources Identical

Dashboard alert-incident-summary Identical

Dashboard l2-l3-incidents Identical

Dashboard olb-observability-data_page_Metric Analysis Identical

Dashboard incident-topology Identical

Dashboard olb-bizops-observability_page_Incidents_network Identical

Dashboard ss_ch_pal_page_Analytics Identical

Dashboard olb-bizops-observability Identical

- Following is the syntax No.2 for demo diff

Sub Command: setup

Description: Setup a target system for demo

usage: demo [-h] --dir FOLDER --ip PLATFORM_IP [--port WEBHOOK_PORT]

[--protocol WEBHOOK_PROTO] [--pipelines PIPELINES]

[--blueprints BLUEPRINTS] [--verify_only]

optional arguments:

-h, --help show this help message and exit

--dir FOLDER Directory where demo setup artifacts are stored. Most

contain a settings.json in that folder

--ip PLATFORM_IP Target platform Public IP Address

--port WEBHOOK_PORT Port for webhook server (Default 7443)

--protocol WEBHOOK_PROTO

Protocol for Webhook server (Default https)

--pipelines PIPELINES

Comma seperated list of Pipeline names to deploy them

alone

--blueprints BLUEPRINTS

Comma seperated list of Blueprint names to deploy them

alone

--verify_only Verify Only. Do not push changes to target system

- Following is the syntax for demo setup

WARNING: dashboards directory not found, skipping

WARNING: dashboard_groups directory not found, skipping

WARNING: stacks directory not found, skipping

WARNING: synthetic_profiles directory not found, skipping

WARNING: persistent_streams directory not found, skipping

WARNING: pipelines directory not found, skipping

WARNING: blueprints directory not found, skipping

WARNING: endpoints directory not found, skipping

WARNING: mappings directory not found, skipping

Performing Audit ...

2023-01-05:06:22:58 [1] INFO nats_client Saving NATS certificate to file: /tmp/nats_cert_custom_461dedd908ef2d75e509377dfe35be02_1.pem

Skipping audit for pstreams

Skipping audit for dashboards

Skipping audit for Dashboard Groups

Skipping audit for Stacks

Skipping audit for Synthetic Profiles

Skipping audit for Publish Pipelines

Skipping audit for Blueprints

Everyhing configured (Total Checks 2)

Sub Command: bundle-deploy

optional arguments:

-h, --help show this help message and exit

--file BUNDLE_FILE Bundle file to be deployed. Must be in .tar.gz format

--type TYPE Deploy only specified types. Comma separated list. (d:

dashboard, b: blueprint, p: pipeline)

--compare Compare bundle vs currently deployed. Do not deploy any

artifact

Deployed following artifacts from this bundle:

type name

-- -------- ---------------------------------------------------

0 pipeline vmware_vcenter_inventory_pipeline_v1_c1

1 pipeline vmware_vcenter_inventory_topology_pipeline_v1_c2

2 pipeline windows_host_os_system_inventory_and_topology_v1_c1

3 pipeline irm-stream-missing-columns-update

4 pipeline netapp_cmode_storage_arrays_inventory_v1

5 pipeline vmware_vcenter_inventory_pipeline_v1_c2

6 pipeline vmware_vcenter_inventory_topology_pipeline_v1_c1

7 pipeline linux_host_os_system_inventory_and_topology_v1_c2

8 pipeline linux_host_os_system_inventory_and_topology_v1_c3

9 pipeline oia-sources-streams-merge

10 pipeline netapp_storage_array_topology_pipeline_v1

11 pipeline kubernetes_cluster_inventory_pipeline_v1

12 pipeline cisco_ucs_cimc_inventory_v1

13 pipeline linux_host_os_system_inventory_and_topology_v1_c1

14 pipeline windows_host_os_system_inventory_and_topology_v1_c2

15 pipeline kubernetes_cluster_topology_pipeline_v1

16 pipeline cisco-ucsm-infra-topology-pipeline-v1

17 pipeline NetApp_7Mode_inventroy

Note

Similar to the pipeline bundle deployment in the example shown above, We can deploy for Dashboards and Service Blueprints

Sub Command: deployment

Following are the valid Sub-Commands for the deployment

Sub Commands |

Description |

|---|---|

| activity | List recent deployment activities |

| status | Display status of all deployments |

| audit-report | Display Audit report for a given deployment ID |

| add | Add a new Deployment to the repository. Deployment specification must be in valid YML format |

| enable | Enable an existing deployment if it is not already enabled |

| disable | Disable an existing deployment if it is not already disabled |

| delete | Delete an existing deployment from repository |

| dependencies | List all artifact dependencies used by the deployment |

| svcs-status | List current status of all service pipelines in a deployment |

| map | Print service map information in JSON format for the given deployment |

Following are valid sub-commands for deployment:

activity List recent deployment activities

status Display status of all deployments

audit-report Display Audit report for a given deployment ID

add Add a new Deployment to the repository. Deployment specification must be in valid YML format

enable Enable an existing deployment if it is not already enabled

disable Disable an existing deployment if it is not already disabled

delete Delete an existing deployment from repository

dependencies List all artifact dependencies used by the deployment

svcs-status List current status of all service pipelines in a deployment

map Print service map information in JSON format for the given deployment

Sub Command: activity

Description: List recent deployment activities

usage: deployment [-h] [--json]

optional arguments:

-h, --help show this help message and exit

--json Print detailed information in JSON format instead of tabular

format

- Following is the syntax for deployment activity

timestamp severity message

-- -------------------------- ---------- ----------------------------------------------------------------------------------------------------------------------------------------

0 2022-12-21T09:05:13.943451 WARNING Re-Created Job: f5cc308e6d014173ac2a7893b1bae564 for Deployment Name: Demo_vmware_service_blueprint, Id: b744c8c2. Restart Count: 213

1 2022-12-21T09:05:13.301284 WARNING Re-Created Job: 19c5ad1f4b5442a6a662bfd0493cc3db for Deployment Name: Service Action schedule pipeline, Id: b744c8c0. Restart Count: 213

2 2022-12-21T09:05:12.817716 WARNING Re-Created Job: 8cc74ba0d633413faea5230aa2590d41 for Deployment Name: Blueprint_18_11_2022, Id: b744c873. Restart Count: 213

3 2022-12-21T09:04:02.257588 WARNING Re-Created Job: 4d2b3770013b462db8cbcc421084a057 for Deployment Name: Demo_vmware_service_blueprint, Id: b744c8c2. Restart Count: 212

4 2022-12-21T09:04:01.482574 WARNING Re-Created Job: e37777adca0a4a3fb6eeb431e0b9acca for Deployment Name: Service Action schedule pipeline, Id: b744c8c0. Restart Count: 212

5 2022-12-21T09:04:00.992727 WARNING Re-Created Job: b792ae4110a6469883abcdf709692dee for Deployment Name: Blueprint_18_11_2022, Id: b744c873. Restart Count: 212

6 2022-12-21T09:02:50.307263 WARNING Re-Created Job: f9e9a10bf1dd46e8a14049d0ac26a87a for Deployment Name: Demo_vmware_service_blueprint, Id: b744c8c2. Restart Count: 211

Sub Command: status

Description: Display status of all deployments

usage: deployment [-h] [--json]

optional arguments:

-h, --help show this help message and exit

--json Print detailed information in JSON format instead of tabular

format

- Following is the syntax for deployment status

id category name description enabled errors warnings

-- -------------------------------------- -------------------------------------- -------------------------------------- ---------------------------------------------------------------------------------------------- --------- -------- ----------

0 41e33973 ITSM eBonding eBond ServiceNow incidents to PagerDuty, Twilio-SMS, Elasticsearch/Kibana, Slack, Email no 0 0

1 81a1a030 ITOM ML-Experiments ML Experiments yes 0 0

2 81a1a2202 ITOM OIA Ops Intelligence & Analytics yes 0 0

3 81a1a2203 ITOM Stacks Stacks yes 0 0

4 V2tdZpEs Dependency Mapping AWS Dependency Mapper AWS Dependency mapping with updates every hour yes 2 0

5 b744c873 Service Action schedule pipeline Blueprint_18_11_2022 Service Action schedule pipeline example yes 0 2

6 b744c8c0 Service Action schedule pipeline Service Action schedule pipeline Service Action schedule pipeline example yes 0 1

7 b744c8c1 Service Action schedule pipeline Blueprint_16_11_2022 Service Action schedule pipeline example no 0 0

8 b744c8c2 vmware schedule pipeline Demo_vmware_service_blueprint vmware schedule pipeline yes 0 0

9 exec_dashboard_kpi_metrics_querystream exec_dashboard_kpi_metrics_querystream exec_dashboard_kpi_metrics_querystream exec_dashboard_kpi_metrics_querystream yes 0 0

10 guide001 Log Analytics Beginner Guide Blueprint Generate Synthetic Syslogs, Save all logs to Log Archive, send processed logs to a NULL stream yes 0 0

Sub Command: audit-report

Description: Display Audit report for a given deployment ID

usage: deployment [-h] --id DEPLOYMENT_ID [--json]

optional arguments:

-h, --help show this help message and exit

--id DEPLOYMENT_ID Deployment ID

--json Print detailed information in JSON format instead of

tabular format

- Following is the syntax for deployment audit-report

type severity message

-- ------------------------ ---------- -------------------------------------------------------------------------------------------

0 Verify Pipeline INFO Pipeline with name 'aws-dependency-mapper' and version '22_02_16_1' loaded

1 Verify Pipeline INFO Pipeline with name 'aws-dependency-mapper-inner-pipeline' and version '2022_02_16_1' loaded

2 Verify Pipeline ERROR No published versions found for pipline: aws-dependency-mapper2

3 Verify Site ERROR No sites matched the regex 'cfx.*' or no active workers found

4 Verify Source INFO Credential found for Integration: aws, Type: aws_v2

5 Verify Persistent Stream INFO PStream rda_worker_resource_usage found

6 Verify Persistent Stream INFO PStream rda_system_worker_trace_summary found

7 Verify Persistent Stream INFO PStream rda_worker_resource_usage found

8 Verify Persistent Stream INFO PStream rda_system_worker_trace_summary found

9 Verify Persistent Stream INFO PStream rda_system_deployment_updates found

10 Verify Persistent Stream INFO PStream rda_system_gw_endpoint_metrics found

Sub Command: add

Description: Add a new Deployment to the repository. Deployment specification must be in valid YML format

usage: deployment [-h] --file INPUT_FILE [--overwrite]

optional arguments:

-h, --help show this help message and exit

--file INPUT_FILE YAML file containing Deployment specification

--overwrite Overwrite even if a ruleset already exists with a name.

- Following is the syntax for deployment add

- sample blueprint.yml file

cat > blueprint.yml << 'EOF'

name: Blueprint_Example

id: b744c873a

version: '2022_12_19_01'

category: Service Action schedule pipeline

comment: Service Action schedule pipeline example

enabled: true

type: Service

auto_deploy: false

provider: CloudFabrix Software Inc.

attrs: {}

service_pipelines:

- name: Service_Pipeline

label: Service Pipelines

version: '*'

site: rda-site-01

site_type: regex

instances: 1

scaling_policy:

min_instances: 1

max_instances: 1

action_pipelines:

- name: Action_Pipeline

label: Action Pipelines

version: '*'

site: rda-site-01

site_type: regex

instances: 1

scaling_policy:

min_instances: 1

max_instances: 1

scheduled_pipelines:

- name: schedule_pipeline

label: Scheduled Pipelines

version: '*'

site: rda-site-01

cron_expression: '*/5 * * * *'

site_type: regex

instances: 1

scaling_policy:

min_instances: 1

max_instances: 1

EOF

Sub Command: enable

Description: Enable an existing deployment if it is not already enabled

Usage: deployment-enable [-h] --id DEP_ID

optional arguments:

-h, --help show this help message and exit

--id DEP_ID Deployment ID

- Following is the syntax for deployment enable

Sub Command: disable

Description: Disable an existing deployment if it is not already disabled

Usage: deployment-disable [-h] --id DEP_ID

optional arguments:

-h, --help show this help message and exit

--id DEP_ID Deployment ID

- Following is the syntax for deployment disable

Sub Command: delete

Description: Delete an existing deployment from repository

usage: deployment [-h] --id DEP_ID

optional arguments:

-h, --help show this help message and exit

--id DEP_ID Deployment ID

- Following is the syntax for deployment delete

2022-12-22:08:49:02 [1] INFO nats_client Creating new SharedPool ...

2022-12-22:08:49:02 [1] INFO nats_client Initiallzing PubMgr for pid=1

2022-12-22:08:49:02 [1] INFO nats_client Saving NATS certificate to file: /tmp/nats_cert_custom_461dedd908ef2d75e509377dfe35be02_1.pem

Deleted deployment with ID ID: 44de62c6

Sub Command: dependencies

Description: List all artifact dependencies used by the deployment

Usage: deployment-dependencies [-h] --id DEPLOYMENT_ID [--json]

optional arguments:

-h, --help show this help message and exit

--id DEPLOYMENT_ID Deployment ID

--json Print detailed information in JSON format instead of

tabular format

- Following is the syntax for deployment dependencies

type name read write

-- -------- ------------------------------------ ------ -------

0 pipeline aws-dependency-mapper True False

1 pipeline aws-dependency-mapper-inner-pipeline True False

2 dataset cfx-aws-ec2-instances True True

3 dataset cfx-aws-ec2-instance-types True True

4 dataset cfx-aws-ec2-volumes True True

5 dataset cfx-aws-ec2-vpcs True True

6 dataset cfx-aws-ec2-efs-filesystems True True

7 dataset cfx-aws-ec2-security-groups True True

8 dataset cfx-aws-ec2-subnets True True

9 dataset cfx-aws-ec2-internet-gateways True True

10 dataset cfx-aws-ec2-security-group-nodes True True

Sub Command: svcs-status

Description: List current status of all service pipelines in a deployment

usage: deployment [-h] --id DEPLOYMENT_ID [--json]

optional arguments:

-h, --help show this help message and exit

--id DEPLOYMENT_ID Deployment ID

--json Print detailed information in JSON format instead of

tabular format

- Following is the syntax for deployment svcs-status

label pipeline_name version min_instances max_instances instances num_jobs

-- ------------------------------------------------------------ ------------------------------------------ --------- --------------- --------------- ----------- ----------

0 Read incidents from ServiceNow write to stream ebonding-servicenow-to-stream-v2 * 1 4 1 0

1 Read incidents from stream and write to Elasticsearch/Kibana ebonding-stream-to-elasticsearch-kibana-v2 * 0 4 1 0

2 Read incidents from stream and write to Twilio-SMS ebonding-stream-to-twilio-sms-v2 * 0 4 1 0

3 Read incidents from stream and write to PagerDuty ebonding-stream-to-pagerduty * 0 4 1 0

4 Read incidents from stream and write to Slack ebonding-stream-to-slack * 0 4 1 0

5 Read incidents from stream and write to Email ebonding-stream-to-email * 0 4 1 0

Sub Command: map

Description: Print service map information in JSON format for the given deployment

Usage: deployment-map [-h] --id DEPLOYMENT_ID

optional arguments:

-h, --help show this help message and exit

--id DEPLOYMENT_ID Deployment ID

- Following is the syntax for deployment map

{

"status": "ok",

"reason": "",

"data": {

"stack": {

"name": "eBonding",

"description": "Service map for blueprint: eBonding",

"nodes": [

{

"node_id": "rda-network-stream-ebonding-analytics",

"node_type": "rda-network-stream",

"layer": "RDA Stream",

"iconURL": "Stream",

"node_label": "ebonding-analytics"

},

{

"node_id": "pstream-ebonding-analytics",

"node_type": "Persistent Stream",

"node_label": "ebonding-analytics",

"layer": "Persistent Stream",

"iconURL": "Persistent_Stream"

},

{

"node_id": "rda-network-stream-rda_worker_resource_usage",

"node_type": "rda-network-stream",

"layer": "RDA Stream",

"iconURL": "Stream",

"node_label": "rda_worker_resource_usage",

"defaultVisibility": "hidden"

},

{

"node_id": "pstream-rda_worker_resource_usage",

"node_type": "Persistent Stream",

"node_label": "rda_worker_resource_usage",

"layer": "Persistent Stream",

"iconURL": "Persistent_Stream",

"defaultVisibility": "hidden"

},

{

"node_id": "rda-network-stream-rda_system_worker_trace_summary",

"node_type": "rda-network-stream",

"layer": "RDA Stream",

"iconURL": "Stream",

"node_label": "rda_system_worker_trace_summary",

"defaultVisibility": "hidden"

},

{

"node_id": "pstream-rda_system_worker_trace_summary",

"node_type": "Persistent Stream",

"node_label": "rda_system_worker_trace_summary",

"layer": "Persistent Stream",

"iconURL": "Persistent_Stream",

"defaultVisibility": "hidden"

},

{

"node_id": "rda-network-stream-rda_system_deployment_updates",

"node_type": "rda-network-stream",

"layer": "RDA Stream",

"iconURL": "Stream",

"node_label": "rda_system_deployment_updates",

"defaultVisibility": "hidden"

},

{

"node_id": "pstream-rda_system_deployment_updates",

"node_type": "Persistent Stream",

"node_label": "rda_system_deployment_updates",

"layer": "Persistent Stream",

"iconURL": "Persistent_Stream",

"defaultVisibility": "hidden"

}

],

"relationships": [

{

"left_id": "rda-network-stream-ebonding-analytics",

"right_id": "pstream-ebonding-analytics",

"description": "Stream persistence",

"relationship_type": "uses"

},

{

"left_id": "rda-network-stream-rda_worker_resource_usage",

"right_id": "pstream-rda_worker_resource_usage",

"description": "Stream persistence",

"relationship_type": "uses"

},

{

"left_id": "rda-network-stream-rda_system_worker_trace_summary",

"right_id": "pstream-rda_system_worker_trace_summary",

"description": "Stream persistence",

"relationship_type": "uses"

},

{

"left_id": "rda-network-stream-rda_worker_resource_usage",

"right_id": "pstream-rda_worker_resource_usage",

"description": "Stream persistence",

"relationship_type": "uses"

},

{

"left_id": "rda-network-stream-rda_system_worker_trace_summary",

"right_id": "pstream-rda_system_worker_trace_summary",

"description": "Stream persistence",

"relationship_type": "uses"

},

{

"left_id": "rda-network-stream-rda_system_deployment_updates",

"right_id": "pstream-rda_system_deployment_updates",

"description": "Stream persistence",

"relationship_type": "uses"

}

]

}

}

}

Sub Command: event-gw-status

Description: List status of all ingestion endpoints at all the event gateways

usage: rdac [-h] [--json]

optional arguments:

-h, --help show this help message and exit

--json Print detailed information in JSON format instead of tabular

format

Sub Command: evict

Description: Evict a job from a worker pod

Usage: evict [-h] --jobid JOBID [--yes]

optional arguments:

-h, --help show this help message and exit

--jobid JOBID RDA worker jobid. If partial must match only one job.

--yes Do not prompt for confirmation, evict if job is found

Sub Command: file-ops

Description: Perform various operations on local files

Usage: file-ops copy Copy dataframe from one format to another. Format is inferred from extension. Examples are csv, parquet, json

csv-to-parquet Copy data from CSV to parquet file using chunking

test-formats Run performance test on various formats

positional arguments:

subcommand File ops sub-command

optional arguments:

-h, --help show this help message and exit

Sub Command: file-to-object

Description: Convert files from a column into objects

Usage: file-to-object [-h] --inpcol INPUT_FILENAME_COLUMN --outcol OUTPUT_COLUMN --file

INPUT_FILE --outfolder OUTPUT_FOLDER --outfile OUTPUT_FILE

optional arguments:

-h, --help show this help message and exit

--inpcol INPUT_FILENAME_COLUMN

Name of the column in input that contains the

filenames

--outcol OUTPUT_COLUMN

Column name where object names will be inserted

--file INPUT_FILE Input csv filename

--outfolder OUTPUT_FOLDER

Folder name where objects will be stored

--outfile OUTPUT_FILE

Name of output csv file that has object location

stored

Sub Command: fmt-template-delete

Description: Delete Formatting Template

Usage: fmt-template-delete [-h] --name NAME

optional arguments:

-h, --help show this help message and exit