cfxOIA: Operations Intelligence & Analytics

1. What is Operations Intelligence & Analytics

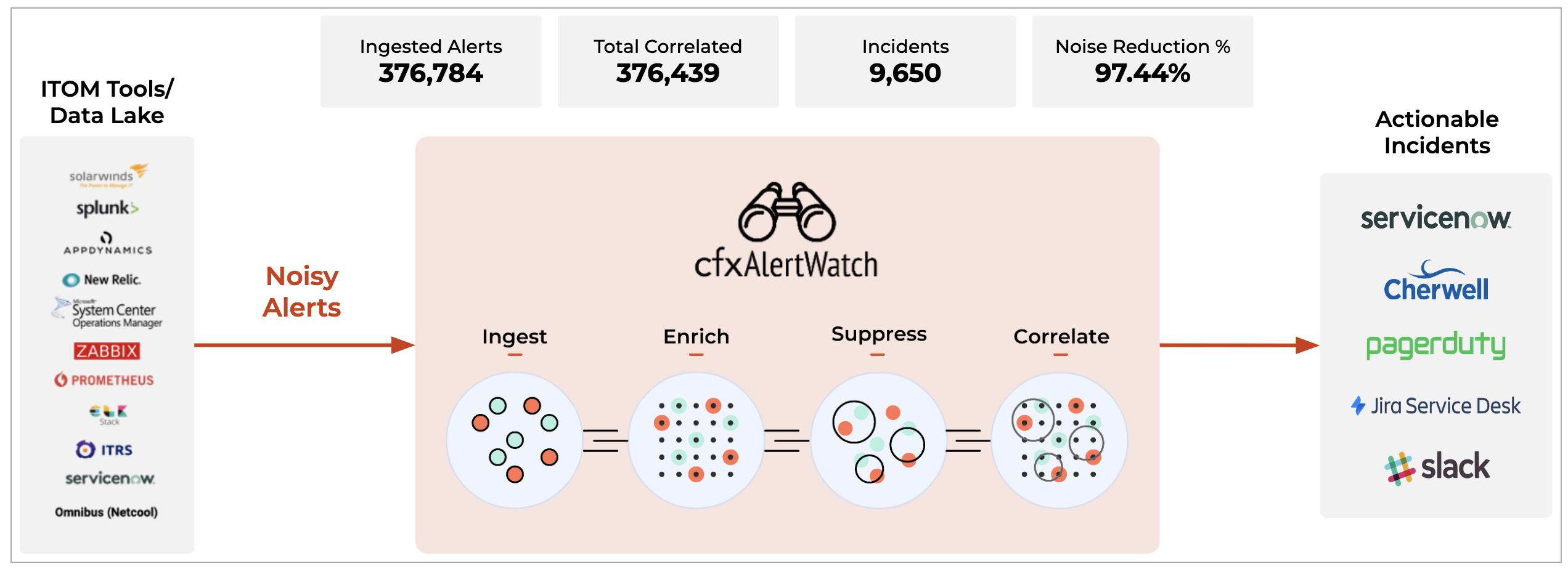

The CloudFabrix AIOps solution is called Operations Intelligence & Analytics (cfxOIA). It provides domain-agnostic AIOps capabilities that bring algorithmic decisions to IT operations from several disparate monitoring and other operational data sources. cfxOIA (or OIA) is a software solution that runs as a distributed application built on a microservices and containers architecture. OIA is available as an enterprise offering for on-premise or cloud deployment. OIA is also offered as a fully managed SaaS by CloudFabrix or its partners.

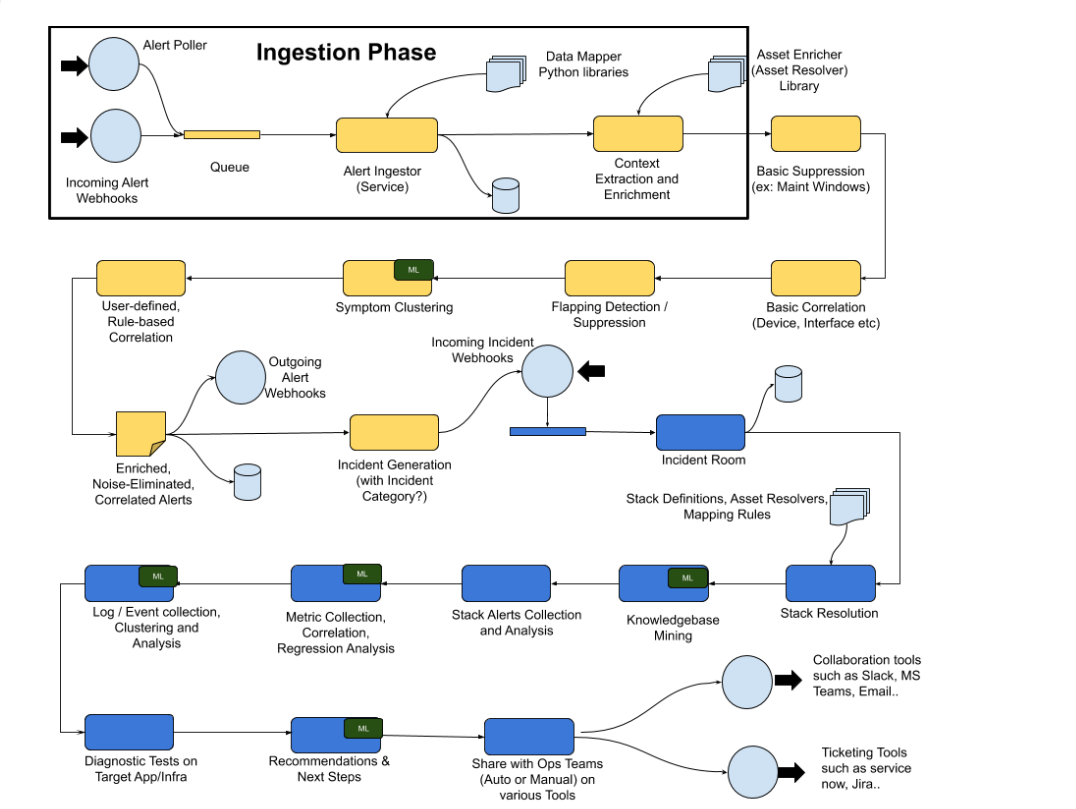

2. How it works

cfxOIA works by ingesting IT operational data, such as alerts, events, and traces from multiple performance monitoring tools, log-based alerts from log monitoring tools, and observational data from data lakes, and then performing algorithmic correlation of alerts to reduce noise. OIA normalizes every alert with enrichment data established by stitching together CMDB data, service mappings, and asset management data, deriving context-rich data for every alert that is ingested into the platform.

cfxOIA then correlates alerts based on the enriched data. OIA's machine learning engine identifies symptomatic patterns in alert data. These patterns are then provided as recommendations to AIOps administrators, who can consider grouping or deduplicating future alerts that match those symptoms. Admins can create additional correlation policies to tune algorithmic correlation behavior, for example to group alerts across the entire application stack, within a time window, or in an infrastructure layer.

cfxOIA has an out-of-box implementation to correlate well-known operational issues related to alert burst scenarios, alert flapping situations, and transient alerts. The correlation engine allows the admin to implement event correlation for any type of situation: the majority of patterns are detected with unsupervised machine learning, and admin-configurable policies provide additional flexibility to tune correlation behavior. Alerts that are correlated are called Alert Groups, and the policies are called Correlation Policies.

Deduplicated and correlated alerts are grouped in an Alert Group that indicates an active operational issue or an OIA Incident. Every Alert Group has one OIA Incident, which is sent to the ITSM systems (such as ServiceNow, PagerDuty, etc.) and to the OIA Incident Room for further incident processing.

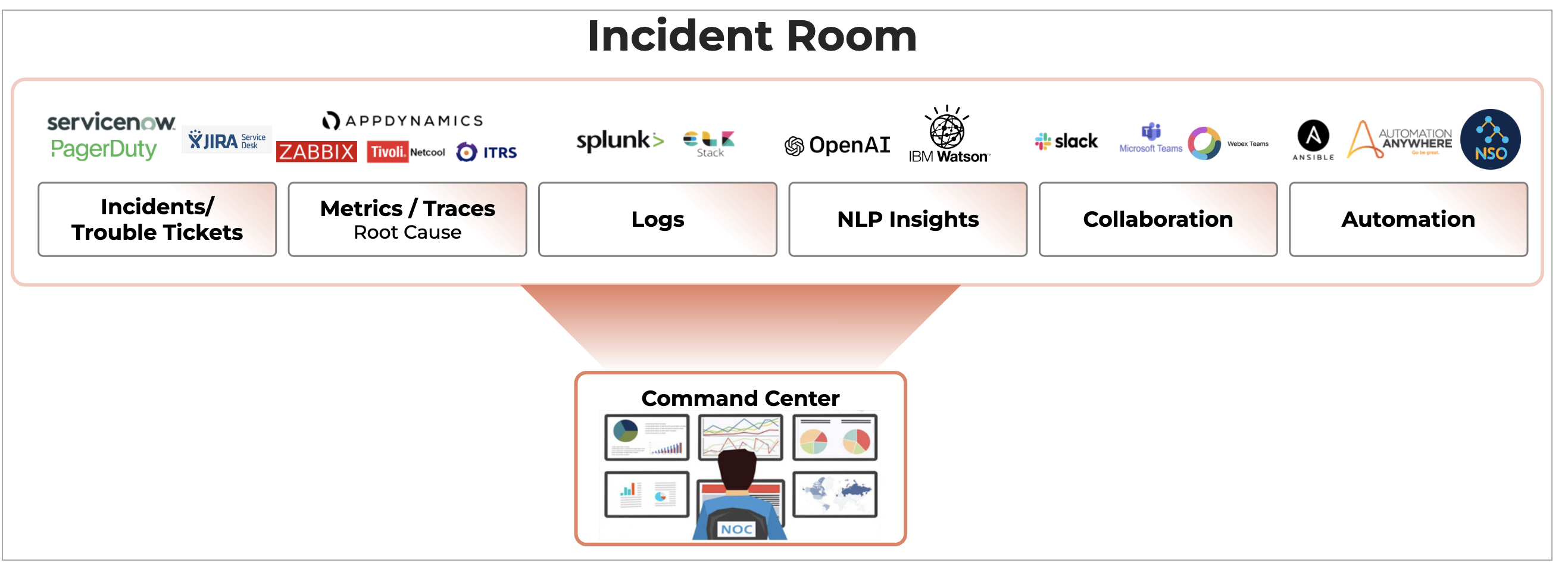

Incident Room is a dynamic, incident-centric workbench that provides triage data, operational metrics, KPIs, logs, impacted asset context, collaboration, and diagnostic tools all in one place, so that operators can swiftly perform incident root cause analysis and service restoration. This helps reduce incident MTTR.

3. Deployment

cfxOIA is an application that is installed on top of RDA Fabric platform.

Please refer to Setup, Install and Upgrade of OIA Application Services.

4. Data Ingestion & Integrations

cfxOIA operates on IT operational data such as alerts, events, traces, and metrics, most of which are generated by monitoring tools and in some cases replicated in an aggregate data lake. OIA supports integrations with many featured vendors using Webhooks, APIs, Kafka messages, etc. Custom integrations can be developed and supported by CloudFabrix professional services or partners using CloudFabrix-provided developer SDKs.

4.1 Alert Ingestion in RDA AlertWatch Module

Tip

Some screenshots need zooming. Click a screenshot to enlarge it, and use the back arrow button at the top left to return to the page.

4.1.1 High Level Flow Diagram

The following broad steps are essentially needed to ingest and process events (alerts / incidents / messages).

1. Add a source endpoint that creates a sink for posting events from the source. For example, to consume alerts from AppDynamics, add a webhook source endpoint.

2. Enable the endpoint to capture initial events. These events are not processed yet but will be recorded in event tracking. The raw event payload can be downloaded from the event tracking report.

3. Add a mapping rule to transform the raw event payload to an internal event model. Use the downloaded event payload as input to test the mapping rule and evaluate the internal event generated via the mapping.

4. Enable the endpoint to process incoming events from source

4.1.2 Event Ingestion

-

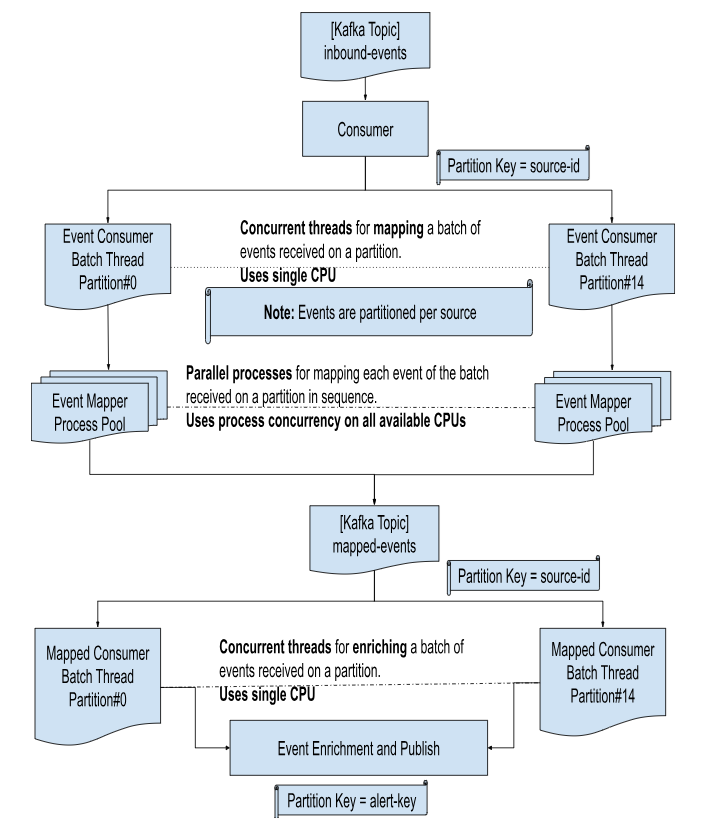

Events ingested from sources like webhooks, SMTP and various other supported consumers are published to Kafka topic inbound-events. The publisher uses the ID of the source, where the event is received; as a partition-key for the Kafka topic

-

A Kafka consumer subscribes for receiving events published on topic inbound-events in batches of maximum count of 500 within a maximum batch interval of 5 seconds

-

A batch of inbound events per partition is processed in a concurrent thread

-

The batch of events is sent to parallel mapper process-pool that is forked for that partition

-

The results of mapping are collected sequentially, thereby retaining the order of events; and published to Kafka topic mapped-events. The publisher again uses the ID of the source, where the event is received; as a partition-key for the Kafka topic

-

A Kafka consumer subscribes for receiving events published on topic mapped-events in batches of a maximum count of 1000 within a maximum batch interval of 5 seconds

-

A batch of mapped events per partition is enriched in a concurrent thread

-

The batch is enriched using the configured ingestion pipeline

-

A batch of mapped events is first converted into multiple contiguous batches that use the same pipeline. So if a batch has say 5 events for pipeline-A, followed by 2 events of pipeline-B and followed by 5 events of pipeline-A again, then the contiguous batches will be as shown below

-

This batching mechanism ensures that the sequence of events is maintained during bulk actions. Each batch is then executed against its configured pipeline to run the enrichment steps.

-

The batch execution of events against each pipeline step, maximizes the usage of resources. Also reduces the time taken for events to go through the pipeline steps.

-

Each step of the pipeline, tracked as part of event trail; receives the batch as input and enriches each event in the batch with enriched attributes

-

The batch of enriched events (alerts/incidents/messages) is published for alert or incident processing
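The count and interval limits on the Kafka consumers can be illustrated with a minimal Python sketch. It is not the OIA consumer: `batch_events` is a hypothetical helper, arrival times are passed in explicitly so the example is deterministic, and a batch is only flushed when the next event arrives (a real consumer would also flush on a timer).

```python
def batch_events(timed_events, max_count=500, max_interval=5.0):
    """Yield batches of events, closing a batch when it is full or too old.

    timed_events: iterable of (arrival_seconds, event) in arrival order.
    """
    batch, batch_start = [], None
    for arrival, event in timed_events:
        # Close the current batch if it reached the count or interval limit.
        if batch and (len(batch) >= max_count or arrival - batch_start >= max_interval):
            yield batch
            batch, batch_start = [], None
        if batch_start is None:
            batch_start = arrival
        batch.append(event)
    if batch:
        yield batch


# A burst of 1200 events within about one second is split by count.
burst = [(i * 0.001, f"event-{i}") for i in range(1200)]
print([len(b) for b in batch_events(burst)])  # [500, 500, 200]

# Slow traffic is split by the 5 second interval instead.
slow = [(0.0, "a"), (1.0, "b"), (7.0, "c")]
print([len(b) for b in batch_events(slow)])   # [2, 1]
```

The same helper with `max_count=1000` would model the limit described for the mapped-events consumer.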

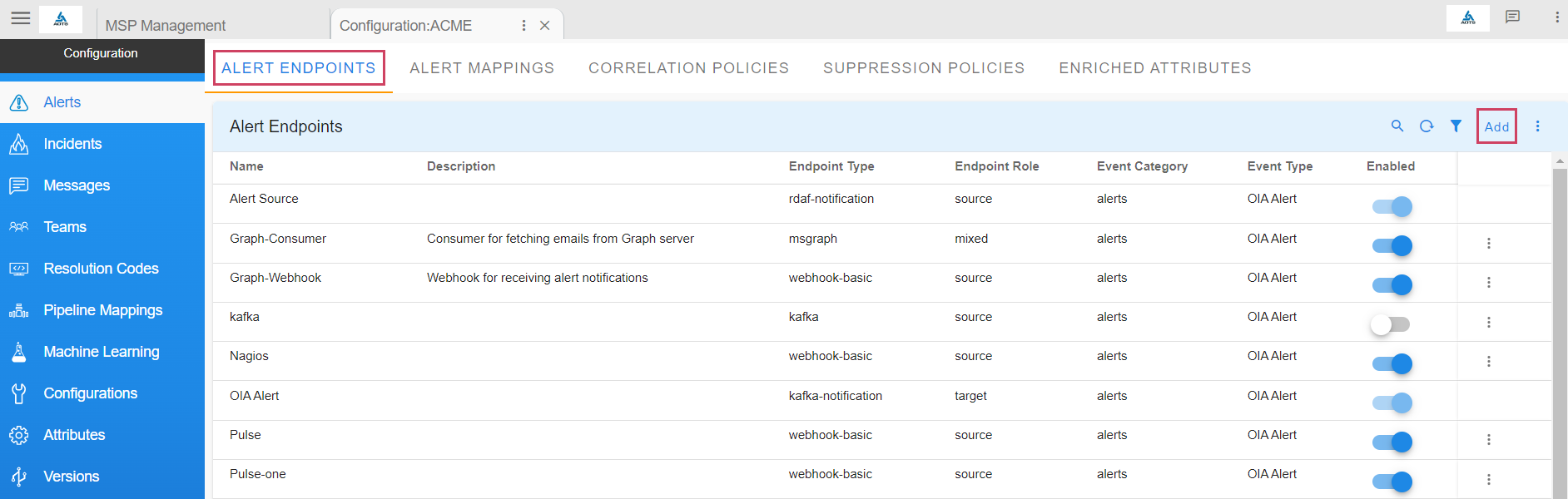

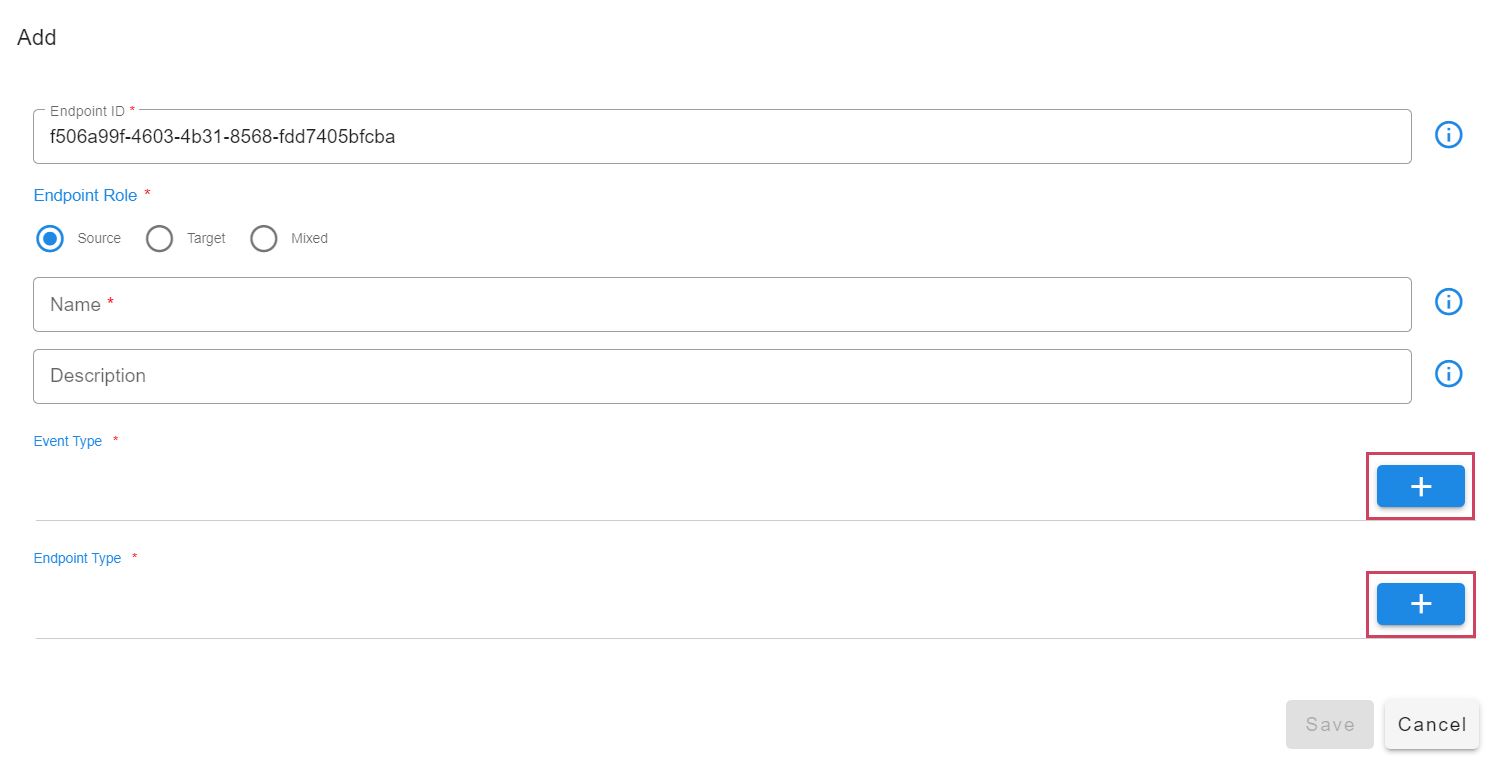
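The contiguous batching described above can be sketched in Python with `itertools.groupby`, which groups only consecutive items that share a key. This is an illustration under assumptions, not the OIA implementation: the `pipeline` field name and the event dictionaries are hypothetical.

```python
from itertools import groupby


def contiguous_batches(events):
    """Split an ordered batch into runs of consecutive events on the same pipeline."""
    return [
        (pipeline, list(run))
        for pipeline, run in groupby(events, key=lambda event: event["pipeline"])
    ]


# 5 events for pipeline-A, 2 for pipeline-B, then 5 more for pipeline-A.
events = (
    [{"id": i, "pipeline": "pipeline-A"} for i in range(5)]
    + [{"id": i, "pipeline": "pipeline-B"} for i in range(5, 7)]
    + [{"id": i, "pipeline": "pipeline-A"} for i in range(7, 12)]
)

batches = contiguous_batches(events)
summary = [(pipeline, len(run)) for pipeline, run in batches]
print(summary)  # [('pipeline-A', 5), ('pipeline-B', 2), ('pipeline-A', 5)]
```

Because the two pipeline-A runs are not merged, the original order of events is preserved when the batches are processed one after another.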

4.1.3 Source Event Endpoint

For ingesting events from a source, we need to add a Source endpoint.

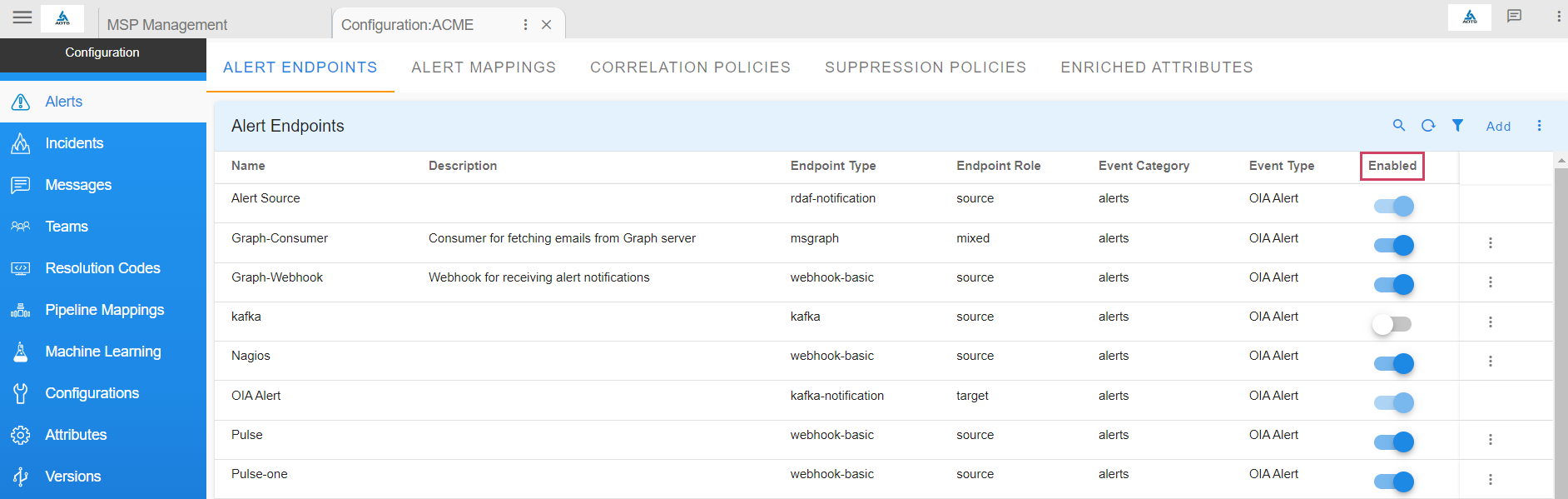

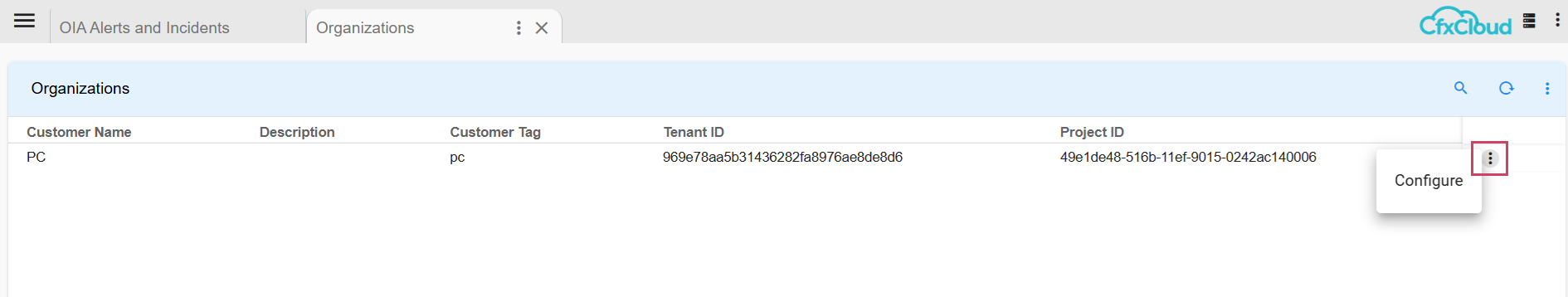

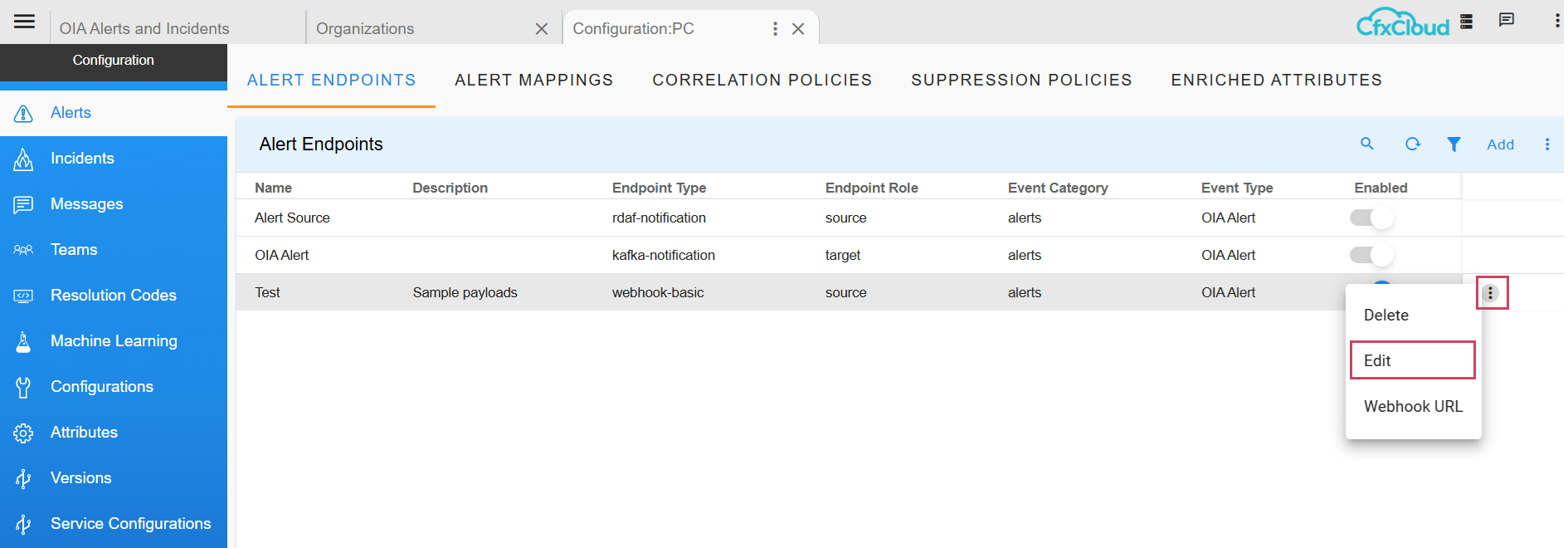

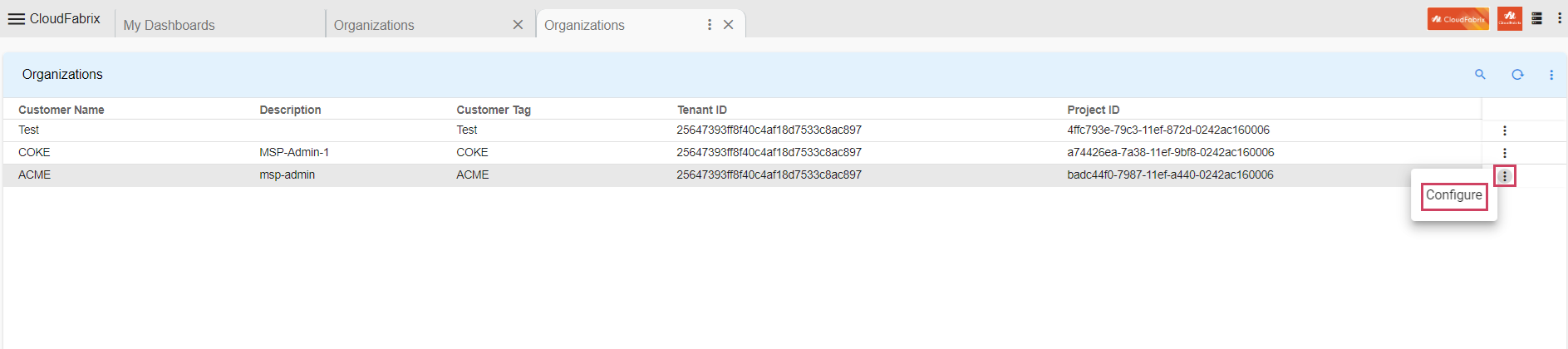

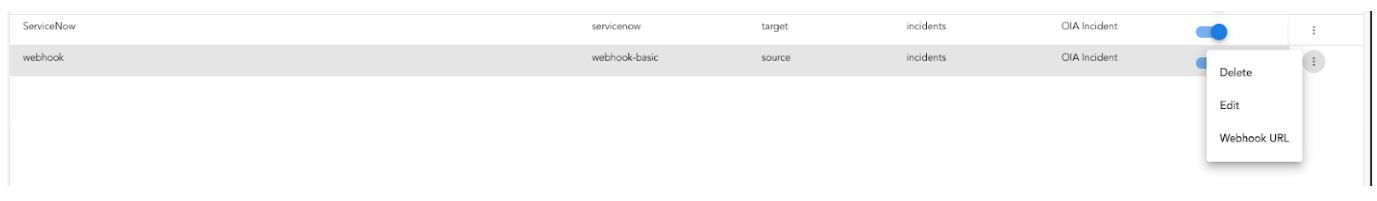

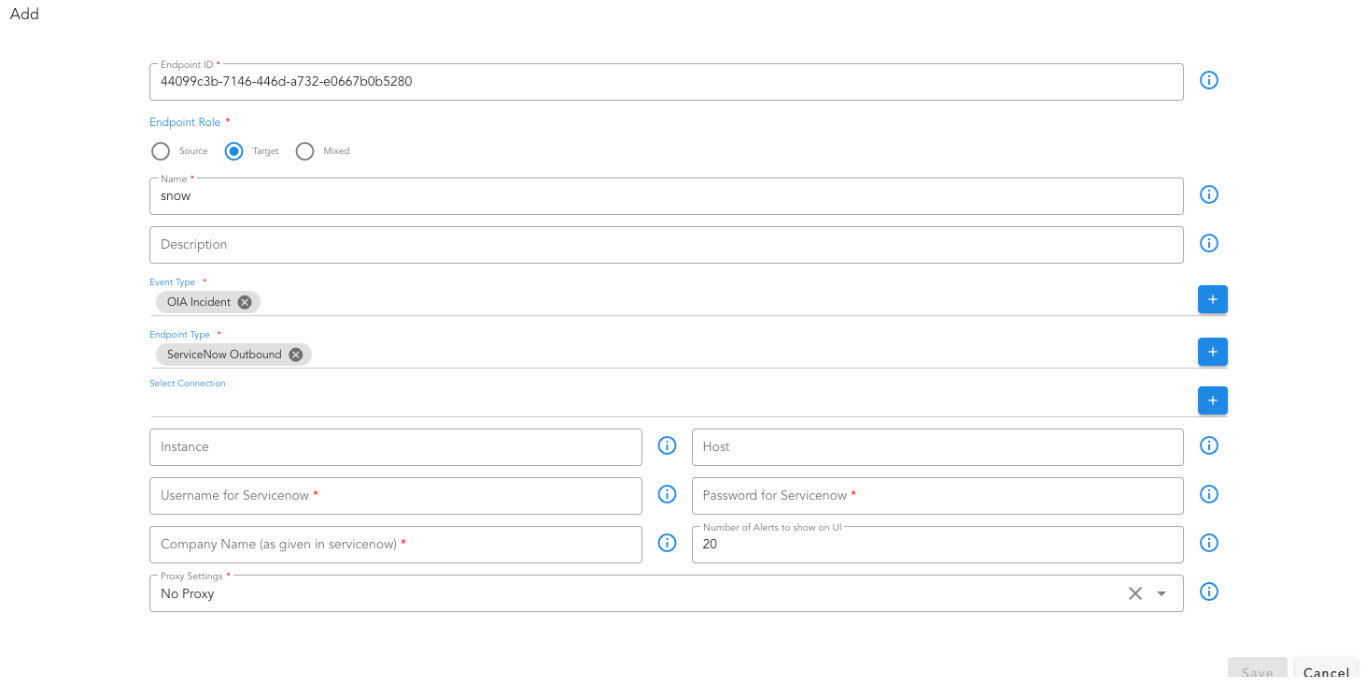

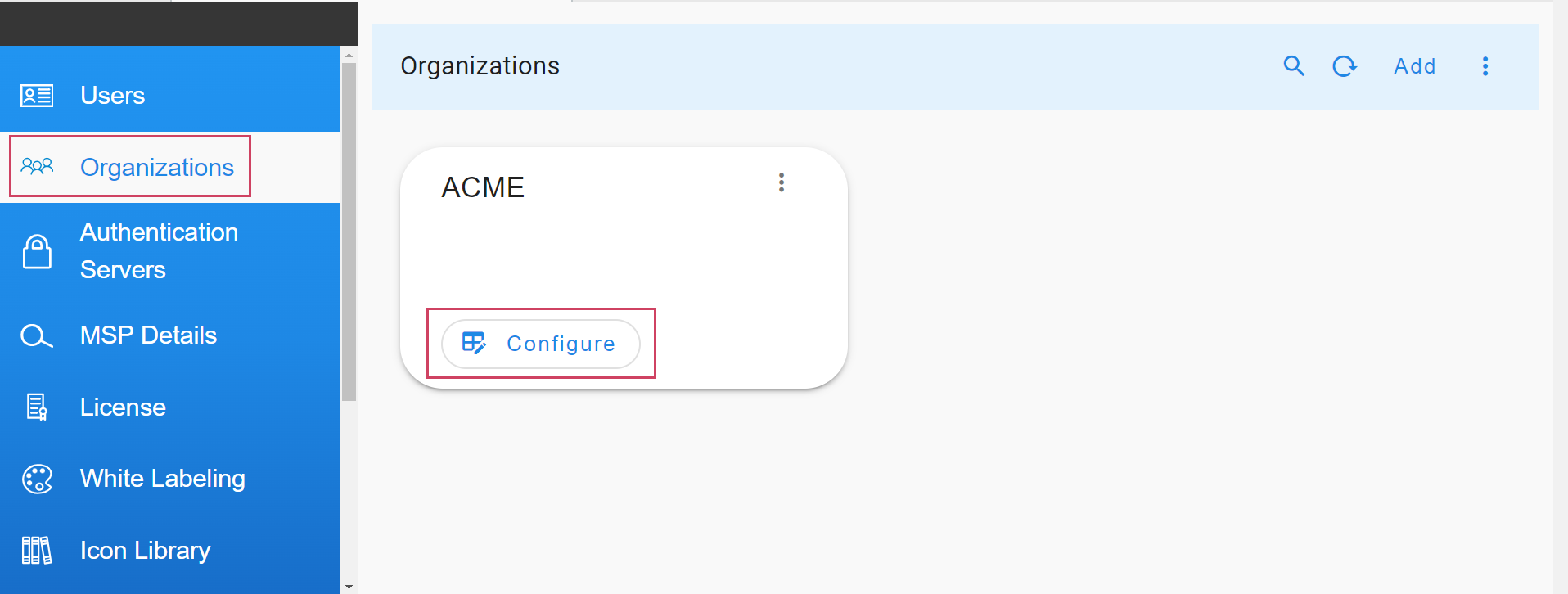

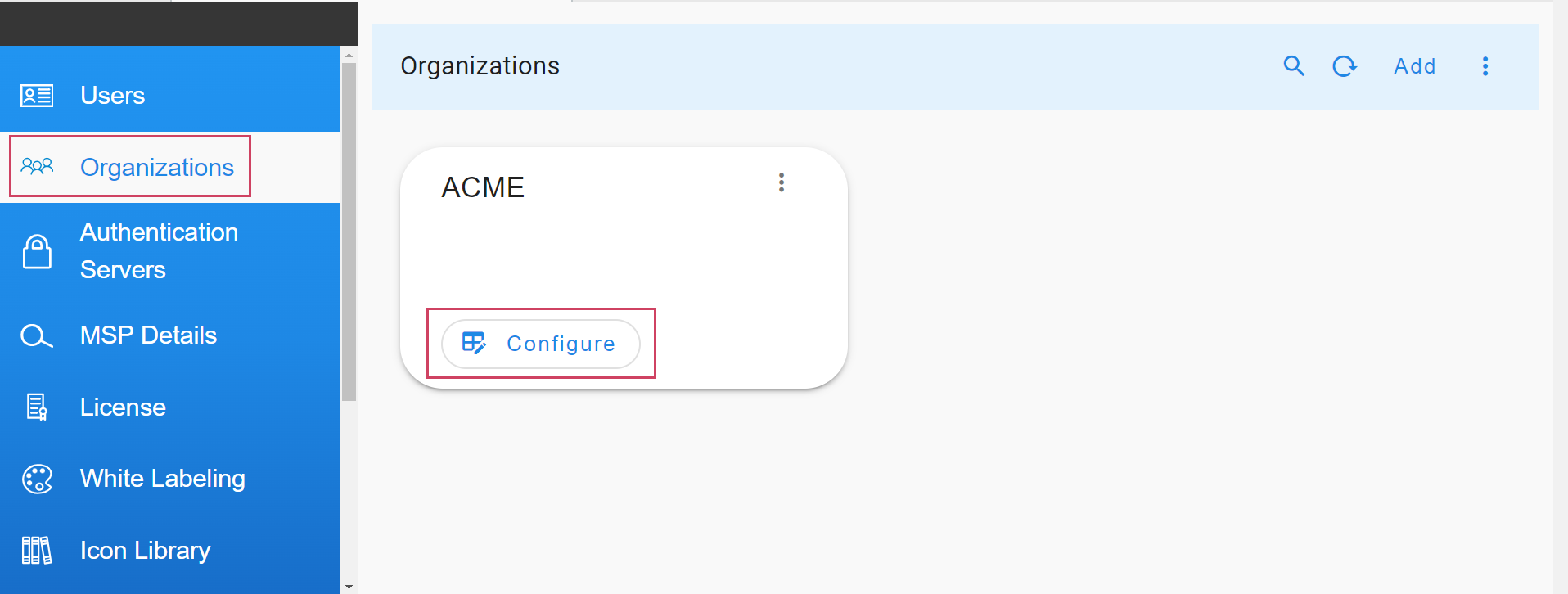

Go to Home --> Administration --> Organizations --> Configure --> ALERT ENDPOINTS --> Click Add

- Navigate to the appropriate section based on the type of the incoming event - alert or incident or message.

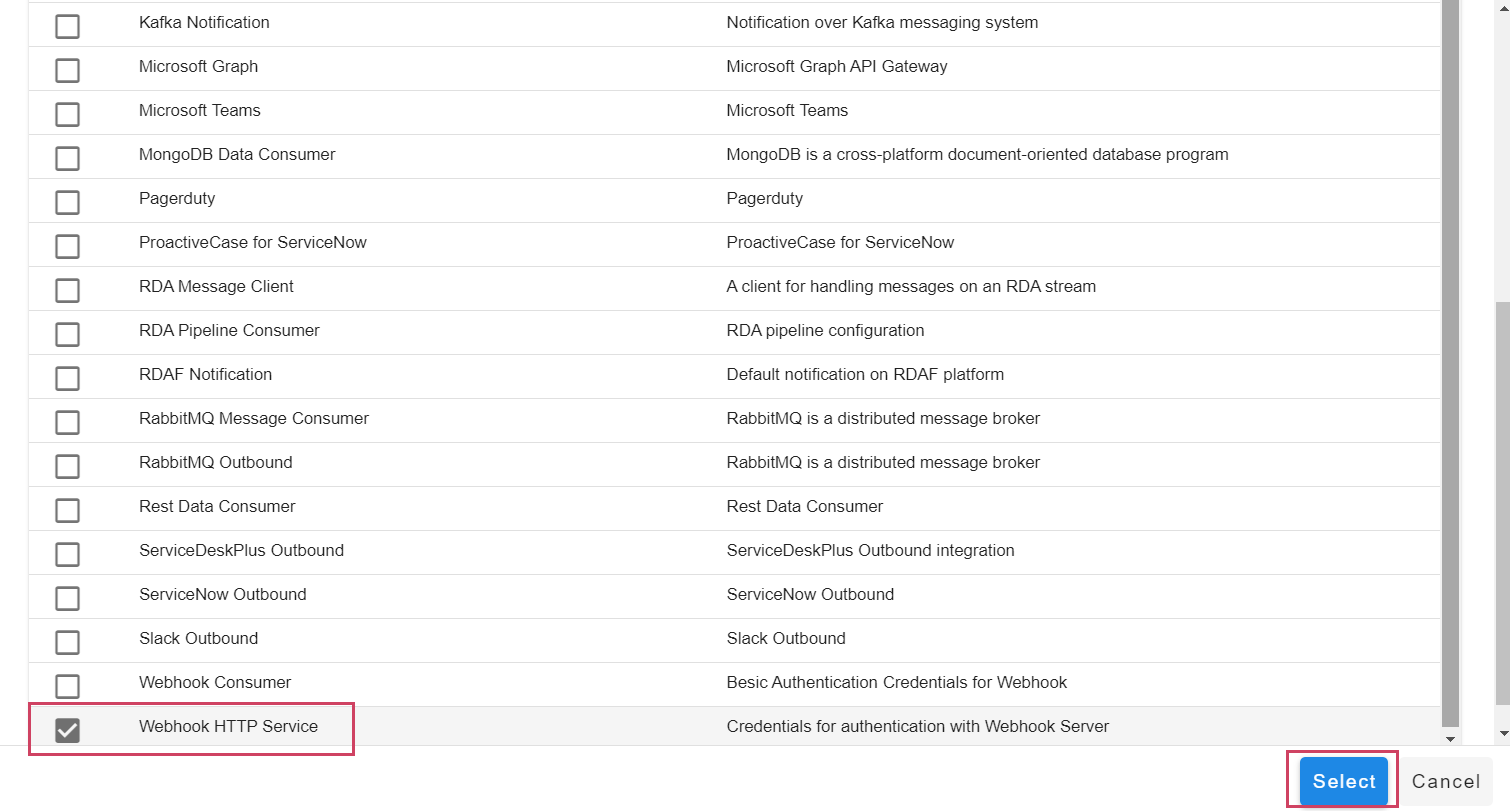

- Add an endpoint and pick the appropriate type for the endpoint. For instance, choose Webhook HTTP Service to create an HTTP endpoint where events can be posted.

- After the endpoint is added, as shown in the screenshot below, use the toggle switch under Enabled. This enables the endpoint to start ingesting alerts into the system.

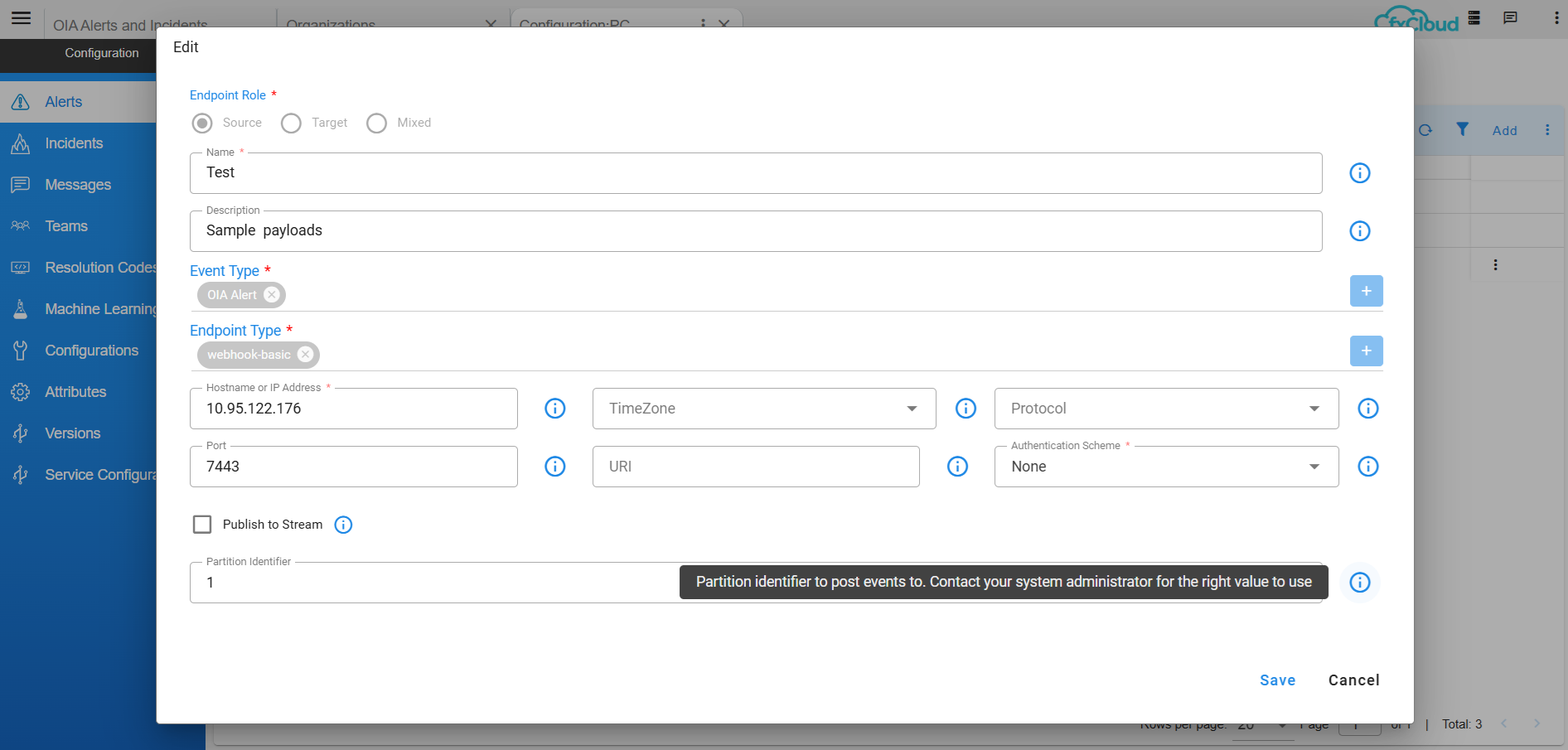

4.1.4 Source Endpoint Partition Assignment

A source of raw events can optionally be assigned a partition identifier to ensure better load distribution and management for optimized alert processing. The partition identifier is an integer from 0 to 14.

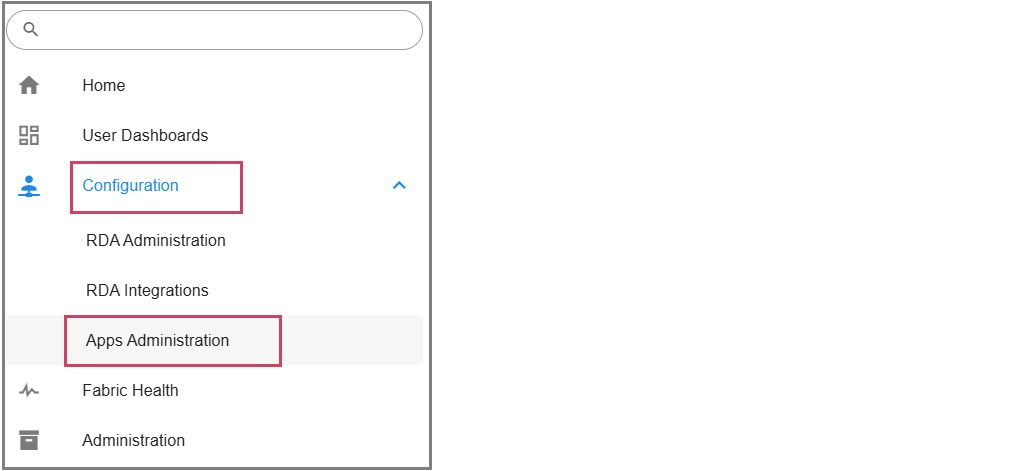

Navigation Path: Home Menu -> Configuration -> Apps Administration -> Configure -> Edit (Please find the path below in screenshots)

When a source is assigned a partition, its events are published on that partition rather than on a randomly assigned partition ID. Partition assignment can be used effectively to direct the incoming load from bursty or noisy sources to a specific instance with dedicated CPU for processing. Consider the following example.

4.1.4.1 Endpoint Sources

1) Metrics - very noisy (for instance Prometheus with typical incoming rate of xxx/minute)

2) Email alerts - moderate (for instance MS-Graph with typical incoming rate of xx/minute)

3) vROps alerts - low (typical incoming rate of x/minute)

Partitions assigned to each node in a 2 node service cluster using Round Robin Strategy

1) node#1 - [0, 2, 4, 6, 8, 10, 12, 14]

2) node#2 - [1, 3, 5, 7, 9, 11, 13]

Note

Each node is running on a Separate VM

Endpoint partitions based on the incoming rate of events

| Endpoint | Partition Number | Node Number |
|---|---|---|
| Metrics | 0 | 1 |
| Email alerts | 1 | 2 |
| vROps | 3 | 2 |

In the above example, the noisy or bursty sources (Metrics) are assigned to an instance (node#1) that is not processing events from other sources so as to ensure that events from moderate or low rate sources are not compromised due to the processing overhead from a single noisy source.

Similarly, the partition distribution on each node in a 3-node cluster is

1) node#1 - [0, 3, 6, 9, 12]

2) node#2 - [1, 4, 7, 10, 13]

3) node#3 - [2, 5, 8, 11, 14]

The endpoint sources can be accordingly assigned to appropriate partitions.
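The round-robin distribution shown for the 2-node and 3-node clusters can be reproduced with a short Python sketch. The helper names are hypothetical and the total of 15 partitions (IDs 0 to 14) is taken from the range stated above; this only illustrates the arithmetic, not how the service assigns partitions internally.

```python
def assign_partitions(node_count, partition_count=15):
    """Distribute partition IDs 0..partition_count-1 across nodes round-robin."""
    return {
        f"node#{node + 1}": [p for p in range(partition_count) if p % node_count == node]
        for node in range(node_count)
    }


def node_for_partition(partition, node_count):
    """Return the node that owns a given partition under round-robin assignment."""
    return f"node#{partition % node_count + 1}"


two_nodes = assign_partitions(2)
three_nodes = assign_partitions(3)
print(two_nodes["node#2"])       # [1, 3, 5, 7, 9, 11, 13]
print(three_nodes["node#3"])     # [2, 5, 8, 11, 14]
print(node_for_partition(0, 2))  # node#1
```

A lookup like `node_for_partition` can help when choosing a partition for a noisy source, so that it lands on a node that is not shared with other sources.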

4.1.5 Source Event Payload

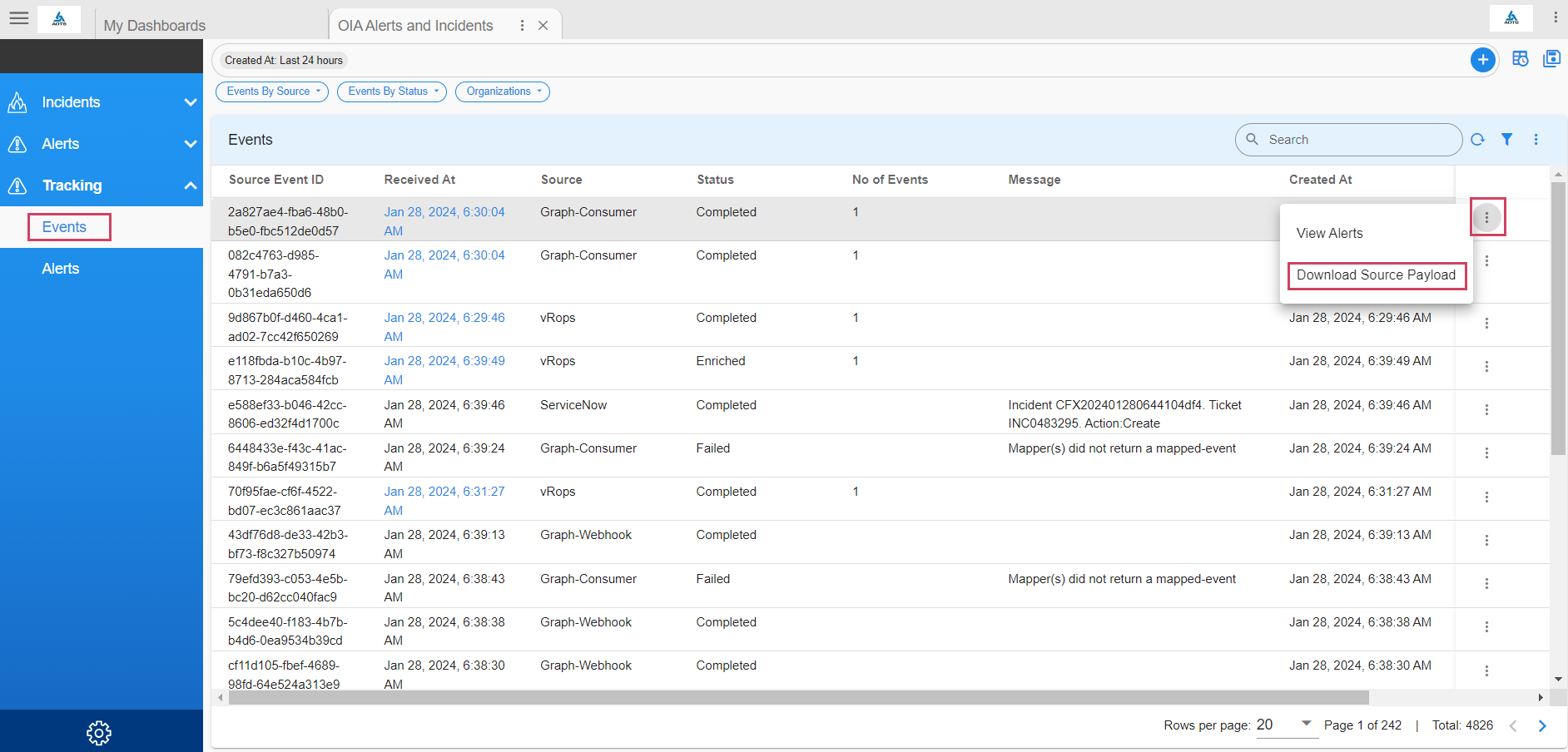

Go to Home --> User Dashboards --> select OIA Alerts and Incidents --> Click on Tracking --> Select Events

- Download the raw payload of the event posted to the webhook from the source, as shown in the example screenshot below.

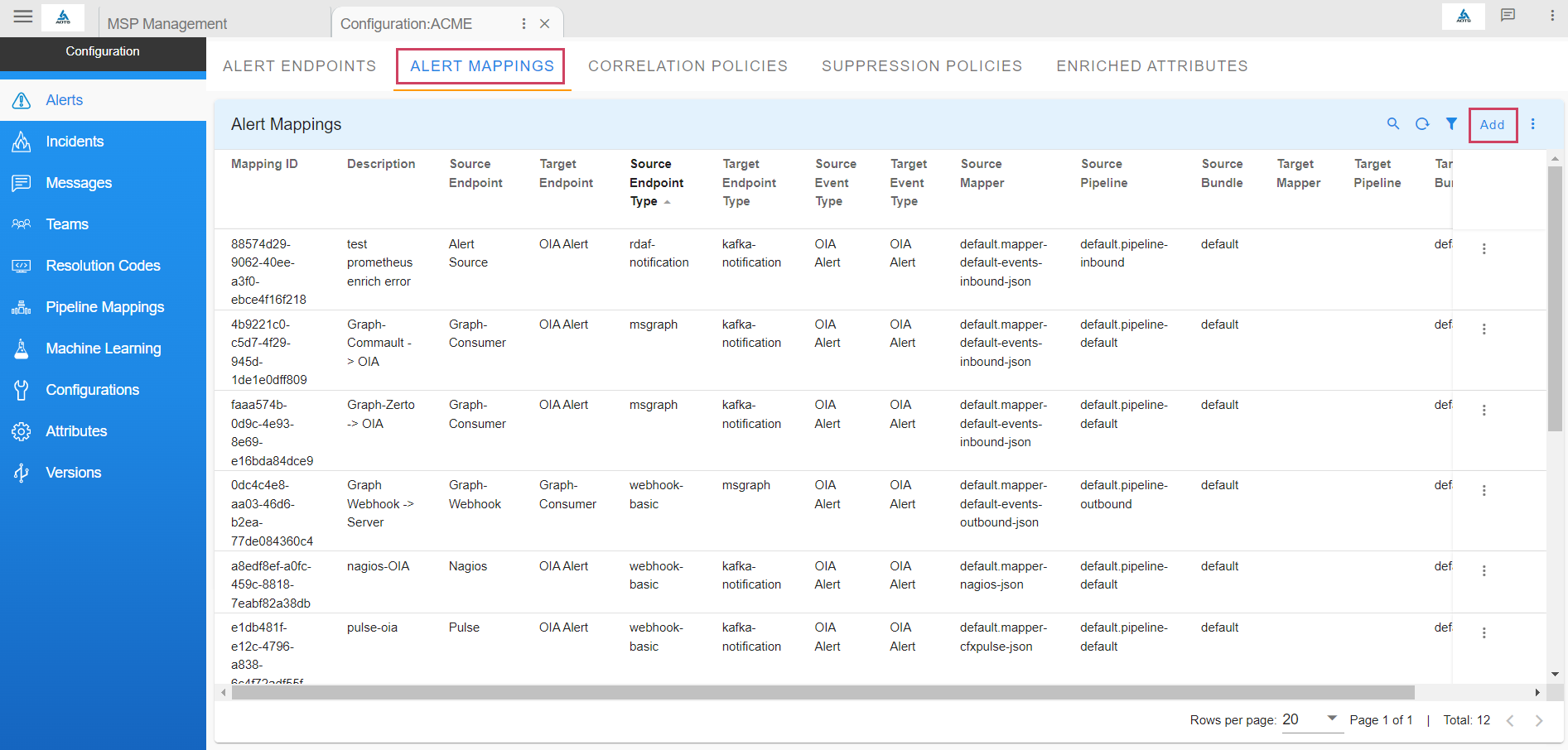

4.1.6 Source Event Mapping

- The source events will NOT be processed yet by the system without an appropriate mapping rule for translating the raw events into an actionable alert or incident or message.

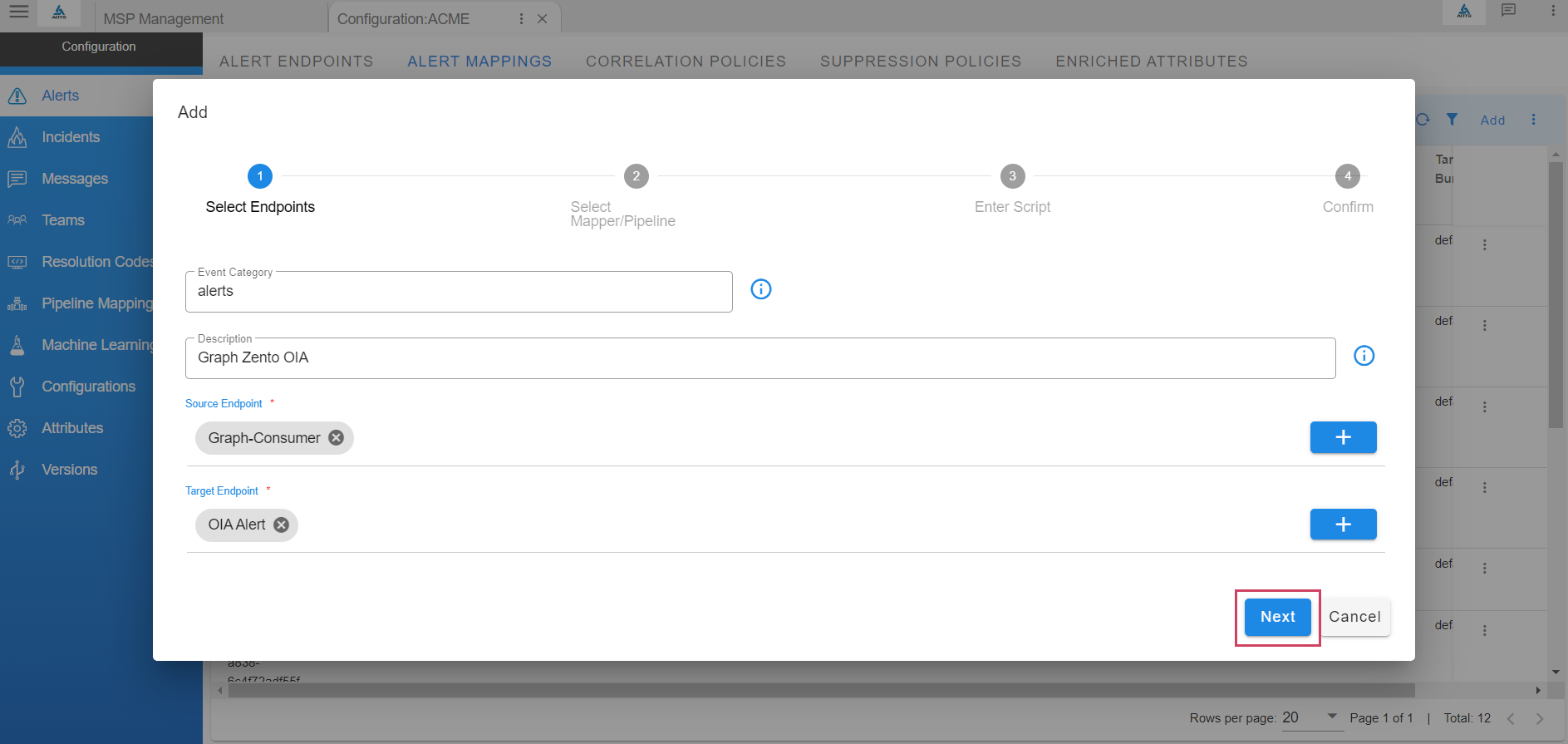

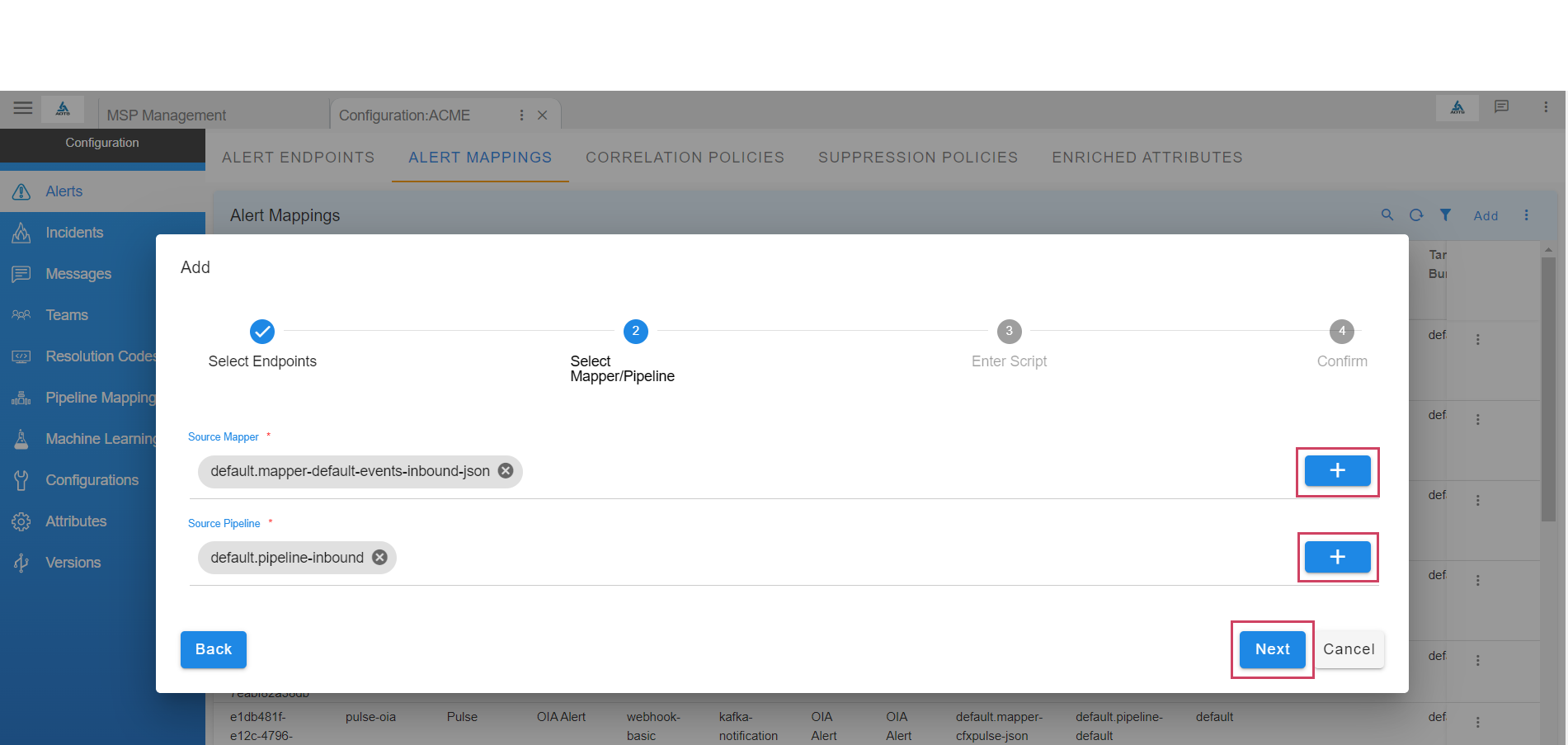

To create a mapping rule for translating the source event as an alert, navigate to Home --> Administration --> Organizations --> Configure --> ALERT MAPPINGS --> click on Add

- Select the source endpoint created earlier and select OIA Alert as target to ingest the event into the system as an actionable alert. Click Next

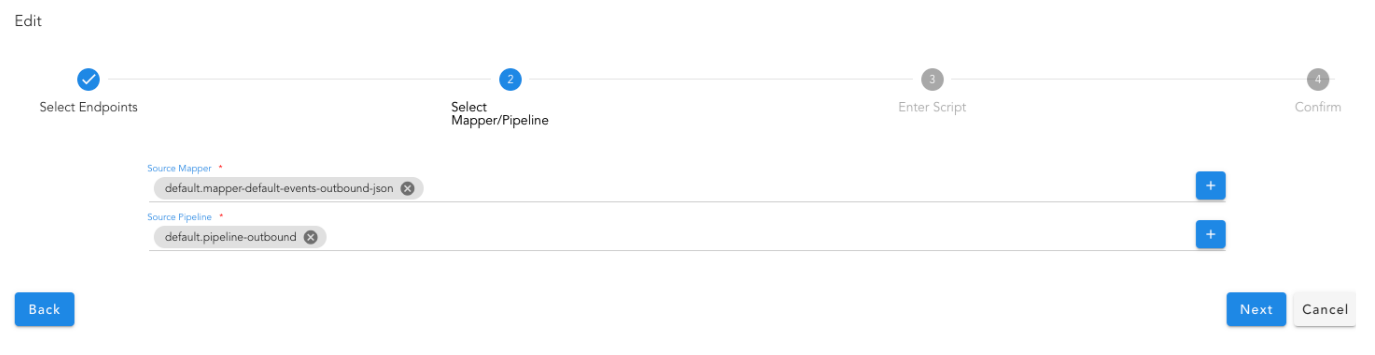

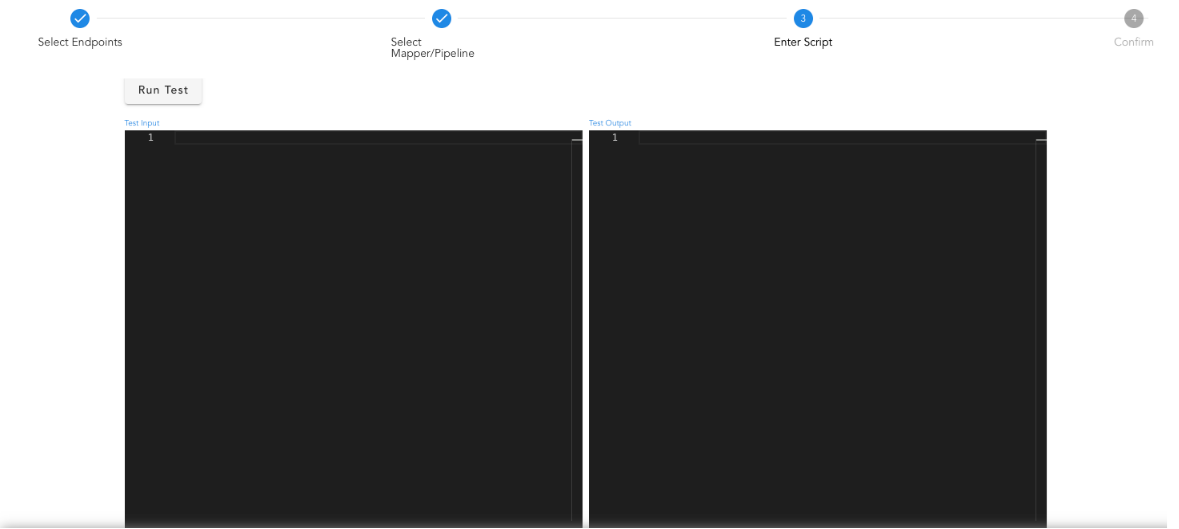

- Select one of the pre-defined mappers or select default.mapper-default-events-inbound-json to create a new JSON mapper. Also select pipeline default.pipeline-inbound for processing the alert via the ingestion pipeline.

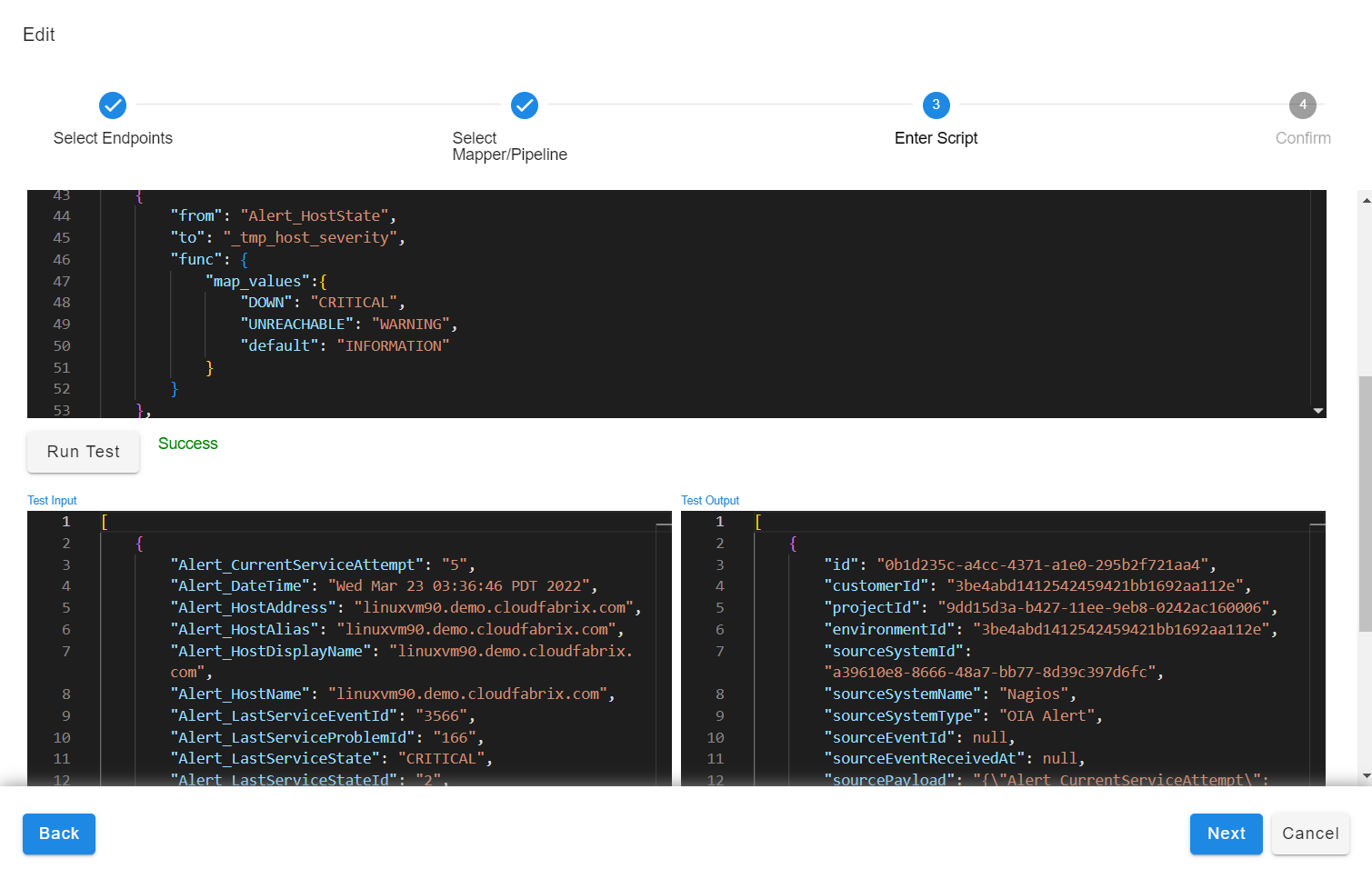

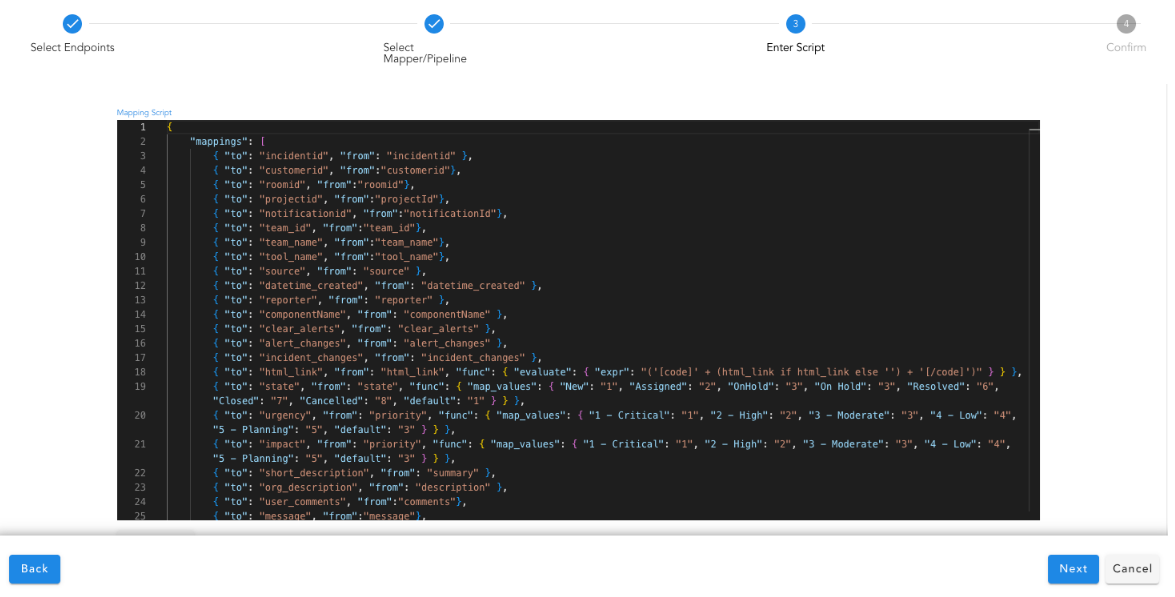

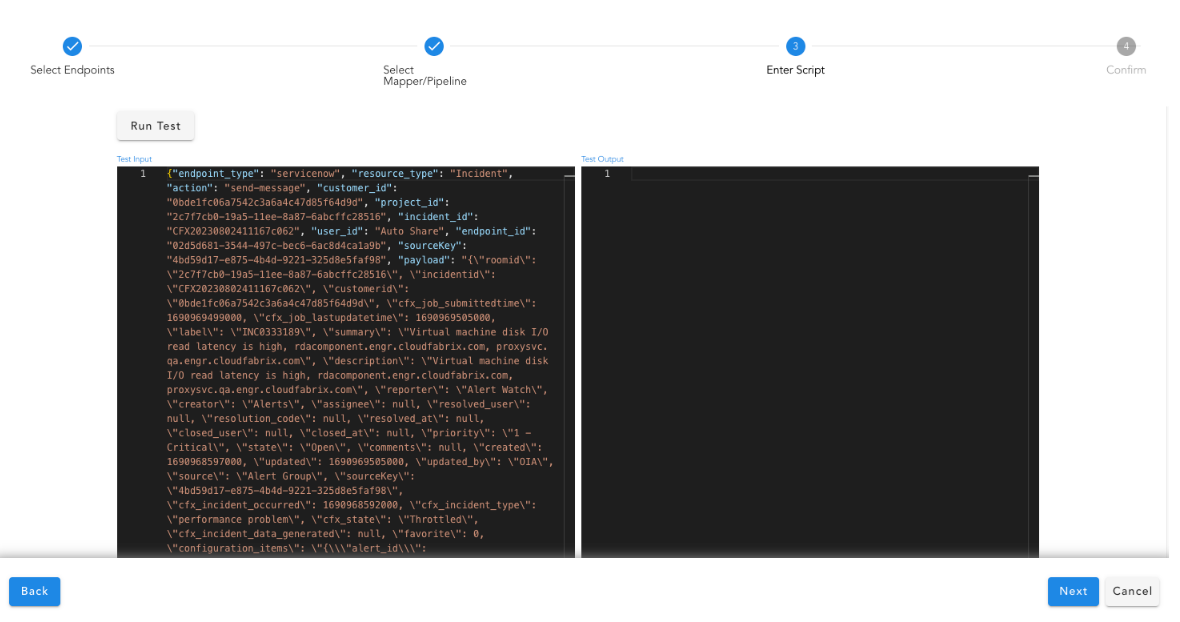

- Use the contents from the downloaded source payload as input. Create a JSON mapping definition to translate the input to the system identifiable alert.

For more information, refer to the document on JSON Based Alert Mapping.

Use the Run Test option to view the mapping results in the output pane. After testing, save the mapping rule.

- Any incoming events to the system will now be processed as per the defined mapping rule and an alert will be created in the system.

4.1.6.1 Configure Enrichment of Metric Columns Using Mapper

- This adds attributes required for metric collection.

```json
{
  "func": {
    "stream_enrich": {
      "name": "oia-selected-metrics",
      "condition": "asset_id is '$assetIpAddress' or asset_name is '$assetName'",
      "enriched_columns": {
        "metric_source": "metric_source",
        "asset_id": "metric_asset_id"
      }
    }
  }
}
```

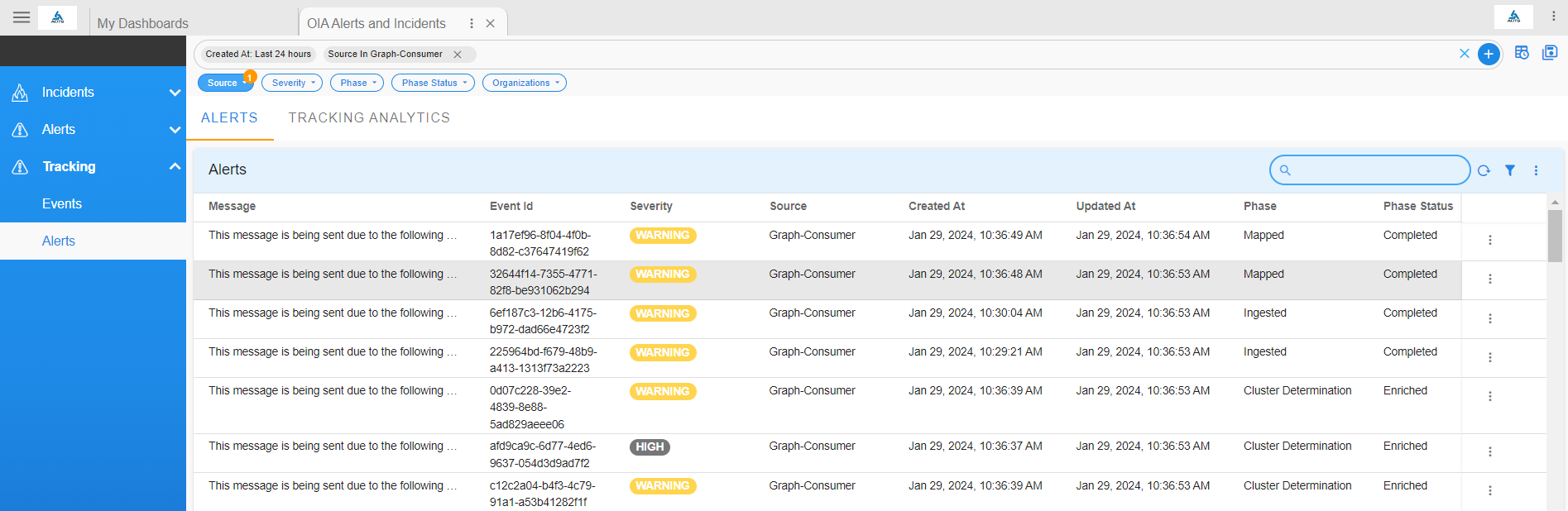

4.1.7 Event Tracking

Go to Home --> User Dashboards --> select OIA Alerts and Incidents --> Click on Tracking --> Select Events

View the alert created from the incoming event by navigating to the dashboard OIA Alerts and Incidents, and under the Events tab select View Alerts for the incoming event

- Alternatively you can check all the mapped alerts under Alerts tab

```json
{
  "type": "SOURCE-EVENT",
  "sourceEventId": "fe342378-da9d-4475-8852-28076f60483d",
  "id": "fe342378-da9d-4475-8852-28076f60483d",
  "sourceSystemId": "b4a94c8e-ee3a-45f9-8e5a-e1a960d9a6ed",
  "sourceSystemName": "Test",
  "projectId": "49e1de48-516b-11ef-9015-0242ac140006",
  "eventCategory": "alerts",
  "customerId": "969e78aa5b31436282fa8976ae8de8d6",
  "createdat": 1731579545852.3047,
  "sourceReceivedAt": 1731579545852.2368,
  "parentEventId": null,
  "objstore_location": null,
  "payload": "<serialized event data>",
  "status": null
}
```

```json
{
  "type": "EVENT",
  "sourceEventId": "fe342378-da9d-4475-8852-28076f60483d",
  "id": "3224b96a-8cdd-4294-9fd7-fed73a4ce7fb",
  "sourceSystemId": null,
  "sourceSystemName": null,
  "projectId": null,
  "eventCategory": null,
  "customerId": null,
  "createdat": 1731579554557.038,
  "sourceReceivedAt": 1731579545852.2368,
  "parentEventId": null,
  "objstore_location": null,
  "eventKey": null,
  "severity": null,
  "payload": "<serialized data of a mapped event>",
  "label": null
}
```

```json
{
  "type": "EVENT-STATE",
  "sourceEventId": "8c3d07f2-d020-4d35-aa99-1716c811b371",
  "id": "9318c5f5-a7b6-4e6d-b3aa-50f719be3ad9",
  "sourceSystemId": "b4a94c8e-ee3a-45f9-8e5a-e1a960d9a6ed",
  "sourceSystemName": null,
  "projectId": "49e1de48-516b-11ef-9015-0242ac140006",
  "eventCategory": "alerts",
  "customerId": "969e78aa5b31436282fa8976ae8de8d6",
  "createdat": 1731645523277.3901,
  "sourceReceivedAt": 1731645523277.3901,
  "parentEventId": null,
  "objstore_location": null,
  "eventKey": "0f44561717863028bf60d0fedde65e1c",
  "processingState": "Ingested",
  "status": "Completed",
  "processedEventId": "0ba03246-1923-4463-b3f1-5f49e403aca4",
  "triggeredEvent": "RAISE",
  "message": null
}
```

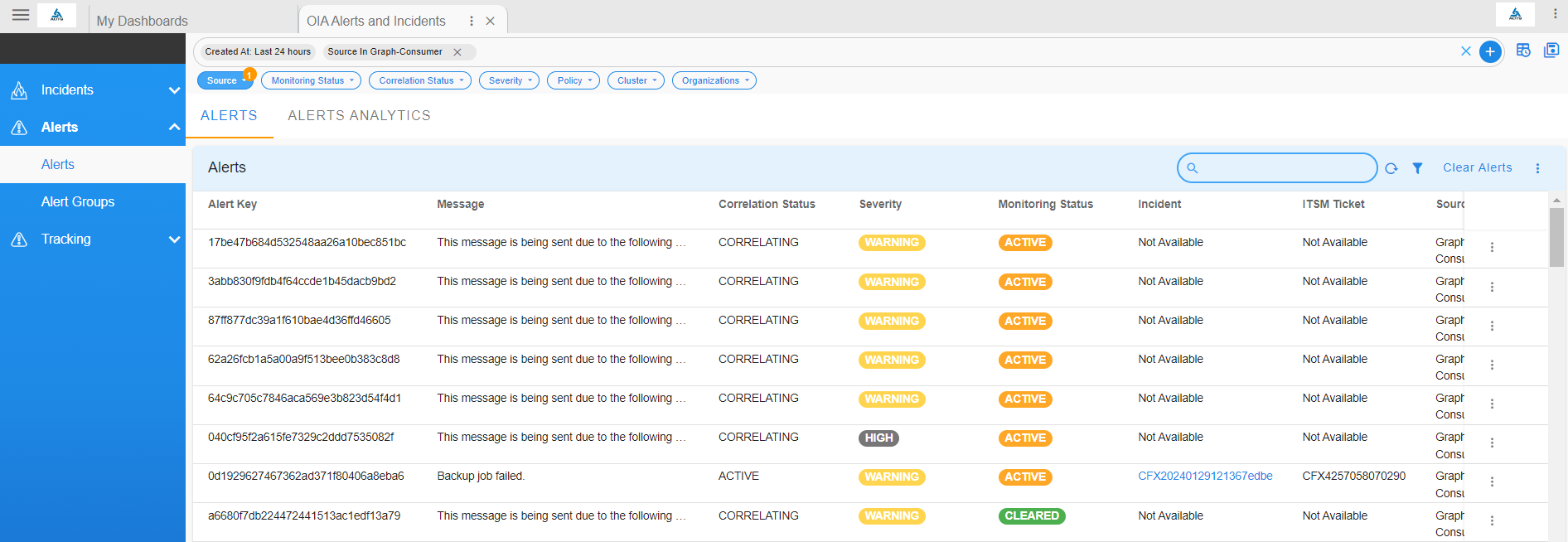

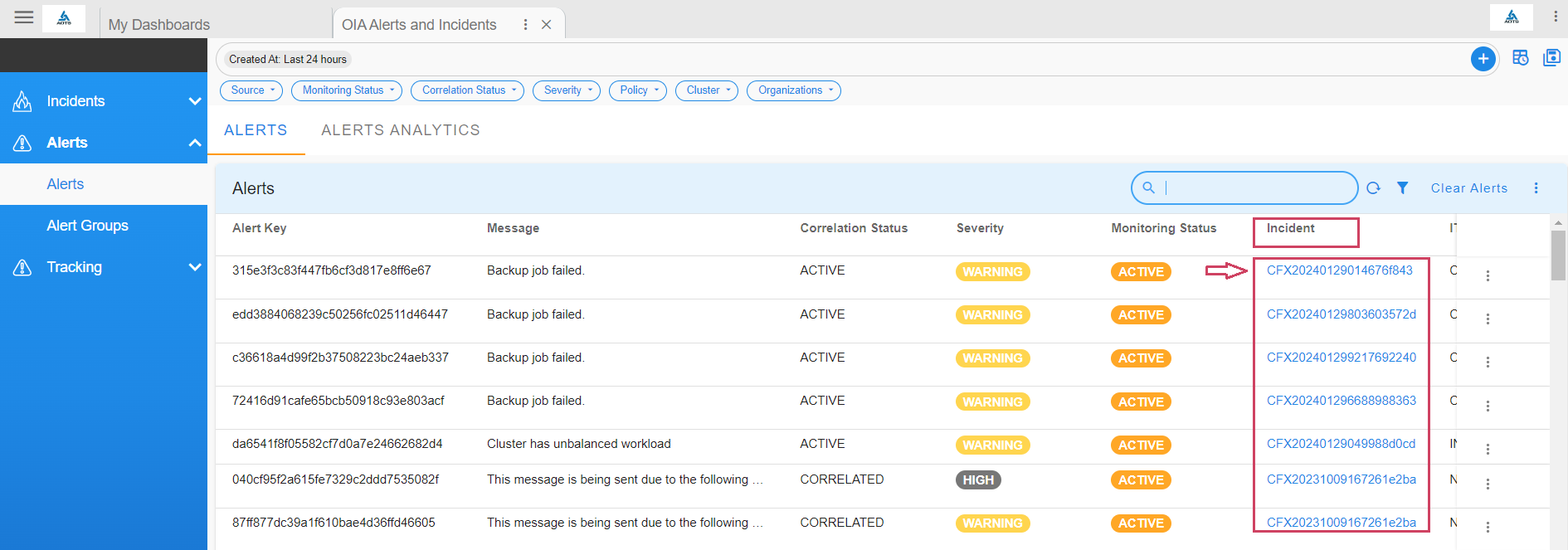

4.1.8 Alerts Report

- View all alerts created in the system by clicking on the Alerts tab

Go to Home --> User Dashboards --> select OIA Alerts and Incidents --> Click on Alerts

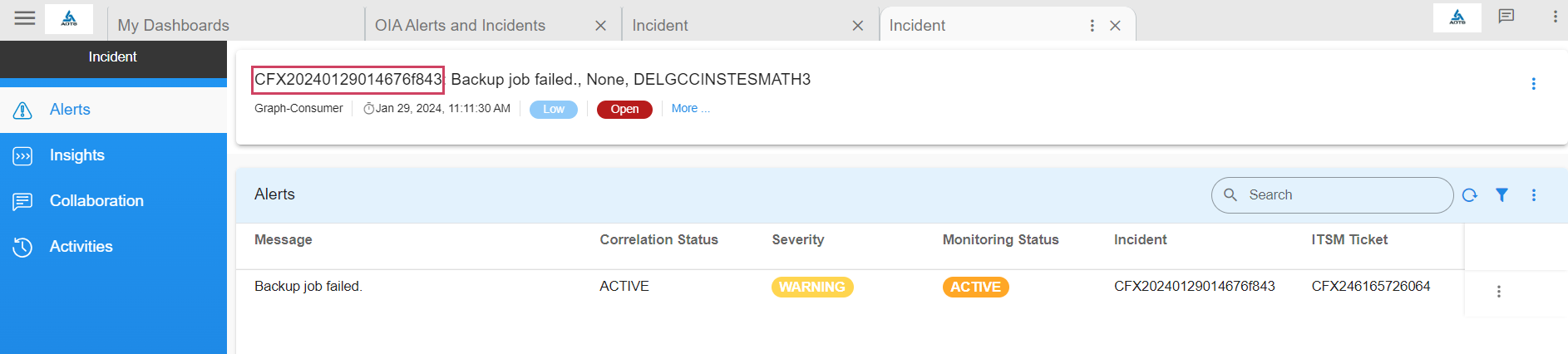

Click on the incident link to view the incident details.

After you click the incident link, the incident details are displayed:

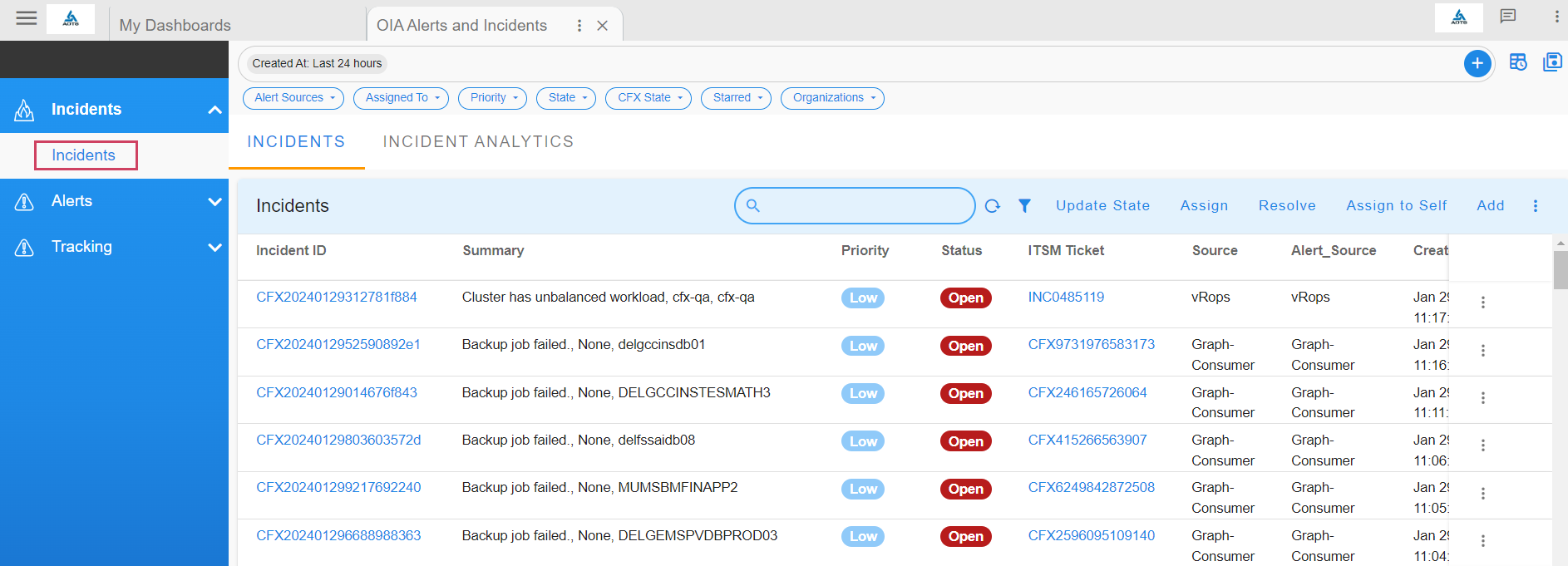

4.1.9 Incidents Report

View all incidents created in the system by clicking on the Incidents tab

5. Data Analysis and Stitching

Large enterprise environments have a mix of structured and unstructured IT data sources, with many custom IT data parameters defined and implemented across them. For example, IT environments can implement custom attributes like machine type, environment, site code, department name, support group, application ID, etc. Not every tool implements these attributes, which makes it difficult to understand which operational data sources are relevant for an AIOps implementation and which attributes can be gleaned from which sources to enrich raw alert data. The cfxOIA Data Analysis and Stitching module helps establish the following:

- Asset Identities

- Enrichment Attributes

- Enrichment Flows

- Baseline Analysis

This module works from historical alert/event data, ticket data, CMDB data, service mappings, and asset management data, and establishes a data chain that helps select the appropriate data sources and enrichment attributes for an AIOps implementation.

6. Alert Enrichment

Raw alert data contains extremely limited information, often consisting of id, severity, message/description, rule name, asset IP/hostname, etc. This information doesn't provide enough service context (application or service name, environment, machine type, etc.) or supportability context (NOC id, site id, department, support group, etc.), both of which are essential for efficient correlation of alerts. cfxOIA performs automated alert data enrichment using a combination of the following approaches:

- ACE (Automated Context Extraction): This method extracts useful information such as IP address, DNS name, and certain identifiable attributes from the source alert's payload. It doesn't require any external integrations; however, in the majority of scenarios it may not be sufficient for alert correlation.

- External source lookup: This process looks up information related to the incoming alerts in an external data source (e.g., CMDB, inventory system, CSV, etc.) and adds it as enriched alert attributes. Enriched attributes present more contextual information to the IT Operations user and are also used to correlate the alerts.

6.1. Normalization

Alert notifications are ingested into the cfxOIA application from disparate monitoring tools, and each tool follows a different format with different alert attributes. The attributes below (among others) are generally important for any incoming alert.

- Alert Timestamp

- Alert Status

- Alert Severity

- Alert Source

- Alert Message

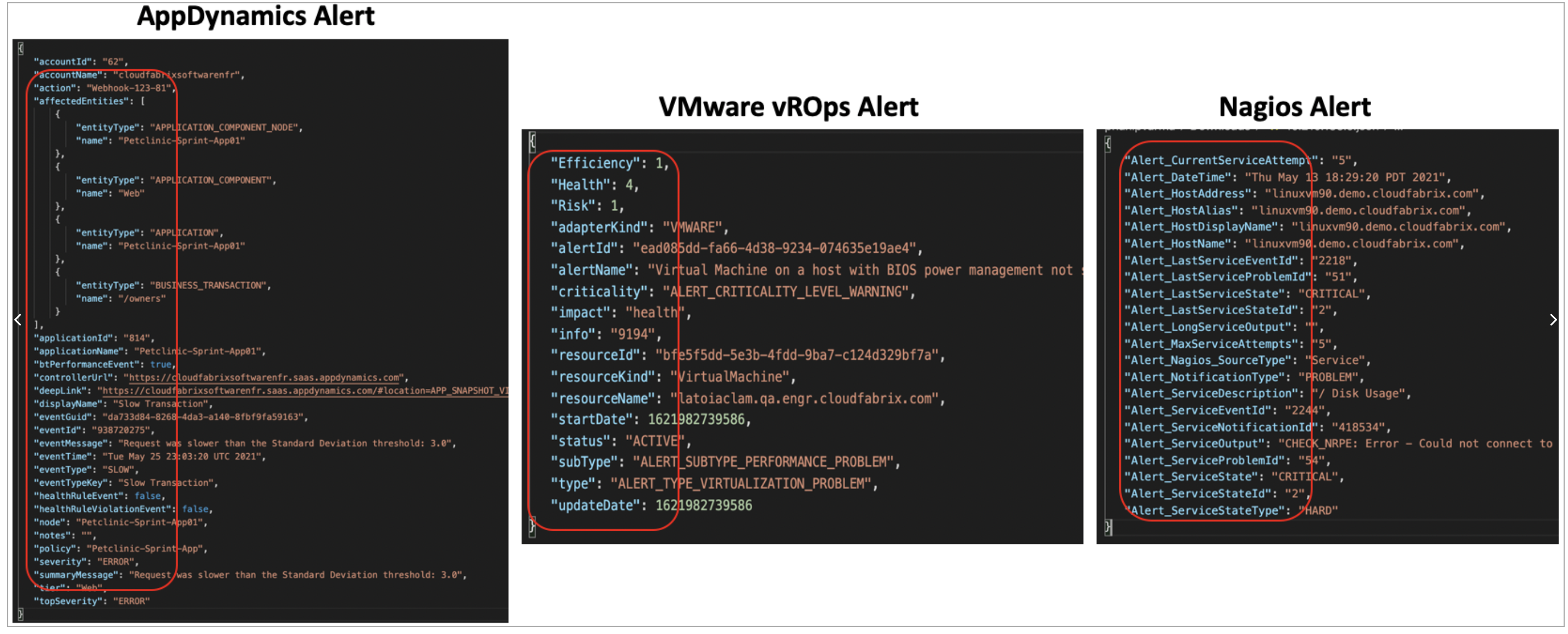

Below are three sample alert notification payloads from VMware vROps, Nagios, and AppDynamics. As shown below, the alert attributes are completely different from each other.

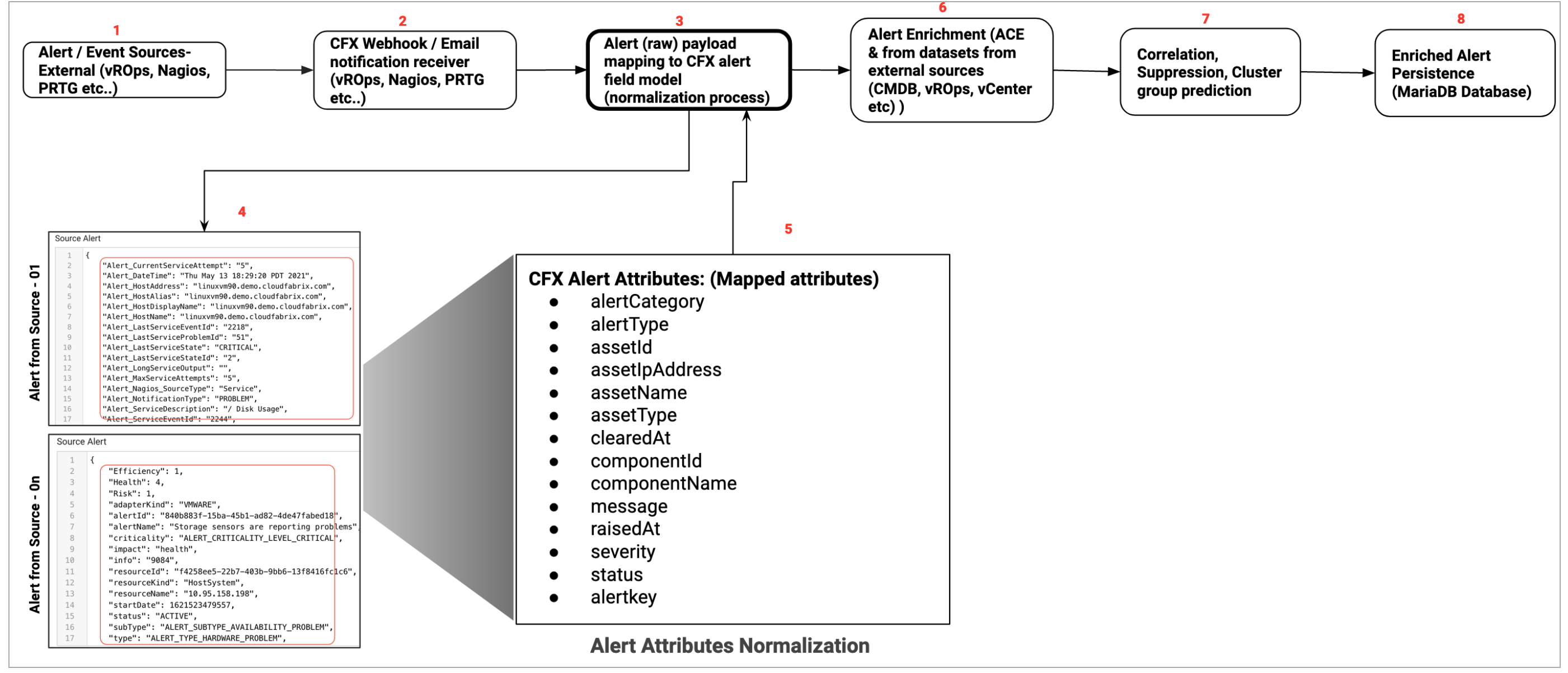

In the cfxOIA application, it is a prerequisite to normalize the alert attributes coming from different monitoring tools to a common data model. Below is the list of attributes used in the alert mapping process. Every ingested alert goes through the alert mapping process, and its payload attributes are mapped to the standard attributes below.

Info

Not all below attributes are mandatory to be mapped. The attributes that are flagged with * are mandatory ones.

- alertCategory: An attribute which can be used to categorize the alert

- alertType: An attribute to classify type of alert

- assetId: An attribute which can be used to identify the source of alert (Endpoint identity)

- assetIpAddress: An attribute that is used to identify the IP Address of the end point

- assetName*: An attribute that is used to identify the AssetName of the end point (ex: Hostname / Devicename)

- assetType: An attribute that is used to identify type of the Asset or the end point (ex: VM / Server / Storage / CPU / Memory etc)

- clearedAt*: Alert timestamp that is used to identify when the alert was cleared

- componentId: An attribute to associate a sub-component ID of an endpoint from which the alert was generated

- componentName: An attribute to associate a sub-component name of an endpoint from which the alert was generated

- message*: Alert message that states the symptom or problem which has caused the alert

- raisedAt*: Alert timestamp that is used to identify when the alert occurred

- severity*: Alert's severity (Ex: Critical, Warning, Minor etc..)

- status*: Alert's state (Open / Closed / Active / Recovered / Cancelled)

- alertkey*: Alert's unique identifier which is used to identify an incoming alert and to apply alert de-duplication process. It can be taken from a single alert attribute or a combination of alert's attributes
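As a rough illustration of the mandatory attributes and of an alertkey built from a combination of attributes, the Python sketch below checks a mapped alert and derives a key. It is only a sketch under assumptions: in OIA the mapping is configured through the alert mapper, and the hashing scheme, the chosen key fields, and the helper names here are hypothetical.

```python
import hashlib

# Attributes flagged with * in the list above.
MANDATORY_ATTRIBUTES = (
    "assetName", "clearedAt", "message", "raisedAt",
    "severity", "status", "alertkey",
)


def build_alert_key(alert, fields=("assetName", "componentName", "message")):
    """Hypothetical key scheme: hash a combination of alert attributes."""
    raw = "|".join(str(alert.get(field, "")) for field in fields)
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


def missing_mandatory(alert):
    """Return the mandatory attributes that a mapped alert does not carry."""
    return [name for name in MANDATORY_ATTRIBUTES if alert.get(name) is None]


mapped = {
    "assetName": "web-01",
    "message": "CPU usage above 90%",
    "raisedAt": "2024-11-14T10:19:05Z",
    "clearedAt": "2024-11-14T10:25:00Z",
    "status": "Closed",
}
mapped["alertkey"] = build_alert_key(mapped)
print(missing_mandatory(mapped))  # ['severity']

# A repeat of the same alert at a later time yields the same key,
# which is what makes de-duplication on alertkey possible.
repeat = dict(mapped, raisedAt="2024-11-14T11:00:00Z")
print(build_alert_key(repeat) == mapped["alertkey"])  # True
```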

Alert ingestion with alert mapping & normalization process data flow:

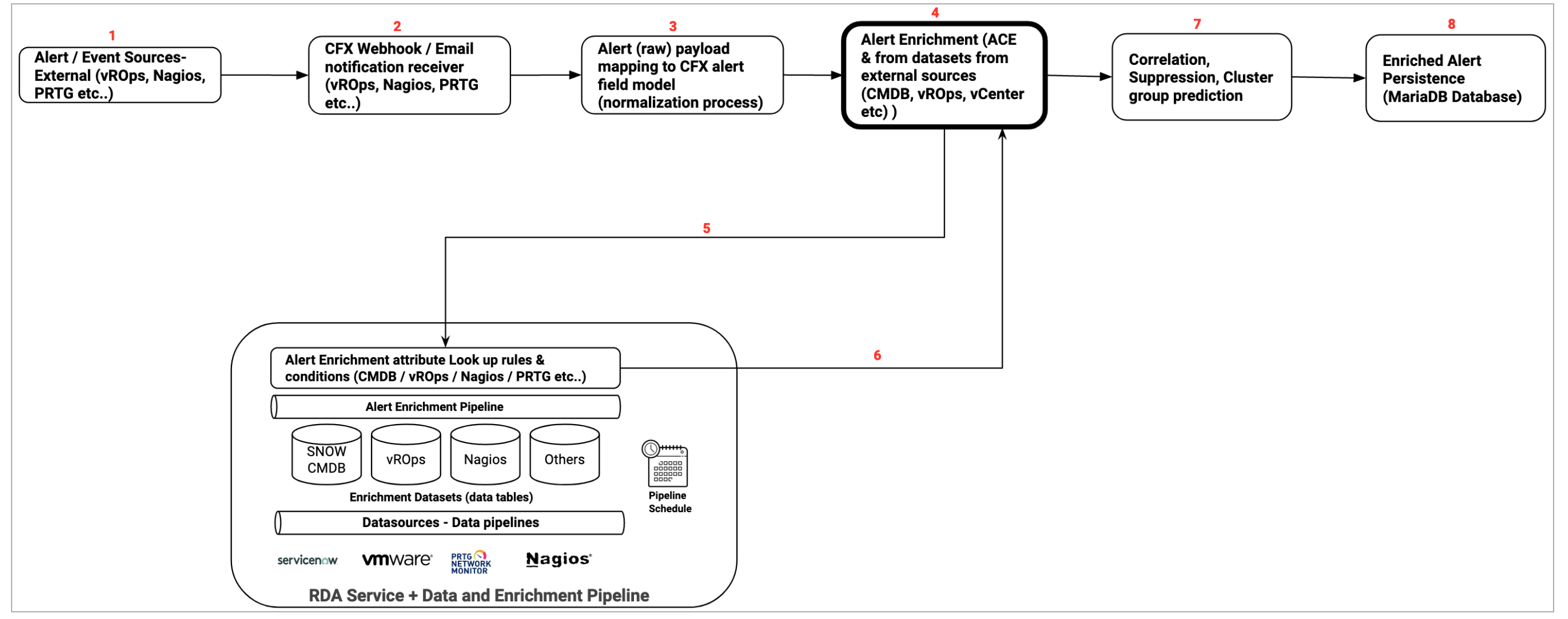

6.2. Enrichment

The flow below illustrates the different stages of alert processing: ingestion, alert attribute mapping, alert enrichment, correlation/suppression, and persisting into the system's database.

Tip

In the above illustration, the listed enrichment datasources such as SNOW (ServiceNow), Nagios & vROps are for quick reference only. cfxOIA supports many datasources for the enrichment process.

6.3. Alert Mapper

Alert Mapper normalizes and enriches ingested alert notifications from various sources, such as webhooks, emails, Kafka queues, and other supported transport mechanisms, into a standard alert data model. For detailed configuration information on Alert Mapper, please refer to the document on Json Based Alerts Mapping

7. Alert Correlation

Alert correlation is the process of grouping related alerts together to reduce noise and increase the actionability of alerts and events. Correlated alerts are grouped and translated into CFX incidents, which are then routed to ITSM systems for handling by NOC/IT analysts, who can then log in to cfxOIA's Incident Room module to perform swift triage, diagnosis, and root cause analysis of an incident.

cfxOIA's correlation engine provides recommendations for detecting and grouping new alert patterns. Admins can review and analyze the recommendations and convert them into Correlation Policies, or define new policies altogether. Admins can also implement alert Suppression Policies to suppress alerts that escape during maintenance windows. cfxOIA provides out-of-box policies to treat well-known operational issues like alert burst scenarios, flapping scenarios, etc.

7.1 Key Points

- Ingested alerts and events are normalized to the OIA alert model, which is designed to accommodate most alert formats and tool implementations

- Customers can add custom attributes to alert model using enrichment process

- Ingested alerts are enriched with context about application, stack, department, ownership, support-group etc. using a process called alert enrichment.

- Enriched alerts are then evaluated for any correlation or suppression to be performed. Suppression policies are used to suppress alerts that escape maintenance windows.

- Alerts that remain are then evaluated for correlation, which is determined by correlation policies that are set up in three ways:

- System defined policies: To address well-known behavior like alert burst and alert flapping situations.

- ML driven correlation recommendations: OIA uses unsupervised ML clustering to detect alert patterns and provides list of suggested correlations in the form of Symptom Clusters.

- Admin defined correlation policies: Administrators can define new correlation policies or customize existing policies to meet their needs. For instance, correlation policies allow admins to group alerts across a full-stack or an application instance. Admins can also group alerts across a common infrastructure (like network, storage etc.) or shared services (ex: SSO, DNS etc.).

7.2 How correlation policy works

Correlation policies are enabled when created, but they can be disabled. Correlation policies determine how alerts can be grouped together. Most correlation policies can be created in an assisted manner, using the symptom cluster recommendations provided by cfxOIA's correlation engine.

A correlation policy can result in one or more instances of alert correlations, each represented by a correlation Alert Group.

The following controls are available to specify correlation behavior.

Minimum Severity of Alert Group:

The severity of a correlated alert group is always determined by the highest severity among the alerts it comprises. However, admin users can configure a minimum severity level for the correlated alert groups formed by a correlation policy.

Time Boxing:

Time boxing is the concept of grouping related alerts that fall within a certain time window, such as 15 minutes, 30 minutes, or 1 hour. The time window starts when the first matching alert is detected and closes when the time window expires. Any new matching alert after the time window expires results in a new correlated alert group instance, which leads to a new incident.
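Time boxing can be sketched in a few lines of Python. This is an illustration rather than the correlation engine's logic: `time_box` is a hypothetical helper, and treating an alert that arrives exactly at the window end as the start of a new group is an assumption.

```python
from datetime import datetime, timedelta


def time_box(alert_times, window):
    """Group alert timestamps into windows opened by the first alert of each group."""
    groups = []
    window_end = None
    for ts in sorted(alert_times):
        # Assumption: an alert at or after the window end opens a new group.
        if window_end is None or ts >= window_end:
            groups.append([])
            window_end = ts + window
        groups[-1].append(ts)
    return groups


day = datetime(2024, 1, 1, 10, 0)
alert_times = [day + timedelta(minutes=m) for m in (0, 5, 14, 20, 40)]
groups = time_box(alert_times, timedelta(minutes=15))
print([len(g) for g in groups])  # [3, 1, 1]
```

With a 15-minute window, the alerts at 10:00, 10:05, and 10:14 share one group, while the alerts at 10:20 and 10:40 each open a new group and would therefore lead to separate incidents.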

Precedence:

Precedence values determine which policy wins when an alert matches multiple policies. For example, an alert belonging to symptom cluster prod and application CMS can match both a policy set up to correlate alerts at the application level (app-name == CMS) and a policy set up at the symptom cluster level (cluster-name == prod). By giving higher precedence to the application-level policy, alerts will be grouped at the application level.

Precedence is a numeric value starting with 10000; higher values indicate higher precedence and take priority in case of a match. Precedence values are optional. If none is provided, the system assigns precedence values automatically based on chronological order, i.e., newly created correlation policies get higher precedence.

A typical approach is to set up broader-scope correlation policies with higher precedence and more specific correlation policies with lower precedence.
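The precedence rule can be illustrated with a small Python sketch that picks, among the policies an alert matches, the one with the highest precedence value. The policy structure, the `matches` callables, and the precedence numbers are hypothetical and only mirror the CMS / prod example above.

```python
def select_policy(alert, policies):
    """Return the matching policy with the highest precedence, or None."""
    matching = [policy for policy in policies if policy["matches"](alert)]
    return max(matching, key=lambda policy: policy["precedence"], default=None)


policies = [
    {
        "name": "cluster-level",
        "precedence": 10010,
        "matches": lambda a: a.get("cluster-name") == "prod",
    },
    {
        "name": "application-level",
        "precedence": 10020,
        "matches": lambda a: a.get("app-name") == "CMS",
    },
]

alert = {"app-name": "CMS", "cluster-name": "prod"}
chosen = select_policy(alert, policies)
print(chosen["name"])  # application-level
```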

Property Filters:

Property filters narrow down the selection criteria for related alerts by matching property fields against specified values, using conditions such as equals, contains, in list of values, etc.

Property filters allow fine grained control of correlation policies to meet organizational processes, administrative domains or functional groups.

Group By:

Related alerts can be grouped by values in a certain attribute. This works best for attributes that are typically of type enumeration, list of values or represent a limited set of identities.

For example, assume the Machine-Type attribute has the values Application / Server / Network / Storage. If Group By selects Machine-Type as the attribute, the correlation engine automatically groups alerts that have the same Machine-Type value.

With two Group By attributes selected, for example Machine-Type and Environment (with values Prod / UAT), the following alert groups are formed:

"Machine-Type == Application and Environment == Prod" into one group.

"Machine-Type == Application and Environment == UAT" into one group.

"Machine-Type == Server and Environment == Prod" into one group.

"Machine-Type == Server and Environment == UAT" into one group.

"Machine-Type == Storage and Environment == Prod" into one group.

"Machine-Type == Storage and Environment == UAT" into one group.

"Machine-Type == Network and Environment == Prod" into one group.

"Machine-Type == Network and Environment == UAT" into one group.
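Grouping by several attributes amounts to bucketing alerts by the tuple of their attribute values. A minimal Python sketch, assuming alerts are plain dictionaries and using the hypothetical helper `group_alerts`:

```python
from collections import defaultdict


def group_alerts(alerts, group_by):
    """Bucket alert IDs by the tuple of values of the group-by attributes."""
    groups = defaultdict(list)
    for alert in alerts:
        key = tuple(alert.get(attribute) for attribute in group_by)
        groups[key].append(alert["id"])
    return dict(groups)


alerts = [
    {"id": 1, "Machine-Type": "Application", "Environment": "Prod"},
    {"id": 2, "Machine-Type": "Application", "Environment": "UAT"},
    {"id": 3, "Machine-Type": "Server", "Environment": "Prod"},
    {"id": 4, "Machine-Type": "Application", "Environment": "Prod"},
]

groups = group_alerts(alerts, ["Machine-Type", "Environment"])
print(groups[("Application", "Prod")])  # [1, 4]
print(len(groups))                      # 3
```

Each distinct combination of values produces its own group, which is why four Machine-Type values and two Environment values can yield up to eight groups.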

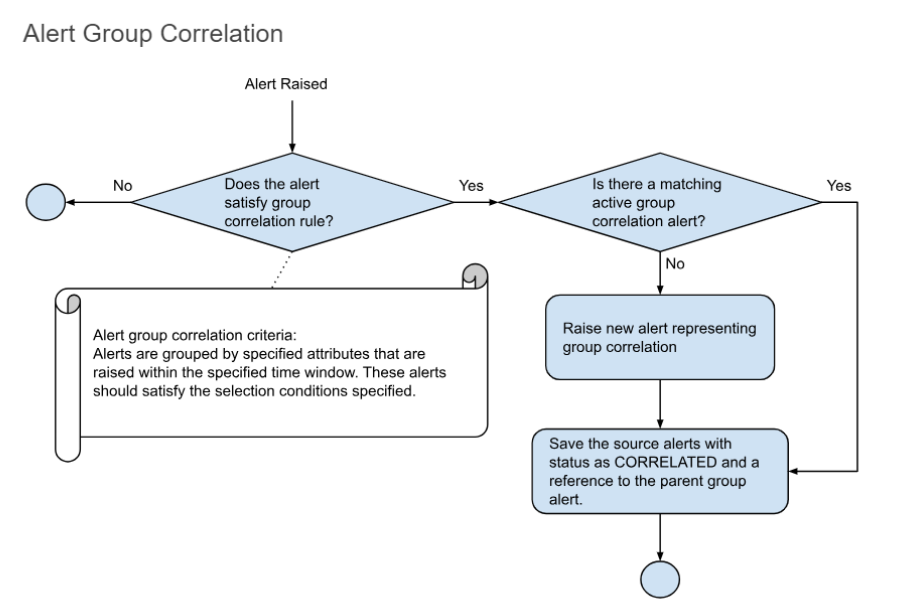

7.3 Correlation Group Policy

7.3.1 Alert Group Correlation Diagram

Tip

Some screenshots need zooming. Click a screenshot to enlarge it, and use the back arrow button at the top left to return to the page.

7.3.2 Correlation Use Case

- Correlation groups alerts generated from different sources to reduce alert noise. One way to achieve this is to define a correlation policy so that related alerts are correlated into one alert group.

- Alerts can be filtered using the policy filter and grouped using the Group By attributes of the correlation policy definition.

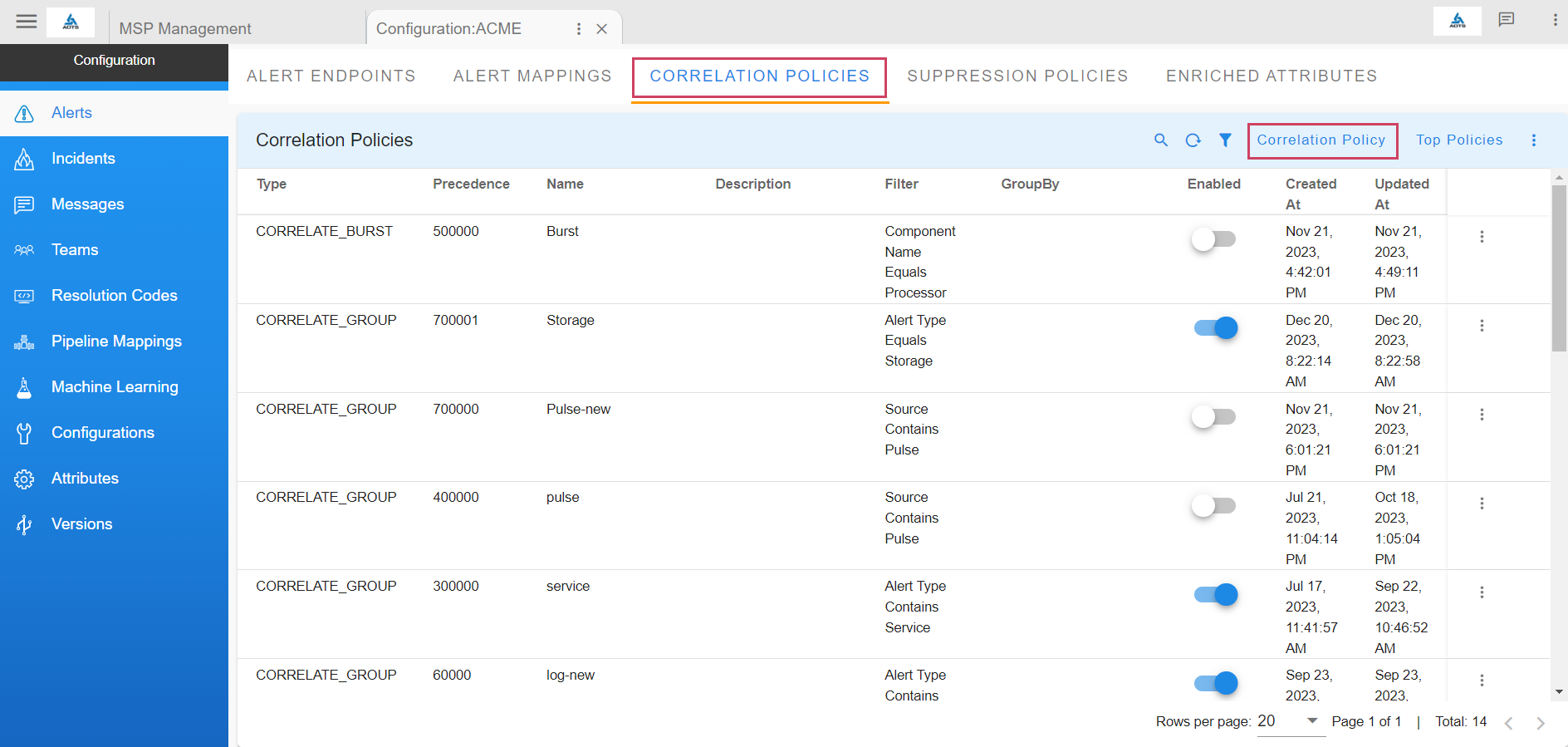

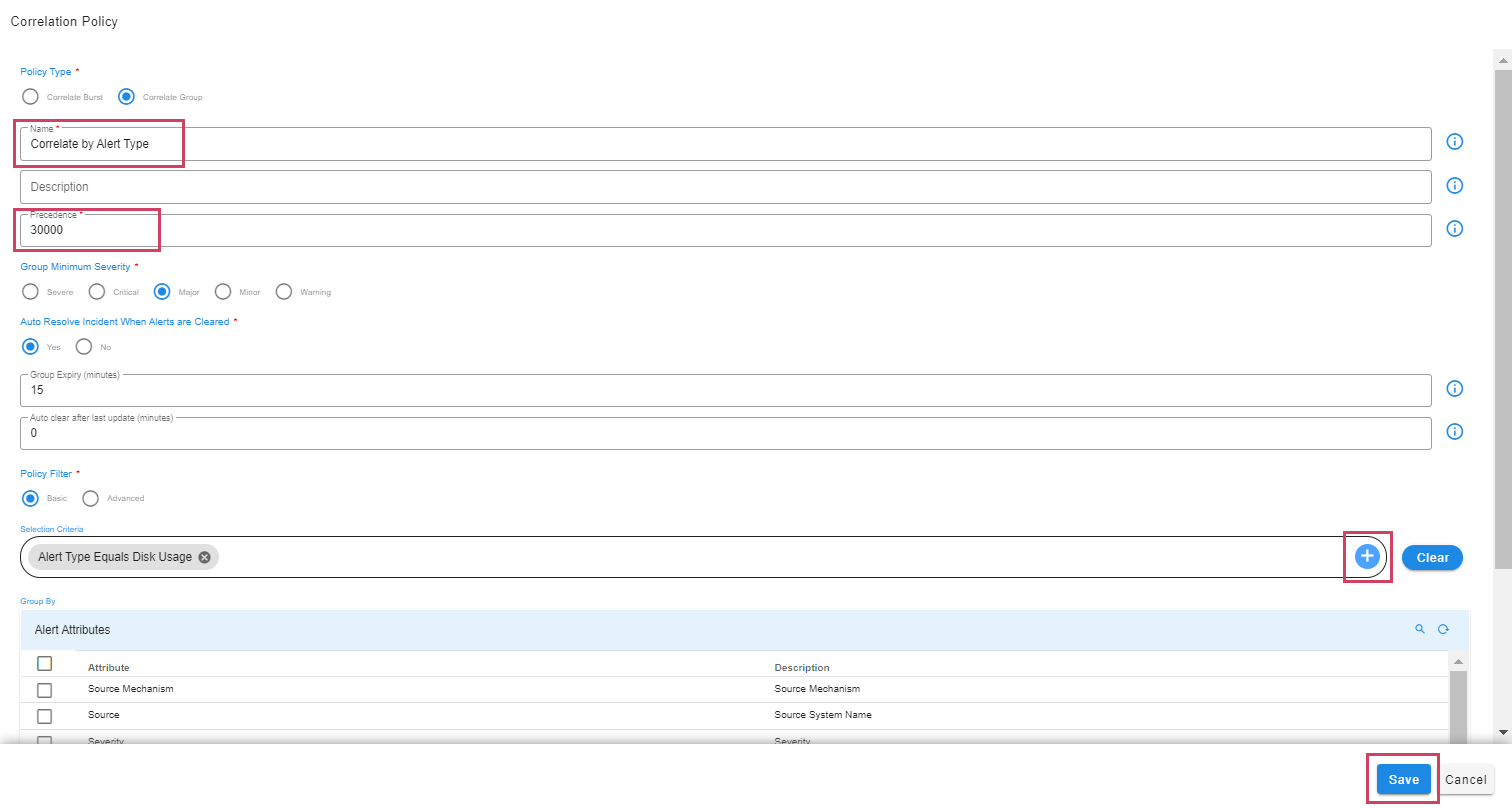

Creating and Updating Correlation Policies

Home --> Administration --> Organization --> Click on Configure --> click Correlation Policies --> Add all required credentials

7.3.3 Policy Definition Attributes

7.3.3.1 Precedence

- Each policy must be defined with a precedence. Policies are applied to incoming alerts based on the defined precedence.

- Below are the allowed values for precedence:

a) Minimum Value: 10

b) Max Value: 1000000

7.3.3.2 Auto Resolve Incident When Alerts are Cleared

- When auto resolve is set to Yes for a policy, the alert group/incident is automatically cleared once all of its child alerts are cleared.

- Allowed values are Yes/No.

- When auto resolve is set to No, the group remains active even though all child alerts in the group are CLEARED.

7.3.3.3 Group Expiry

- Group expiry is defined in minutes and sets the window during which alerts can be correlated to an alert group.

- For example, if group expiry is defined as 15 minutes and a group is created at 10:00, the group window stays open until 10:15. All alerts received between 10:00 and 10:15 are correlated to the group created at 10:00.

Below is a sample timeline for an alert group with a 10-minute expiry:

| Time | 10 minute window | Alerts in Incident | Alert Group State | Incident State |
|---|---|---|---|---|
| 10:03:00 | 2 Alerts raised, Alert Group is created - valid till 10:13:00, Incident is created - Incident 1 | 2 | Open | Open |
| 10:05:00 | 2 Alerts cleared | 2 | Open | Open |
| 10:07:00 | 3 Alerts raised | 5 | Open | Open |
| 10:12:00 | 3 Alerts cleared | 5 | Open | Open |
| 10:13:00 | Alert Group is closed | 5 | Closed | Resolved (if all the children are cleared) |

In the above sample, Incident 1 is cleared when the group has expired and all child alerts in the group are cleared. If any child alert is still in the ACTIVE state, the alert group/incident remains in the open state.

7.3.4 Auto Clear after last update

- An incident is automatically cleared if no new alerts are correlated to it within the prescribed auto clear interval.

- For example, if a correlation group is created at 10:00 and auto clear is defined as 20 minutes, the group is automatically cleared if no alert is received in the next 20 minutes (i.e., by 10:20).
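The auto clear timing can be sketched as follows. This is a sketch under an assumption suggested by the heading "after last update": each newly correlated alert restarts the interval. The helper name `auto_clear_time` is hypothetical.

```python
from datetime import datetime, timedelta


def auto_clear_time(created_at, alert_times, interval):
    """Return when the group would auto clear if no further alerts arrive.

    Assumption: the interval is measured from the most recent update, which is
    the group creation time or the latest correlated alert, whichever is later.
    """
    last_update = max([created_at, *alert_times])
    return last_update + interval


created = datetime(2024, 1, 1, 10, 0)
interval = timedelta(minutes=20)

# No alerts after creation: the group clears at 10:20.
print(auto_clear_time(created, [], interval).strftime("%H:%M"))  # 10:20

# An alert correlated at 10:12 moves the clear time to 10:32.
late_alert = datetime(2024, 1, 1, 10, 12)
print(auto_clear_time(created, [late_alert], interval).strftime("%H:%M"))  # 10:32
```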

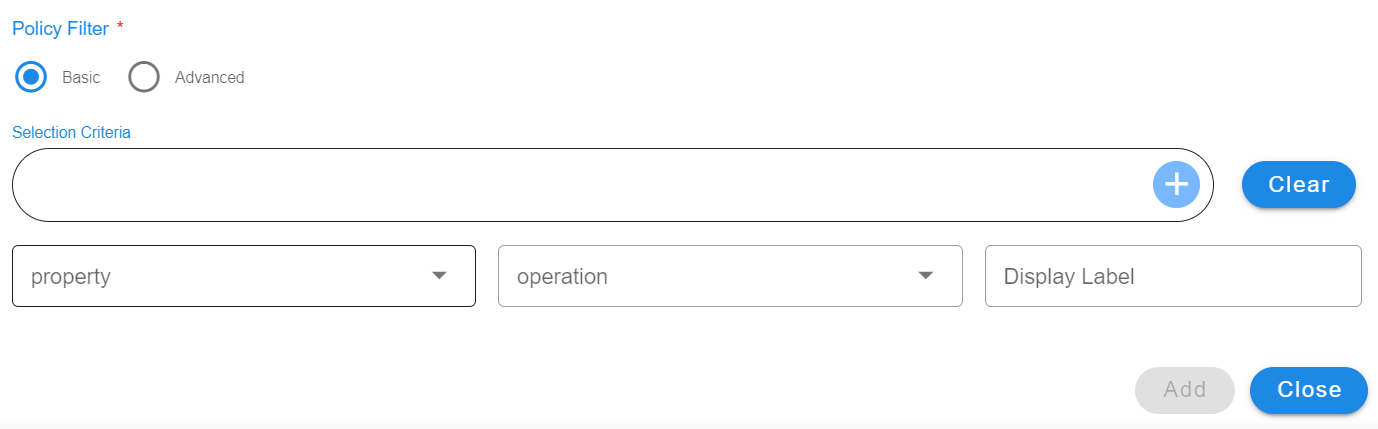

7.3.5 Policy Filter

- Policy filters can be defined to select which ingested alerts are correlated, based on certain criteria.

- Supported policy filter types:

a) Basic

b) Advanced

7.3.5.1 Basic

- A basic filter is defined using the filter widget supported in the UI. The default operator AND is used when multiple conditions are defined in the filter.

- Below is a sample in the basic format:

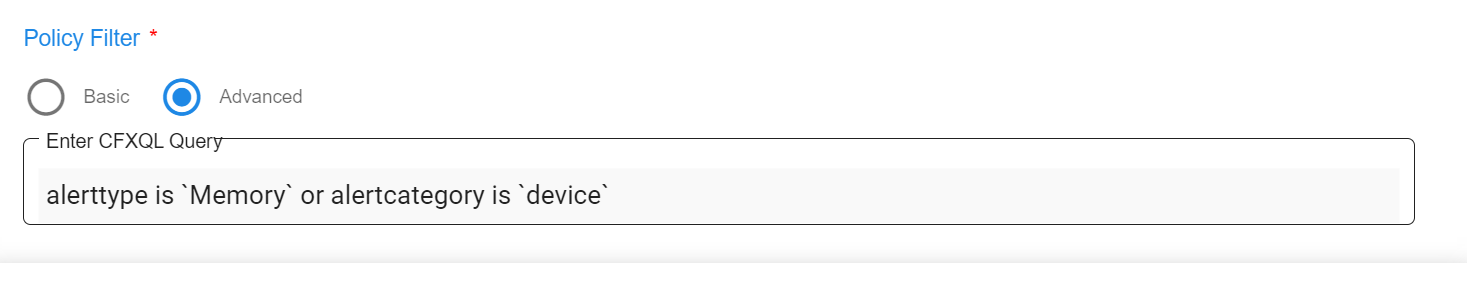

7.3.5.2 Advanced

- An advanced filter is defined using the CFXQL language, for cases where multiple conditions must be combined with OR/AND operations.

- For the CFXQL query format, see the CFXQL reference.

- Characters that need to be escaped in the value of a defined filter:

| Character | Use case | Example (with escape characters) |
|---|---|---|
| `$` | `test$123` | `Message contains 'Error logging\$123'` |
| `^` | `test^123` | `Message contains 'Error logging\^123'` |
| `*` | `test*123` | `Message contains 'Error logging\*123'` |
| `(` | `test(123` | `Message contains 'Error logging\(123'` |
| `)` | `test)123` | `Message contains 'Error logging\)123'` |
| `+` | `test+123` | `Message contains 'Error logging\+123'` |
| `[` | `test[123` | `Message contains 'Error logging\[123'` |
| `'` | `test'123` | `Message contains 'Error logging\'123'` |
| `?` | `test?123` | `Message contains 'Error logging\?123'` |
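When filter values are generated by a script, the escaping rule for the characters listed above can be applied with a small helper. The Python function below is a hypothetical convenience, not part of CFXQL or OIA, and it escapes only the characters named in this section.

```python
# Characters listed in this section as requiring a backslash escape.
ESCAPED_CHARACTERS = set("$^*()+[?'")


def escape_filter_value(value):
    """Prefix each listed special character in a filter value with a backslash."""
    return "".join("\\" + ch if ch in ESCAPED_CHARACTERS else ch for ch in value)


print(escape_filter_value("Error logging$123"))  # Error logging\$123
print(escape_filter_value("test(123"))           # test\(123
```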

Below is a sample advanced filter using CFXQL:

7.3.6 Filter Attributes Used to Define Filter

- Source Mechanism, Source, Severity, Alert Category, Alert Type, Cluster, Asset Type, IP Address, Asset Name, Component Name, Message.

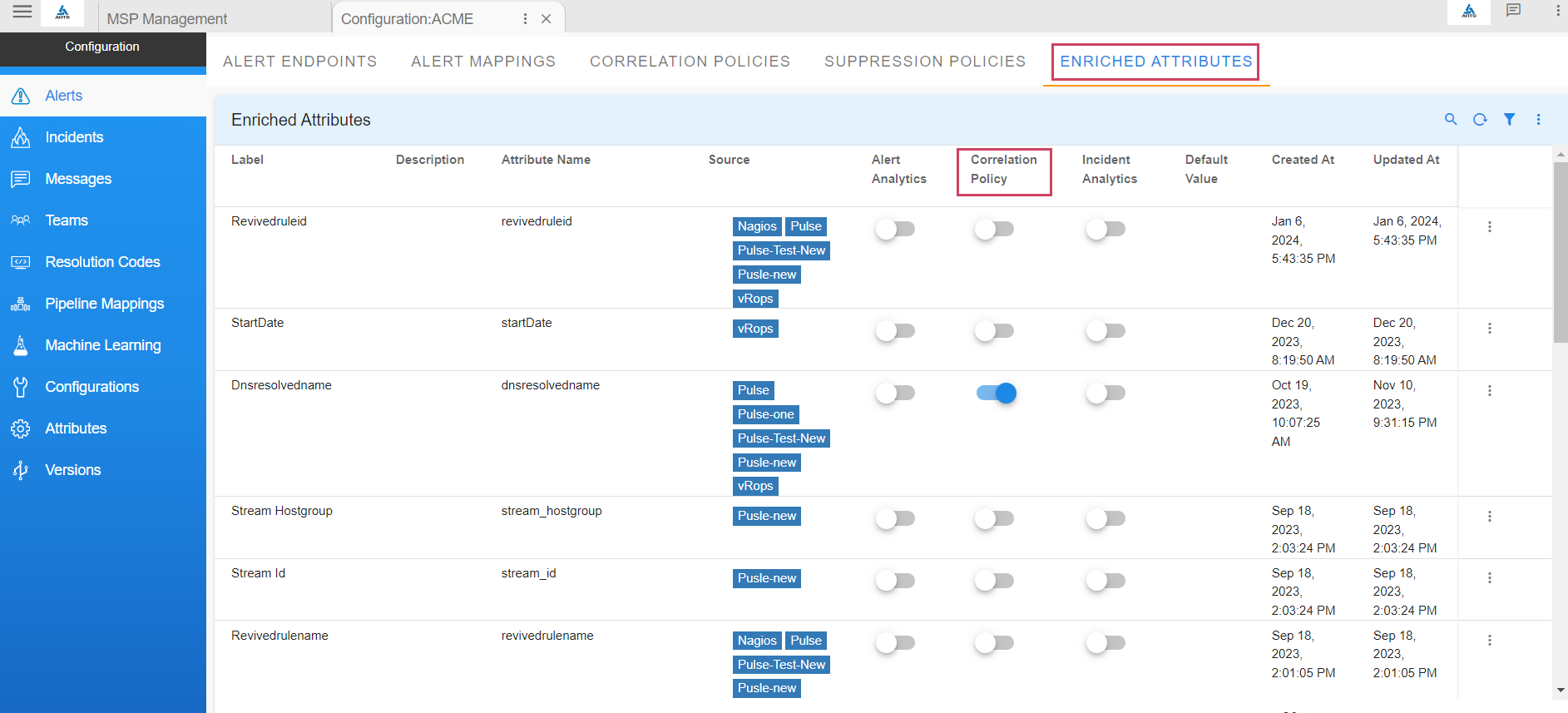

7.3.6.1 Using Enriched Attributes as Filter Attributes to Define Policy

- Enriched attributes of an alert can be used to define filters by enabling them from the Enriched Attributes management section.

- Path to manage enriched attributes of alerts: Home → Administration → Organization → Configure → Alerts → ENRICHED ATTRIBUTES → toggle switch under Correlation Policy

7.3.7 Group By

- A policy can be defined with multiple Group By attributes. A unique incident is created for each combination of Group By attribute values.

7.3.8 FAQs

- Can we have one incident created for a policy and correlate all the alerts to that one incident?

Define a policy with zero expiry minutes. Incoming alerts that match the policy filter are correlated to one incident, and the incident is cleared when all the alerts are cleared.

- Can an incident be active even though all the children are cleared?

An incident remains in the active state when its policy is defined with auto resolve set to No, even though all the alerts of the incident are cleared.

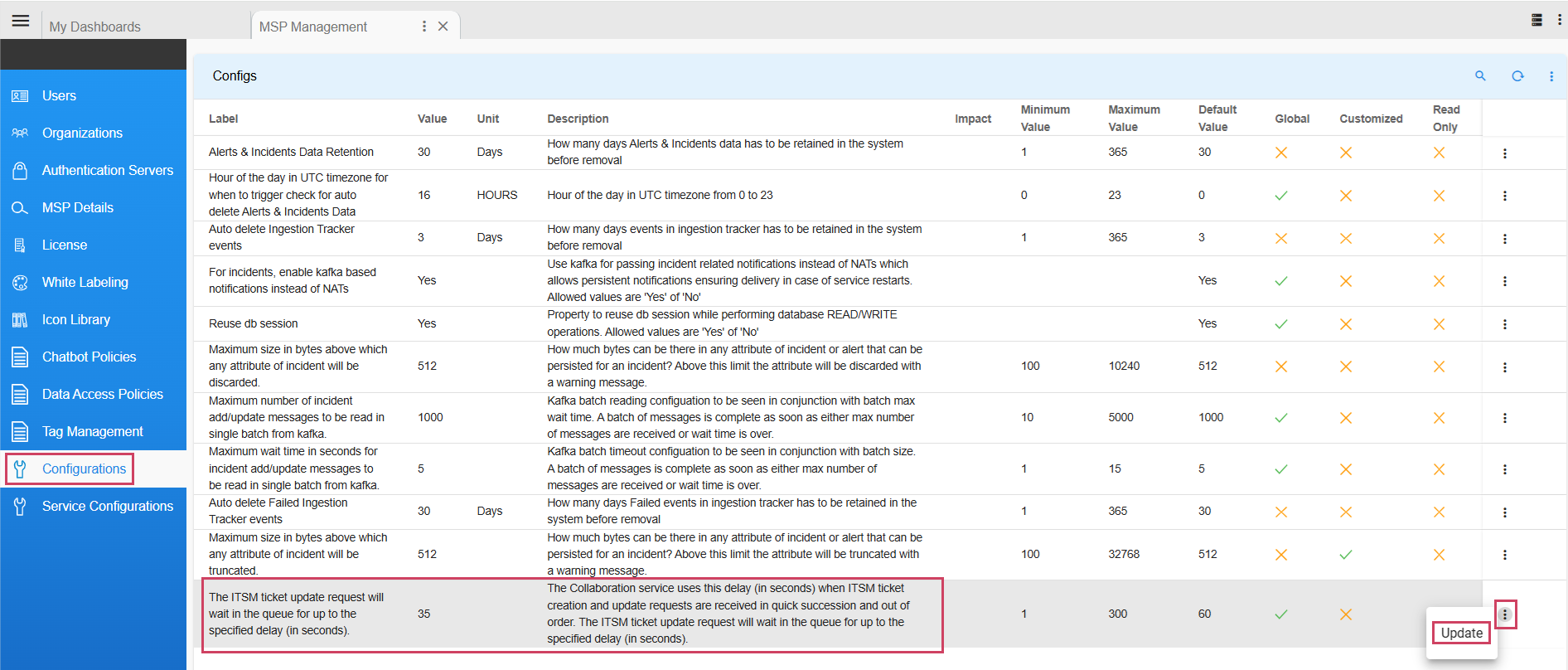

8. Configuration

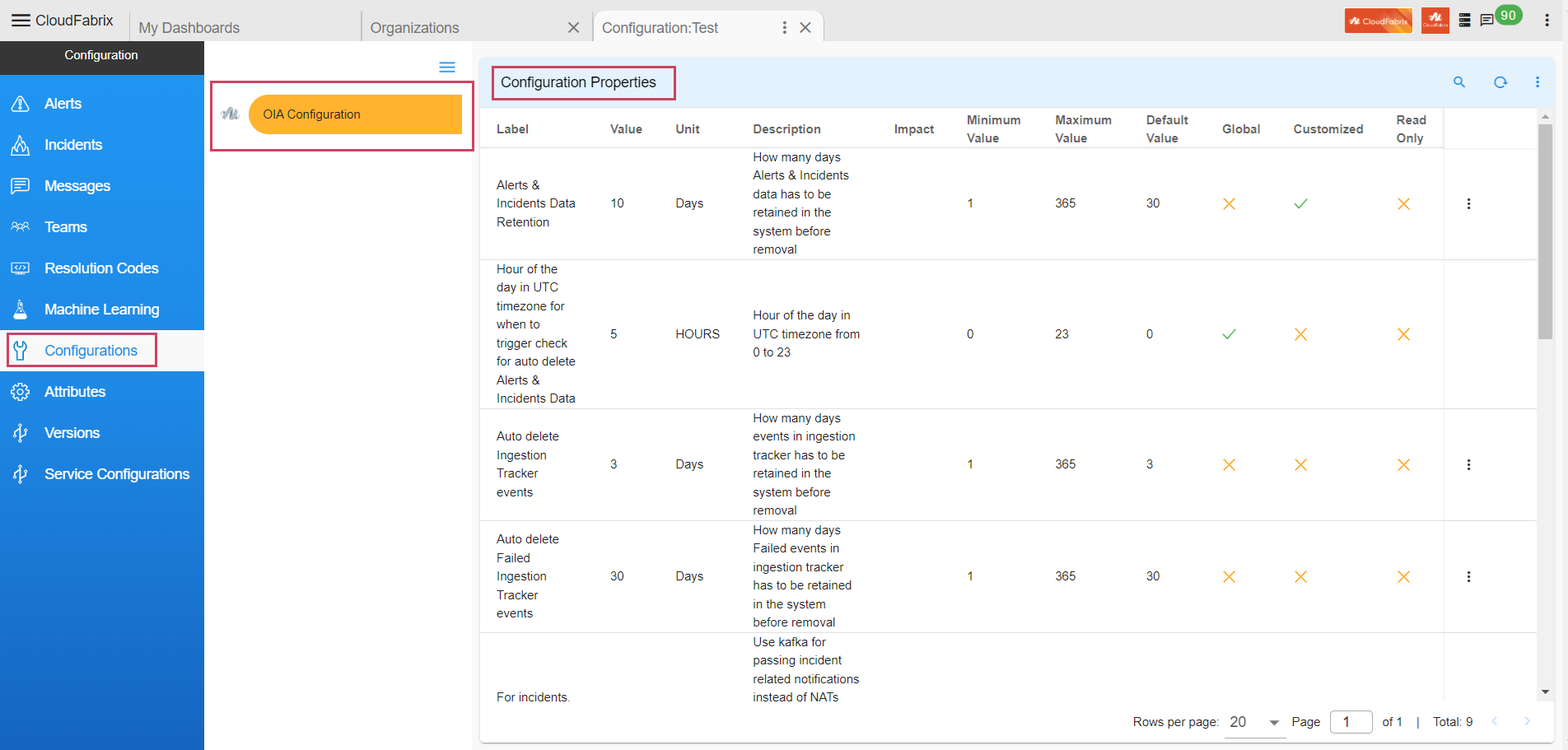

8.1 Manage OIA Configuration Properties

8.1.1 Overview

OIA configuration properties can be updated through the Configuration UI, for example Alerts & Incidents Data Retention and Auto Delete Ingestion Tracker Events.

- Configuration properties can be updated at the Global and Organization levels.

[a]. Properties that are enabled at the Global level cannot be changed at the Organization level.

[b]. Properties with Global set to False can be updated (customized) at the Organization level.

- Path to update configuration properties

[a]. Organization Level

Navigation Path : Home Menu → Configuration → Apps Administration → Configure → Configuration → OIA Configuration → Configuration Properties

[b]. Global Level

Navigation Path : Home Menu → Administration → Configurations → Configs

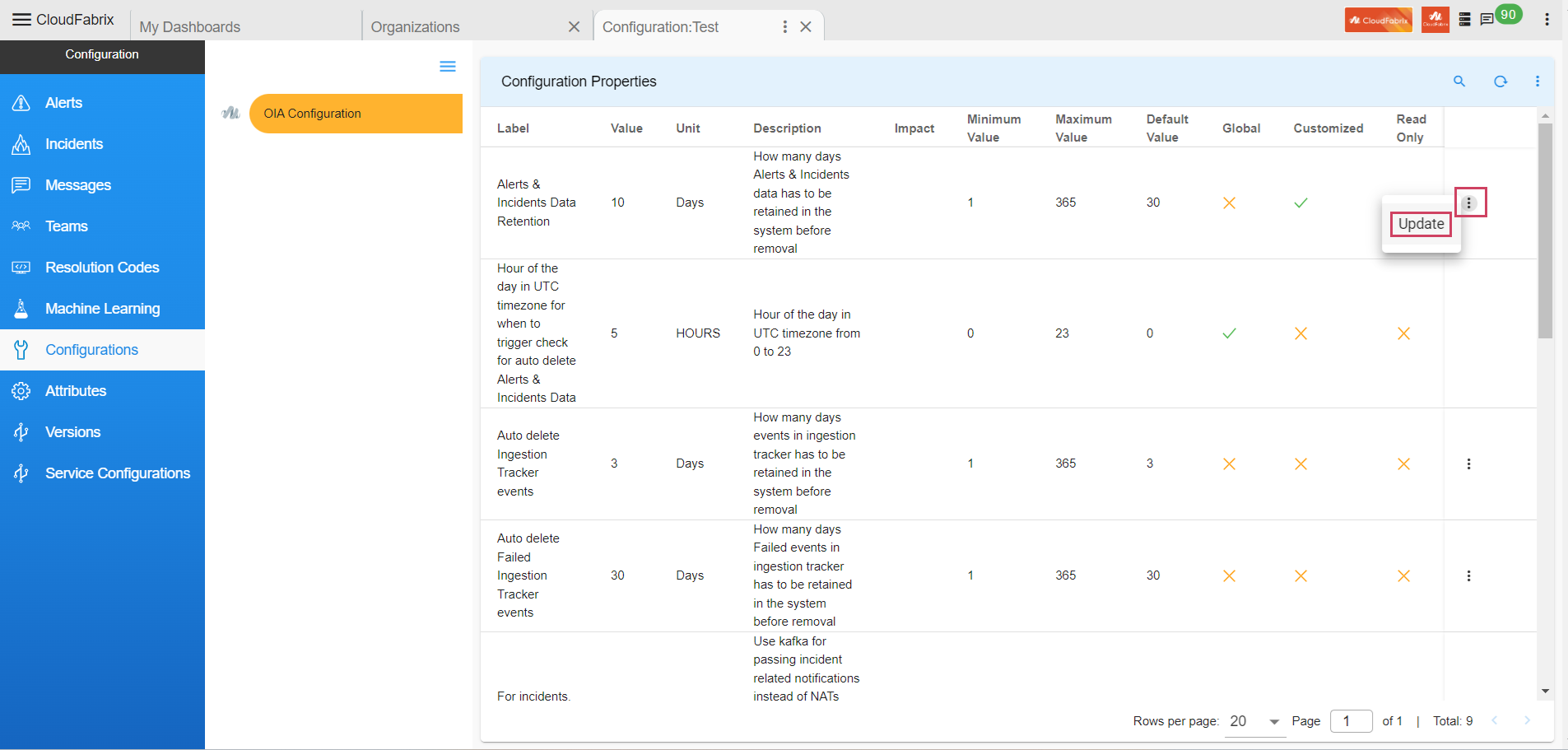

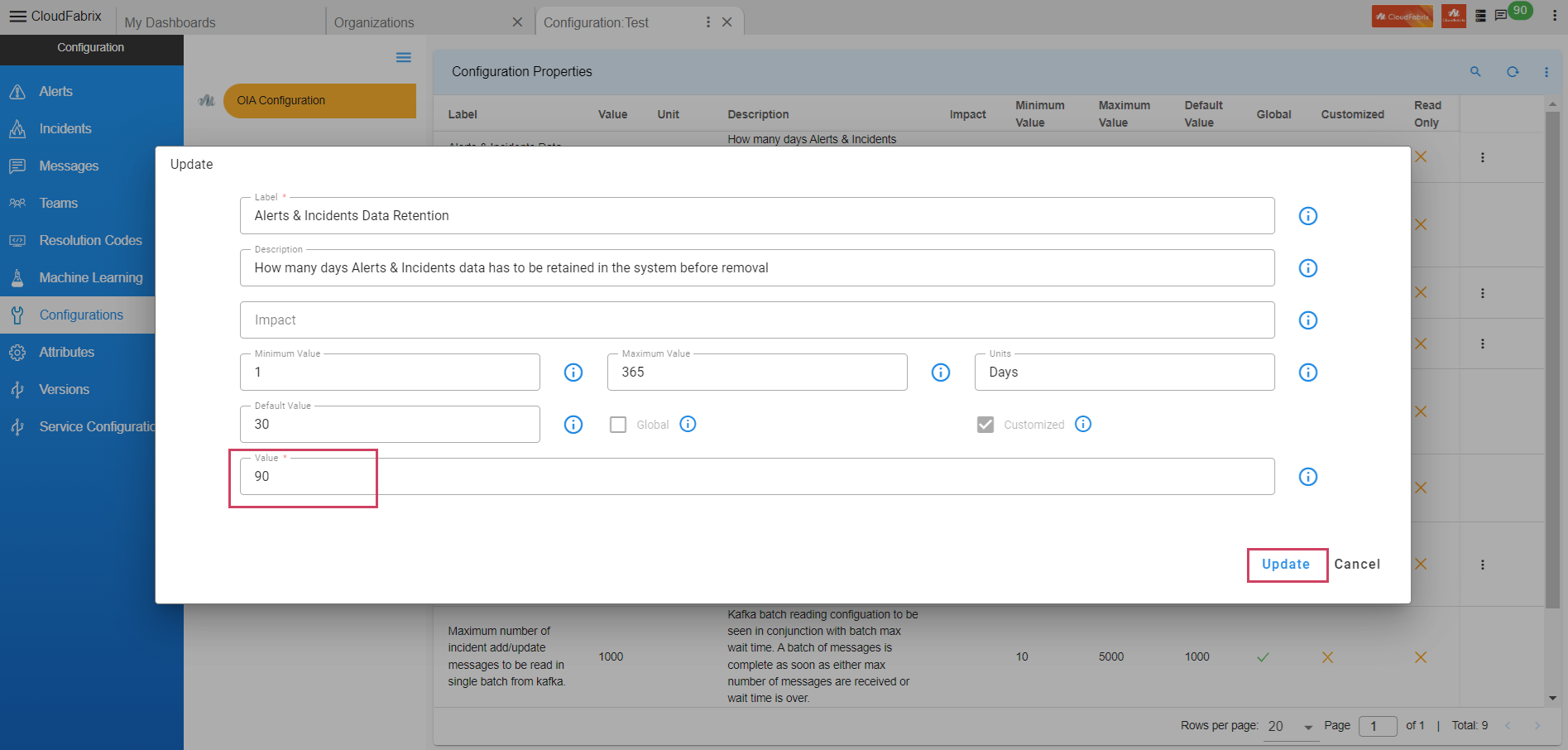

8.1.2 Configure Property (DB Configuration Change)

- Path to update Configuration Property : Home Menu → Configuration → Apps Administration → Configure → Configuration → OIA Configuration → Configuration Properties → Update

Alerts & Incidents Data Retention

- Defines how many days Alerts & Incidents data is retained in the system before removal.

Note

The PStream retention and this property should be set to the same value to avoid functional impact.

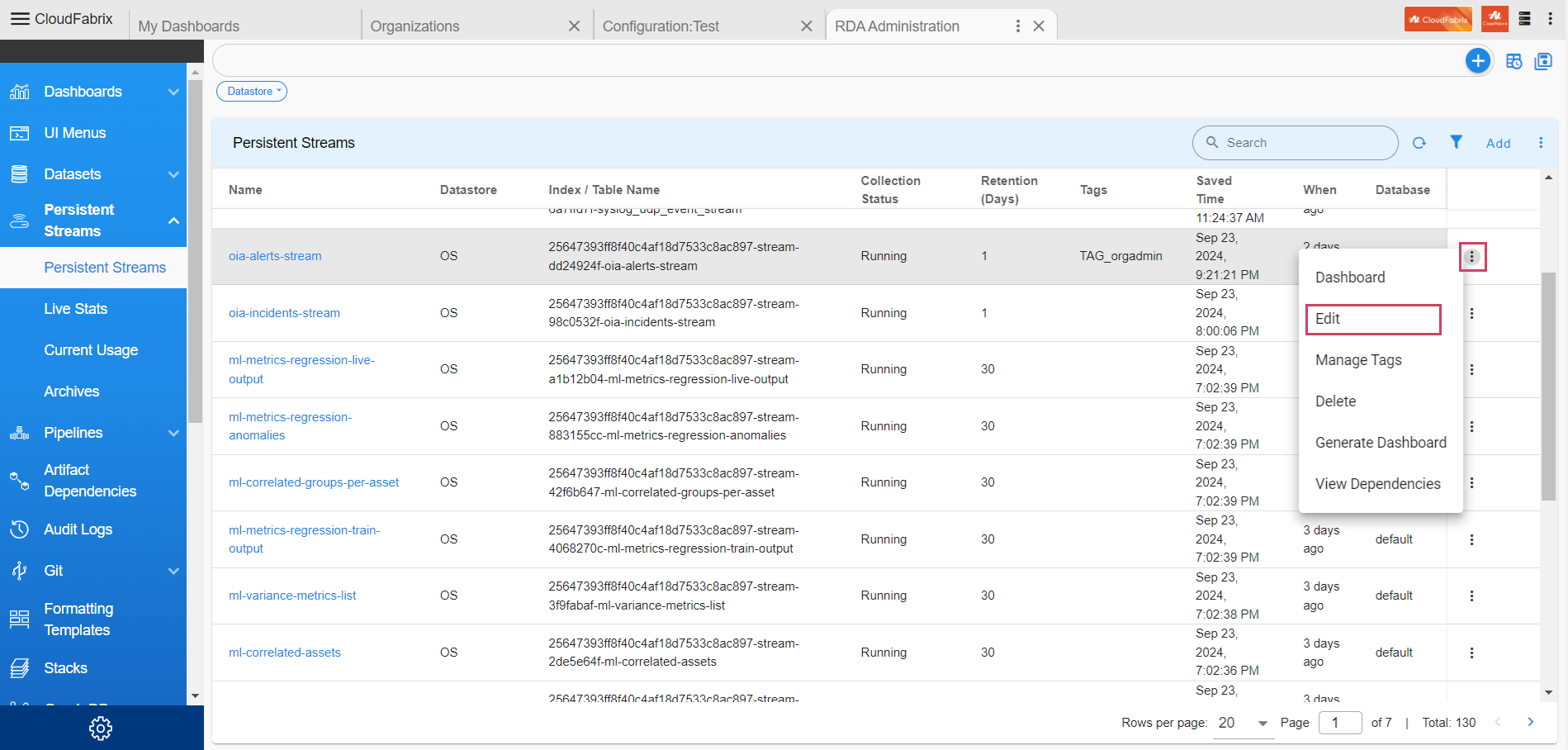

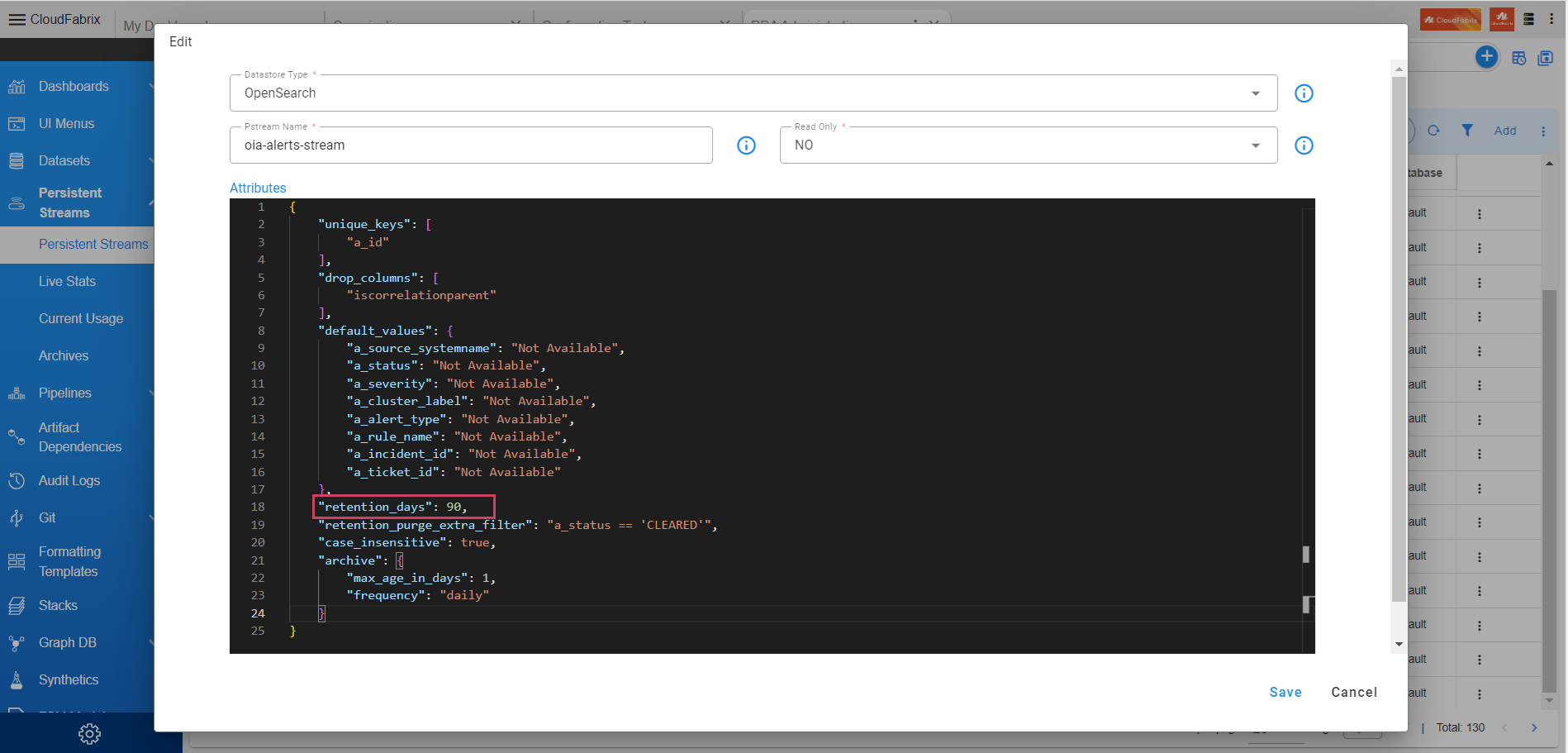

8.1.3 Configure Alerts PStream Retention

- The steps below update the PStream retention days for the Alerts stream.

- Path to update the PStream configuration: Home Menu → Configuration → RDA Administration → Persistent Streams → Persistent Streams → oia-alerts-stream → Edit

- Update the retention_days property value to 90 days in the JSON.

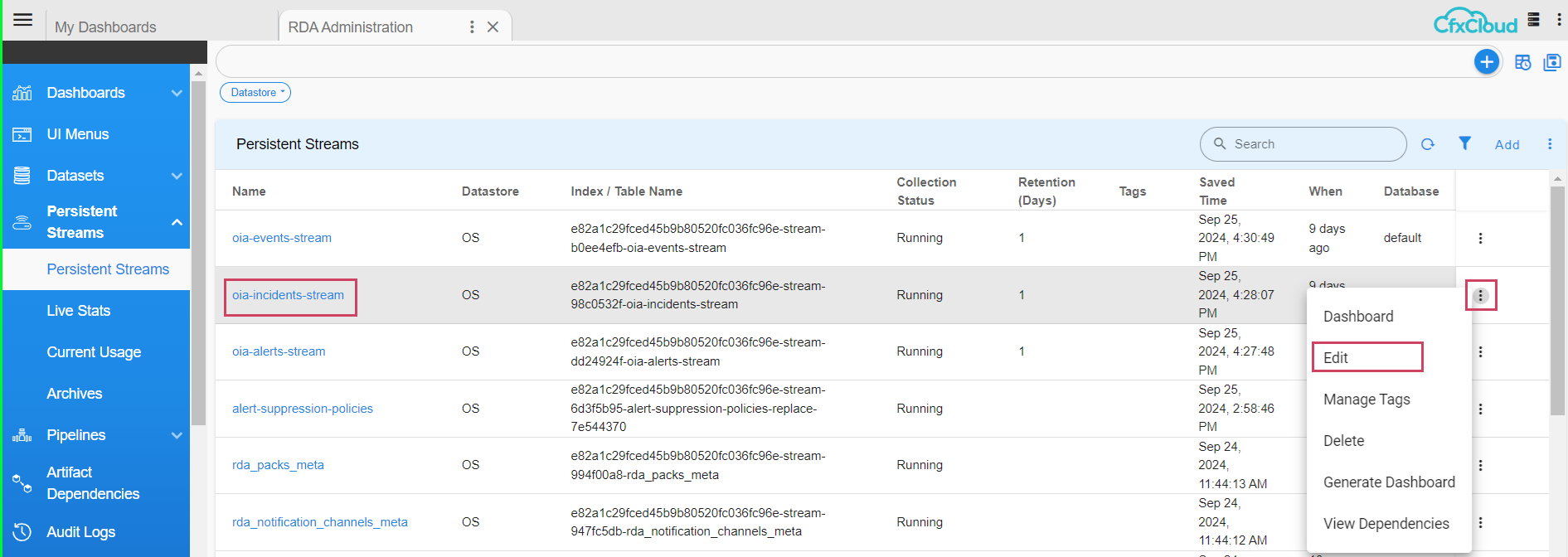

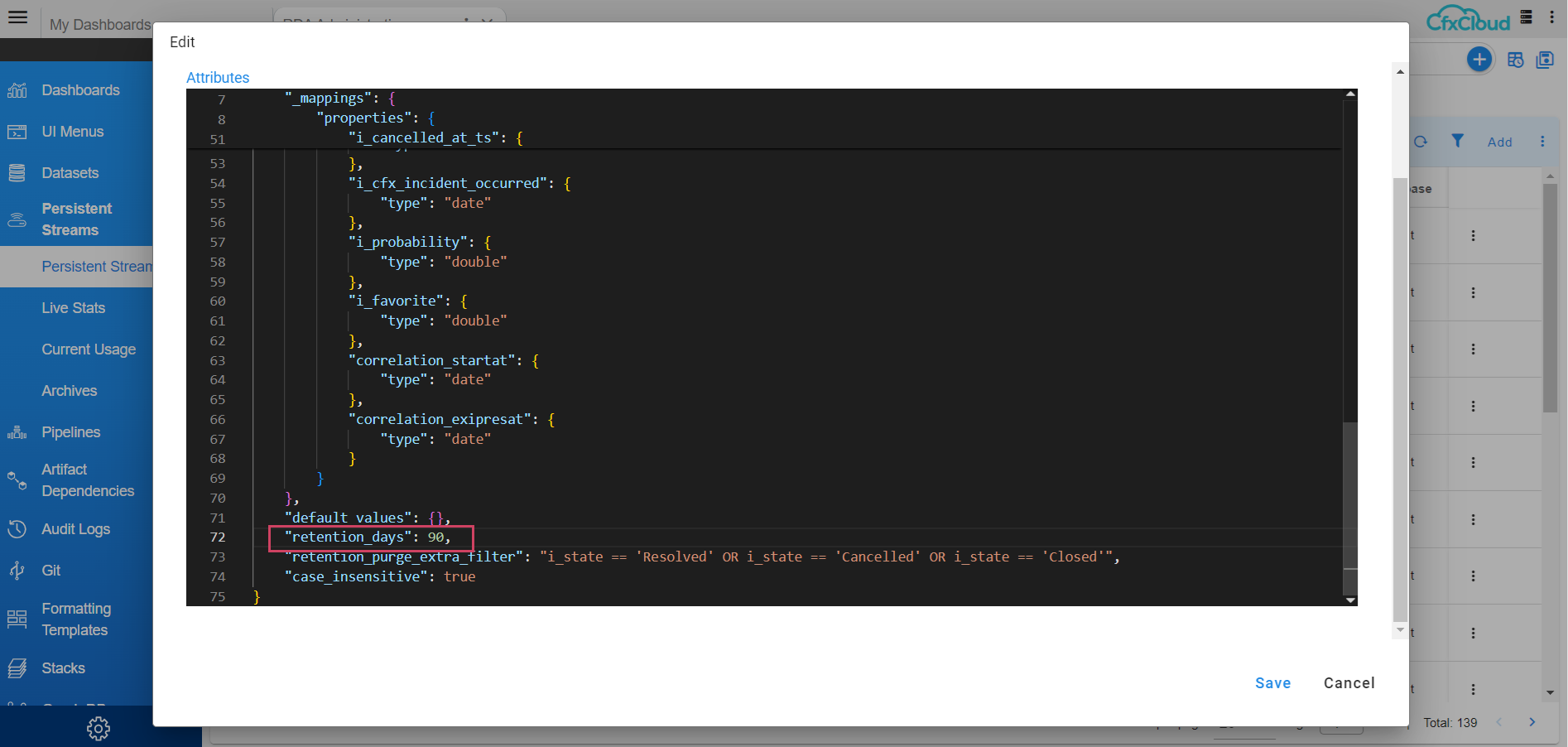

8.1.4 Configure Incident PStream Retention

- The steps below update the PStream retention days for the Incident stream.

- Path to update the PStream configuration: Home Menu → Configuration → RDA Administration → Persistent Streams → Persistent Streams → oia-incidents-stream → Edit

- Update the retention_days property value to 90 days in the JSON.

8.1.5 Configuring Wait Queue Time for ITSM Ticket Updates

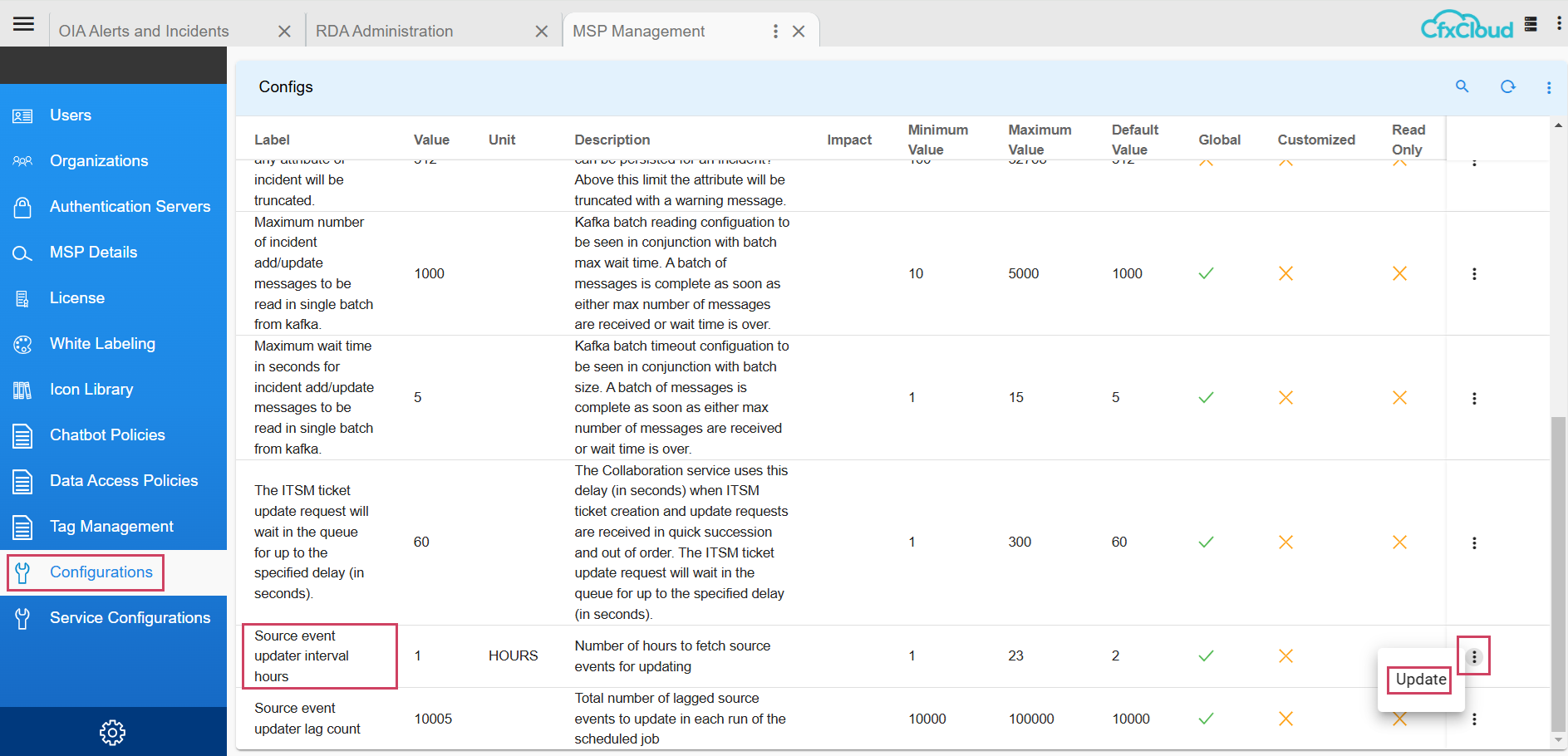

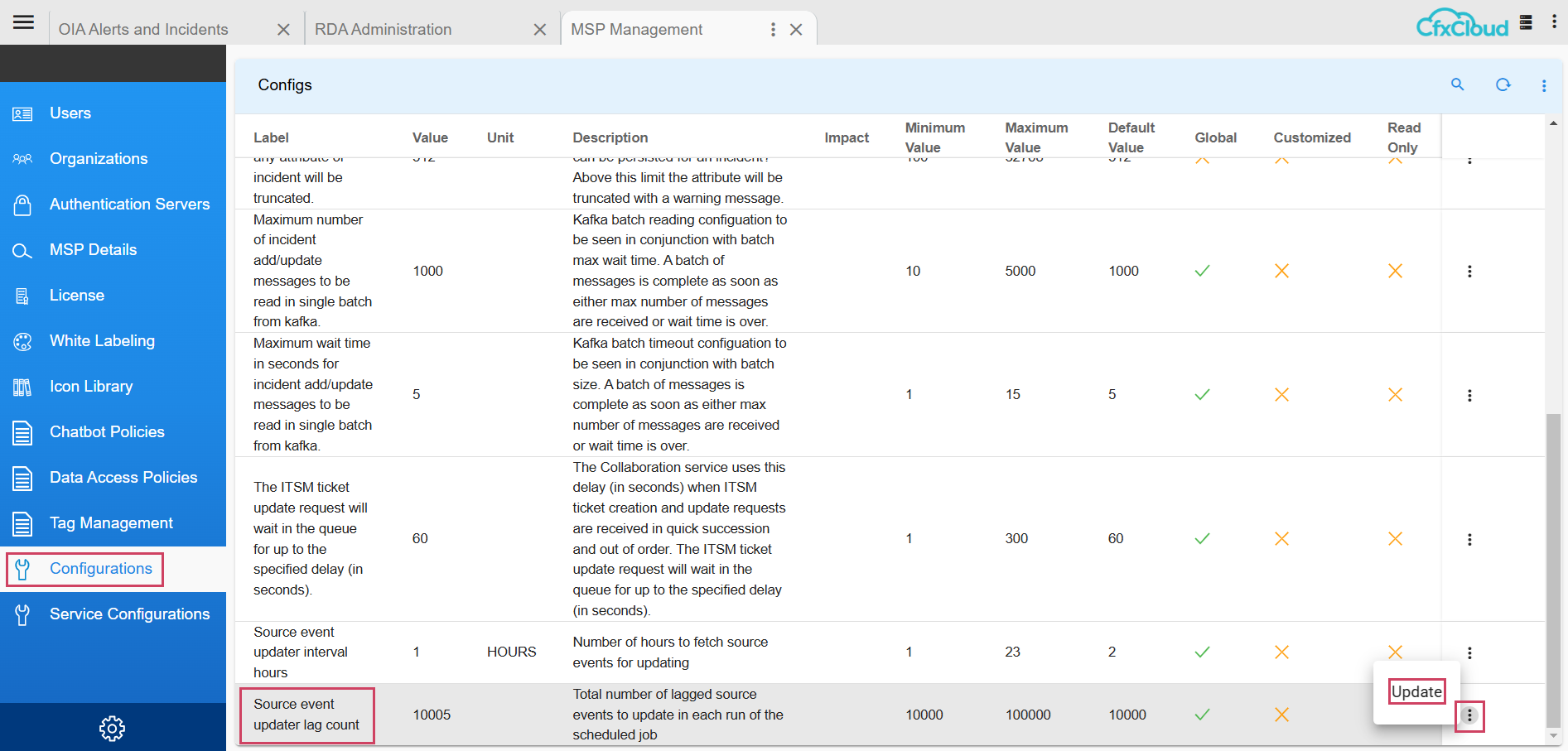

Path to update Configuration Property : Home Menu → Administration → Configurations → Update as shown in the screenshot below

8.1.6 Configuring Source Event Updater Interval Hours

Path to update Configuration Property : Home Menu → Administration → Configurations → Update as shown in the screenshot below

8.1.7 Configuring Source Event Updater Lag Count

Path to update Configuration Property : Home Menu → Administration → Configurations → Update as shown in the screenshot below

Note

The configuration changes described above apply across all the projects in the system.

9. Incident Management

cfxOIA creates an Incident for every correlated Alert Group and sends it to ITSM tools (such as ServiceNow, PagerDuty, etc.) for further processing by IT Analysts, NOC/SOC Engineers, or Tier-1/Tier-2 Engineers. cfxOIA provides a module called Incident Room that AIOps operators and ITSM operators can use to accelerate incident analysis and resolution. The Incident Room provides all the relevant context, data, insights, and tools in one place for incident resolution.


9.1 Incident Mapping

9.1.1 Incident Endpoints

9.1.1.1 Endpoint Role

- Source

Examples: Reading data from Webhooks, Kafka

- Target

Examples: Creating/Updating ITSM tickets

9.1.1.2 Endpoint to Mapping

- One to One

One Endpoint mapped to one Incident Mapping.

- One to Many

a) One Endpoint mapped to more than one Incident Mapping.

b) Incident payload fields can be used to define a condition that must be met before the mapping is applied to the current event. For example, the alert_source field of the incident payload could be used.

9.1.1.3 Mapping Conditions

- Mapping without Condition

This is typically mapped to one endpoint. All Incidents use this single mapping and produce similar payloads to submit to ITSM.

- Mapping with Condition

This helps create different ITSM payloads according to the defined condition. Conditions can be based on the alert source mechanism or another field.

9.1.1.4 Mapping Examples

Mapping helps in preparing the payload that is sent to ITSM. The system receives the Incident payload as the base, and users can use this endpoint mapping to customize the base payload by updating existing fields or creating new ones.

1) Passing all the fields as they are; no additional fields are needed.

{

"mappings": [

{

"to": "status",

"from": "state",

"func": {

"map_values": {

"New": "New",

"Assigned": "New",

"OnHold": "On Hold",

"On Hold": "On Hold",

"Resolved": "Resolved",

"Closed": "Resolved",

"Cancelled": "Canceled",

"default": "New"

}

}

}

],

"keepUnmapped": true

}

2) Passing only the mapped fields. When keepUnmapped is set to false, as in the following mapping, a field that is NOT defined in the mapping is not sent to the next step, i.e. creating/updating the ITSM ticket.

{

"conditions": [

{

"on": "alert_source",

"op": "matches",

"expr": "Corestack"

}

],

"mappings": [

{

"to": "customerid",

"from": "customerid"

},

{

"to": "publish-stream-type",

"func": {

"evaluate": {

"expr": "'KAFKA'"

}

}

}

],

"keepUnmapped": false

}
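The effect of map_values and keepUnmapped can be sketched in Python. This is a minimal illustration under stated assumptions, not the cfxOIA implementation: the function `apply_mapping` is hypothetical, it handles only "from", "to", and "map_values", and it assumes that keepUnmapped controls whether unmapped payload fields are carried over.

```python
def apply_mapping(payload, spec):
    """Apply the 'mappings' rules to an incident payload (illustrative only)."""
    # Assumption: keepUnmapped=True starts from a copy of the payload,
    # keepUnmapped=False starts from an empty result.
    out = dict(payload) if spec.get("keepUnmapped") else {}
    for rule in spec["mappings"]:
        value = payload.get(rule.get("from"))
        table = rule.get("func", {}).get("map_values")
        if table is not None:
            # Unknown values fall back to the "default" entry.
            value = table.get(value, table.get("default"))
        out[rule["to"]] = value
    return out


spec = {
    "mappings": [
        {
            "to": "status",
            "from": "state",
            "func": {"map_values": {"Closed": "Resolved", "default": "New"}},
        }
    ],
    "keepUnmapped": True,
}
incident = {"state": "Closed", "summary": "Disk full"}
kept = apply_mapping(incident, spec)
dropped = apply_mapping(incident, {**spec, "keepUnmapped": False})
print(kept)
print(dropped)
```

With keepUnmapped set to true the result still carries `state` and `summary` next to the new `status` field; with false only `status` remains.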

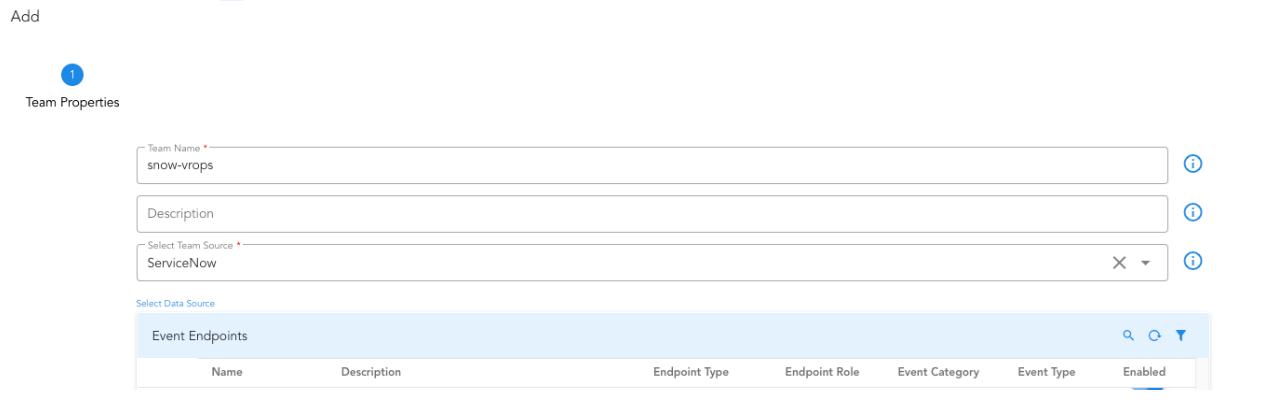

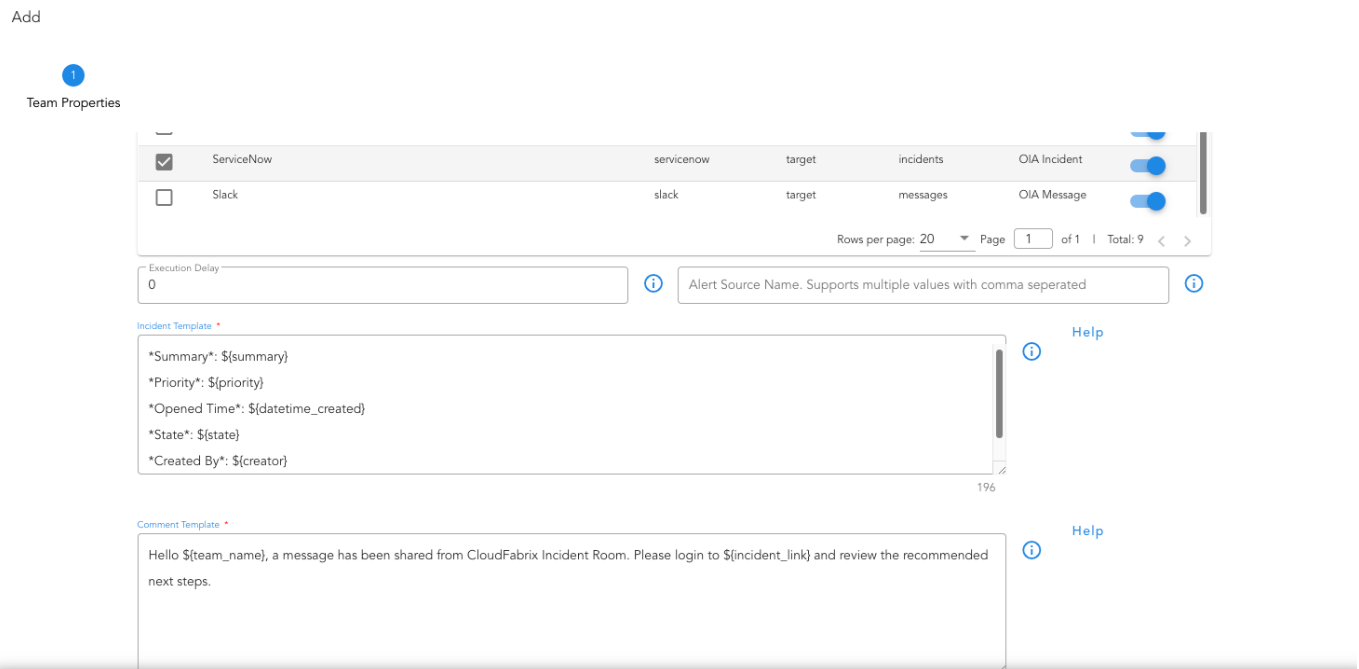

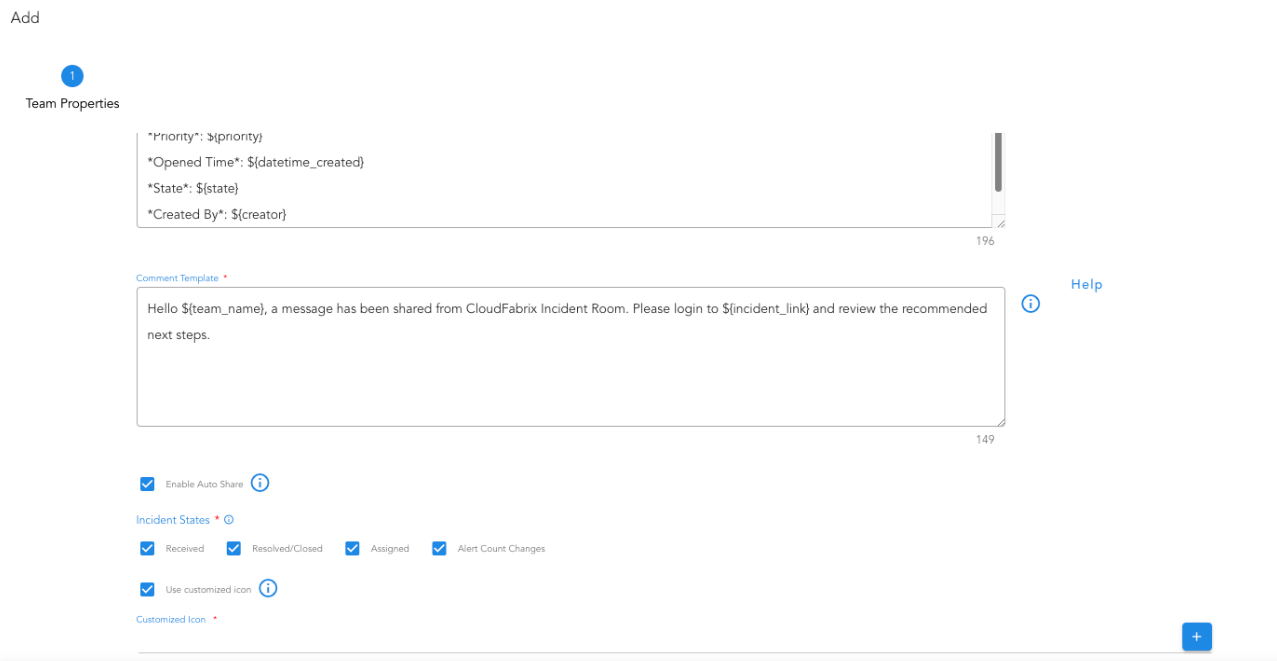

9.1.2 Teams

A Team is required to listen for Incident events and route them to the corresponding Endpoints. A Team lets users configure which events to listen for, such as Incident creation and Incident resolution. Additionally, a Team can be scoped to a single Incident source, a specific set of Incident sources, or all sources. The system performs a 3-way check to make sure the Incident source matches the Team source and also matches the corresponding Endpoint source. If a source is not set, the Team is applied to all Incidents and picks an Endpoint with a similar rule to match.

9.1.3 Condition

The condition is evaluated before any mapping is applied. It can be used to filter and pick the applicable input data based on the incoming data. For each set of data, such as alert_source in the conditional mapping example, the most applicable mapper can be picked dynamically.
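As a rough Python sketch of this selection step: the code below assumes that the "matches" operator is a regular-expression match on the field named by "on", and that a mapping without conditions always applies. The functions `condition_holds` and `pick_mapping` and the "name" key are hypothetical.

```python
import re


def condition_holds(cond, payload):
    # Assumption: "matches" means a regular-expression match on the field value.
    if cond["op"] != "matches":
        raise ValueError(f"unsupported op: {cond['op']}")
    return re.match(cond["expr"], str(payload.get(cond["on"], ""))) is not None


def pick_mapping(mappings, payload):
    """Return the first mapping whose conditions all hold."""
    for mapping in mappings:
        # A mapping without conditions matches every payload.
        if all(condition_holds(c, payload) for c in mapping.get("conditions", [])):
            return mapping
    return None


candidates = [
    {
        "name": "corestack",
        "conditions": [{"on": "alert_source", "op": "matches", "expr": "Corestack"}],
    },
    {"name": "fallback"},
]
print(pick_mapping(candidates, {"alert_source": "Corestack"})["name"])
print(pick_mapping(candidates, {"alert_source": "Zabbix"})["name"])
```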

9.1.4 Mandatory Fields

By default, everything except mappings and keepUnmapped is optional. However, some fields are required by downstream applications such as ServiceNow and PagerDuty.

- For ServiceNow and PagerDuty

{

"to": "customerid",

"from": "customerid"

},

{

"to": "roomid",

"from": "roomid"

},

{

"to": "projectid",

"from": "projectId"

},

{

"to": "team_id",

"from": "team_id"

},

{

"to": "team_name",

"from": "team_name"

},

{

"to": "tool_name",

"from": "tool_name"

},

{

"to": "incidentid",

"from": "incidentid"

}
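A mapping can be checked for these fields before it is saved. The following Python sketch is an assumption-based helper, not a cfxOIA API: `missing_mandatory` is a hypothetical name, and the set of targets simply mirrors the "to" values listed above.

```python
MANDATORY_TARGETS = {
    "customerid", "roomid", "projectid", "team_id",
    "team_name", "tool_name", "incidentid",
}


def missing_mandatory(spec):
    """Return the mandatory 'to' fields that the mapping does not define."""
    mapped = {rule["to"] for rule in spec.get("mappings", [])}
    return sorted(MANDATORY_TARGETS - mapped)


spec = {
    "mappings": [
        {"to": "customerid", "from": "customerid"},
        {"to": "roomid", "from": "roomid"},
        {"to": "projectid", "from": "projectId"},
    ],
    "keepUnmapped": False,
}
print(missing_mandatory(spec))
```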

9.1.4.1 Mandatory Fields ServiceNow

The following additional fields are mandatory for creating a ServiceNow incident.

{

"to": "state",

"from": "state",

"func": {

"map_values": {

"New": "New",

"Assigned": "New",

"OnHold": "On Hold",

"On Hold": "On Hold",

"Resolved": "Resolved",

"Closed": "Resolved",

"Cancelled": "Canceled",

"default": "New"

}

}

},

{

"to": "ticketStatus",

"from": "state"

},

{

"to": "impact",

"from": "priority",

"func": {

"map_values": {

"1 - Critical": "Total Loss of Service",

"2 - High": "Partial Loss",

"3 - Moderate": "Partial Loss",

"4 - Low": "No Impact",

"5 - Planning": "No Impact",

"default": "No Impact"

}

}

},

{

"to": "urgency",

"from": "priority",

"func": {

"map_values": {

"1 - Critical": "P1",

"2 - High": "P2",

"3 - Moderate": "P3",

"4 - Low": "P3",

"5 - Planning": "P4",

"default": "P3"

}

}

},

{

"to": "notes_text",

"func": {

"evaluate": {

"expr": "'getAlertsAsNotes'"

}

}

},

{

"to": "notes_author",

"func": {

"evaluate": {

"expr": "'CFX'"

}

}

},

{

"to": "channel",

"func": {

"evaluate": {

"expr": "'Fault Management'"

}

}

},

{

"to": "account",

"func": {

"evaluate": {

"expr": "'The Tata Power Company Limited'"

}

}

},

{

"to": "shortDescription",

"from": "_tmp_ci_aa_alert_email_sub"

},

{

"to": "description",

"func": {

"evaluate": {

"expr": "'getDescription'"

}

}

}

9.1.4.2 Functional Fields

The following fields are optional, but they are important for preparing the payload based on changes to the source transaction.

alert_changes: A list that shows how many new alerts were correlated in the latest update event.

clear_alerts: A list of all cleared alert IDs. It helps prepare notes/comments with a list of the alert subjects that were cleared.

incident_changes: A list of the incident model fields changed in the latest update.

exclude_on_clear: For ServiceNow, fields such as state and priority do not have to be sent when they have not changed. On a clear event, only a comment is sent and the other fields on the ITSM ticket are left untouched.

exclude_on_update: Similar to exclude_on_clear, fields that are NOT updated are removed. By default the system sends state and priority. However, if they have not changed in the current update event, they are removed before sending to ITSM.

{

"to": "alert_changes",

"from": "alert_changes"

},

{

"to": "clear_alerts",

"from": "clear_alerts"

},

{

"to": "incident_changes",

"from": "incident_changes"

},

{

"to": "exclude_on_clear",

"func": {

"evaluate": {

"expr": "'[\"description\",\"summary\",\"short_description\",\"shortDescription\",\"priority\",\"impact\",\"state\",\"urgency\",\"ticketStatus\"]'"

}

}

},

{

"to": "exclude_on_update",

"func": {

"evaluate": {

"expr": "'[\"description\",\"summary\",\"short_description\",\"shortDescription\",\"priority\",\"impact\",\"state\",\"urgency\",\"ticketStatus\"]'"

}

}

}
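The pruning described for exclude_on_update can be sketched as follows. This is only an interpretation of the description above: it assumes the exclude list arrives as a JSON-encoded list of field names and that incident_changes names the fields changed in the current update. The function `prune_for_update` is hypothetical.

```python
import json


def prune_for_update(payload):
    """Drop excludable fields that did not change in the current update."""
    excludable = json.loads(payload.get("exclude_on_update", "[]"))
    changed = set(payload.get("incident_changes", []))
    # Keep a field unless it is excludable AND unchanged.
    return {k: v for k, v in payload.items() if k not in excludable or k in changed}


payload = {
    "state": "New",
    "priority": "2 - High",
    "comments": "2 new alerts correlated",
    "incident_changes": ["priority"],
    "exclude_on_update": '["description","priority","state"]',
}
pruned = prune_for_update(payload)
print(sorted(pruned))
```

In this example `state` is dropped because it is excludable and unchanged, while `priority` is kept because it appears in `incident_changes`.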

delay_between_actions: Adds a delay, in seconds (default 1), between the Create and Resolved state updates. This applies only when the ITSM ticket does not yet exist at the time the Resolve update is processed. The system first attempts to create a new ITSM ticket, waits the specified number of seconds, and then makes the Resolve update call on the new ticket.

allow_state_reopen: Set this to True if the ITSM system expects all forms of state updates, including reopen after Resolved/Closed. The default is False, in which case the system does not post reopen state updates to ITSM.

cfx_to_itsm_status: This is useful when translating the latest incident status to the corresponding ITSM status.

{

"to": "cfx_to_itsm_status",

"func": {

"evaluate": {

"expr": "'{\"New\":\"1\",\"Open\":\"1\",\"Assigned\":\"2\",\"OnHold\":\"3\",\"OnHold\":\"3\",\"Resolved\":\"6\",\"Closed\":\"7\",\"Cancelled\":\"8\"}'"

}

}

}

itsm_to_cfx_status: This is useful when translating the ITSM status back to CFX status.

{

"to": "itsm_to_cfx_status",

"func": {

"evaluate": {

"expr": "'{\"4\":\"Resolved\",\"5\":\"Closed\",\"6\":\"Resolved\",\"7\":\"Closed\"}'"

}

}

}
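Both status tables are written as a single-quoted string that wraps a JSON object. The Python sketch below shows one way to read such a value, assuming the evaluate expression follows Python-style string literal rules; `decode_expr` is a hypothetical helper and the tables are shortened copies of the ones above.

```python
import ast
import json


def decode_expr(expr):
    """Unwrap the quoted literal, then parse the JSON document inside it."""
    return json.loads(ast.literal_eval(expr))


cfx_to_itsm = decode_expr("'{\"New\":\"1\",\"Resolved\":\"6\",\"Closed\":\"7\"}'")
itsm_to_cfx = decode_expr(
    "'{\"4\":\"Resolved\",\"5\":\"Closed\",\"6\":\"Resolved\",\"7\":\"Closed\"}'"
)

# Translate a CFX status to its ITSM code and back again.
code = cfx_to_itsm["Resolved"]
print(code, itsm_to_cfx[code])
```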

retry_interval: Delay, in seconds, between retries when an ITSM create or update fails. The default is 5.

skip_retry_on_keywords: List of keywords that, when found in the ITSM action response, cause the retry to be skipped. Default: [not available, invalid, inactive state, operation failed, doctype]

{

"to": "skip_retry_on_keywords",

"func": {

"evaluate": {

"expr": "'[\"not available\",\"invalid\",\"inactive state\",\"operation failed\",\"doctype\"]'"

}

}

},
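The interaction between retry_interval and skip_retry_on_keywords can be sketched in Python. Several details are assumptions made for the illustration: the maximum number of attempts, the case-insensitive keyword match, and the `(ok, response)` shape returned by the action are not stated in this document, and `call_with_retry` is a hypothetical name.

```python
import time

DEFAULT_SKIP_KEYWORDS = (
    "not available", "invalid", "inactive state", "operation failed", "doctype",
)


def call_with_retry(action, max_attempts=3, retry_interval=5,
                    skip_keywords=DEFAULT_SKIP_KEYWORDS, sleep=time.sleep):
    """Call action() until it succeeds, a skip keyword appears, or attempts run out."""
    response = ""
    for attempt in range(1, max_attempts + 1):
        ok, response = action()
        if ok:
            return True, response, attempt
        # Assumption: keywords are matched case-insensitively.
        if any(keyword in response.lower() for keyword in skip_keywords):
            return False, response, attempt
        if attempt < max_attempts:
            sleep(retry_interval)
    return False, response, max_attempts


responses = iter([(False, "gateway timeout"), (True, "created INC0010001")])
retried = call_with_retry(lambda: next(responses), sleep=lambda seconds: None)
skipped = call_with_retry(lambda: (False, "Invalid assignment group"),
                          sleep=lambda seconds: None)
print(retried)
print(skipped)
```

The `sleep` parameter is injected only so the example runs instantly; a real caller would leave the default.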

9.1.5 Supported Functions

9.1.5.1 JSON Parsing and Traversing

9.1.5.2 Mapping

{

"to": "configuration_items",

"from": "configuration_items",

"func": {

"jsonDecode": {}

}

},

{

"to": "_tmp_ci_aa",

"from": "configuration_items",

"func": {

"valueRef": {

"path": "alert_attributes"

}

}

},

{

"to": "_tmp_ci_aa",

"from": "_tmp_ci_aa",

"func": {

"jsonDecode": {}

}

},

{

"to": "_tmp_ci_aa_assetName",

"from": "_tmp_ci_aa",

"func": {

"valueRef": {

"path": "assetName"

}

}

}
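The four rules above decode a JSON string, pick a nested value, decode that value again, and then pick a field from it. A plain Python equivalent of that chain, under the assumption that jsonDecode parses a JSON string and valueRef reads a key by path, looks like this (the sample payload is invented for the illustration):

```python
import json

# Hypothetical incident payload: configuration_items is a JSON string whose
# alert_attributes value is itself a JSON string.
incident = {
    "configuration_items": json.dumps(
        {"alert_attributes": json.dumps({"assetName": "db01.example.com"})}
    )
}

configuration_items = json.loads(incident["configuration_items"])  # jsonDecode
tmp_ci_aa = configuration_items["alert_attributes"]                # valueRef: alert_attributes
tmp_ci_aa = json.loads(tmp_ci_aa)                                  # jsonDecode
tmp_ci_aa_asset_name = tmp_ci_aa["assetName"]                      # valueRef: assetName
print(tmp_ci_aa_asset_name)
```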

9.1.5.3 Split

{

"from": "assetName",

"to": "asset_shortname",

"func": [

{

"split": {

"sep": "."

}

},

{

"valueRef": {

"path": "0"

}

}

]

}

9.1.5.4 RegEx

{

"from": "_tmp_body",

"to": "message",

"func": [

{

"match": {

"expr": ".*Failure Reason:?\\s?(?P<message>.*?)\\n",

"flags": [

"DOTALL"

]

}

},

{

"when_null": {

"value": "Backup job failed."

}

}

]

}
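The expression above uses Python-style named groups, so its behavior can be tried directly with the `re` module. The sketch assumes that the match function returns the named group and that when_null supplies the fallback when nothing matches; `extract_message` is a hypothetical helper.

```python
import re

PATTERN = re.compile(r".*Failure Reason:?\s?(?P<message>.*?)\n", re.DOTALL)


def extract_message(body, default="Backup job failed."):
    """Return the text after 'Failure Reason', or the default when absent."""
    found = PATTERN.match(body)
    return found.group("message") if found else default


print(extract_message("Job 42 ended.\nFailure Reason: Disk quota exceeded\nSee logs.\n"))
print(extract_message("Job 43 ended without details.\n"))
```

Because of the DOTALL flag, the leading `.*` can span earlier lines of the body, and the lazy group stops at the first newline after the reason text.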

9.1.5.5 DateTime Function

{

"from": "_tmp_time",

"to": "raisedAt",

"func": [

{

"datetime": {

"tzmap": {

"IST": "Asia/Kolkata"

}

}

}

]

}

9.1.5.6 If-Else Condition

{

"to": "_tmp_ci_aa_assetName",

"func": {

"evaluate": {

"expr": "_tmp_ci_aa_assetName if _tmp_ci_aa_assetName else _tmp_ci_aa_assetNameL"

}

}

}

9.1.5.7 Function

Apply a function to a field

9.1.5.8 Join Multiple fields

{

"from": [

"assetName",

"comcell_name",

"backup_job_id"

],

"to": "key",

"func": {

"join": {

"sep": "#"

}

}

}

9.1.5.9 Map

{

"to": "state",

"from": "state",

"func": {

"map_values": {

"New": "New",

"Assigned": "New",

"OnHold": "On Hold",

"On Hold": "On Hold",

"Resolved": "Resolved",

"Closed": "Resolved",

"Cancelled": "Canceled",

"default": "New"

}

}

}

9.1.5.10 Constant

9.1.5.11 Concat

{

"to": "Title",

"func": {

"evaluate": {

"expr": "(_tmp_ci_aa_status + ' in ' + _tmp_ci_aa_ResolvedDuration + ':' + _tmp_ci_aa_Hostname + ' by ' + _tmp_ci_aa_RecoveryName) if (_tmp_ci_aa_status != '' and _tmp_ci_aa_ResolvedDuration != '' and _tmp_ci_aa_Hostname != '' and _tmp_ci_aa_RecoveryName != '' and (_tmp_ci_aa_status == 'CLEARED' or _tmp_ci_aa_status == 'RESOLVED')) else ''"

}

}

}

9.1.6 Custom Implementation

9.1.6.1 Description Body

Sometimes multiple fields must be concatenated into one field before it is sent to ITSM.

Example: The Description field could consist of fields from both the Incident level and the parent alert level.

{

"to": "desc_fields",

"func": {

"evaluate": {

"expr": "'{\"tmp_ci_aa_alert_email_body\": \"Description\",\"shortDescription\": \"Short Description\",\"serviceIdentifier\": \"Service Identifier\",\"channel\": \"Channel\",\"category\": \"Category\",\"priority\": \"Priority\",\"alert_source\": \"Alarm Source\"}'"

}

}

},

{

"to": "description",

"func": {

"evaluate": {

"expr": "'getDescription'"

}

}

}

When preparing a description field, the application will fetch a list of fields defined in desc_fields and for each defined field, it looks in the payload at both Incident Level and Alert Level.

9.1.6.2 Notes/Comments

Sometimes the user needs to send a different set of fields based on the Incident state. Here is an example of such a use case.

{

"to": "alert_fields",

"func": {

"evaluate": {

"expr": "'[{\"ACTIVE\":{\"alert_source_event_id\": \"Alert ID\", \"assetipaddress\": \"IP Address\", \"message\": \"Message\", \"alert_severity\": \"Severity\", \"sourcestatus\": \"Status\", \"assetname\": \"Device\", \"alert_open_time\": \"EventTime\", \"alert_type_key\": \"Event\", \"HostGroup\": \"HostGroup\", \"Tags\": \"Tags\", \"alert_os_version\": \"OS Type & Version\", \"alert_trigger_message\": \"Detail Message\"}},{\"CLEARED\":{\"alert_source_event_id\": \"Alert ID\", \"message\": \"Message\", \"assetipaddress\": \"IP Address\",\"sourcestatus\": \"Status\",\"assetname\": \"Device\", \"alert_cleart_time\": \"EventTime\", \"HostGroup\": \"HostGroup\",\"Tags\": \"Tags\", \"alert_detail_message\": \"Detail Message\", \"alert_type_key\": \"Monitor Type\", \"Title\":\"Title\", \"LastEventTime\":\"LastEventTime\", \"ProblemResolvedTime\":\"ProblemResolvedTime\"}}]'"

}

}

},

{

"to": "comments",

"func": {

"evaluate": {

"expr": "'getAlertsAsNotes'"

}

}

}

9.1.6.3 Specific Field from Alert

The base payload fields come from the Incident model. However, the application also fetches the alerts for the given incident, and the alert fields can be accessed using the following convention.

9.1.6.4 Template

This helps in concatenating multiple fields into one final value. Values are fetched from the corresponding Incident or Alert data.

{

"to": "alert_template",

"func": {

"evaluate": {

"expr": "'[{\"ACTIVE\":{\"template\":\"Active Alert<br>Message: $message<br>Device:$assetname<br>Body $text\"}},{\"CLEARED\":{\"template\":\"Cleared Alert<br>Message:$message<br>Content: $text\"}}]'"

}

}

}
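The `$name` placeholders in the template resemble Python's `string.Template` syntax, so the substitution can be approximated as below. This is an analogy under that assumption, not a statement about how cfxOIA renders templates; the `status` key and the `render` function are hypothetical.

```python
from string import Template

TEMPLATES = {
    "ACTIVE": "Active Alert<br>Message: $message<br>Device:$assetname<br>Body $text",
    "CLEARED": "Cleared Alert<br>Message:$message<br>Content: $text",
}


def render(alert):
    """Fill the template for the alert's state from the alert's own fields."""
    # safe_substitute leaves unknown placeholders in place instead of raising.
    return Template(TEMPLATES[alert["status"]]).safe_substitute(alert)


print(render({"status": "CLEARED", "message": "Link up", "text": "eth0 restored"}))
```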

9.1.6.5 Notification

The application supports posting the confirmation to an RDA Persistent Stream. This lets any downstream application listen and respond as required by the business logic. Based on the defined set of fields and the stream name, the application prepares a payload and writes it to the stream.

{

"to": "notify-itsm-action-fields",

"func": {

"evaluate": {

"expr": "'[\"summary\",\"incidentid\",\"customerid\",\"roomid\",\"projectid\",\"sourcekey\",\"state\"]'"

}

}

},

{

"to": "notify-itsm-action-stream",

"func": {

"evaluate": {

"expr": "'itsm_ticket_kafka_stream'"

}

}

},

{

"to": "publish-stream-type",

"func": {

"evaluate": {

"expr": "'KAFKA'"

}

}

}

9.1.7 Skip ITSM Ticketing

To skip ITSM ticketing for an alert source, set the following fields and save the incident mapping.

{

"to": "itsm_ticket",

"func": {

"evaluate": {

"expr": "'false'"

}

}

},

{

"to": "itsm_ticket_override",

"from": "false"

}

9.1.8 Examples

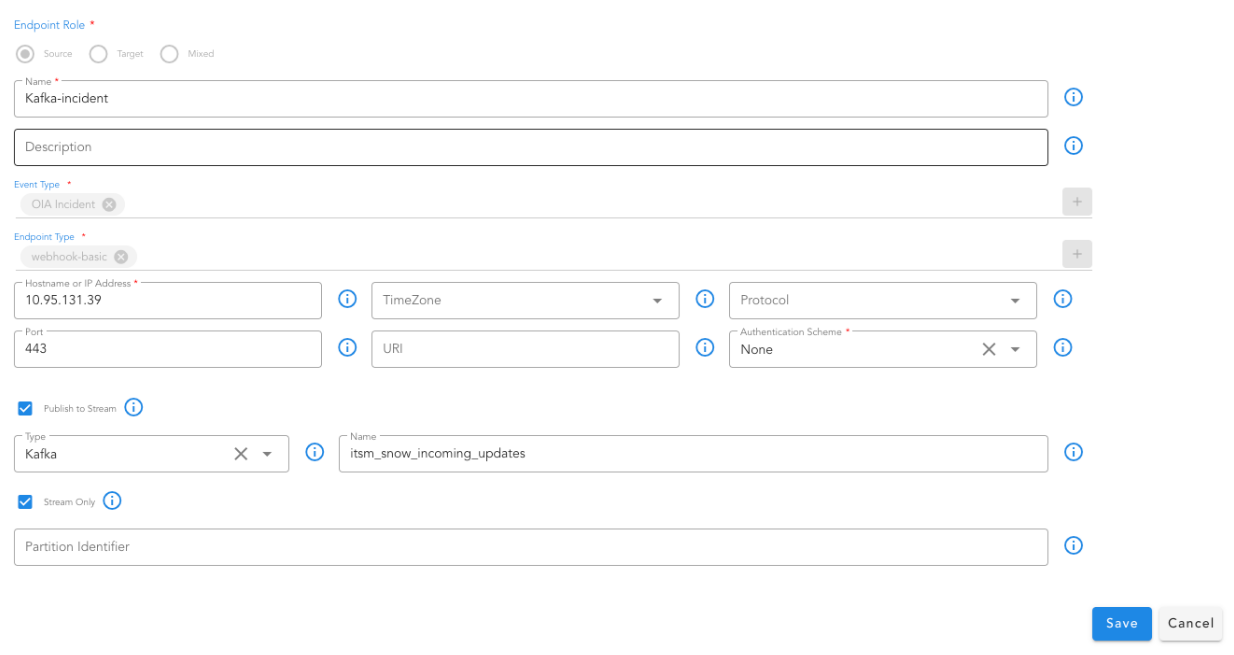

9.1.8.1 Endpoint to Read from Kafka

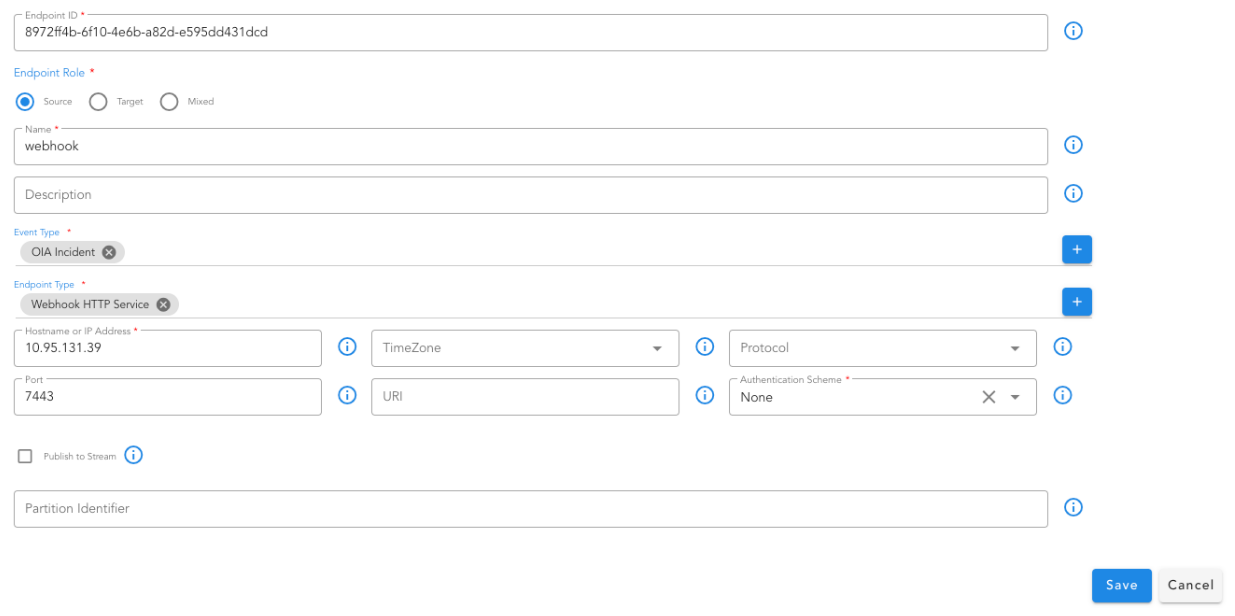

9.1.8.2 Webhook

9.1.8.3 ServiceNow

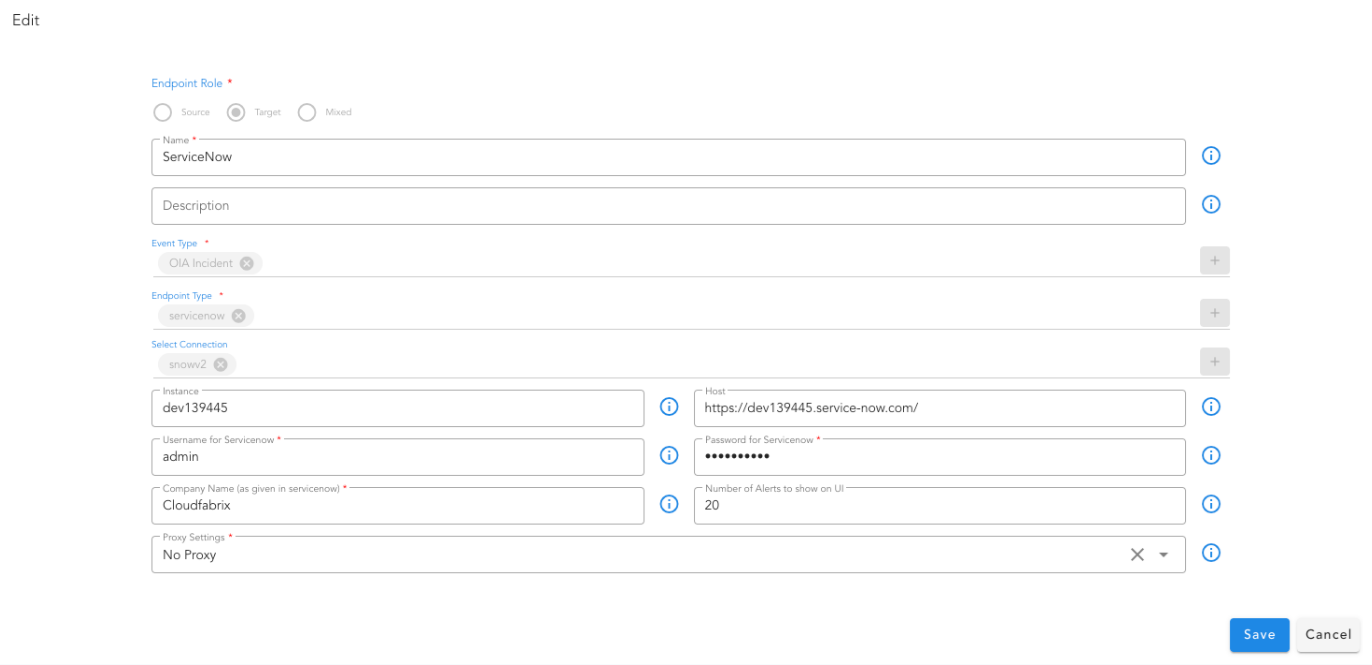

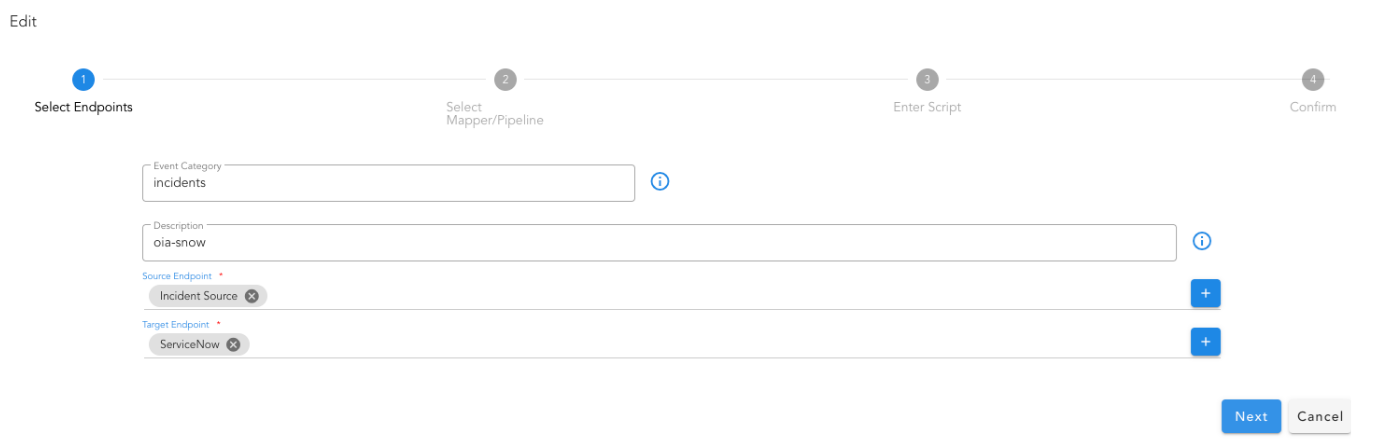

9.1.8.3.1 Incident Endpoint

9.1.8.3.2 Incident Mapping

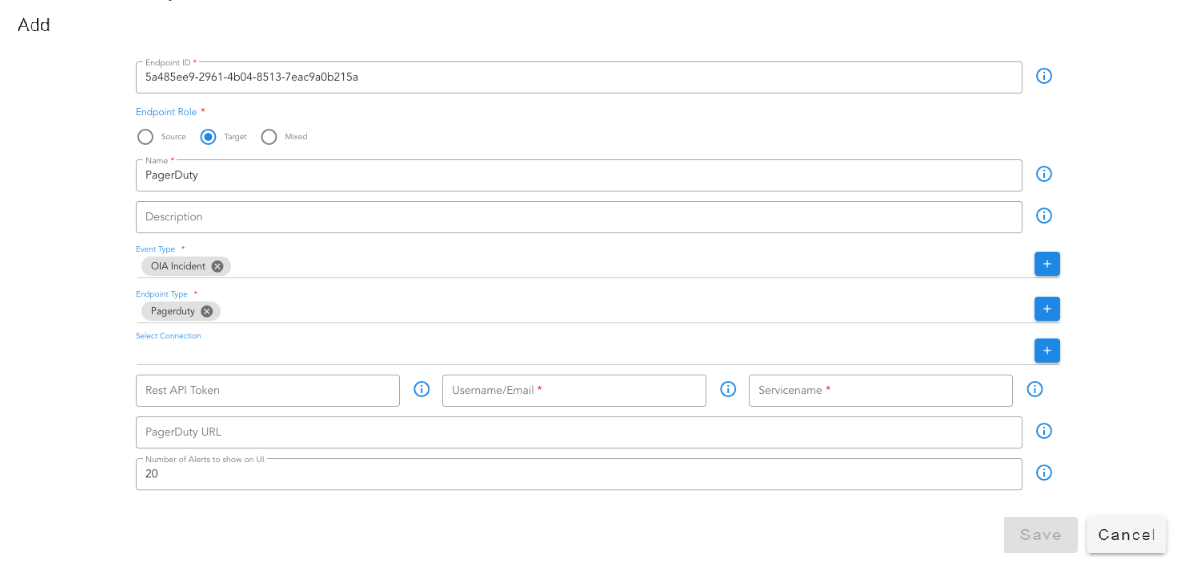

9.1.8.4 PagerDuty

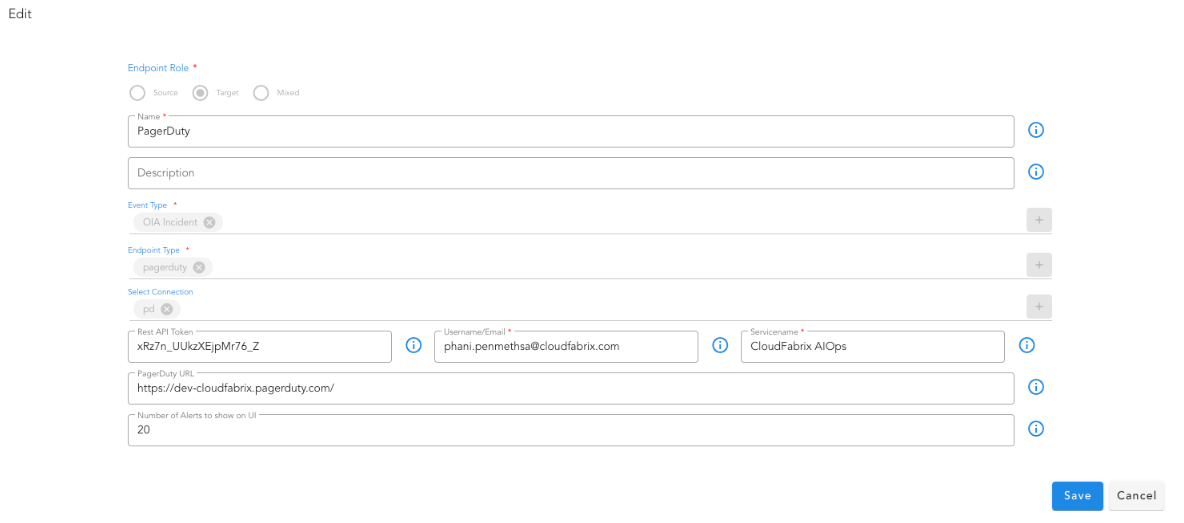

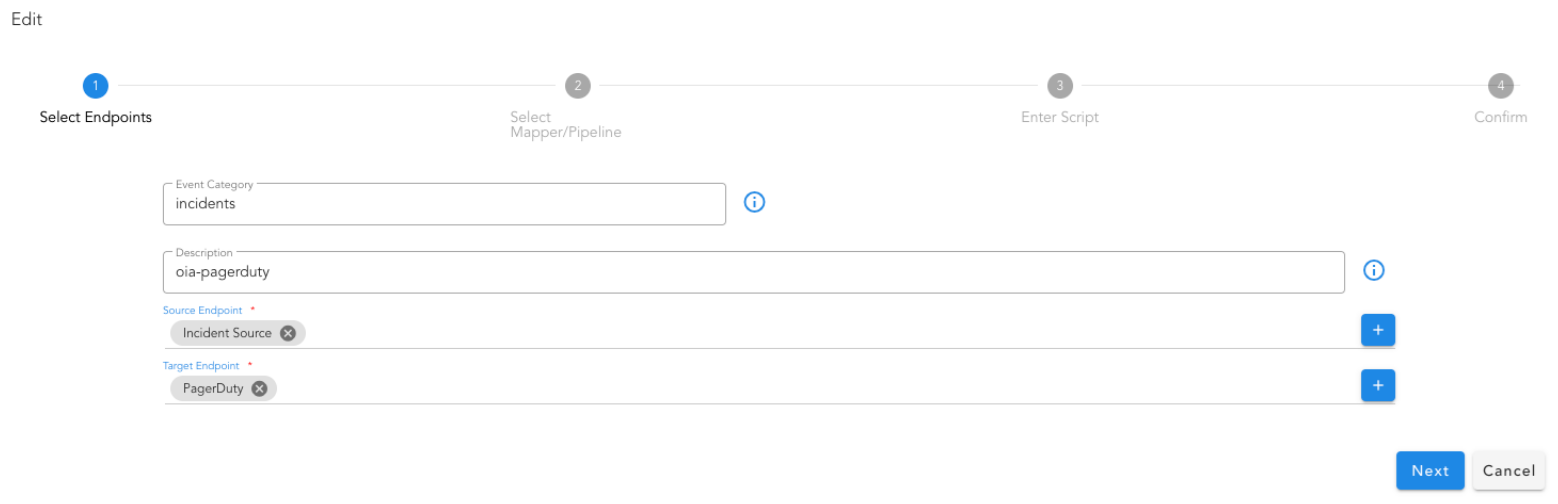

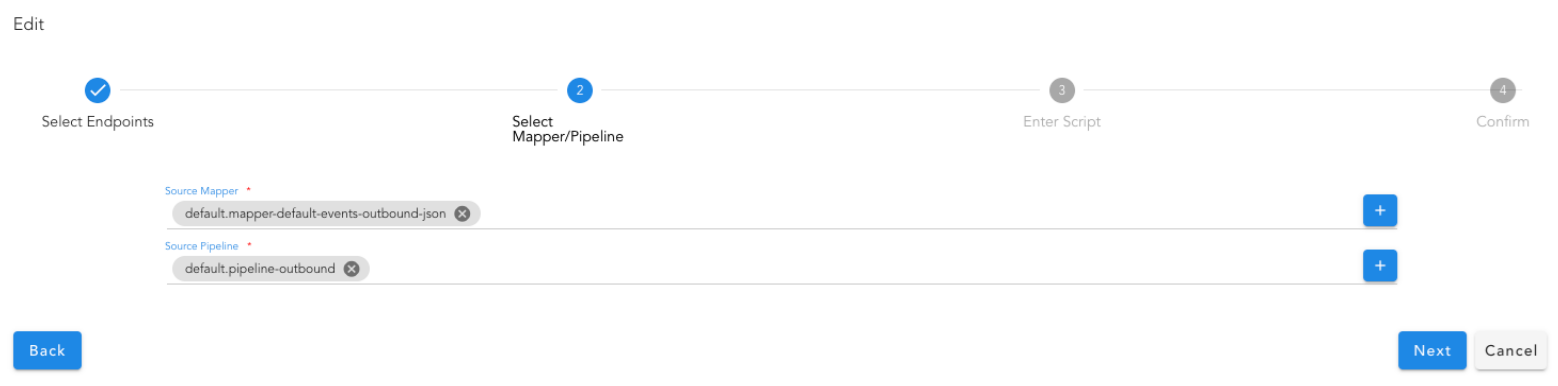

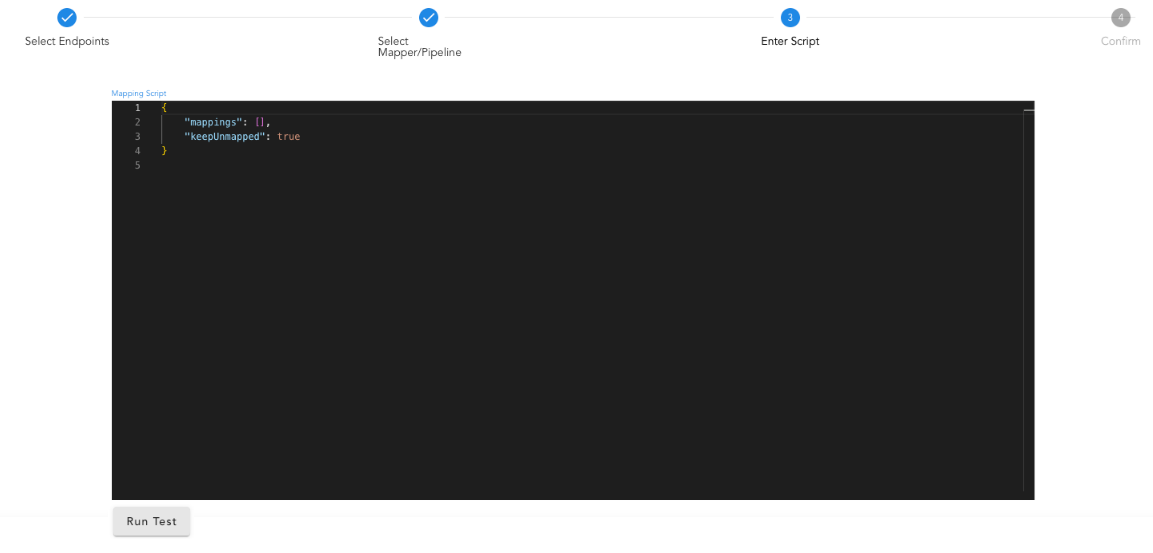

9.1.8.4.1 Incident Endpoint

9.1.8.4.2 Incident Mapping

9.1.8.5 Create Team

10. ML Driven Operations

cfxOIA uses machine learning (ML) at its core to intelligently learn patterns from huge volumes of historical as well as streaming data and automate key IT operational activities and decisions at large scale.

Key ML driven operations include:

- Alert Correlation (uses unsupervised ML)

- Log Clustering

- Alert volume seasonality

- Alert volume anomaly detection

- Alert volume prediction

- Incident triage data anomaly detection and noticeable changes

- Similar incidents

Prediction insights consist of forecasting alert volume or ingestion rate, providing a perspective on how many alerts the Operations team can expect in the future. cfxOIA can perform this prediction analysis on multiple dimensions, including alerts coming from a certain source, or alerts of a certain application, severity, site, or even symptom. In addition to prediction insights, cfxOIA also provides seasonality and anomaly detection when ML jobs are run. ML jobs can be executed on demand or scheduled to run periodically, which helps in continuous learning, training, and testing of models.

cfxOIA currently supports three ML pipelines out of the box: Clustering, Classification, and Regression. ML jobs allow hyperparameter tuning through selections made in the UI itself. Advanced customization scenarios allow uploading new ML pipelines.


11. Analytics

cfxOIA provides key analytics to track AIOps related KPIs like noise reduction efficiency, Alert ingestion trends, Most chatty alert types, etc. cfxOIA has a unique data exploration feature called Quick Insights that provides an at-a-glance visual clue of distribution and other characteristics of data. Quick Insights on Incidents provide visual clues about the distribution of Incidents based on priority, Support-group, Incident-age, Environment, Application, Department, etc.


12. UI features

cfxOIA provides a web-based portal that is accessible via a standard browser and uses HTML 5 to render User Interface (UI). There is no need to install any thick client to access the cfxOIA web-portal. cfxOIA portal provides certain advanced UI features for efficient data handling and customization.

Filters: Allow efficient filtering, and saving and reusing of filters.

Customize Columns (Table View Customization): Choose the columns displayed in the table and change the order of columns.

Exporting Data: Export data such as Incidents, Alerts, etc.

13. OIA Diagnostic & Remediation Tools Setup Guide

This document describes the prerequisites and instructions for setting up diagnostic and remediation tools on any OIA setup.

13.1 Prerequisites

- The first and most important prerequisite is that the setup must have a stack, and each incoming alert (and corresponding incident) should be mapped to that stack, along with the enriched node_id of the node affected by that alert/incident.

- Next, the team member making the changes should have a thorough understanding of the nodes and their attributes. This is essential because not all tools are suitable for every node in the stack. Therefore, selecting the appropriate tool for each node based on its attributes is crucial and depends on the setup and node characteristics in the field.

- Each tool operates within its own pipeline, and specific inputs must be provided to each tool within that pipeline. The first step in every pipeline is to read the selected node and other form inputs before proceeding with the bot execution.

- Additionally, the oia, diagnostictools, and remediationtools bot credentials must already be added to the list of integrations.

13.2 Steps to Setup Tools

- Begin by updating the oia_tools_descriptor draft pipeline to reflect the appropriate nodes for each tool in the stack. Based on the selected nodes, adjust the node_filter column for each tool in the pipeline and execute it.

- Next, verify that each tool listed in the oia_tools_descriptor pipeline has its corresponding named pipeline published.

13.3 Output Structure of the Tool Pipeline

- The output must be a JSON with a semi-flexible structure, as detailed below.

- The output JSON must contain at least four mandatory columns, in addition to any optional columns:

  - Collection_status – Valid values: Success or Failed.

  - Collection_timestamp – A long integer representing the number of milliseconds since the epoch.

  - Reason – A string with an empty value for success, or a short error message in case of failure.

  - full_json_output – A string representation of a JSON dictionary that must contain the following mandatory keys (in addition to any optional data):

    - diag-status – Valid values: Success or Failed.

    - diag-message – A string, empty for success or containing a short error message in case of failure.

    - displayAttributes – A JSON array where each item includes a “key” and a “value” pair. For a successful response, this data will be rendered as a visible table in the UI.

- An example pipeline for the checkport tool, which verifies whether a specified port is open on a selected asset.

@exec:get-input

--> @dm:explode-json column='node'

--> @dm:add-missing-columns columns='vm_ip_address,switch_ip_address,app_comp_ip_address,port'

--> @dm:to-type columns='port' and type='int'

--> @dm:selectcolumns include='^vm_ip_address$|^switch_ip_address$|^app_comp_ip_address$|^port$'

--> @dm:eval tool_ip_address = "vm_ip_address if vm_ip_address else (switch_ip_address if switch_ip_address else app_comp_ip_address)"

--> @diagnostictools:checkport column_name_host = 'tool_ip_address' and column_name_port = 'port'

- Example pipeline content for the tools descriptor. Each row represents a tool, with the following fields:

- tool_type: Specifies whether the tool is for "diagnostics" or "remediation".

- tool_id: A unique identifier for each tool, specific to its type.

- tool_label: The name of the tool, as displayed on the UI.

- pipeline_name: The name of the published pipeline that should be executed for the tool.

- description: A brief overview of the tool's function.

- node_filter: A CFXQL filter used to select the applicable nodes from the incident stack for the tool.

- inputs (optional): Defines any additional inputs that may be required from the user for the tool's execution.

@dm:empty

--> @dm:addrow type='diagnostics' and tool_id = 'icmp_ping' and tool_label = 'ICMP Ping' and pipeline_name = 'oia_tools_diagnostic_ping' and description='Verify connectivity using ICMP Ping' and node_filter = 'node_type in ["Controller", "Database", "Datastore", "Host", "VM", "Switch"]'

--> @dm:addrow type='diagnostics' and tool_id = 'check_open_port' and tool_label = 'Check Open Port' and pipeline_name = 'oia_tools_diagnostic_checkport' and description='Checks whether asked port is open on the target host' and node_filter = 'node_type in ["Controller", "Database", "Datastore", "Host", "VM", "Switch"]' and inputs='{ "attributes": [ { "name": "port", "label": "Port", "description": "Port number", "mandatory": true, "value-type": "string"} ] }'

--> @dm:save name='oia_tools_descriptor'
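The mandatory output columns listed earlier in this section can be illustrated with a small Python sketch that builds one output row and checks it. The helper names `build_row` and `missing_fields` are hypothetical, and the sample display values are invented; only the column and key names come from the list above.

```python
import json
import time

MANDATORY_COLUMNS = ("Collection_status", "Collection_timestamp", "Reason", "full_json_output")
MANDATORY_KEYS = ("diag-status", "diag-message", "displayAttributes")


def build_row(display, error=""):
    """Build one tool output row; an empty error means success."""
    status = "Failed" if error else "Success"
    full = {
        "diag-status": status,
        "diag-message": error,
        "displayAttributes": [{"key": k, "value": v} for k, v in display.items()],
    }
    return {
        "Collection_status": status,
        "Collection_timestamp": int(time.time() * 1000),  # milliseconds since epoch
        "Reason": error,
        "full_json_output": json.dumps(full),  # stored as a JSON string
    }


def missing_fields(row):
    """List mandatory columns and inner keys that are absent from a row."""
    missing = [c for c in MANDATORY_COLUMNS if c not in row]
    inner = json.loads(row.get("full_json_output", "{}"))
    missing += [k for k in MANDATORY_KEYS if k not in inner]
    return missing


row = build_row({"Host": "192.168.10.10", "Port": 443, "Open": True})
print(missing_fields(row))
```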

13.4 Functionality Of All The Tools

Important

Supported IP address formats for tools: Examples include 192.168.10.10,192.168.10.11, 192.168.20.0/24, 192.168.30.10-192.168.30.100, or any combination of these formats as comma-separated values.

All tools support IP addresses, except for the URL check bot, which takes a URL as input.
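To make the accepted formats concrete, the following Python sketch expands a comma-separated target string into individual addresses using the standard `ipaddress` module. It is an illustration only: `expand_targets` is a hypothetical function, and the choice to skip the network and broadcast addresses of a CIDR block is an assumption that may differ from what the tools do.

```python
import ipaddress


def expand_targets(spec):
    """Expand single IPs, CIDR blocks, and start-end ranges into a flat list."""
    hosts = []
    for part in (p.strip() for p in spec.split(",")):
        if not part:
            continue
        if "/" in part:
            # Assumption: only usable host addresses of the block are targeted.
            network = ipaddress.ip_network(part, strict=False)
            hosts.extend(str(h) for h in network.hosts())
        elif "-" in part:
            start, end = (ipaddress.ip_address(x.strip()) for x in part.split("-", 1))
            hosts.extend(str(ipaddress.ip_address(i)) for i in range(int(start), int(end) + 1))
        else:
            hosts.append(str(ipaddress.ip_address(part)))
    return hosts


targets = expand_targets(
    "192.168.10.10,192.168.10.11, 192.168.20.0/30, 192.168.30.10-192.168.30.12"
)
print(targets)
```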

13.4.1 Traceroute

Core Functionality:

- This tool performs a traceroute to determine the path packets take to reach the destination, providing detailed information about each hop along the route.

Command: (Linux/macOS)

The main command used in this traceroute function is

Traceroute works on both Linux and macOS platforms.

Input:

- A list of IP addresses or IP ranges provided in the specified column of the input.

Output:

- A json containing the destination IP, destination name, and the hops traversed.

@dm:empty

--> @dm:addrow ip = '192.168.125.126'

--> @diagnostictools:traceroute column_name_host="ip"

13.4.2 Ping

Core Functionality:

- Sends ICMP echo requests to determine the reachability and latency of a host.

Command: (Linux/macOS)

Ping works on both Linux and macOS platforms.

Input:

- A list of IP addresses or IP ranges provided in the specified column of the input and a defined number of pings (count).

Output:

- A json containing the minimum, maximum, and average round-trip time (RTT), as well as packet loss.

@dm:empty

--> @dm:addrow ip = '192.168.131.111'

--> @diagnostictools:ping column_name_host="ip" and column_name_count=4 and concurrent_discovery=10

13.4.3 MemoryCheck

Core Functionality:

- Connects to remote machines via SSH to retrieve memory usage statistics.

Command: (Linux/macOS)

Input:

- An IP address, username, and password for the SSH connection provided in the respective columns of the input.

Output:

- A json containing memory information such as total, free, and available memory.

@dm:empty

--> @dm:addrow ip = '10.95.125.126, 10.95.107.60' and column_name_username = 'rdauser' and column_name_password= 'xxxxxxxxxx'

--> @diagnostictools:memorycheck column_name_host="ip" and column_name_username = 'column_name_username' and column_name_password= 'column_name_password'

13.4.4 DiskSpace

Core Functionality:

- Connects to remote machines via SSH to retrieve disk space usage.

Command: (Linux/macOS)

Input:

- An IP address, username, and password for the SSH connection provided in the respective columns of the input.

Output:

- A json containing disk usage information such as filesystem, size, used, available space, percentage used, and mount point.

@dm:empty

--> @dm:addrow ip = '10.95.107.60' and column_name_username = 'rdauser' and column_name_password= 'xxxxxxxxxx'

--> @diagnostictools:diskspace column_name_host="ip" and column_name_username = 'column_name_username' and column_name_password= 'column_name_password'

13.4.5 UpTime

Core Functionality:

- Connects to remote machines via SSH to retrieve system uptime.

Command: (Linux/macOS)

Input:

- An IP address, username, and password for the SSH connection provided in the respective columns of the input.

Output:

- A json containing system uptime in a human-readable format (days, hours, minutes, seconds).

@dm:empty

--> @dm:addrow ip = '10.95.125.126' and column_name_username = 'rdauser' and column_name_password= 'xxxxxxxxxx'

--> @diagnostictools:uptime column_name_host="ip" and column_name_username = 'column_name_username' and column_name_password= 'column_name_password'

13.4.6 SystemLoad

Core Functionality:

- Connects to remote machines via SSH to retrieve system load information, including CPU, memory, swap, disk, and network stats.

Command: (Linux/macOS)

Input:

- An IP address, username, and password for the SSH connection provided in the respective columns of the input.

Output: A JSON containing system metrics such as:

- Load Average 1 min: The 1-minute load average.

- Load Average 5 min: The 5-minute load average.

- Load Average 15 min: The 15-minute load average.

- CPU Usage (%): The percentage of CPU usage.

- Total Memory (kB): The total memory.

- Used Memory (kB): The used memory.

- Free Memory (kB): The free memory.

- Swap Total (kB): The total swap space.

- Swap Used (kB): The used swap space.

- Swap Free (kB): The free swap space.

- Disk Total (kB): The total disk space.

- Disk Used (kB): The used disk space.

- Disk Free (kB): The free disk space.

- Bytes Sent (kB): The bytes sent.

- Bytes Received (kB): The bytes received.

@dm:empty

--> @dm:addrow ip = '10.95.125.126' and column_name_username = 'rdauser' and column_name_password= 'xxxxxxxxxx'

--> @diagnostictools:systemload column_name_host="ip" and column_name_username = 'column_name_username' and column_name_password= 'column_name_password'

13.4.7 Check_SSL

Core Functionality:

- Fetches the certificate details such as issuer, subject, validity dates, and other certificate metadata.

Command: (Windows/Linux/macOS)

Input:

- A list of IP addresses or IP ranges provided in the specified column of the input and port number list for respective IPs

Output:

- A json containing SSL certificate details for each IP address and port.

@dm:empty

--> @dm:addrow ip = '10.95.125.126' and port = 443

--> @diagnostictools:checkssl column_name_host="ip" and column_name_port="port" and json_output_flag = true

13.4.8 AWS Alarms

Core Functionality:

- Fetches and processes AWS CloudWatch alarms using provided credentials and region information

Command: (Windows/Linux)

Input:

- An AWS region (e.g., us-east-1), AWS access key, AWS secret key in the respective columns of the input.

Output: A JSON containing CloudWatch alarm metrics:

- Alarm Name: Name of the CloudWatch alarm.

- State: Current state of the alarm (e.g., ALARM, OK, INSUFFICIENT_DATA).

- Metric Name: Name of the associated metric (e.g., CPUUtilization).

- Namespace: AWS namespace (e.g., AWS/EC2).

- Actions Enabled: Boolean indicating whether actions are enabled.

@dm:empty

--> @dm:addrow ip = 'us-east-1' and column_name_username = 'xxxxxxxxxx' and column_name_password= 'xxxxxxxxxx'

--> @diagnostictools:AwsAlarms region_name="ip" and aws_access_key_id = 'column_name_username' and aws_secret_access_key= 'column_name_password'

--> @dm:save name= 'awsnew'

13.4.9 vCenter Monitoring

Core Functionality:

- Uses pyVmomi (VMware's Python SDK) to connect to VMware vCenter, retrieves VM metrics (CPU, memory), and triggers alarms if the input CPU or memory usage thresholds are exceeded.

Note

This tool relies on the pyVmomi Python module rather than direct vCenter commands for its core functionality, which keeps the approach consistent and programmatic.

Input:

- A list of IP addresses or IP ranges, username, and password for the connection, metric type and threshold provided in the specified column of the input.

Output:

- A json containing VM metrics and alarm statuses (CPU/Memory usage) for respective IP addresses

@dm:empty

--> @dm:addrow ip = '10.95.159.151' and column_name_username = 'administrator@vsphere.local' and column_name_password= 'Abcd123$' and th = 80 and metric = 'cpu'

--> @diagnostictools:vcenteralarm column_name_host="ip" and column_name_username = 'column_name_username' and column_name_password= 'column_name_password' and column_name_metric_type = 'metric' and column_name_threshold = 'th'

13.4.10 NetApp

Core Functionality:

- Retrieves system version information using NetApp’s API.

Command: Retrieve system status and version information from NetApp systems based on provided credentials.

Input:

- A list of IP addresses or IP ranges, username, and password for the HTTP connection provided in the respective columns of the input.

Output:

- A json containing System version or failure reason for respective IP addresses

@dm:empty

--> @dm:addrow ip = '10.95.131.51' and column_name_username = 'rouser' and column_name_password= 'abcd123$'

--> @diagnostictools:NetAppStatus column_name_host="ip" and column_name_username = 'column_name_username' and column_name_password= 'column_name_password' and json_output_flag = true

13.4.11 Service Controller (Remediation Tool)

Core Functionality:

- Triggers start, stop, check status on remote systems

Linux Commands:

- systemctl status: Get the service status.

- sudo systemctl start: Start the service.

- sudo systemctl stop: Stop the service.

Windows Commands:

- sc query: Query the status of the service.

- sc start: Start the service.

- sc stop: Stop the service.

Input:

- A list of IP addresses or IP ranges, username, password, service name, action(start, stop, status) provided in the respective columns of the input.

Output:

- A json containing Result of the command execution for respective IP addresses and optionally Service Name, Active, Docs, Main PID, Tasks, Memory (for Linux services)

@dm:empty

--> @dm:addrow ip = '10.95.107.60' and column_name_username = 'rdauser' and column_name_password= 'rdauser1234' and column_name_service_name = 'docker' and column_name_action = 'status'

--> @remediationtools:servicecontroller column_name_host="ip" and column_name_username = 'column_name_username' and column_name_password= 'column_name_password' and column_name_service_name = 'column_name_service_name' and column_name_action = 'column_name_action' and json_output_flag = true